Chunyun Fu

Deep LG-Track: An Enhanced Localization-Confidence-Guided Multi-Object Tracker

Apr 02, 2025

Abstract: Multi-object tracking plays a crucial role in various applications, such as autonomous driving and security surveillance. This study introduces Deep LG-Track, a novel multi-object tracker that incorporates three key enhancements to improve tracking accuracy and robustness. First, an adaptive Kalman filter is developed to dynamically update the covariance of measurement noise based on detection confidence and trajectory disappearance. Second, a novel cost matrix is formulated to adaptively fuse motion and appearance information, leveraging localization confidence and detection confidence as weighting factors. Third, a dynamic appearance feature updating strategy is introduced, adjusting the relative weighting of historical and current appearance features based on appearance clarity and localization accuracy. Comprehensive evaluations on the MOT17 and MOT20 datasets demonstrate that the proposed Deep LG-Track consistently outperforms state-of-the-art trackers across multiple performance metrics, highlighting its effectiveness in multi-object tracking tasks.
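
The first enhancement, scaling the measurement noise by detection confidence, can be illustrated with a single Kalman measurement update. This is a minimal sketch, assuming the noise-scale-adaptive (NSA) rule R = (1 - c)·R_base used by trackers such as GIAOTracker and StrongSORT; Deep LG-Track's own rule, which also reacts to trajectory disappearance, is not given in the abstract, and the function name `kalman_update` is hypothetical.

```python
import numpy as np

def kalman_update(x, P, z, H, R_base, confidence, eps=1e-6):
    """One Kalman measurement update with confidence-scaled measurement noise.

    Assumed NSA-style rule: R = (1 - confidence) * R_base, floored at eps so
    the innovation covariance stays invertible when confidence == 1.
    """
    R = max(1.0 - confidence, eps) * R_base   # confident detection -> small noise
    y = z - H @ x                             # innovation
    S = H @ P @ H.T + R                       # innovation covariance
    K = P @ H.T @ np.linalg.inv(S)            # Kalman gain
    x_new = x + K @ y
    P_new = (np.eye(P.shape[0]) - K @ H) @ P
    return x_new, P_new
```

With the same prior and measurement, calling the update with `confidence=0.9` moves the state much closer to the measurement than `confidence=0.1` does, which is the intended effect: confident detections are trusted more.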

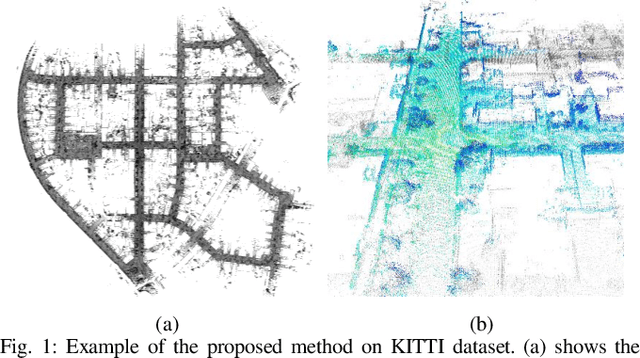

SD-SLAM: A Semantic SLAM Approach for Dynamic Scenes Based on LiDAR Point Clouds

Feb 28, 2024

Abstract: Point cloud maps generated by LiDAR sensors from extensive remotely sensed data are commonly used by autonomous vehicles and robots for localization and navigation. However, dynamic objects contained in point cloud maps not only degrade localization accuracy and navigation performance but also compromise map quality. In response to this challenge, this paper proposes a novel semantic SLAM approach for dynamic scenes based on LiDAR point clouds, hereafter referred to as SD-SLAM. The main contributions of this work are threefold: 1) introducing a semantic SLAM framework dedicated to dynamic scenes based on LiDAR point clouds, 2) employing semantics and Kalman filtering to effectively differentiate between dynamic and semi-static landmarks, and 3) making full use of semi-static and purely static landmarks with semantic information in the SD-SLAM process to improve localization and mapping performance. To evaluate the proposed SD-SLAM, tests were conducted on the widely adopted KITTI odometry dataset. Results demonstrate that SD-SLAM effectively mitigates the adverse effects of dynamic objects on SLAM, improving vehicle localization and mapping performance in dynamic scenes while simultaneously constructing a static semantic map with multiple semantic classes for enhanced environment understanding.
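
One way to picture the second contribution is to track each landmark of a movable semantic class with a constant-velocity Kalman filter and label it by its estimated speed. This is a hypothetical sketch: the class set `MOVABLE`, the speed threshold, the noise values, and the helper names are assumptions for illustration, not details of SD-SLAM.

```python
import numpy as np

MOVABLE = {"car", "person", "cyclist"}  # hypothetical set of movable classes

def cv_kalman_speed(centroids, dt=0.1, q=0.5, r=0.2):
    """Filter 2D landmark centroids with a constant-velocity Kalman filter.

    State is [px, py, vx, vy]; returns the final estimated speed (m/s).
    """
    F = np.eye(4)
    F[0, 2] = F[1, 3] = dt                  # position += velocity * dt
    H = np.zeros((2, 4))
    H[0, 0] = H[1, 1] = 1.0                 # only position is observed
    Q, R = q * np.eye(4), r * np.eye(2)
    x = np.array([centroids[0][0], centroids[0][1], 0.0, 0.0])
    P = np.eye(4)
    for z in centroids[1:]:
        x, P = F @ x, F @ P @ F.T + Q       # predict
        S = H @ P @ H.T + R
        K = P @ H.T @ np.linalg.inv(S)
        x = x + K @ (np.asarray(z) - H @ x) # update with the new centroid
        P = (np.eye(4) - K @ H) @ P
    return float(np.hypot(x[2], x[3]))

def classify_landmark(label, centroids, speed_thr=1.0):
    """Static by semantics, else dynamic or semi-static by estimated speed."""
    if label not in MOVABLE:
        return "static"
    return "dynamic" if cv_kalman_speed(centroids) > speed_thr else "semi-static"
```

A parked car observed at the same centroid in every frame keeps a zero velocity estimate and is labeled semi-static, whereas a car whose centroid advances steadily is labeled dynamic.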

L-LO: Enhancing Pose Estimation Precision via a Landmark-Based LiDAR Odometry

Dec 28, 2023

Abstract: Most existing LiDAR odometry solutions are based on simple geometric features such as points, lines, or planes, which cannot fully reflect the characteristics of the surrounding environment. In this study, we propose a novel LiDAR odometry that effectively utilizes the overall exterior characteristics of environmental landmarks. Vehicle pose estimation is accomplished in two sequential stages: horizontal pose estimation and vertical pose estimation. To achieve effective landmark registration, a comprehensive index is proposed to evaluate the similarity between landmarks. This index considers two crucial aspects of landmarks, dimension and shape, when evaluating their similarity. To assess the performance of the proposed algorithm, we use the widely recognized KITTI dataset as well as experimental data collected with an unmanned ground vehicle platform. Both graphical and numerical results indicate that our algorithm outperforms leading LiDAR odometry solutions in terms of positioning accuracy.

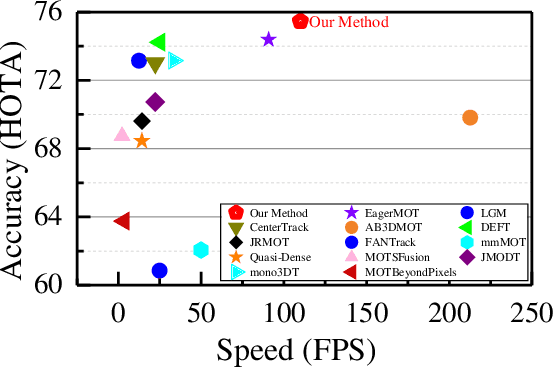

Localization-Guided Track: A Deep Association Multi-Object Tracking Framework Based on Localization Confidence of Detections

Sep 18, 2023

Abstract: In the currently available literature, no tracking-by-detection (TBD) paradigm-based tracking method has considered the localization confidence of detection boxes. Most TBD-based methods assume that objects with low detection confidence are heavily occluded, so it is common practice to discard such objects directly or to lower their matching priority. In addition, appearance similarity is not considered when matching these objects. However, because detection confidence fuses classification and localization, objects with low detection confidence may have inaccurate localization but a clear appearance; similarly, objects with high detection confidence may have inaccurate localization or an unclear appearance; yet these objects are not further distinguished. In view of these issues, we propose Localization-Guided Track (LG-Track). First, localization confidence is applied in MOT for the first time, taking into account the appearance clarity and localization accuracy of detection boxes, and an effective deep association mechanism is designed; second, a more appropriate cost matrix is selected based on the classification confidence and localization confidence; finally, extensive experiments are conducted on the MOT17 and MOT20 datasets. The results show that our proposed method outperforms the compared state-of-the-art tracking methods. For the benefit of the community, our code has been made publicly available at https://github.com/mengting2023/LG-Track.
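
Selecting a cost matrix according to localization confidence can be sketched per detection column: a well-localized box is matched by overlap, a poorly localized one by appearance. This is a hypothetical illustration, not LG-Track's actual rule: the threshold `tau`, the function names, and the exclusive use of localization confidence (classification confidence is ignored here) are all assumptions.

```python
import numpy as np

def iou_matrix(tracks, dets):
    """Pairwise IoU between track and detection boxes given as [x1, y1, x2, y2]."""
    t = tracks[:, None, :]   # shape (T, 1, 4)
    d = dets[None, :, :]     # shape (1, D, 4)
    iw = np.clip(np.minimum(t[..., 2], d[..., 2]) - np.maximum(t[..., 0], d[..., 0]), 0, None)
    ih = np.clip(np.minimum(t[..., 3], d[..., 3]) - np.maximum(t[..., 1], d[..., 1]), 0, None)
    inter = iw * ih
    area_t = (t[..., 2] - t[..., 0]) * (t[..., 3] - t[..., 1])
    area_d = (d[..., 2] - d[..., 0]) * (d[..., 3] - d[..., 1])
    return inter / (area_t + area_d - inter)

def select_cost(tracks, dets, track_feats, det_feats, loc_conf, tau=0.5):
    """Per-detection choice between a motion cost and an appearance cost.

    Hypothetical rule: a detection with loc_conf >= tau is matched by box
    overlap (1 - IoU); otherwise it falls back to cosine appearance distance.
    """
    motion = 1.0 - iou_matrix(tracks, dets)
    tf = track_feats / np.linalg.norm(track_feats, axis=1, keepdims=True)
    df = det_feats / np.linalg.norm(det_feats, axis=1, keepdims=True)
    appearance = 1.0 - tf @ df.T                       # cosine distance, (T, D)
    use_motion = (np.asarray(loc_conf) >= tau)[None, :]
    return np.where(use_motion, motion, appearance)
```

The resulting (T, D) matrix could then be handed to an assignment solver such as `scipy.optimize.linear_sum_assignment`.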

NDT-Map-Code: A 3D global descriptor for real-time loop closure detection in lidar SLAM

Jul 17, 2023

Abstract: Loop-closure detection, also known as place recognition, aims to identify previously visited locations and is an essential component of a SLAM system. Existing research on lidar-based loop closure relies heavily on dense point clouds and 360° FOV lidars. This paper proposes an out-of-the-box global descriptor based on the NDT (Normal Distributions Transform), NDT-Map-Code, designed for both on-road driving and underground valet parking scenarios. NDT-Map-Code can be extracted directly from the NDT map without the need for a dense point cloud, resulting in excellent scalability and low maintenance cost. The NDT representation is leveraged to identify representative patterns, which are further encoded according to their spatial location (bearing, range, and height). Experimental results on the NIO underground parking lot dataset and the KITTI dataset demonstrate that our method achieves significantly better performance than the state of the art.
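
The NDT representation that NDT-Map-Code builds on summarizes each voxel of a map by the mean and covariance of the points inside it. The sketch below computes only those per-cell Gaussians; the cell size, the minimum point count, and the name `ndt_cells` are assumptions, and the descriptor's pattern identification and bearing/range/height encoding are not reproduced.

```python
import numpy as np
from collections import defaultdict

def ndt_cells(points, cell_size=1.0, min_points=5):
    """Fit a Gaussian (mean, covariance) to the points in each cubic voxel.

    Returns a dict mapping integer voxel indices (i, j, k) to (mean, cov).
    """
    keys = np.floor(points / cell_size).astype(int)
    buckets = defaultdict(list)
    for key, p in zip(keys, points):
        buckets[tuple(int(v) for v in key)].append(p)
    cells = {}
    for key, pts in buckets.items():
        if len(pts) < min_points:        # too few points for a stable covariance
            continue
        pts = np.asarray(pts)
        cells[key] = (pts.mean(axis=0), np.cov(pts, rowvar=False))
    return cells
```

Sparse voxels are skipped because a covariance estimated from only a handful of points is nearly singular.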

You Only Need Two Detectors to Achieve Multi-Modal 3D Multi-Object Tracking

Apr 18, 2023

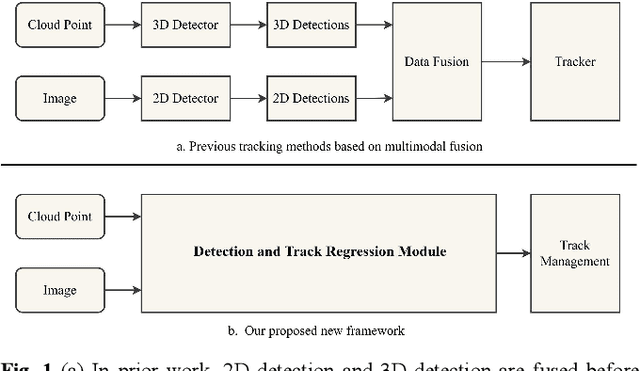

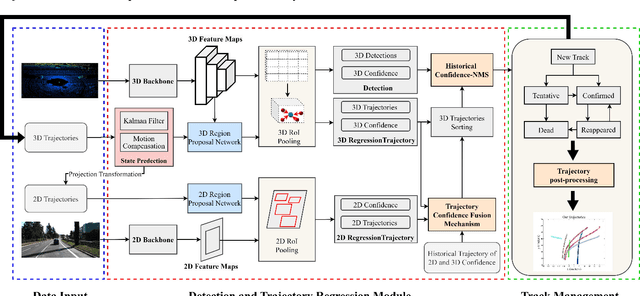

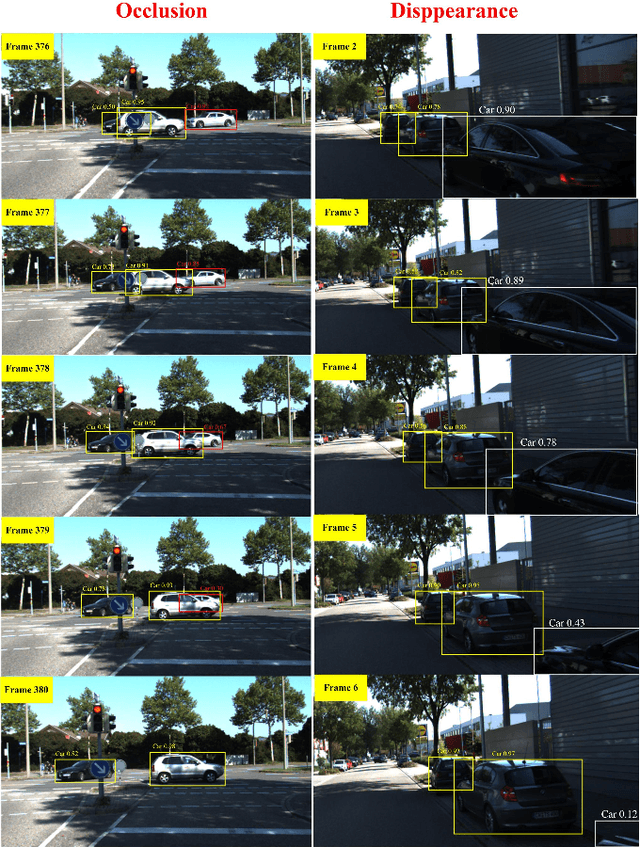

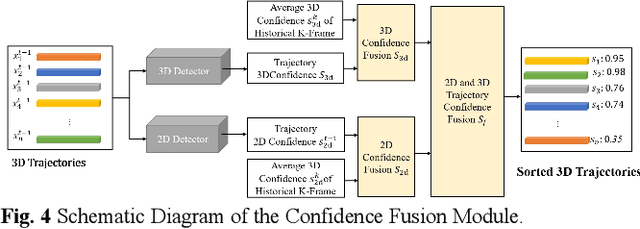

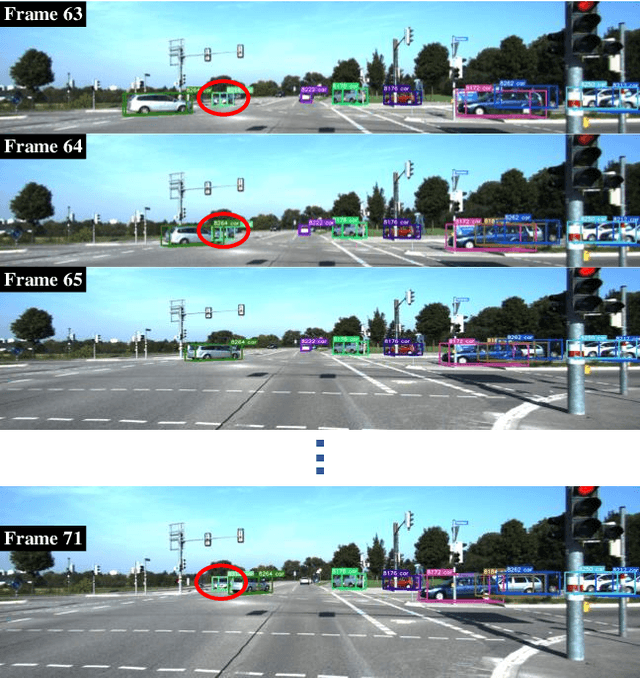

Abstract: Firstly, a new multi-object tracking framework is proposed in this paper based on multi-modal fusion. By integrating object detection and multi-object tracking into the same model, this framework avoids the complex data association process in the classical TBD paradigm, and requires no additional training. Secondly, confidence of historical trajectory regression is explored, possible states of a trajectory in the current frame (weak object or strong object) are analyzed and a confidence fusion module is designed to guide non-maximum suppression of trajectory and detection for ordered association. Finally, extensive experiments are conducted on the KITTI and Waymo datasets. The results show that the proposed method can achieve robust tracking by using only two modal detectors and it is more accurate than many of the latest TBD paradigm-based multi-modal tracking methods. The source codes of the proposed method are available at https://github.com/wangxiyang2022/YONTD-MOT
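
Non-maximum suppression over trajectories and detections can be sketched by pooling both sets of boxes, sorting them by confidence, and greedily suppressing overlaps, so that whichever source is more confident claims each object. In this hypothetical sketch the trajectory scores are assumed to be already fused by some confidence-fusion rule, which is not reproduced; the IoU threshold and the function names are illustrative.

```python
import numpy as np

def iou_one_to_many(box, boxes):
    """IoU between one [x1, y1, x2, y2] box and each row of `boxes`."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def suppress(track_boxes, track_scores, det_boxes, det_scores, iou_thr=0.5):
    """Greedy NMS over trajectories and detections pooled together.

    Returns (indices of surviving trajectories, indices of surviving detections).
    """
    boxes = np.vstack([track_boxes, det_boxes])
    scores = np.concatenate([track_scores, det_scores])
    n_tracks = len(track_boxes)
    order = np.argsort(-scores)            # highest confidence first
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        overlap = iou_one_to_many(boxes[i], boxes[rest])
        order = rest[overlap <= iou_thr]   # drop boxes overlapping the winner
    kept_tracks = [k for k in keep if k < n_tracks]
    kept_dets = [k - n_tracks for k in keep if k >= n_tracks]
    return kept_tracks, kept_dets
```

A surviving trajectory keeps its identity, while a surviving detection that no trajectory suppressed would be a candidate for a new track.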

3D Multi-Object Tracking Based on Uncertainty-Guided Data Association

Mar 03, 2023

Abstract: In the existing literature, most 3D multi-object tracking algorithms based on the tracking-by-detection framework employ deterministic tracks and detections for similarity calculation in the data association stage; that is, the inherent uncertainties in tracks and detections are overlooked. In this work, we discard the commonly used deterministic tracks and detections for data association; instead, we propose to model tracks and detections as random vectors that take uncertainties into account. Then, based on the Jensen-Shannon divergence, the similarity between two multidimensional distributions, i.e., a track and a detection, is evaluated for data association purposes. Lastly, the level of track uncertainty is incorporated into our cost function design to guide the data association process. Comparative experiments were conducted on two typical datasets, KITTI and nuScenes, and the results indicate that our proposed method outperforms the compared state-of-the-art 3D tracking algorithms. For the benefit of the community, our code has been made available at https://github.com/hejiawei2023/UG3DMOT.
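
The Jensen-Shannon divergence between a Gaussian track and a Gaussian detection has no closed form, because the mixture M = (P + Q)/2 is not Gaussian. A minimal sketch, assuming both are multivariate normals and estimating the divergence by Monte Carlo sampling; the sample count, seed, and function names are assumptions, and the computation actually used in the paper may differ.

```python
import numpy as np

def gaussian_logpdf(x, mean, cov):
    """Log-density of a multivariate normal evaluated at each row of x."""
    d = mean.shape[0]
    diff = x - mean
    maha = np.sum(diff * np.linalg.solve(cov, diff.T).T, axis=1)
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (maha + logdet + d * np.log(2.0 * np.pi))

def js_divergence(mu_p, cov_p, mu_q, cov_q, n=20000, seed=0):
    """Monte Carlo estimate of JS(P || Q) in nats; the true value lies in [0, ln 2]."""
    rng = np.random.default_rng(seed)
    xp = rng.multivariate_normal(mu_p, cov_p, size=n)
    xq = rng.multivariate_normal(mu_q, cov_q, size=n)

    def log_m(x):
        # log of the mixture M = (P + Q) / 2, computed stably with logaddexp
        return np.logaddexp(gaussian_logpdf(x, mu_p, cov_p),
                            gaussian_logpdf(x, mu_q, cov_q)) - np.log(2.0)

    kl_pm = np.mean(gaussian_logpdf(xp, mu_p, cov_p) - log_m(xp))
    kl_qm = np.mean(gaussian_logpdf(xq, mu_q, cov_q) - log_m(xq))
    return 0.5 * (kl_pm + kl_qm)
```

Unlike the KL divergence, this measure is symmetric and bounded, which makes it convenient to map into an association cost: identical distributions give 0 and well-separated ones approach ln 2.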

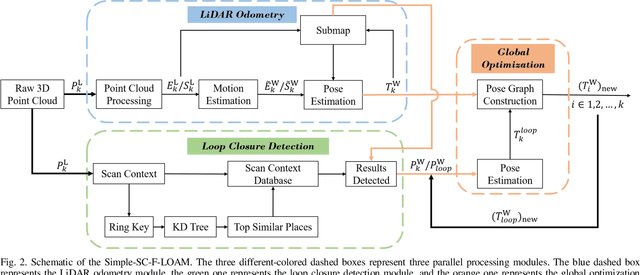

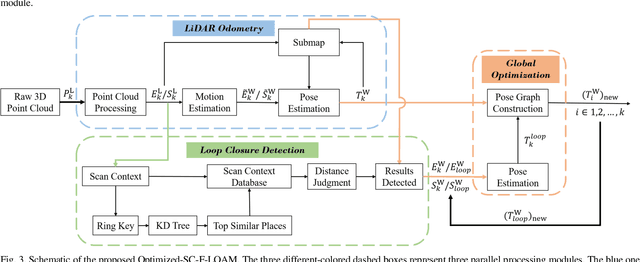

Optimized SC-F-LOAM: Optimized Fast LiDAR Odometry and Mapping Using Scan Context

Apr 11, 2022

Abstract: LiDAR odometry can achieve accurate vehicle pose estimation over short driving ranges or in small-scale environments, but over long driving ranges or in large-scale environments its accuracy deteriorates as a result of cumulative estimation errors. This drawback necessitates the inclusion of loop closure detection in a SLAM framework to suppress the adverse effects of cumulative errors. To improve the accuracy of pose estimation, we propose a new LiDAR-based SLAM method that uses F-LOAM as the LiDAR odometry, Scan Context for loop closure detection, and GTSAM for global optimization. In our approach, an adaptive distance threshold (instead of a fixed threshold) is employed for loop closure detection, which yields more accurate loop closure detection results. In addition, a feature-based matching method is used to compute vehicle pose transformations between loop closure point cloud pairs, instead of the raw point clouds obtained by the LiDAR sensor, which significantly reduces computation time. The KITTI dataset and a UGV platform are used to verify our method, and the experimental results demonstrate that the proposed method outperforms typical LiDAR odometry/SLAM methods in the literature. Our code is made publicly available for the benefit of the community.
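
Scan Context, the loop-closure descriptor named above, encodes a scan as a ring-by-sector grid of maximum point heights and compares two scans by the column-wise cosine distance, minimized over sector shifts to tolerate yaw changes. A minimal sketch, assuming a 20 × 60 grid, an 80 m range, and non-negative point heights (empty bins stay 0); the adaptive threshold proposed in the paper is not reproduced, so the returned distance would simply be compared against whatever threshold is chosen.

```python
import numpy as np

def scan_context(points, n_ring=20, n_sector=60, max_range=80.0):
    """Ring x sector matrix holding the maximum point height per polar bin."""
    x, y, z = points[:, 0], points[:, 1], points[:, 2]
    r = np.hypot(x, y)
    theta = np.mod(np.arctan2(y, x), 2 * np.pi)        # azimuth in [0, 2*pi)
    keep = r < max_range
    ring = (r[keep] / max_range * n_ring).astype(int)
    sector = (theta[keep] / (2 * np.pi) * n_sector).astype(int) % n_sector
    desc = np.zeros((n_ring, n_sector))
    np.maximum.at(desc, (ring, sector), z[keep])       # max height per bin
    return desc

def sc_distance(a, b):
    """Minimum over column shifts of the mean column-wise cosine distance.

    Returns (distance, shift) where np.roll(b, shift, axis=1) best matches a.
    """
    best, best_shift = np.inf, 0
    for shift in range(a.shape[1]):
        b_s = np.roll(b, shift, axis=1)
        num = np.sum(a * b_s, axis=0)
        den = np.linalg.norm(a, axis=0) * np.linalg.norm(b_s, axis=0)
        valid = den > 0                  # skip columns empty in either scan
        if not np.any(valid):
            continue
        d = np.mean(1.0 - num[valid] / den[valid])
        if d < best:
            best, best_shift = d, shift
    return best, best_shift
```

The best shift also gives a coarse yaw estimate (shift × 360°/n_sector), which can serve as an initial guess for the subsequent pose-transformation computation between the loop closure pair.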

DeepFusionMOT: A 3D Multi-Object Tracking Framework Based on Camera-LiDAR Fusion with Deep Association

Feb 24, 2022

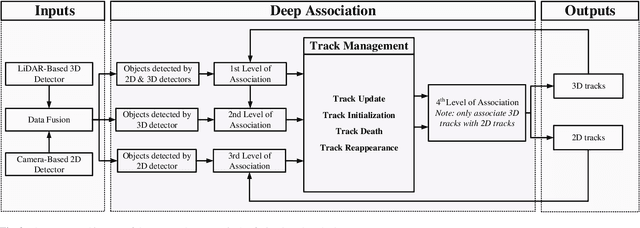

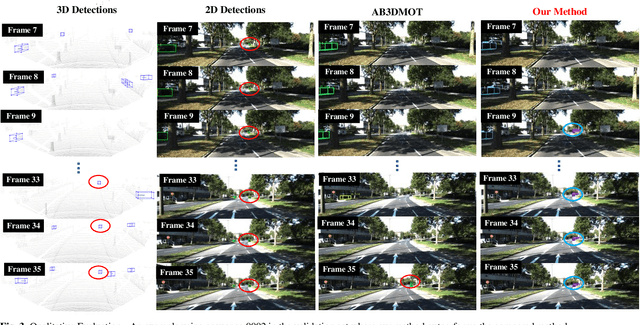

Abstract: In the recent literature, on the one hand, many 3D multi-object tracking (MOT) works have focused on tracking accuracy and neglected computation speed, commonly by designing rather complex cost functions and feature extractors. On the other hand, some methods have focused too much on computation speed at the expense of tracking accuracy. In view of these issues, this paper proposes a robust and fast camera-LiDAR fusion-based MOT method that achieves a good trade-off between accuracy and speed. Relying on the characteristics of camera and LiDAR sensors, an effective deep association mechanism is designed and embedded in the proposed MOT method. This association mechanism tracks an object in the 2D domain when the object is far away and detected only by the camera, and updates the 2D trajectory with 3D information once the object appears in the LiDAR field of view, achieving a smooth fusion of 2D and 3D trajectories. Extensive experiments on the KITTI dataset indicate that our proposed method has clear advantages over state-of-the-art MOT methods in terms of both tracking accuracy and processing speed. Our code is made publicly available for the benefit of the community.