Tian Su

Optimized SC-F-LOAM: Optimized Fast LiDAR Odometry and Mapping Using Scan Context

Apr 11, 2022

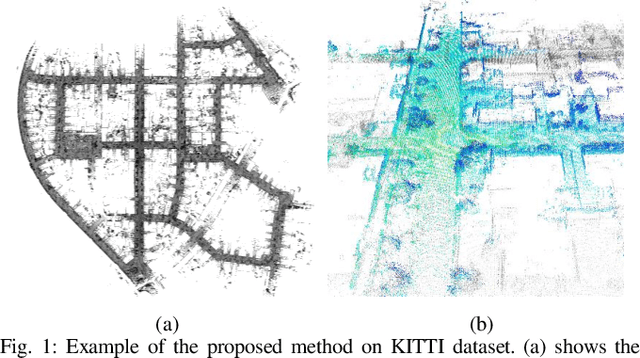

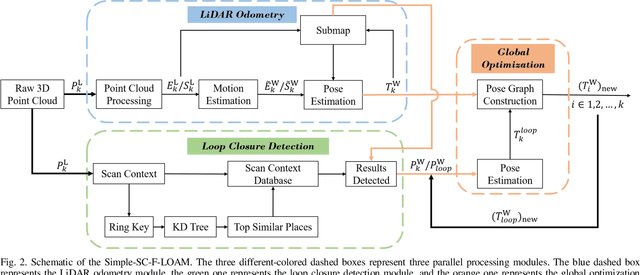

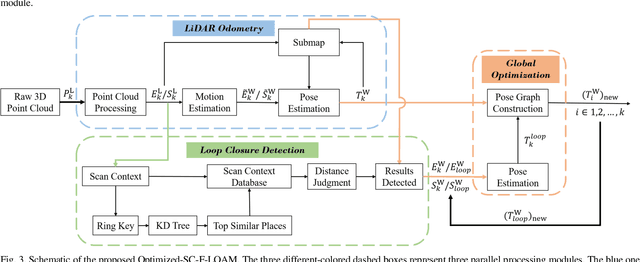

Abstract: LiDAR odometry can estimate vehicle pose accurately over short driving ranges or in small-scale environments, but over long driving ranges or in large-scale environments its accuracy deteriorates as estimation errors accumulate. A SLAM framework therefore needs loop closure detection to suppress the effects of this cumulative error. To improve the accuracy of pose estimation, we propose a new LiDAR-based SLAM method that uses F-LOAM as LiDAR odometry, Scan Context for loop closure detection, and GTSAM for global optimization. Our approach employs an adaptive distance threshold (instead of a fixed threshold) for loop closure detection, which yields more accurate loop closure detection results. In addition, it computes the vehicle pose transformation between loop closure point cloud pairs with a feature-based matching method rather than from the raw point cloud obtained by the LiDAR sensor, which significantly reduces computation time. We verify the method on the KITTI dataset and a UGV platform, and the experimental results demonstrate that the proposed method outperforms typical LiDAR odometry/SLAM methods in the literature. Our code is made publicly available for the benefit of the community.
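To make the loop closure step more concrete, here is a minimal pure-Python sketch of a Scan Context style descriptor (maximum point height per ring/sector bin) and a rotation-tolerant distance between two descriptors. It is not the authors' implementation. The bin counts, the range limit, and the helper names are assumptions chosen for illustration. The abstract does not say how the distance threshold adapts, so `adaptive_threshold` is a purely hypothetical placeholder rule.

```python
import math


def scan_context(points, num_rings=4, num_sectors=8, max_range=20.0):
    """Build a ring x sector grid holding the max height (z) seen in each polar bin.

    Bins start at 0.0, so this toy version assumes non-negative heights.
    """
    desc = [[0.0] * num_sectors for _ in range(num_rings)]
    for x, y, z in points:
        r = math.hypot(x, y)
        if r >= max_range:
            continue  # ignore points beyond the descriptor's range
        ring = int(r / max_range * num_rings)
        theta = math.atan2(y, x) % (2 * math.pi)
        sector = min(int(theta / (2 * math.pi) * num_sectors), num_sectors - 1)
        desc[ring][sector] = max(desc[ring][sector], z)
    return desc


def sc_distance(a, b):
    """Mean column-wise cosine distance, minimised over all sector shifts."""
    num_sectors = len(a[0])
    best = float("inf")
    for shift in range(num_sectors):
        total, used = 0.0, 0
        for j in range(num_sectors):
            col_a = [row[j] for row in a]
            col_b = [row[(j + shift) % num_sectors] for row in b]
            norm_a = math.sqrt(sum(v * v for v in col_a))
            norm_b = math.sqrt(sum(v * v for v in col_b))
            if norm_a == 0.0 or norm_b == 0.0:
                continue  # skip empty sectors
            dot = sum(p * q for p, q in zip(col_a, col_b))
            total += 1.0 - dot / (norm_a * norm_b)
            used += 1
        if used:
            best = min(best, total / used)
    return best


def adaptive_threshold(travelled_m, base=0.15, gain=1e-4, cap=0.3):
    """Hypothetical rule (NOT from the paper): loosen the threshold with distance travelled."""
    return min(cap, base + gain * travelled_m)


def is_loop_closure(desc_a, desc_b, travelled_m):
    """Accept the pair as a loop closure candidate if the distance is under the threshold."""
    return sc_distance(desc_a, desc_b) < adaptive_threshold(travelled_m)
```

Minimising over sector shifts is what lets the same place be recognised when the vehicle revisits it with a different heading. A fixed threshold would replace `adaptive_threshold` with a constant.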

Dynamic Multi-path Neural Network

Apr 07, 2019

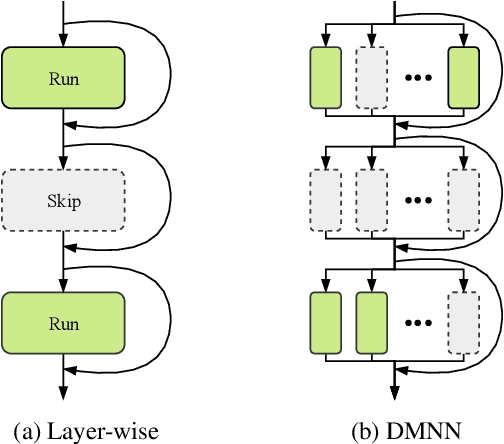

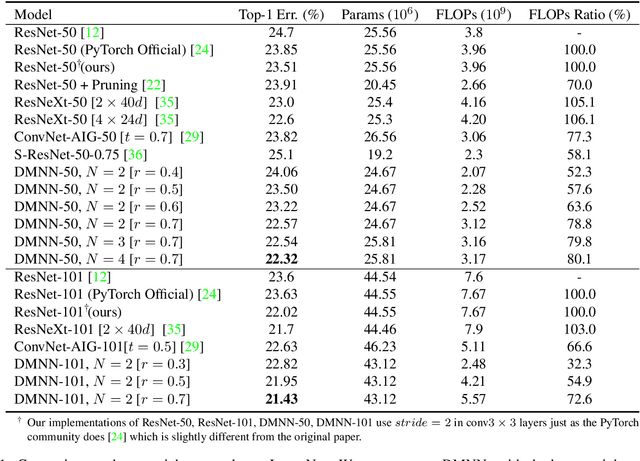

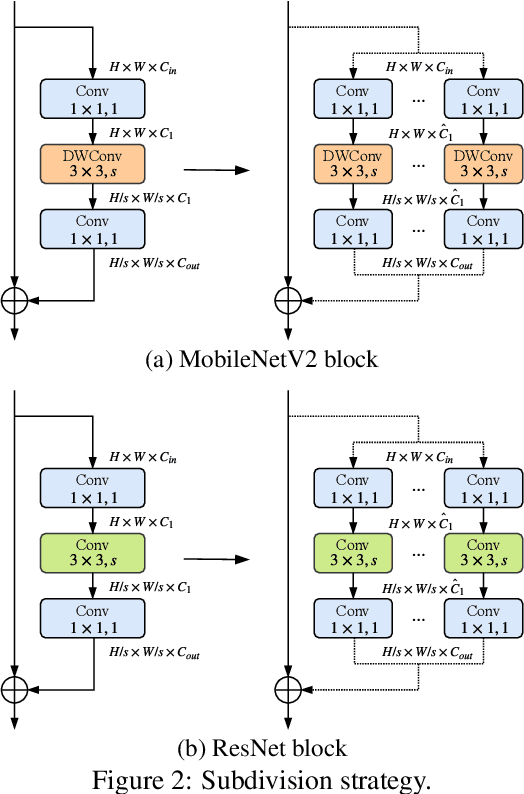

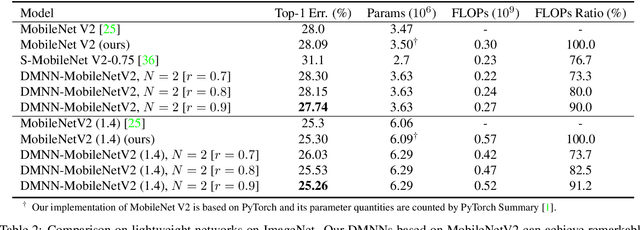

Abstract: Although deeper and larger neural networks have achieved better performance, their complex structure and growing computational cost cannot meet the demands of many resource-constrained applications. Existing methods usually choose to execute or skip an entire layer, which can only alter the depth of the network. In this paper, we propose a novel method called Dynamic Multi-path Neural Network (DMNN), which provides more path selection choices in terms of both network width and depth during inference. The inference path of the network is determined by a controller, which takes into account both the previous state and object category information. The proposed method can be easily incorporated into most modern network architectures. Experimental results on ImageNet and CIFAR-100 demonstrate the superiority of our method in both efficiency and overall classification accuracy. Specifically, DMNN-101 significantly outperforms ResNet-101 with an encouraging 45.1% reduction in FLOPs, and DMNN-50 performs comparably to ResNet-101 while using 42.1% fewer parameters.
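The idea of choosing a path over both width and depth can be illustrated with a toy sketch. It is not the DMNN architecture. The functions `gated_block` and `controller` are hypothetical names. The linear, thresholded controller is an assumption that stands in for the paper's controller, which also uses object category information not modelled here.

```python
def gated_block(x, paths, gates):
    """Residual block that adds the outputs of only the sub-paths whose gate is on.

    All gates off reduces the block to the identity (a depth choice);
    turning on a subset of sub-paths changes the effective width.
    """
    out = list(x)
    for path, on in zip(paths, gates):
        if on:  # a gated-off sub-path is never evaluated, so it costs no compute
            y = path(x)
            out = [o + v for o, v in zip(out, y)]
    return out


def controller(state, weights, bias):
    """Hypothetical controller: one on/off gate per sub-path from a linear score of the state."""
    return [
        sum(w * s for w, s in zip(row, state)) + b > 0
        for row, b in zip(weights, bias)
    ]


# Two toy sub-paths standing in for convolutional branches.
paths = [lambda x: [2 * v for v in x], lambda x: [v + 1 for v in x]]
gates = controller([1.0, -1.0], [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
result = gated_block([1.0, 2.0], paths, gates)
```

With these toy values only the first sub-path is enabled, so the second is never computed for this input.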
