Dynamic Multi-path Neural Network

Apr 07, 2019

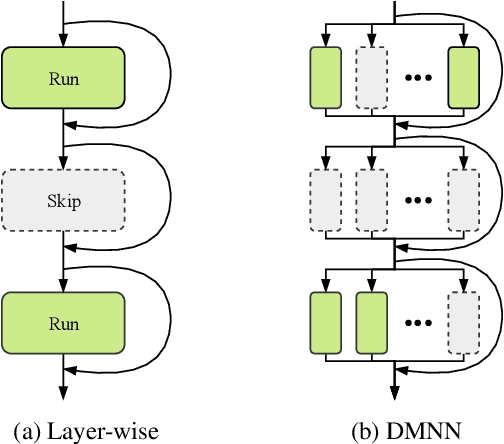

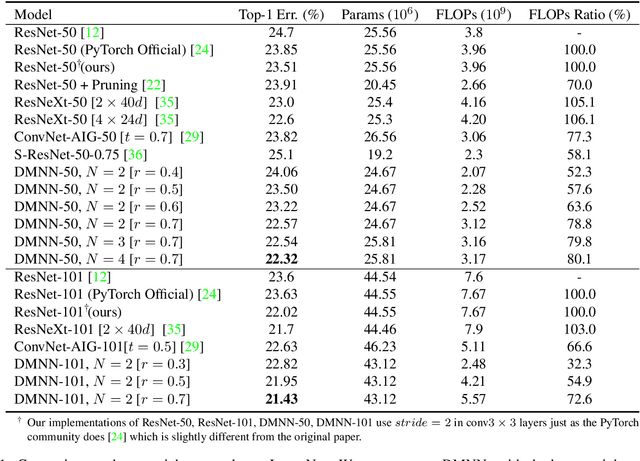

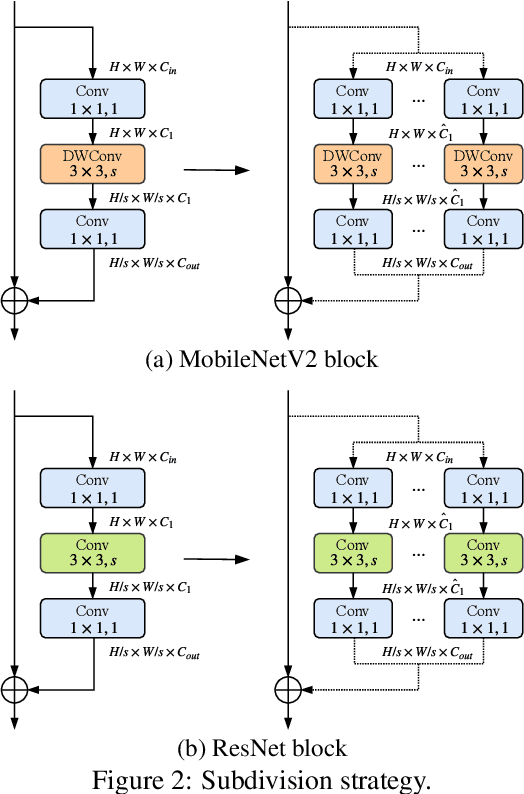

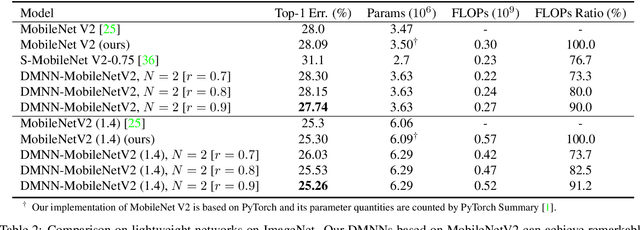

Although deeper and larger neural networks have achieved better performance, their complex structure and growing computational cost make them impractical for many resource-constrained applications. Existing methods usually execute or skip an entire layer, which alters only the depth of the network. In this paper, we propose a novel method called Dynamic Multi-path Neural Network (DMNN), which provides more path selection choices in terms of both network width and depth during inference. The inference path of the network is determined by a controller, which takes into account both the previous state and object category information. The proposed method can be easily incorporated into most modern network architectures. Experimental results on ImageNet and CIFAR-100 demonstrate the superiority of our method in both efficiency and overall classification accuracy. Specifically, DMNN-101 significantly outperforms ResNet-101 while reducing FLOPs by 45.1%, and DMNN-50 performs comparably to ResNet-101 while using 42.1% fewer parameters.
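To make the idea of width- and depth-wise path selection concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: the abstract does not specify how the controller is built, so the linear scorer, the 0.5 threshold, and the names `controller`, `dynamic_block`, and `category_hint` are all assumptions made for illustration. Each block holds several parallel sub-paths; switching some off narrows the block (width), and switching all of them off reduces the block to its identity shortcut (depth).

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def controller(prev_state: Sequence[float],
               category_hint: Sequence[float],
               weights: Sequence[Sequence[float]],
               threshold: float = 0.5) -> List[bool]:
    """Hypothetical controller: one linear scorer per sub-path.

    The input is the previous state concatenated with a category hint,
    mirroring the two signals named in the abstract. The scoring rule
    itself is an assumption, not taken from the paper.
    """
    features = list(prev_state) + list(category_hint)
    gates = []
    for w in weights:
        score = sum(wi * fi for wi, fi in zip(w, features))
        gates.append(sigmoid(score) > threshold)
    return gates


def dynamic_block(x: Vector,
                  paths: Sequence[Callable[[Vector], Vector]],
                  gates: Sequence[bool]) -> Vector:
    """Residual block whose parallel sub-paths run only when gated on."""
    out = list(x)  # identity shortcut is always kept
    for path, is_on in zip(paths, gates):
        if is_on:  # a gated-off sub-path costs no computation
            y = path(x)
            out = [o + yi for o, yi in zip(out, y)]
    return out


# Two toy sub-paths standing in for convolutional branches.
paths = [
    lambda v: [2.0 * a for a in v],
    lambda v: [-a for a in v],
]

x = [1.0, 2.0]
gates = controller(prev_state=[2.0, 0.0],
                   category_hint=[0.0],
                   weights=[[1.0, 0.0, 0.0], [-1.0, 0.0, 0.0]])
y = dynamic_block(x, paths, gates)
```

With these toy weights the controller enables only the first sub-path, so the block runs at half width. Passing `[False, False]` as gates returns the input unchanged, which corresponds to skipping the block entirely.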
