Christoph Roch

LMU Munich

Towards Transfer Learning for Large-Scale Image Classification Using Annealing-based Quantum Boltzmann Machines

Nov 27, 2023

Abstract: Quantum Transfer Learning (QTL) has recently gained popularity as a hybrid quantum-classical approach for image classification tasks by efficiently combining the feature extraction capabilities of large Convolutional Neural Networks with the potential benefits of Quantum Machine Learning (QML). Existing approaches, however, only utilize gate-based Variational Quantum Circuits for the quantum part of these procedures. In this work we present an approach to employ Quantum Annealing (QA) in QTL-based image classification. Specifically, we propose using annealing-based Quantum Boltzmann Machines as part of a hybrid quantum-classical pipeline to learn the classification of real-world, large-scale data such as medical images through supervised training. We demonstrate our approach by applying it to the three-class COVID-CT-MD dataset, a collection of lung Computed Tomography (CT) scan slices. Using Simulated Annealing as a stand-in for actual QA, we compare our method to classical transfer learning, using a neural network of the same order of magnitude, to display its improved classification performance. We find that our approach consistently outperforms its classical baseline in terms of test accuracy and AUC-ROC score and needs fewer training epochs to do so.

Hybrid Quantum Machine Learning Assisted Classification of COVID-19 from Computed Tomography Scans

Oct 04, 2023

Abstract: Practical quantum computing (QC) is still in its infancy and the problems considered are usually fairly small, especially in quantum machine learning when compared to its classical counterpart. Image processing applications in particular require models that are able to handle a large number of features, and while classical approaches can easily tackle this, it is a major challenge and a cause for harsh restrictions in contemporary QC. In this paper, we apply a hybrid quantum machine learning approach to a practically relevant problem with real-world data. That is, we apply hybrid quantum transfer learning to an image processing task in the field of medical image processing. More specifically, we classify large CT scans of the lung into COVID-19, CAP, or Normal. We discuss quantum image embedding as well as hybrid quantum machine learning and evaluate several approaches to quantum transfer learning with various quantum circuits and embedding techniques.

SEQUENT: Towards Traceable Quantum Machine Learning using Sequential Quantum Enhanced Training

Jan 06, 2023

Abstract: Applying new computing paradigms like quantum computing to the field of machine learning has recently gained attention. However, as high-dimensional real-world applications are not yet feasible to solve using purely quantum hardware, hybrid methods using both classical and quantum machine learning paradigms have been proposed. For instance, transfer learning methods have been shown to be successfully applicable to hybrid image classification tasks. Nevertheless, beneficial circuit architectures still need to be explored. Therefore, tracing the impact of the chosen circuit architecture and parameterization is crucial for the development of beneficially applicable hybrid methods. However, current methods include processes where both parts are trained concurrently, therefore not allowing for a strict separability of classical and quantum impact. Thus, those architectures might produce models that yield a superior prediction accuracy whilst employing the least possible quantum impact. To tackle this issue, we propose Sequential Quantum Enhanced Training (SEQUENT), an improved architecture and training process for the traceable application of quantum computing methods to hybrid machine learning. Furthermore, we provide formal evidence for the disadvantage of current methods and preliminary experimental results as a proof-of-concept for the applicability of SEQUENT.

Black Box Optimization Using QUBO and the Cross Entropy Method

Jun 24, 2022

Abstract: Black box optimization (BBO) can be used to optimize functions whose analytic form is unknown. A common approach to realize BBO is to learn a surrogate model which approximates the target black box function and can then be solved via white box optimization methods. In this paper we present our approach BOX-QUBO, where the surrogate model is a QUBO matrix. However, unlike in previous state-of-the-art approaches, this matrix is not trained entirely by regression, but mostly by classification between 'good' and 'bad' solutions. This better accounts for the low capacity of the QUBO matrix, resulting in significantly better solutions overall. We tested our approach against the state-of-the-art on four domains and in all of them BOX-QUBO showed significantly better results. A second contribution of this paper is the idea to also solve white box problems, i.e. problems which could be directly formulated as QUBO, by means of black box optimization in order to reduce the size of the QUBOs to their information-theoretic minimum. The experiments show that this significantly improves the results for MAX-$k$-SAT.
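For readers unfamiliar with the term, a QUBO (quadratic unconstrained binary optimization) problem assigns a binary vector $x$ the cost $x^\top Q x$ and asks for the minimizing $x$. The following is a minimal sketch of that objective only, not of the BOX-QUBO training procedure. The matrix `Q_EXAMPLE` and the function names are hypothetical and chosen purely for illustration, and the brute-force search is only feasible for very small problems.

```python
from itertools import product


def qubo_energy(Q, x):
    """Return x^T Q x for a binary vector x and a square matrix Q (nested lists)."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))


def brute_force_minimum(Q):
    """Enumerate all 2^n binary vectors and return one with minimal energy."""
    n = len(Q)
    return min(product((0, 1), repeat=n), key=lambda x: qubo_energy(Q, x))


# Hypothetical upper-triangular QUBO matrix (illustration only):
# diagonal entries reward setting a bit, off-diagonal entries
# penalize setting neighbouring bits together.
Q_EXAMPLE = [
    [-1.0, 2.0, 0.0],
    [0.0, -1.0, 2.0],
    [0.0, 0.0, -1.0],
]

best = brute_force_minimum(Q_EXAMPLE)
print(best, qubo_energy(Q_EXAMPLE, best))
```

In practice the enumeration would be replaced by an annealer or a heuristic solver, since the search space doubles with every additional variable.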

Towards Multi-Agent Reinforcement Learning using Quantum Boltzmann Machines

Sep 22, 2021

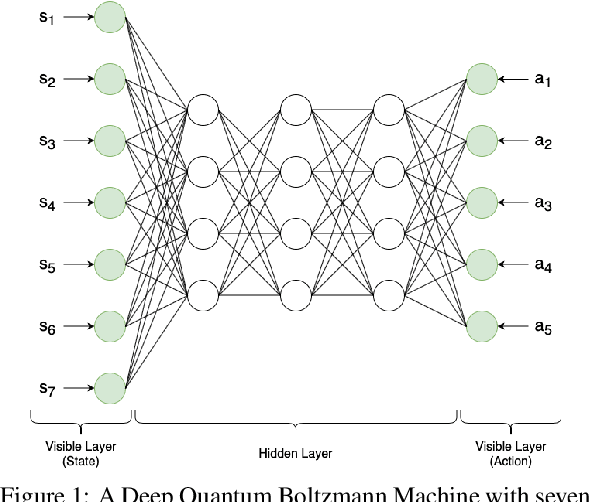

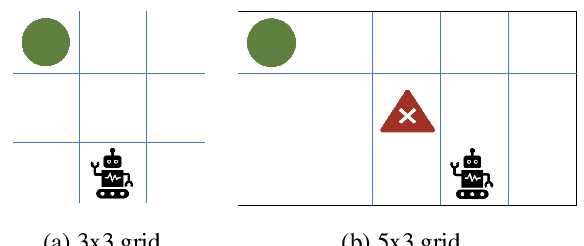

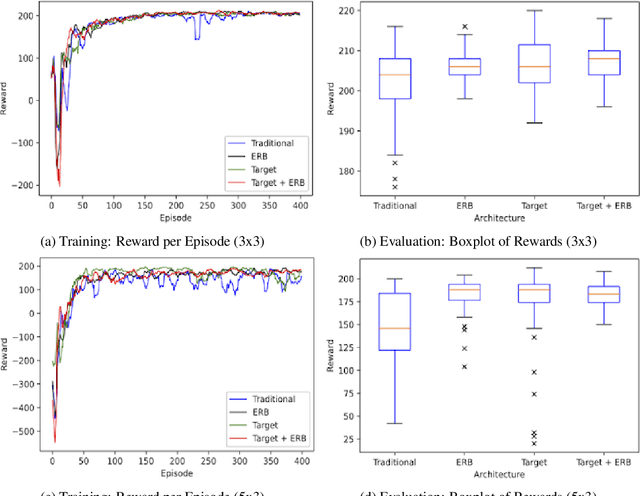

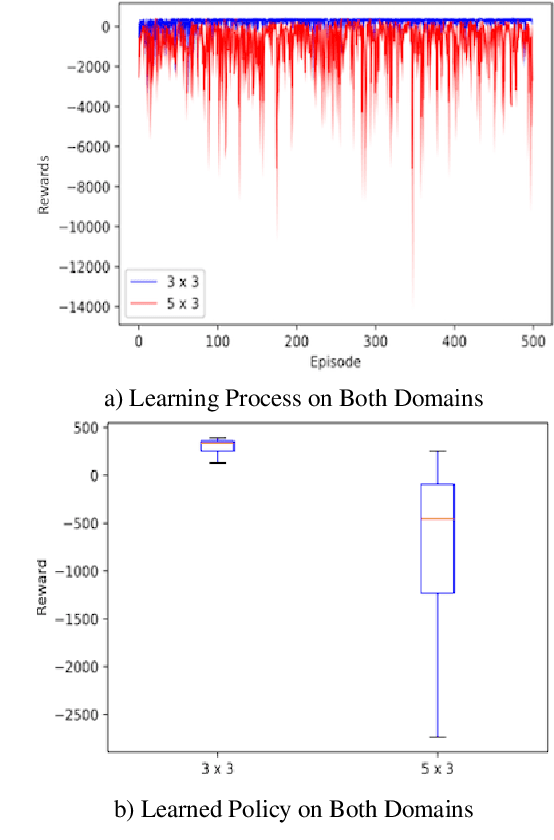

Abstract: Reinforcement learning has driven impressive advances in machine learning. Simultaneously, quantum-enhanced machine learning algorithms using quantum annealing are under heavy development. Recently, a multi-agent reinforcement learning (MARL) architecture combining both paradigms has been proposed. This novel algorithm, which utilizes Quantum Boltzmann Machines (QBMs) for Q-value approximation, has outperformed regular deep reinforcement learning in terms of time-steps needed to converge. However, this algorithm was restricted to single-agent and small 2x2 multi-agent grid domains. In this work, we propose an extension to the original concept in order to solve more challenging problems. Similar to classic DQNs, we add an experience replay buffer and use different networks for approximating the target and policy values. The experimental results show that learning becomes more stable and enables agents to find optimal policies in grid domains with higher complexity. Additionally, we assess how parameter sharing influences the agents' behavior in multi-agent domains. Quantum sampling proves to be a promising method for reinforcement learning tasks, but is currently limited by the QPU size and therefore by the size of the input and Boltzmann machine.
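The two DQN-style ingredients named in the abstract, an experience replay buffer and separate policy and target approximators, can be sketched generically as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: the class and function names are hypothetical, parameters are modelled as a plain dictionary rather than QBM weights, and the target update is assumed to be a periodic hard copy.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size store of transitions; the oldest entries are evicted first."""

    def __init__(self, capacity):
        self._storage = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state, done):
        self._storage.append((state, action, reward, next_state, done))

    def sample(self, batch_size, rng=random):
        # Uniform sampling without replacement decorrelates consecutive steps.
        return rng.sample(list(self._storage), batch_size)

    def __len__(self):
        return len(self._storage)


def sync_target(policy_params, target_params):
    """Hard update (assumed here): overwrite target parameters with policy ones."""
    target_params.clear()
    target_params.update(policy_params)


# Usage: fill a small buffer, draw a batch, then refresh the target parameters.
buffer = ReplayBuffer(capacity=3)
for step in range(5):
    buffer.push(step, 0, 1.0, step + 1, False)
batch = buffer.sample(2)

policy = {"w": 0.5}
target = {"w": 0.0}
sync_target(policy, target)
```

Keeping the target parameters fixed between synchronizations means the bootstrapped values do not shift with every policy update, which is the usual motivation for the stability gain the abstract reports.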

A Flexible Pipeline for the Optimization of CSG Trees

Sep 12, 2020

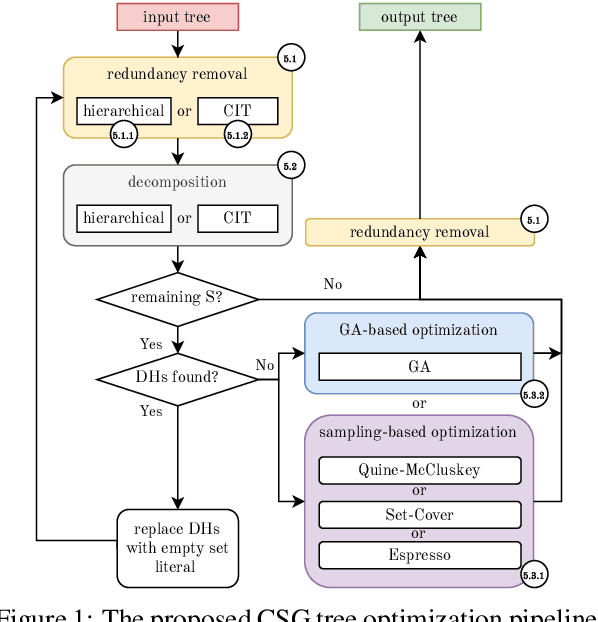

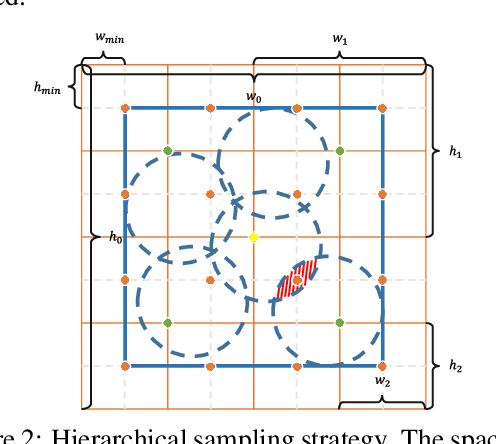

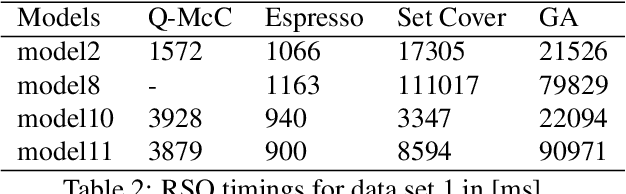

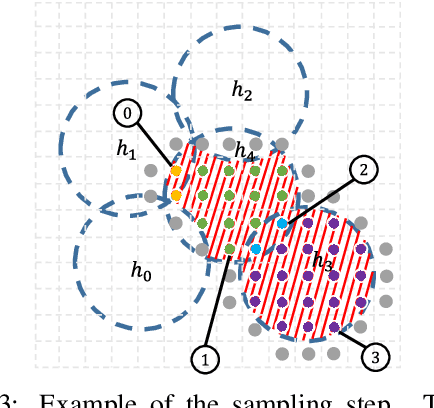

Abstract: CSG trees are an intuitive, yet powerful technique for the representation of geometry using a combination of Boolean set-operations and geometric primitives. In general, there exists an infinite number of trees all describing the same 3D solid. However, some trees are optimal regarding the number of used operations, their shape or other attributes, like their suitability for intuitive, human-controlled editing. In this paper, we present a systematic comparison of newly developed and existing tree optimization methods and propose a flexible processing pipeline with a focus on tree editability. The pipeline uses a redundancy removal and decomposition stage for complexity reduction and different (meta-)heuristics for remaining tree optimization. We also introduce a new quantitative measure for CSG tree editability and show how it can be used as a constraint in the optimization process.

The Holy Grail of Quantum Artificial Intelligence: Major Challenges in Accelerating the Machine Learning Pipeline

Apr 29, 2020

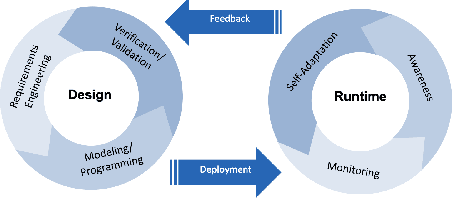

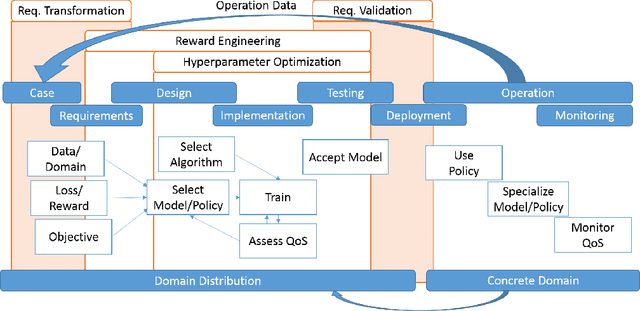

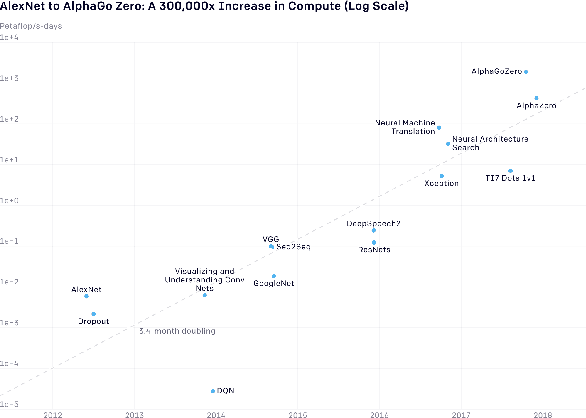

Abstract: We discuss the synergetic connection between quantum computing and artificial intelligence. After surveying current approaches to quantum artificial intelligence and relating them to a formal model for machine learning processes, we deduce four major challenges for the future of quantum artificial intelligence: (i) replace iterative training with faster quantum algorithms, (ii) distill the experience of larger amounts of data into the training process, (iii) allow quantum and classical components to be easily combined and exchanged, and (iv) build tools to thoroughly analyze whether observed benefits really stem from quantum properties of the algorithm.

Soccer Team Vectors

Jul 30, 2019

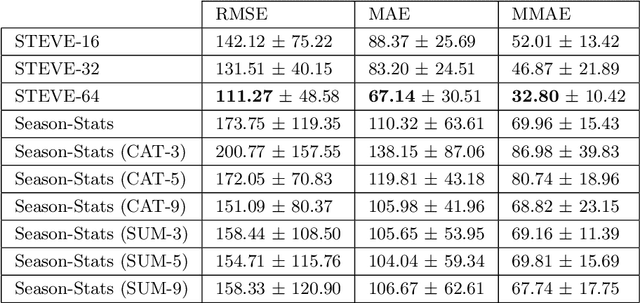

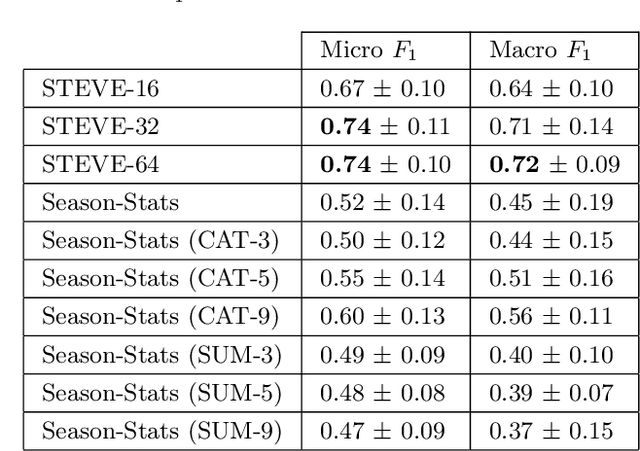

Abstract: In this work we present STEVE - Soccer TEam VEctors, a principled approach for learning real-valued vectors for soccer teams where similar teams are close to each other in the resulting vector space. STEVE relies only on freely available information about the matches teams played in the past. These vectors can serve as input to various machine learning tasks. Evaluating on the task of team market value estimation, STEVE outperforms all its competitors. Moreover, we use STEVE for similarity search and to rank soccer teams.

Adaptive Thompson Sampling Stacks for Memory Bounded Open-Loop Planning

Jul 11, 2019

Abstract: We propose Stable Yet Memory Bounded Open-Loop (SYMBOL) planning, a general memory bounded approach to partially observable open-loop planning. SYMBOL maintains an adaptive stack of Thompson Sampling bandits, whose size is bounded by the planning horizon and can be automatically adapted according to the underlying domain without any prior domain knowledge beyond a generative model. We empirically test SYMBOL in four large POMDP benchmark problems to demonstrate its effectiveness and robustness w.r.t. the choice of hyperparameters and evaluate its adaptive memory consumption. We also compare its performance with other open-loop planning algorithms and POMCP.
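As background for the building block mentioned above, a single Thompson Sampling bandit can be sketched as follows. This is an illustrative assumption rather than SYMBOL itself: it uses a Beta-Bernoulli model with binary rewards, which the abstract does not specify, and the class name is hypothetical. The stacking and adaptation logic of SYMBOL is not shown.

```python
import random


class BetaBernoulliBandit:
    """Thompson Sampling over arms with binary rewards and Beta(1, 1) priors."""

    def __init__(self, n_arms, seed=None):
        self.successes = [1] * n_arms  # Beta alpha parameter per arm
        self.failures = [1] * n_arms   # Beta beta parameter per arm
        self._rng = random.Random(seed)

    def select_arm(self):
        # Draw one plausible success rate per arm from its posterior,
        # then act greedily with respect to those draws.
        draws = [
            self._rng.betavariate(a, b)
            for a, b in zip(self.successes, self.failures)
        ]
        return max(range(len(draws)), key=draws.__getitem__)

    def update(self, arm, reward):
        # A reward of 1 counts as a success, 0 as a failure.
        if reward:
            self.successes[arm] += 1
        else:
            self.failures[arm] += 1


# Usage: one selection and two manual posterior updates.
bandit = BetaBernoulliBandit(n_arms=3, seed=0)
chosen = bandit.select_arm()
bandit.update(1, 1)
bandit.update(2, 0)
```

In an open-loop planner, one such bandit would typically be kept per planning step, so a stack of them represents a sequence of action choices without storing a search tree.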
