Christoph H. Lampert

Towards Robust Scaling Laws for Optimizers

Feb 07, 2026

Abstract: The quality of Large Language Model (LLM) pretraining depends on multiple factors, including the compute budget and the choice of optimization algorithm. Empirical scaling laws are widely used to predict loss as model size and training data grow; however, almost all existing studies fix the optimizer (typically AdamW). At the same time, a new generation of optimizers (e.g., Muon, Shampoo, SOAP) promises faster and more stable convergence, but their relationship with model and data scaling is not yet well understood. In this work, we study scaling laws across different optimizers. Empirically, we show that 1) separate Chinchilla-style scaling laws for each optimizer are ill-conditioned and have highly correlated parameters. Instead, 2) we propose a more robust law with shared power-law exponents and optimizer-specific rescaling factors, which enable direct comparison between optimizers. Finally, 3) we provide a theoretical analysis of gradient-based methods for the proxy task of a convex quadratic objective, demonstrating that Chinchilla-style scaling laws emerge naturally as a result of loss decomposition into irreducible, approximation, and optimization errors.
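To make the shape of such a law concrete, here is a minimal sketch. The first function is the usual Chinchilla-style form (an irreducible term plus power-law terms in model size and data). The second is only an assumed illustration of "shared exponents with optimizer-specific rescaling factors": the abstract does not state the exact functional form, so the way `rescale_n` and `rescale_d` enter, and all parameter names and values, are hypothetical.

```python
def chinchilla_loss(n_params, n_tokens, E, A, alpha, B, beta):
    """Chinchilla-style law: irreducible loss plus two power-law terms."""
    return E + A / n_params**alpha + B / n_tokens**beta


def shared_exponent_loss(n_params, n_tokens, E, A, alpha, B, beta,
                         rescale_n=1.0, rescale_d=1.0):
    """Assumed variant: exponents are shared across optimizers, and each
    optimizer only rescales the effective model size and data size.
    This parameterization is an illustration, not the paper's formula."""
    return (E
            + A / (rescale_n * n_params)**alpha
            + B / (rescale_d * n_tokens)**beta)


if __name__ == "__main__":
    # Made-up coefficients, chosen only to exercise the functions.
    shared = dict(E=1.7, A=400.0, alpha=0.34, B=410.0, beta=0.28)
    baseline = shared_exponent_loss(1e8, 2e9, **shared)
    other = shared_exponent_loss(1e8, 2e9, **shared, rescale_d=1.5)
    print(f"baseline optimizer: {baseline:.4f}")
    print(f"optimizer with rescale_d=1.5: {other:.4f}")
```

Under this assumed form, comparing two optimizers reduces to comparing their rescaling factors, since every other parameter is common to both.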

Matrix Factorization for Practical Continual Mean Estimation Under User-Level Differential Privacy

Jan 29, 2026

Abstract: We study continual mean estimation, where data vectors arrive sequentially and the goal is to maintain accurate estimates of the running mean. We address this problem under user-level differential privacy, which protects each user's entire dataset even when they contribute multiple data points. Previous work on this problem has focused on pure differential privacy. While important, this approach limits applicability, as it leads to overly noisy estimates. In contrast, we analyze the problem under approximate differential privacy, adopting recent advances in the Matrix Factorization mechanism. We introduce a novel mean-estimation-specific factorization, which is both efficient and accurate, achieving asymptotically lower mean-squared error bounds in continual mean estimation under user-level differential privacy.

DP-$λ$CGD: Efficient Noise Correlation for Differentially Private Model Training

Jan 29, 2026

Abstract: Differentially private stochastic gradient descent (DP-SGD) is the gold standard for training machine learning models with formal differential privacy guarantees. Several recent extensions improve its accuracy by introducing correlated noise across training iterations. Matrix factorization mechanisms are a prominent example, but they correlate noise across many iterations and require storing previously added noise vectors, leading to substantial memory overhead in some settings. In this work, we propose a new noise correlation strategy that correlates noise only with the immediately preceding iteration and cancels a controlled portion of it. Our method relies on noise regeneration using a pseudorandom noise generator, eliminating the need to store past noise. As a result, it requires no additional memory beyond standard DP-SGD. We show that the computational overhead is minimal and empirically demonstrate improved accuracy over DP-SGD.
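The idea of cancelling part of the previous step's noise without storing it can be sketched as follows. This is an illustration under assumptions, not the paper's algorithm: the function names, the seeding scheme `(seed, step)`, and the form `z_t - lam * z_{t-1}` are hypothetical choices, and the sketch says nothing about how `sigma` must be calibrated to obtain a privacy guarantee.

```python
import numpy as np


def noise_at(seed, step, dim, sigma):
    """Regenerate the Gaussian noise for `step` from (seed, step),
    so that past noise never has to be kept in memory."""
    rng = np.random.default_rng([seed, step])
    return sigma * rng.standard_normal(dim)


def correlated_noise(seed, step, dim, sigma, lam):
    """Fresh noise for this step minus a fraction `lam` of the
    previous step's noise, which is regenerated on the fly."""
    z_t = noise_at(seed, step, dim, sigma)
    if step == 0:
        return z_t
    z_prev = noise_at(seed, step - 1, dim, sigma)
    return z_t - lam * z_prev


if __name__ == "__main__":
    total = sum(correlated_noise(0, t, 4, 1.0, lam=1.0) for t in range(5))
    # With lam = 1 the sum telescopes to the last step's fresh noise.
    print(np.allclose(total, noise_at(0, 4, 4, 1.0)))
```

Because each noise vector is a deterministic function of the seed and the step index, the only extra cost is one additional noise draw per iteration.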

Learning Quantized Continuous Controllers for Integer Hardware

Nov 17, 2025

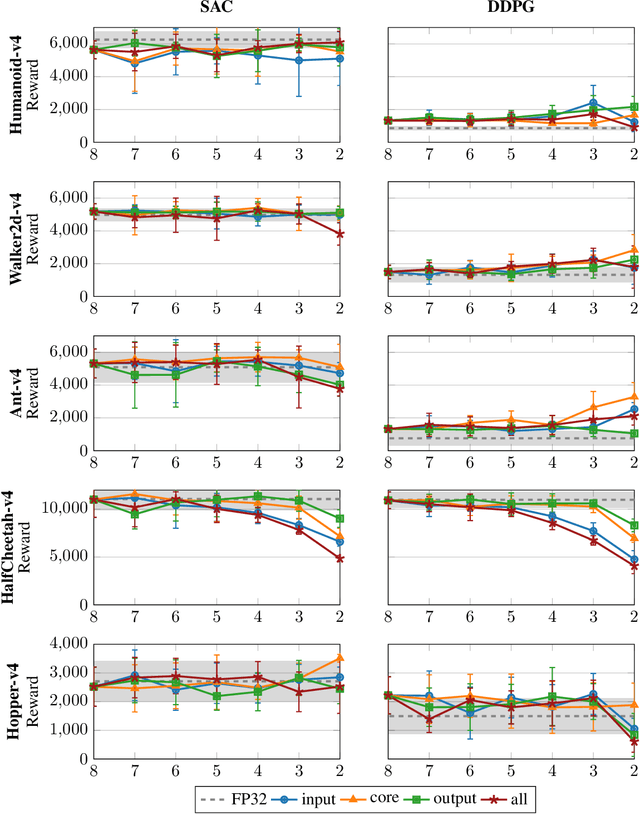

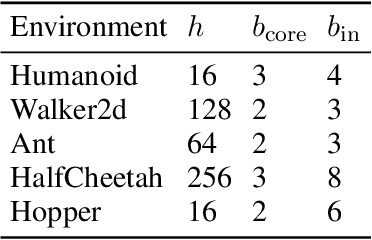

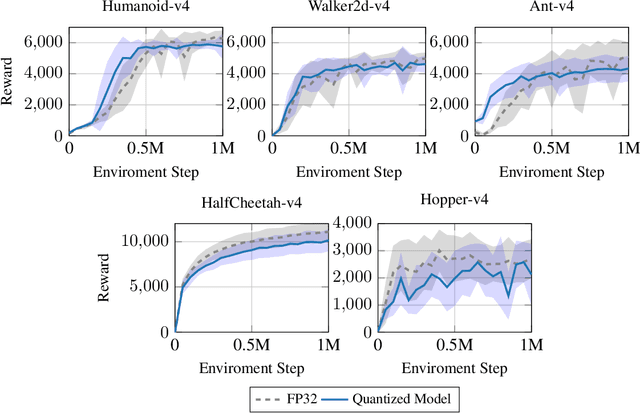

Abstract: Deploying continuous-control reinforcement learning policies on embedded hardware requires meeting tight latency and power budgets. Small FPGAs can deliver these, but only if costly floating-point pipelines are avoided. We study quantization-aware training (QAT) of policies for integer inference, and we present a learning-to-hardware pipeline that automatically selects low-bit policies and synthesizes them to an Artix-7 FPGA. Across five MuJoCo tasks, we obtain policy networks that are competitive with full-precision (FP32) policies but require as few as 3 or even only 2 bits per weight and per internal activation value, as long as input precision is chosen carefully. On the target hardware, the selected policies achieve inference latencies on the order of microseconds and consume microjoules per action, comparing favorably to a quantized reference. Finally, we observe that the quantized policies exhibit increased input noise robustness compared to the floating-point baseline.
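To give a sense of what 2 or 3 bits per weight means, the following sketch applies a plain symmetric uniform quantizer to a weight vector. It is a generic illustration only: the abstract does not say which quantization scheme is used in training, and the function name and per-tensor scaling are assumptions.

```python
import numpy as np


def quantize_symmetric(x, bits):
    """Map floats to signed integers in [-qmax, qmax] with one shared
    scale, where qmax = 2**(bits - 1) - 1 (assumed scheme)."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = float(np.max(np.abs(x)))
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = np.clip(np.round(x / scale), -qmax, qmax).astype(np.int32)
    return q, scale


if __name__ == "__main__":
    w = np.array([-1.0, -0.2, 0.0, 0.4, 1.0])
    for bits in (3, 2):
        q, scale = quantize_symmetric(w, bits)
        print(bits, q.tolist(), (q * scale).round(3).tolist())
```

At 2 bits this scheme leaves only the three levels -1, 0, and 1 (times the scale), which is why training with quantization in the loop matters at such widths.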

Scalable Interconnect Learning in Boolean Networks

Jul 03, 2025

Abstract: Learned Differentiable Boolean Logic Networks (DBNs) already deliver efficient inference on resource-constrained hardware. We extend them with a trainable, differentiable interconnect whose parameter count remains constant as input width grows, allowing DBNs to scale to far wider layers than earlier learnable-interconnect designs while preserving their advantageous accuracy. To further reduce model size, we propose two complementary pruning stages: an SAT-based logic equivalence pass that removes redundant gates without affecting performance, and a similarity-based, data-driven pass that outperforms a magnitude-style greedy baseline and offers a superior compression-accuracy trade-off.

Differentially Private Federated $k$-Means Clustering with Server-Side Data

Jun 04, 2025

Abstract: Clustering is a cornerstone of data analysis that is particularly suited to identifying coherent subgroups or substructures in unlabeled data, which are now generated continuously and in large amounts. However, in many cases traditional clustering methods are not applicable, because data are increasingly being produced and stored in a distributed way, e.g. on edge devices, and privacy concerns prevent them from being transferred to a central server. To address this challenge, we present a new algorithm for $k$-means clustering that is fully federated as well as differentially private. Our approach leverages (potentially small and out-of-distribution) server-side data to overcome the primary challenge of differentially private clustering methods: the need for a good initialization. Combining our initialization with a simple federated DP-Lloyd's algorithm, we obtain an algorithm that achieves excellent results on synthetic and real-world benchmark tasks. We also provide a theoretical analysis of our method that yields bounds on the convergence speed and cluster identification success.

Logic Gate Neural Networks are Good for Verification

May 26, 2025

Abstract: Learning-based systems are increasingly deployed across various domains, yet the complexity of traditional neural networks poses significant challenges for formal verification. Unlike conventional neural networks, learned Logic Gate Networks (LGNs) replace multiplications with Boolean logic gates, yielding a sparse, netlist-like architecture that is inherently more amenable to symbolic verification, while still delivering promising performance. In this paper, we introduce a SAT encoding for verifying global robustness and fairness in LGNs. We evaluate our method on five benchmark datasets, including a newly constructed 5-class variant, and find that LGNs are both verification-friendly and maintain strong predictive performance.

Neural Collapse is Globally Optimal in Deep Regularized ResNets and Transformers

May 21, 2025

Abstract: The empirical emergence of neural collapse -- a surprising symmetry in the feature representations of the training data in the penultimate layer of deep neural networks -- has spurred a line of theoretical research aimed at its understanding. However, existing work focuses on data-agnostic models or, when data structure is taken into account, it remains limited to multi-layer perceptrons. Our paper fills both these gaps by analyzing modern architectures in a data-aware regime: we prove that global optima of deep regularized transformers and residual networks (ResNets) with LayerNorm trained with cross entropy or mean squared error loss are approximately collapsed, and the approximation gets tighter as the depth grows. More generally, we formally reduce any end-to-end large-depth ResNet or transformer training into an equivalent unconstrained features model, thus justifying its wide use in the literature even beyond data-agnostic settings. Our theoretical results are supported by experiments on computer vision and language datasets showing that, as the depth grows, neural collapse indeed becomes more prominent.

Fast Rate Bounds for Multi-Task and Meta-Learning with Different Sample Sizes

May 21, 2025

Abstract: We present new fast-rate generalization bounds for multi-task and meta-learning in the unbalanced setting, i.e. when the tasks have training sets of different sizes, as is typically the case in real-world scenarios. Previously, only standard-rate bounds were known for this situation, while fast-rate bounds were limited to the setting where all training sets are of equal size. Our new bounds are numerically computable as well as interpretable, and we demonstrate their flexibility in handling a number of cases where they give stronger guarantees than previous bounds. Besides the bounds themselves, we also make conceptual contributions: we demonstrate that the unbalanced multi-task setting has different statistical properties than the balanced situation, specifically that proofs from the balanced situation do not carry over to the unbalanced setting. Additionally, we shed light on the fact that the unbalanced situation allows two meaningful definitions of multi-task risk, depending on whether all tasks should be considered equally important or sample-rich tasks should receive more weight than sample-poor ones.

Federated Learning with Unlabeled Clients: Personalization Can Happen in Low Dimensions

May 21, 2025

Abstract: Personalized federated learning has emerged as a popular approach to training on devices holding statistically heterogeneous data, known as clients. However, most existing approaches require a client to have labeled data for training or finetuning in order to obtain their own personalized model. In this paper we address this by proposing FLowDUP, a novel method that is able to generate a personalized model using only a forward pass with unlabeled data. The generated model parameters reside in a low-dimensional subspace, enabling efficient communication and computation. FLowDUP's learning objective is theoretically motivated by our new transductive multi-task PAC-Bayesian generalization bound, which provides performance guarantees for unlabeled clients. The objective is structured in such a way that it allows both clients with labeled data and clients with only unlabeled data to contribute to the training process. To supplement our theoretical results, we carry out a thorough experimental evaluation of FLowDUP, demonstrating strong empirical performance on a range of datasets with differing sorts of statistically heterogeneous clients. Through numerous ablation studies, we test the efficacy of the individual components of the method.
