Nikita P. Kalinin

Matrix Factorization for Practical Continual Mean Estimation Under User-Level Differential Privacy

Jan 29, 2026

Abstract: We study continual mean estimation, where data vectors arrive sequentially and the goal is to maintain accurate estimates of the running mean. We address this problem under user-level differential privacy, which protects each user's entire dataset even when they contribute multiple data points. Previous work on this problem has focused on pure differential privacy. While important, this approach limits applicability, as it leads to overly noisy estimates. In contrast, we analyze the problem under approximate differential privacy, adopting recent advances in the Matrix Factorization mechanism. We introduce a novel mean-estimation-specific factorization, which is both efficient and accurate, achieving asymptotically lower mean-squared error bounds in continual mean estimation under user-level differential privacy.

DP-$λ$CGD: Efficient Noise Correlation for Differentially Private Model Training

Jan 29, 2026

Abstract: Differentially private stochastic gradient descent (DP-SGD) is the gold standard for training machine learning models with formal differential privacy guarantees. Several recent extensions improve its accuracy by introducing correlated noise across training iterations. Matrix factorization mechanisms are a prominent example, but they correlate noise across many iterations and require storing previously added noise vectors, leading to substantial memory overhead in some settings. In this work, we propose a new noise correlation strategy that correlates noise only with the immediately preceding iteration and cancels a controlled portion of it. Our method relies on noise regeneration using a pseudorandom noise generator, eliminating the need to store past noise. As a result, it requires no additional memory beyond standard DP-SGD. We show that the computational overhead is minimal and empirically demonstrate improved accuracy over DP-SGD.
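
The idea of correlating noise only with the preceding iteration and regenerating it from a pseudorandom generator can be illustrated with a minimal sketch. Everything here is an assumption for illustration, not the paper's algorithm: the function names `step_noise` and `correlated_noise`, the seeding scheme, the form `z_t - lam * z_{t-1}`, and the use of Python's `random` module (which is not a cryptographically secure generator). Calibrating `sigma` to a privacy guarantee is outside the scope of this sketch.

```python
import random


def step_noise(seed, t, dim, sigma):
    """Regenerate the Gaussian noise vector of iteration t from (seed, t).

    Hypothetical helper: the noise is a deterministic function of the seed
    and the step index, so it never has to be stored.
    """
    rng = random.Random(seed * 1_000_003 + t)
    return [rng.gauss(0.0, sigma) for _ in range(dim)]


def correlated_noise(seed, t, dim, sigma, lam):
    """Noise added at iteration t: fresh noise minus a fraction lam of the
    previous iteration's noise (assumed form, for illustration only)."""
    z_t = step_noise(seed, t, dim, sigma)
    if t == 0:
        return z_t  # nothing to cancel at the first step
    z_prev = step_noise(seed, t - 1, dim, sigma)  # regenerated, not stored
    return [a - lam * b for a, b in zip(z_t, z_prev)]


if __name__ == "__main__":
    for t in range(3):
        print(t, correlated_noise(seed=7, t=t, dim=3, sigma=1.0, lam=0.5))
```

Because the previous noise vector is recomputed from the seed and the step index, the only extra cost in this sketch is one additional noise draw per iteration; no past noise is kept in memory.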

Normalized Square Root: Sharper Matrix Factorization Bounds for Differentially Private Continual Counting

Sep 17, 2025

Abstract: The factorization norms of the lower-triangular all-ones $n \times n$ matrix, $\gamma_2(M_{count})$ and $\gamma_{F}(M_{count})$, play a central role in differential privacy as they are used to give theoretical justification of the accuracy of the only known production-level private training algorithm of deep neural networks by Google. Prior to this work, the best known upper bound on $\gamma_2(M_{count})$ was $1 + \frac{\log n}{\pi}$ by Mathias (Linear Algebra and Applications, 1993), and the best known lower bound was $\frac{1}{\pi}(2 + \log(\frac{2n+1}{3})) \approx 0.507 + \frac{\log n}{\pi}$ (Matoušek, Nikolov, Talwar, IMRN 2020), where $\log$ denotes the natural logarithm. Recently, Henzinger and Upadhyay (SODA 2025) gave the first explicit factorization that meets the bound of Mathias (1993) and asked whether there exists an explicit factorization that improves on Mathias' bound. We answer this question in the affirmative. Additionally, we improve the lower bound significantly. More specifically, we show that $$ 0.701 + \frac{\log n}{\pi} + o(1) \;\leq\; \gamma_2(M_{count}) \;\leq\; 0.846 + \frac{\log n}{\pi} + o(1). $$ That is, we reduce the gap between the upper and lower bound to $0.14 + o(1)$. We also show that our factors achieve a better upper bound for $\gamma_{F}(M_{count})$ compared to prior work, and we establish an improved lower bound: $$ 0.701 + \frac{\log n}{\pi} + o(1) \;\leq\; \gamma_{F}(M_{count}) \;\leq\; 0.748 + \frac{\log n}{\pi} + o(1). $$ That is, the gap between the lower and upper bound provided by our explicit factorization is $0.047 + o(1)$.
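
To make the quantities in this abstract concrete, the following sketch builds the plain square-root factorization $M_{count} = B \cdot B$ known from earlier work. It is not the normalized factorization this paper introduces. It evaluates the upper bound on $\gamma_2(M_{count})$ that any factorization $M = BC$ yields, namely the largest row norm of $B$ times the largest column norm of $C$. The helper names are illustrative assumptions; the coefficients are the Taylor coefficients of $(1-x)^{-1/2}$.

```python
import math


def sqrt_coeffs(n):
    """First n Taylor coefficients of (1 - x)^(-1/2): 1, 1/2, 3/8, ..."""
    c = [1.0]
    for k in range(1, n):
        c.append(c[-1] * (2 * k - 1) / (2 * k))
    return c


def toeplitz_lower(col):
    """Lower-triangular Toeplitz matrix with the given first column."""
    n = len(col)
    return [[col[i - j] if i >= j else 0.0 for j in range(n)] for i in range(n)]


def matmul(a, b):
    """Plain dense product of two square matrices given as nested lists."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def sqrt_factorization_bound(n):
    """Max row norm of B times max column norm of C for B = C = sqrt factor.

    For a lower-triangular Toeplitz factor both norms are attained on the
    full coefficient vector, so the product is the sum of squares.
    """
    return sum(x * x for x in sqrt_coeffs(n))


if __name__ == "__main__":
    n = 64
    B = toeplitz_lower(sqrt_coeffs(n))
    M = matmul(B, B)  # should be the lower-triangular all-ones matrix
    print("max deviation from M_count:",
          max(abs(M[i][j] - (1.0 if i >= j else 0.0))
              for i in range(n) for j in range(n)))
    print("square-root factorization bound:", sqrt_factorization_bound(n))
    print("Mathias bound 1 + log(n)/pi:    ", 1 + math.log(n) / math.pi)
    print("0.846 + log(n)/pi (o(1) dropped):", 0.846 + math.log(n) / math.pi)
```

The printed values are only a finite-$n$ illustration; the bounds in the abstract hold up to the stated $o(1)$ terms.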

Back to Square Roots: An Optimal Bound on the Matrix Factorization Error for Multi-Epoch Differentially Private SGD

May 17, 2025

Abstract: Matrix factorization mechanisms for differentially private training have emerged as a promising approach to improve model utility under privacy constraints. In practical settings, models are typically trained over multiple epochs, requiring matrix factorizations that account for repeated participation. Existing theoretical upper and lower bounds on multi-epoch factorization error leave a significant gap. In this work, we introduce a new explicit factorization method, Banded Inverse Square Root (BISR), which imposes a banded structure on the inverse correlation matrix. This factorization enables us to derive an explicit and tight characterization of the multi-epoch error. We further prove that BISR achieves asymptotically optimal error by matching the upper and lower bounds. Empirically, BISR performs on par with state-of-the-art factorization methods, while being simpler to implement, computationally efficient, and easier to analyze.
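
One possible reading of "a banded structure on the inverse correlation matrix" is sketched below, under assumptions the abstract does not spell out: that the starting point is the inverse square root of the prefix-sum matrix, whose Toeplitz coefficients are the Taylor coefficients of $(1-x)^{1/2}$, and that banding means keeping only the first `bandwidth` coefficients. The function names are hypothetical, and the multi-epoch sensitivity analysis is not reproduced here.

```python
def inverse_sqrt_coeffs(n):
    """First n Taylor coefficients of (1 - x)^(1/2): 1, -1/2, -1/8, ..."""
    r = [1.0]
    for k in range(1, n):
        r.append(r[-1] * (2 * k - 3) / (2 * k))
    return r


def banded(coeffs, bandwidth):
    """Keep the first `bandwidth` coefficients and zero out the rest."""
    return [c if k < bandwidth else 0.0 for k, c in enumerate(coeffs)]


def toeplitz_inverse(a):
    """First column of the inverse of the lower-triangular Toeplitz matrix
    whose first column is a (requires a[0] != 0)."""
    b = [1.0 / a[0]]
    for k in range(1, len(a)):
        s = sum(a[j] * b[k - j] for j in range(1, k + 1))
        b.append(-s / a[0])
    return b


if __name__ == "__main__":
    n, bandwidth = 16, 4
    c_inv = banded(inverse_sqrt_coeffs(n), bandwidth)  # banded inverse
    c = toeplitz_inverse(c_inv)                        # correlation matrix column
    print("banded inverse column:", c_inv[:6])
    print("resulting C column:   ", c[:6])
```

With the bandwidth equal to `n`, the resulting column reduces to the coefficients of $(1-x)^{-1/2}$, that is, the unbanded square-root factor. A narrow band lets the correlated noise at each step be computed from only the last few noise vectors.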

Binned Group Algebra Factorization for Differentially Private Continual Counting

Apr 06, 2025

Abstract: We study memory-efficient matrix factorization for differentially private counting under continual observation. While recent work by Henzinger and Upadhyay (2024) introduced a factorization method with reduced error based on group algebra, its practicality in streaming settings remains limited by computational constraints. We present new structural properties of the group algebra factorization, enabling the use of a binning technique from Andersson and Pagh (2024). By grouping similar values in rows, the binning method reduces memory usage and running time to $\tilde O(\sqrt{n})$, where $n$ is the length of the input stream, while maintaining a low error. Our work bridges the gap between theoretical improvements in factorization accuracy and practical efficiency in large-scale private learning systems.

Continual Release Moment Estimation with Differential Privacy

Feb 10, 2025

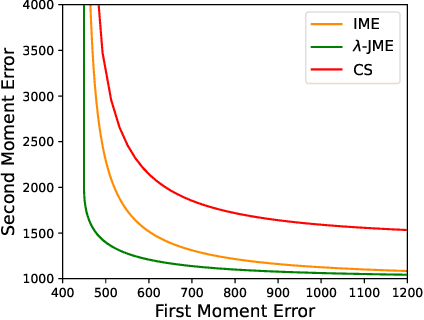

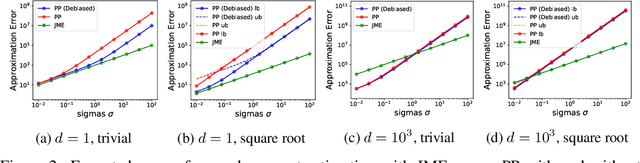

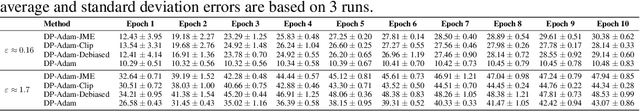

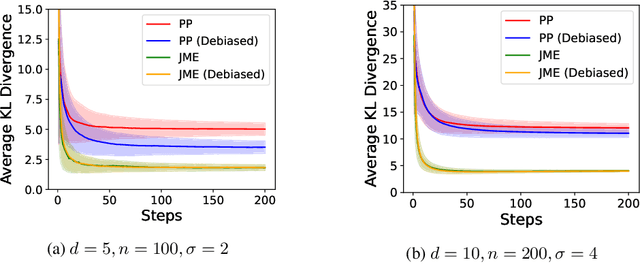

Abstract: We propose Joint Moment Estimation (JME), a method for continually and privately estimating both the first and second moments of data with reduced noise compared to naive approaches. JME uses the matrix mechanism and a joint sensitivity analysis to allow the second moment estimation with no additional privacy cost, thereby improving accuracy while maintaining privacy. We demonstrate JME's effectiveness in two applications: estimating the running mean and covariance matrix for Gaussian density estimation, and model training with DP-Adam on CIFAR-10.

Notes on Sampled Gaussian Mechanism

Sep 06, 2024

Abstract: In these notes, we prove a recent conjecture posed in the paper by Räisä, O. et al. [Subsampling is not Magic: Why Large Batch Sizes Work for Differentially Private Stochastic Optimization (2024)]. Theorem 6.2 of the paper asserts that for the Sampled Gaussian Mechanism (a composition of subsampling and additive Gaussian noise), the effective noise level, $\sigma_{\text{eff}} = \frac{\sigma(q)}{q}$, decreases as a function of the subsampling rate $q$. Consequently, larger subsampling rates are preferred for better privacy-utility trade-offs. Our notes provide a rigorous proof of Conjecture 6.3, which was left unresolved in the original paper, thereby completing the proof of Theorem 6.2.
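
A minimal sketch of the mechanism itself may help show where the ratio $\sigma/q$ comes from. It assumes Poisson subsampling (each record is included independently with probability $q$) and scalar records already bounded in magnitude; the function names are illustrative. Computing $\sigma(q)$ for a given privacy target requires a privacy accountant and is not attempted here.

```python
import random


def sampled_gaussian_sum(values, q, sigma, rng):
    """Poisson-subsample `values` with rate q, sum the sample, and add
    Gaussian noise with standard deviation sigma."""
    total = sum(v for v in values if rng.random() < q)
    return total + rng.gauss(0.0, sigma)


def rescaled_estimate(values, q, sigma, rng):
    """Unbiased estimate of the full sum: divide the noisy subsampled sum
    by q. The Gaussian part then has standard deviation sigma / q."""
    return sampled_gaussian_sum(values, q, sigma, rng) / q


if __name__ == "__main__":
    rng = random.Random(0)
    data = [1.0] * 1000  # assumed pre-clipped records
    for q in (0.1, 0.5, 1.0):
        print(q, rescaled_estimate(data, q, sigma=1.0, rng=rng))
```

Dividing by $q$ scales the added noise to standard deviation $\sigma/q$, which is why the abstract compares mechanisms through $\sigma_{\text{eff}} = \sigma(q)/q$ rather than through $\sigma(q)$ alone.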

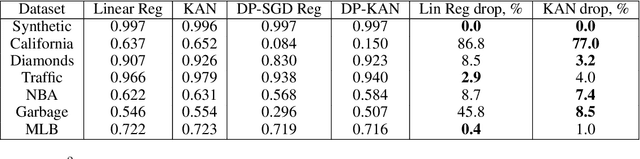

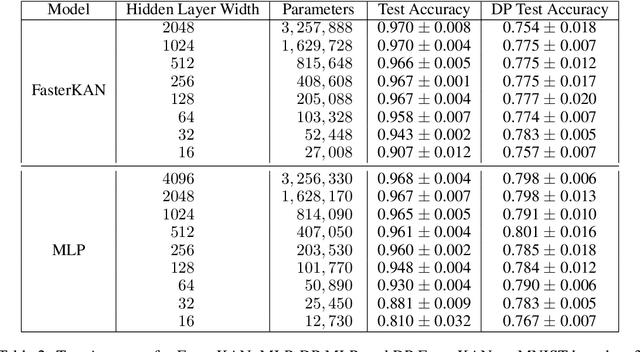

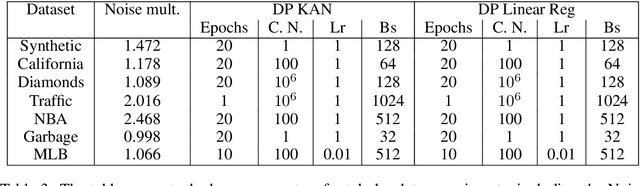

DP-KAN: Differentially Private Kolmogorov-Arnold Networks

Jul 17, 2024

Abstract: We study the Kolmogorov-Arnold Network (KAN), recently proposed as an alternative to the classical Multilayer Perceptron (MLP), in the context of differentially private model training. Using the DP-SGD algorithm, we demonstrate that KAN can be made private in a straightforward manner and evaluate its performance across several datasets. Our results indicate that the accuracy of KAN is not only comparable with MLP but also experiences similar deterioration due to privacy constraints, making it suitable for differentially private model training.
