Chris Dick

CSI-Based User Positioning, Channel Charting, and Device Classification with an NVIDIA 5G Testbed

Dec 11, 2025

Abstract:Channel-state information (CSI)-based sensing will play a key role in future cellular systems. However, no CSI dataset has been published from a real-world 5G NR system that facilitates the development and validation of suitable sensing algorithms. To close this gap, we publish three real-world wideband multi-antenna multi-open RAN radio unit (O-RU) CSI datasets from the 5G NR uplink channel: an indoor lab/office room dataset, an outdoor campus courtyard dataset, and a device classification dataset with six commercial-off-the-shelf (COTS) user equipments (UEs). These datasets have been recorded using a software-defined 5G NR testbed based on NVIDIA Aerial RAN CoLab Over-the-Air (ARC-OTA) with COTS hardware, which we have deployed at ETH Zurich. We demonstrate the utility of these datasets for three CSI-based sensing tasks: neural UE positioning, channel charting in real-world coordinates, and closed-set device classification. For all these tasks, our results show high accuracy: neural UE positioning achieves 0.6 cm (indoor) and 5.7 cm (outdoor) mean absolute error, channel charting in real-world coordinates achieves 73 cm mean absolute error (outdoor), and device classification achieves 99% (same day) and 95% (next day) accuracy. The CSI datasets, ground-truth UE position labels, CSI features, and simulation code are publicly available at https://caez.ethz.ch

Optimizing Puncturing Patterns of 5G NR LDPC Codes for Few-Iteration Decoding

Oct 28, 2024

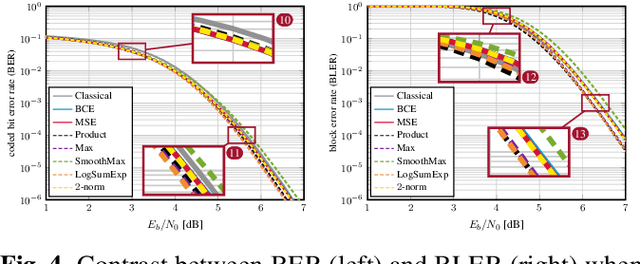

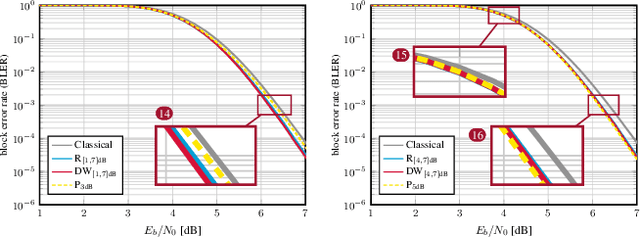

Abstract:Rate-matching of low-density parity-check (LDPC) codes enables a single code description to support a wide range of code lengths and rates. In 5G NR, rate matching is accomplished by extending (lifting) a base code to a desired target length and by puncturing (not transmitting) certain code bits. LDPC codes and rate matching are typically designed for the asymptotic performance limit with an ideal decoder. Practical LDPC decoders, however, carry out tens or fewer message-passing decoding iterations to achieve the target throughput and latency of modern wireless systems. We show that one can optimize LDPC code puncturing patterns for such few-iteration-constrained decoders using a method we call swapping of punctured and transmitted blocks (SPAT). Our simulation results show that SPAT yields from 0.20 dB up to 0.55 dB improved signal-to-noise ratio performance compared to the standard 5G NR LDPC code puncturing pattern for a wide range of code lengths and rates.
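As a rough illustration of block puncturing as described in the abstract above, the following sketch drops whole length-Z blocks of a codeword before transmission and re-inserts zero LLRs at the receiver. It is not the SPAT method itself: the function names, the lifting size `Z = 4`, the six-block codeword, and the choice of punctured blocks are all hypothetical values picked for readability.

```python
import numpy as np

def puncture(codeword, punctured_blocks, Z):
    """Drop the bits of the listed length-Z blocks; return kept bits and mask."""
    keep = np.ones(codeword.size, dtype=bool)
    for b in punctured_blocks:
        keep[b * Z:(b + 1) * Z] = False
    return codeword[keep], keep

def depuncture(llrs, keep):
    """Re-insert LLR = 0 (no channel information) at the punctured positions."""
    full = np.zeros(keep.size)
    full[keep] = llrs
    return full

# Hypothetical toy sizes: 6 blocks of Z = 4 bits each.
Z, n_blocks = 4, 6
codeword = np.arange(n_blocks * Z) % 2

# A puncturing pattern is just a set of block indices; here blocks 0 and 1.
tx, keep = puncture(codeword, punctured_blocks=[0, 1], Z=Z)

# Noiseless LLRs for the transmitted bits (sign convention assumed: bit 0 -> +1).
llrs = 1.0 - 2.0 * tx
rx = depuncture(llrs, keep)

print(tx.size, rx.size)  # 16 transmitted bits, 24 decoder inputs
```

Swapping a punctured block with a transmitted one, in this representation, amounts to changing the index list passed as `punctured_blocks`; the decoder input length stays the same.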

ML-Based Feedback-Free Adaptive MCS Selection for Massive Multi-User MIMO

Oct 20, 2023

Abstract:As wireless communication systems strive to improve spectral efficiency, there has been a growing interest in employing machine learning (ML)-based approaches for adaptive modulation and coding scheme (MCS) selection. In this paper, we introduce a new adaptive MCS selection framework for massive MIMO systems that operates without any feedback from users by solely relying on instantaneous uplink channel estimates. Our proposed method can effectively operate in multi-user scenarios where user feedback imposes excessive delay and bandwidth overhead. To learn the mapping between the user channel matrices and the optimal MCS level of each user, we develop a Convolutional Neural Network (CNN)-Long Short-Term Memory Network (LSTM)-based model and compare the performance with the state-of-the-art methods. Finally, we validate the effectiveness of our algorithm by evaluating it experimentally using real-world datasets collected from the RENEW massive MIMO platform.

DUIDD: Deep-Unfolded Interleaved Detection and Decoding for MIMO Wireless Systems

Dec 15, 2022

Abstract:Iterative detection and decoding (IDD) is known to achieve near-capacity performance in multi-antenna wireless systems. We propose deep-unfolded interleaved detection and decoding (DUIDD), a new paradigm that reduces the complexity of IDD while achieving even lower error rates. DUIDD interleaves the inner stages of the data detector and channel decoder, which expedites convergence and reduces complexity. Furthermore, DUIDD applies deep unfolding to automatically optimize algorithmic hyperparameters, soft-information exchange, message damping, and state forwarding. We demonstrate the efficacy of DUIDD using NVIDIA's Sionna link-level simulator in a 5G-near multi-user MIMO-OFDM wireless system with a novel low-complexity soft-input soft-output data detector, an optimized low-density parity-check decoder, and channel vectors from a commercial ray-tracer. Our results show that DUIDD outperforms classical IDD both in terms of block error rate and computational complexity.

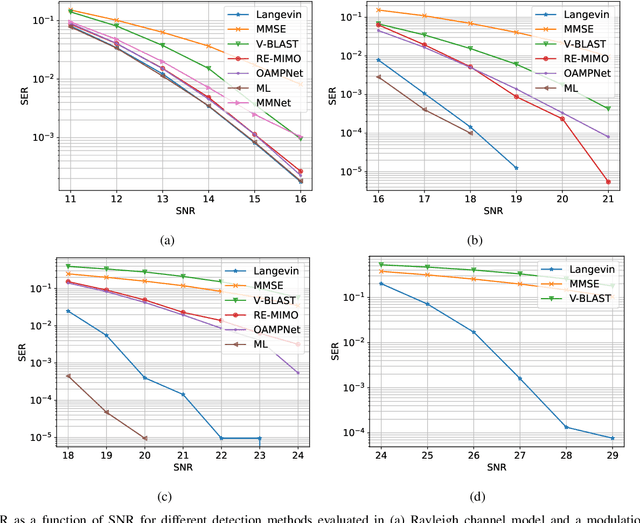

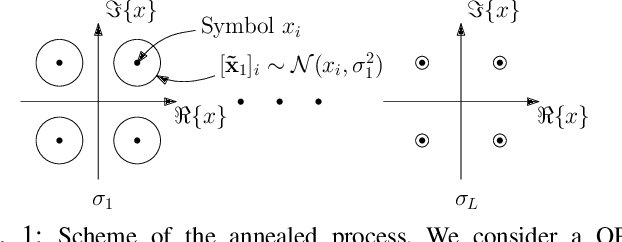

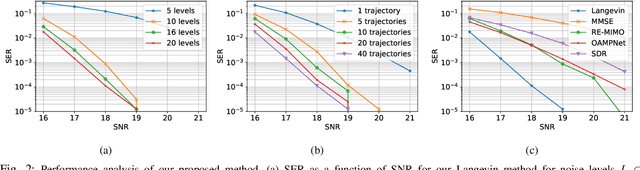

Accelerated massive MIMO detector based on annealed underdamped Langevin dynamics

Oct 26, 2022

Abstract:We propose a multiple-input multiple-output (MIMO) detector based on an annealed version of the *underdamped* Langevin (stochastic) dynamic. Our detector achieves state-of-the-art performance in terms of symbol error rate (SER) while keeping the computational complexity in check. Indeed, our method can be easily tuned to strike the right balance between computational complexity and performance as required by the application at hand. This balance is achieved by tuning hyperparameters that control the length of the simulated Langevin dynamic. Through numerical experiments, we demonstrate that our detector yields lower SER than competing approaches (including learning-based ones) with a lower running time compared to a previously proposed *overdamped* Langevin-based MIMO detector.

Bit Error and Block Error Rate Training for ML-Assisted Communication

Oct 25, 2022

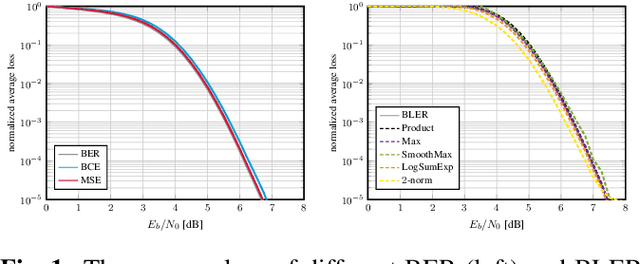

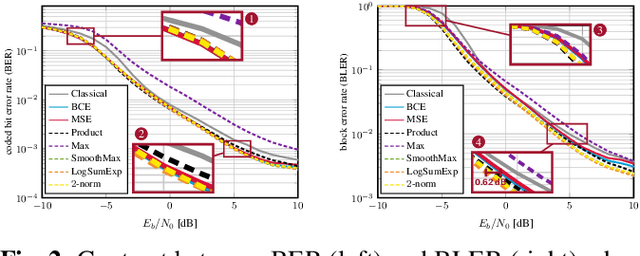

Abstract:Even though machine learning (ML) techniques are being widely used in communications, the question of how to train communication systems has received surprisingly little attention. In this paper, we show that the commonly used binary cross-entropy (BCE) loss is a sensible choice in uncoded systems, e.g., for training ML-assisted data detectors, but may not be optimal in coded systems. We propose new loss functions targeted at minimizing the block error rate and SNR de-weighting, a novel method that trains communication systems for optimal performance over a range of signal-to-noise ratios. The utility of the proposed loss functions as well as of SNR de-weighting is shown through simulations in NVIDIA Sionna.
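To make the distinction between bit-level and block-level objectives in the abstract above concrete, here is a minimal sketch that computes the BCE loss, the bit error rate, and the block error rate from the same LLRs. The LLR sign convention, the function names, and the toy numbers are assumptions for illustration; the proposed block-error-rate losses and SNR de-weighting are not implemented here.

```python
import numpy as np

def bce_from_llrs(bits, llrs):
    """Mean binary cross-entropy, assuming llr = log(P(b=1) / P(b=0))."""
    signs = 2.0 * bits - 1.0                       # map {0, 1} -> {-1, +1}
    return np.mean(np.logaddexp(0.0, -signs * llrs))  # stable log(1 + exp(.))

def ber_and_bler(bits, llrs):
    """Bit error rate and block error rate; each row is one block."""
    hard = (llrs > 0).astype(int)
    errors = hard != bits
    return errors.mean(), errors.any(axis=1).mean()

# Two hypothetical blocks of four bits each.
bits = np.array([[1, 0, 1, 1],
                 [0, 0, 1, 0]])
llrs = np.array([[2.0, -1.5, 0.5, 3.0],
                 [-2.0, -0.5, -0.1, -4.0]])

loss = bce_from_llrs(bits, llrs)
ber, bler = ber_and_bler(bits, llrs)
print(loss, ber, bler)
```

In this toy case a single wrong bit out of eight gives a bit error rate of 0.125 but a block error rate of 0.5, since one of the two blocks fails. That gap is one way to see why a per-bit loss and a per-block metric can diverge.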

Annealed Langevin Dynamics for Massive MIMO Detection

May 11, 2022

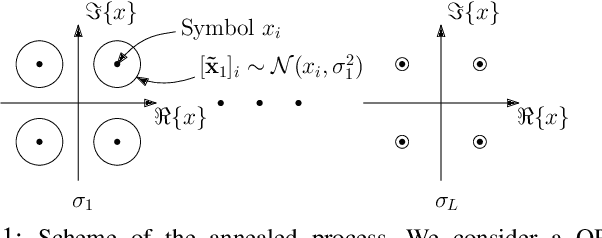

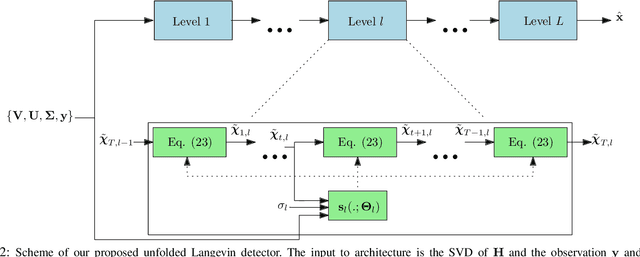

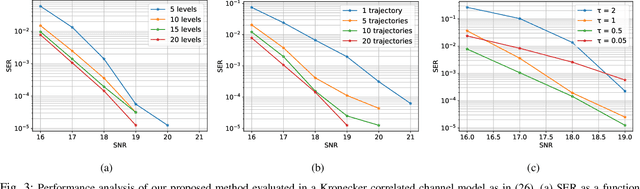

Abstract:Solving the optimal symbol detection problem in multiple-input multiple-output (MIMO) systems is known to be NP-hard. Hence, the objective of any detector of practical relevance is to get reasonably close to the optimal solution while keeping the computational complexity in check. In this work, we propose a MIMO detector based on an annealed version of Langevin (stochastic) dynamics. More precisely, we define a stochastic dynamical process whose stationary distribution coincides with the posterior distribution of the symbols given our observations. In essence, this allows us to approximate the maximum a posteriori estimator of the transmitted symbols by sampling from the proposed Langevin dynamic. Furthermore, we carefully craft this stochastic dynamic by gradually adding a sequence of noise with decreasing variance to the trajectories, which ensures that the estimated symbols belong to a pre-specified discrete constellation. Based on the proposed MIMO detector, we also design a robust version of the method by unfolding and parameterizing one term -- the score of the likelihood -- by a neural network. Through numerical experiments in both synthetic and real-world data, we show that our proposed detector yields state-of-the-art symbol error rate performance and the robust version becomes noise-variance agnostic.

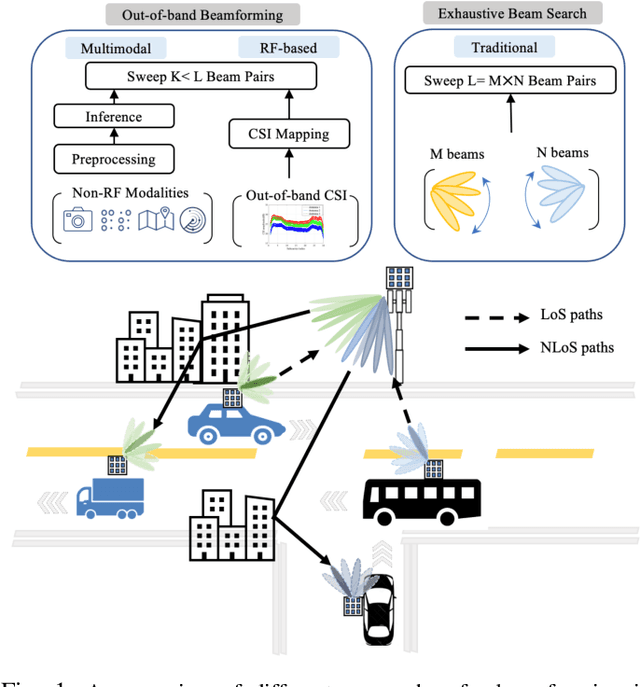

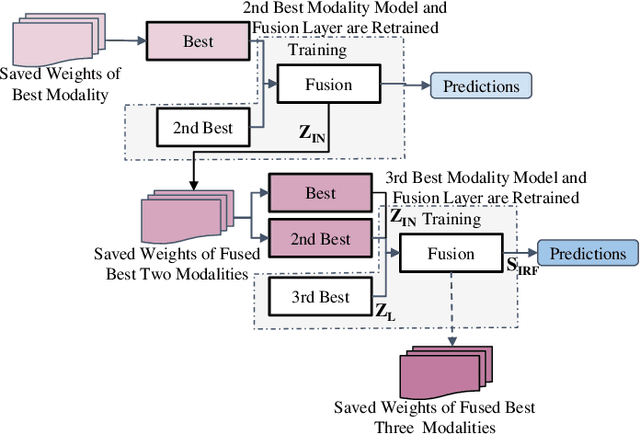

Going Beyond RF: How AI-enabled Multimodal Beamforming will Shape the NextG Standard

Mar 30, 2022

Abstract:Incorporating artificial intelligence and machine learning (AI/ML) methods within the 5G wireless standard promises autonomous network behavior and ultra-low-latency reconfiguration. However, the effort so far has focused purely on learning from radio frequency (RF) signals. Future standards and next-generation (nextG) networks beyond 5G will have two significant evolutions over the state-of-the-art 5G implementations: (i) a massive number of antenna elements, scaling up to hundreds or thousands, and (ii) inclusion of AI/ML in the critical path of the network reconfiguration process that can access sensor feeds from a variety of RF and non-RF sources. While the former allows unprecedented flexibility in 'beamforming', where signals combine constructively at a target receiver, the latter equips the network with enhanced situational awareness not captured by a single and isolated data modality. This survey presents a thorough analysis of the different approaches used for beamforming today, focusing on mmWave bands, and then proceeds to make a compelling case for considering non-RF sensor data from multiple modalities, such as LiDAR, radar, and GPS, for increasing beamforming directional accuracy and reducing processing time. This so-called multimodal beamforming will require deep learning-based fusion techniques, which will serve to augment the current RF-only and classical signal processing methods that do not scale well for massive antenna arrays. The survey describes relevant deep learning architectures for multimodal beamforming, identifies computational challenges and the role of edge computing in this process, covers dataset generation tools, and finally lists open challenges that the community should tackle to realize this transformative vision of the future of beamforming.

Detection by Sampling: Massive MIMO Detector based on Langevin Dynamics

Feb 24, 2022

Abstract:Optimal symbol detection in multiple-input multiple-output (MIMO) systems is known to be an NP-hard problem. Hence, the objective of any detector of practical relevance is to get reasonably close to the optimal solution while keeping the computational complexity in check. In this work, we propose a MIMO detector based on an annealed version of Langevin (stochastic) dynamics. More precisely, we define a stochastic dynamical process whose stationary distribution coincides with the posterior distribution of the symbols given our observations. In essence, this allows us to approximate the maximum a posteriori estimator of the transmitted symbols by sampling from the proposed Langevin dynamic. Furthermore, we carefully craft this stochastic dynamic by gradually adding a sequence of noise with decreasing variance to the trajectories, which ensures that the estimated symbols belong to a pre-specified discrete constellation. Through numerical experiments, we show that our proposed detector yields state-of-the-art symbol error rate performance.
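The sampling idea in the abstract above can be sketched for a small real-valued system as follows. This is a simplified illustration under stated assumptions, not the authors' detector: the likelihood score uses a Gaussian approximation, the prior score uses the posterior mean of the noise-perturbed constellation, and the function name, noise levels, step size `eps`, and toy dimensions are all hypothetical.

```python
import numpy as np

def langevin_detect(y, H, sigma0, constellation, levels, steps=20, eps=3e-5, rng=None):
    """Annealed Langevin sketch for y = H x + n with x drawn from a real constellation."""
    rng = np.random.default_rng() if rng is None else rng
    n_rx, n_tx = H.shape
    x = rng.uniform(constellation.min(), constellation.max(), size=n_tx)
    for sigma in levels:                      # noise levels, decreasing
        step = eps * (sigma / levels[-1]) ** 2
        # Assumed Gaussian approximation of p(y | noisy x) at this level.
        cov = sigma0 ** 2 * np.eye(n_rx) + sigma ** 2 * (H @ H.T)
        for _ in range(steps):
            # Prior score: (E[symbol | noisy x] - x) / sigma^2.
            d = -(x[:, None] - constellation[None, :]) ** 2 / (2 * sigma ** 2)
            w = np.exp(d - d.max(axis=1, keepdims=True))
            w /= w.sum(axis=1, keepdims=True)
            score_prior = (w @ constellation - x) / sigma ** 2
            # Likelihood score under the approximation above.
            score_lik = H.T @ np.linalg.solve(cov, y - H @ x)
            noise = rng.standard_normal(n_tx)
            x = x + step * (score_prior + score_lik) + np.sqrt(2 * step) * noise
    # Final projection onto the nearest constellation point.
    idx = np.argmin(np.abs(x[:, None] - constellation[None, :]), axis=1)
    return constellation[idx]

# Hypothetical toy setup: 4 BPSK symbols, 8 receive antennas.
rng = np.random.default_rng(0)
constellation = np.array([-1.0, 1.0])
H = rng.standard_normal((8, 4))
x_true = rng.choice(constellation, size=4)
sigma0 = 0.1
y = H @ x_true + sigma0 * rng.standard_normal(8)

levels = np.geomspace(1.0, 0.01, 10)
x_hat = langevin_detect(y, H, sigma0, constellation, levels, rng=rng)
print(x_true, x_hat)
```

The number of noise levels and the inner `steps` count together set the length of the simulated dynamic, so they trade running time against detection quality in this sketch.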

Robust MIMO Detection using Hypernetworks with Learned Regularizers

Oct 13, 2021

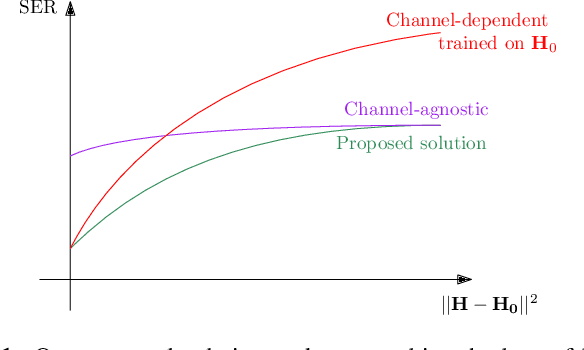

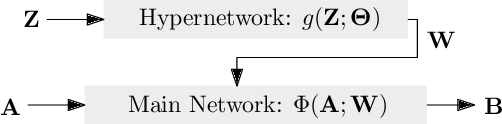

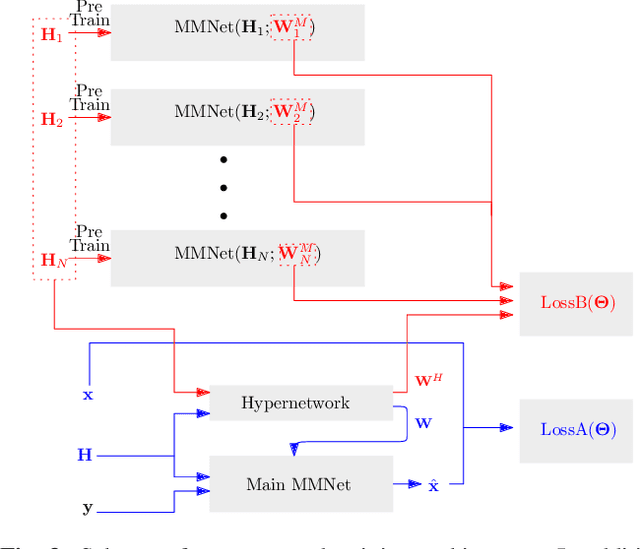

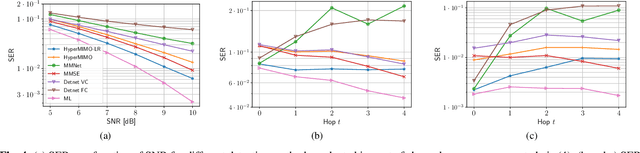

Abstract:Optimal symbol detection in multiple-input multiple-output (MIMO) systems is known to be an NP-hard problem. Recently, there has been growing interest in getting reasonably close to the optimal solution using neural networks while keeping the computational complexity in check. However, existing work based on deep learning shows that it is difficult to design a generic network that works well for a variety of channels. In this work, we propose a method that tries to strike a balance between symbol error rate (SER) performance and generality across channels. Our method is based on hypernetworks that generate the parameters of a neural network-based detector that works well on a specific channel. We propose a general framework by regularizing the training of the hypernetwork with some pre-trained instances of the channel-specific method. Through numerical experiments, we show that our proposed method yields high performance for a set of prespecified channel realizations while generalizing well to all channels drawn from a specific distribution.
