Bit Error and Block Error Rate Training for ML-Assisted Communication


Oct 25, 2022

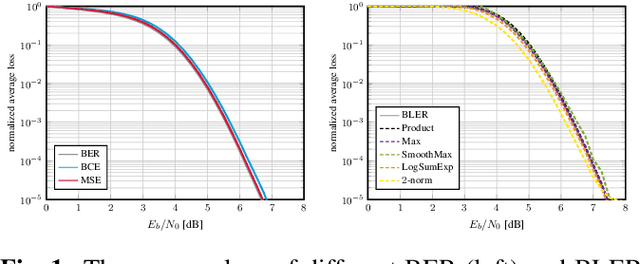

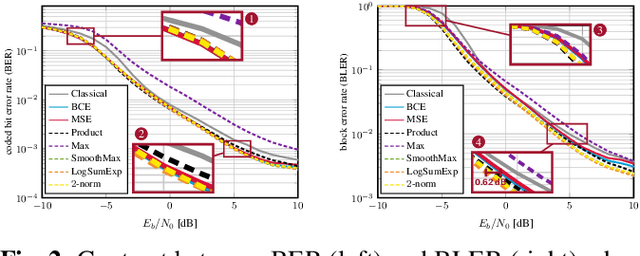

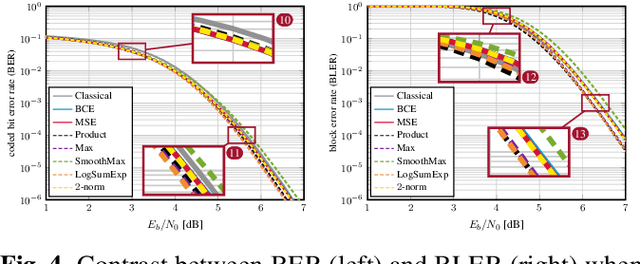

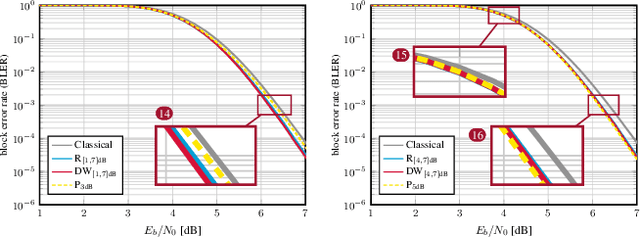

Even though machine learning (ML) techniques are widely used in communications, the question of how to train communication systems has received surprisingly little attention. In this paper, we show that the commonly used binary cross-entropy (BCE) loss is a sensible choice in uncoded systems, e.g., for training ML-assisted data detectors, but may not be optimal in coded systems. We propose new loss functions targeted at minimizing the block error rate. We also propose SNR de-weighting, a novel method that trains communication systems for optimal performance over a range of signal-to-noise ratios. The utility of the proposed loss functions and of SNR de-weighting is shown through simulations in NVIDIA Sionna.

* A shorter version of this paper has been submitted to the 2023 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP).
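
For readers unfamiliar with the baseline the abstract refers to, the following is a minimal sketch of a bit-wise BCE loss computed from detector logits. It is a generic illustration only: the function name `bce_from_logits` and the sign convention (a positive logit favours bit 1) are assumptions made here, and the sketch does not reproduce the paper's proposed block-error-rate losses or SNR de-weighting, which the abstract does not specify.

```python
import math


def bce_from_logits(bits, logits):
    """Mean binary cross-entropy between transmitted bits and detector logits.

    Assumption (not from the paper): logit > 0 means the detector favours bit = 1.
    """
    if len(bits) != len(logits):
        raise ValueError("bits and logits must have the same length")
    total = 0.0
    for b, l in zip(bits, logits):
        # Numerically stable form of
        # -[b * log(sigmoid(l)) + (1 - b) * log(1 - sigmoid(l))]
        total += max(l, 0.0) - l * b + math.log1p(math.exp(-abs(l)))
    return total / len(bits)


# Hypothetical example values: four transmitted bits and the detector's logits.
tx_bits = [1, 0, 1, 1]
rx_logits = [2.0, -1.5, 0.3, -0.7]
loss = bce_from_logits(tx_bits, rx_logits)
```

The loss is averaged over individual bits, so it tracks per-bit reliability rather than whether an entire coded block decodes correctly, which is the distinction between uncoded and coded systems that the abstract draws.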
