Mauro Belgiovine

T-PRIME: Transformer-based Protocol Identification for Machine-learning at the Edge

Jan 09, 2024

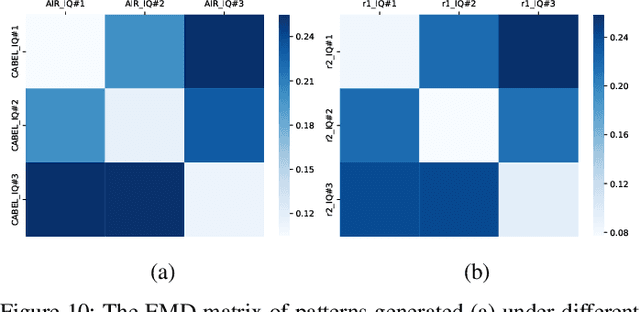

Abstract: Spectrum sharing allows different protocols of the same standard (e.g., 802.11 family) or different standards (e.g., LTE and DVB) to coexist in overlapping frequency bands. As this paradigm continues to spread, wireless systems must also evolve to identify active transmitters and unauthorized waveforms in real time under intentional distortion of preambles, extremely low signal-to-noise ratios, and challenging channel conditions. We overcome limitations of correlation-based preamble matching methods in such conditions through the design of T-PRIME: a Transformer-based machine learning approach. T-PRIME learns the structural design of transmitted frames through its attention mechanism, looking at sequence patterns that go beyond the preamble alone. The paper makes three contributions: First, it compares Transformer models and demonstrates their superiority over traditional methods and state-of-the-art neural networks. Second, it rigorously analyzes T-PRIME's real-time feasibility on DeepWave's AIR-T platform. Third, it utilizes an extensive 66 GB dataset of over-the-air (OTA) WiFi transmissions for training, which is released along with the code for community use. Results reveal nearly perfect (i.e., $>98\%$) classification accuracy under simulated scenarios, showing $100\%$ detection improvement over legacy methods in low SNR ranges, $97\%$ classification accuracy for OTA single-protocol transmissions, and up to $75\%$ double-protocol classification accuracy in interference scenarios.
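For context, the kind of correlation-based preamble matching that the abstract contrasts against can be sketched as a sliding normalized cross-correlation. This is only an illustrative baseline, not the authors' code: the function names, the threshold value, and the toy preamble are all assumptions made for this example.

```python
import math


def normalized_correlation(samples, preamble):
    """Slide a known preamble over complex samples.

    Returns (peak_score, offset), where peak_score lies in [0, 1].
    Hypothetical helper for illustration only.
    """
    n = len(preamble)
    p_energy = sum(abs(p) ** 2 for p in preamble)
    best_score, best_offset = 0.0, -1
    for offset in range(len(samples) - n + 1):
        window = samples[offset:offset + n]
        w_energy = sum(abs(s) ** 2 for s in window)
        if w_energy == 0.0:
            continue  # an all-zero window cannot match anything
        # Correlate the window against the complex-conjugated preamble.
        corr = sum(s * p.conjugate() for s, p in zip(window, preamble))
        score = abs(corr) / math.sqrt(w_energy * p_energy)
        if score > best_score:
            best_score, best_offset = score, offset
    return best_score, best_offset


def preamble_detected(samples, preamble, threshold=0.8):
    """Declare a detection when the peak score reaches the threshold.

    The threshold of 0.8 is an arbitrary, assumed value.
    """
    score, offset = normalized_correlation(samples, preamble)
    return score >= threshold, offset


# Toy example: a made-up 6-sample preamble embedded in silence.
toy_preamble = [1 + 1j, 1 - 1j, -1 + 1j, -1 - 1j, 1 + 1j, -1 - 1j]
toy_samples = [0j] * 5 + toy_preamble + [0j] * 4
found, where = preamble_detected(toy_samples, toy_preamble)
```

A detector of this shape depends on the preamble arriving largely undistorted, which is why intentional preamble distortion and very low SNR are the regimes in which the abstract reports gains for a learned approach.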

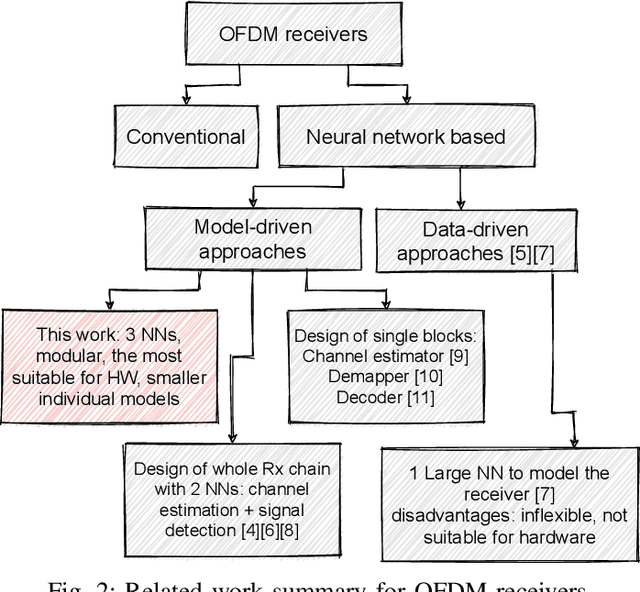

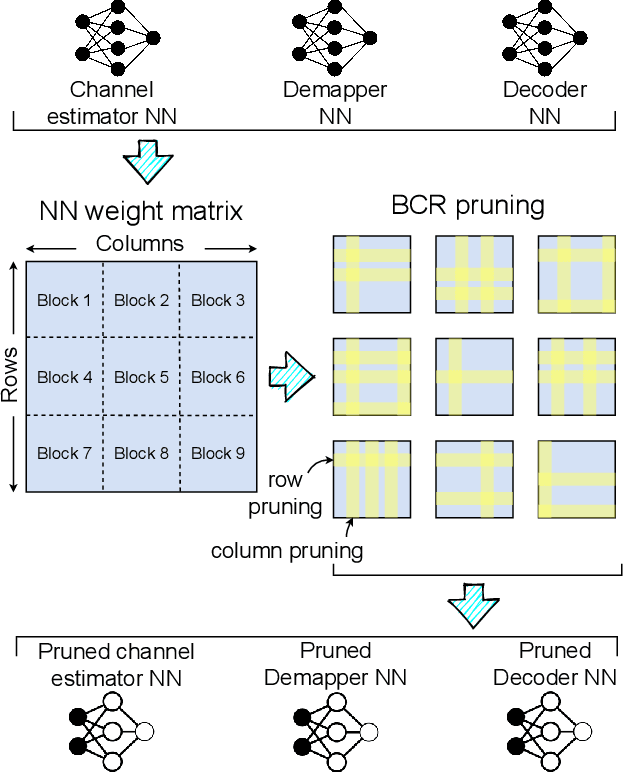

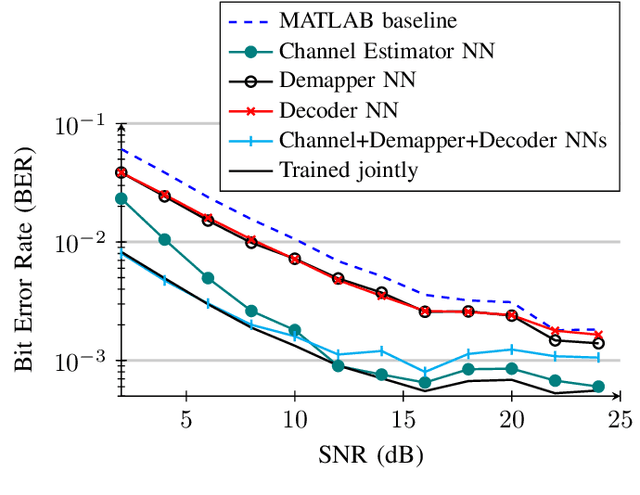

Neural Network-based OFDM Receiver for Resource Constrained IoT Devices

May 12, 2022

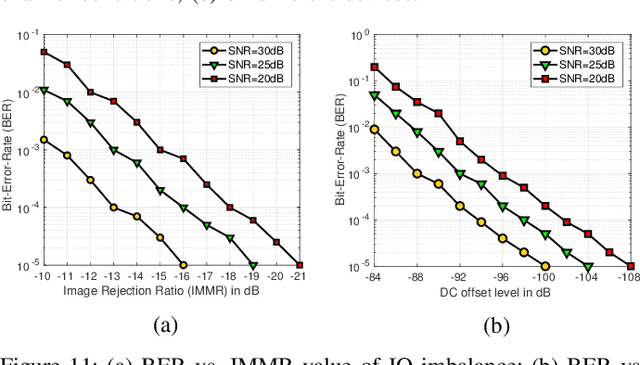

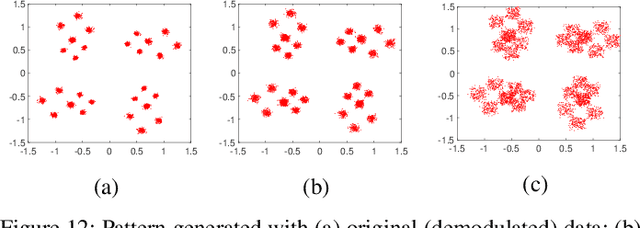

Abstract: Orthogonal Frequency Division Multiplexing (OFDM)-based waveforms are used for communication links in many current and emerging Internet of Things (IoT) applications, including the latest WiFi standards. For such OFDM-based transceivers, many core physical layer functions related to channel estimation, demapping, and decoding are implemented for specific choices of channel types and modulation schemes, among others. To decouple hard-wired choices from the receiver chain and thereby enhance the flexibility of IoT deployment in many novel scenarios without changing the underlying hardware, we explore a novel, modular Machine Learning (ML)-based receiver chain design. Here, ML blocks replace the individual processing blocks of an OFDM receiver, and we specifically describe this swapping for the legacy channel estimation, symbol demapping, and decoding blocks with Neural Networks (NNs). A unique aspect of this modular design is the flexible allocation of processing functions to the legacy or ML blocks, allowing them to interchangeably coexist. Furthermore, we study the implementation cost-benefits of the proposed NNs in resource-constrained IoT devices through pruning and quantization, as well as emulation of these compressed NNs within Field Programmable Gate Arrays (FPGAs). Our evaluations demonstrate that the proposed modular NN-based receiver improves the bit error rate of the traditional non-ML receiver by 61% and 10% on average for the simulated and over-the-air datasets, respectively. We further show complexity-performance tradeoffs by presenting computational complexity comparisons between the traditional algorithms and the proposed compressed NNs.
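The abstract does not say which pruning or quantization schemes were used. As a generic illustration of the two compression steps it names, the sketch below applies magnitude pruning followed by symmetric uniform quantization to a flat list of weights. Both function names, the 50% sparsity, and the 8-bit width are assumptions chosen for the example, not details from the paper.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with smallest magnitude."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    # Magnitude of the k-th smallest weight acts as the pruning threshold.
    threshold = sorted(abs(w) for w in weights)[k - 1]
    pruned, removed = [], 0
    for w in weights:
        if abs(w) <= threshold and removed < k:
            pruned.append(0.0)
            removed += 1
        else:
            pruned.append(w)
    return pruned


def quantize_symmetric(weights, bits=8):
    """Map floats to signed integers in [-(2^(bits-1)-1), 2^(bits-1)-1].

    Returns (integer_codes, scale); dequantize with code * scale.
    """
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / qmax
    codes = [round(w / scale) for w in weights]
    return codes, scale


# Toy weights, made up for illustration.
toy_weights = [0.5, -0.1, 0.05, -1.0]
sparse = magnitude_prune(toy_weights, sparsity=0.5)
codes, scale = quantize_symmetric(sparse, bits=8)
restored = [c * scale for c in codes]
```

Pruning reduces the number of multiply-accumulate operations, while quantization shrinks each remaining weight to a small integer, which is what makes both steps relevant to FPGA-class hardware.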

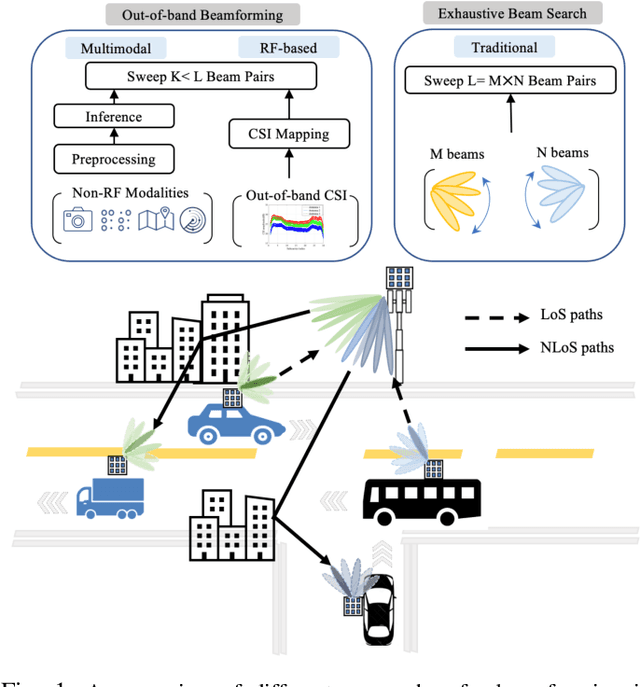

Going Beyond RF: How AI-enabled Multimodal Beamforming will Shape the NextG Standard

Mar 30, 2022

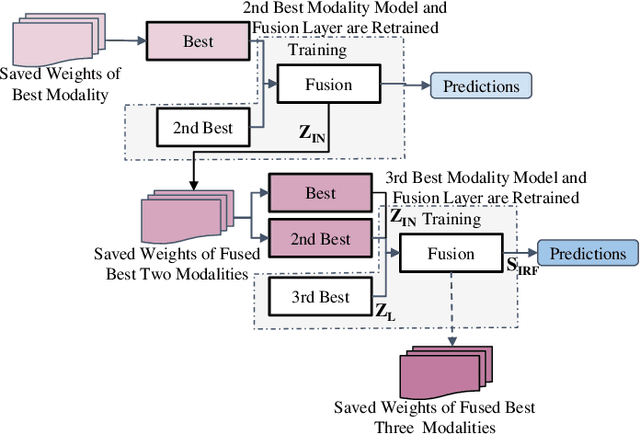

Abstract: Incorporating artificial intelligence and machine learning (AI/ML) methods within the 5G wireless standard promises autonomous network behavior and ultra-low-latency reconfiguration. However, efforts so far have focused purely on learning from radio frequency (RF) signals. Future standards and next-generation (nextG) networks beyond 5G will have two significant evolutions over the state-of-the-art 5G implementations: (i) a massive number of antenna elements, scaling up to hundreds or thousands, and (ii) inclusion of AI/ML in the critical path of the network reconfiguration process that can access sensor feeds from a variety of RF and non-RF sources. While the former allows unprecedented flexibility in 'beamforming', where signals combine constructively at a target receiver, the latter gives the network enhanced situational awareness not captured by a single, isolated data modality. This survey presents a thorough analysis of the different approaches used for beamforming today, focusing on mmWave bands, and then proceeds to make a compelling case for considering non-RF sensor data from multiple modalities, such as LiDAR, radar, and GPS, for increasing beamforming directional accuracy and reducing processing time. This idea of so-called multimodal beamforming will require deep learning-based fusion techniques, which will serve to augment the current RF-only and classical signal processing methods that do not scale well for massive antenna arrays. The survey describes relevant deep learning architectures for multimodal beamforming, identifies computational challenges and the role of edge computing in this process, covers dataset generation tools, and finally lists open challenges that the community should tackle to realize this transformative vision of the future of beamforming.

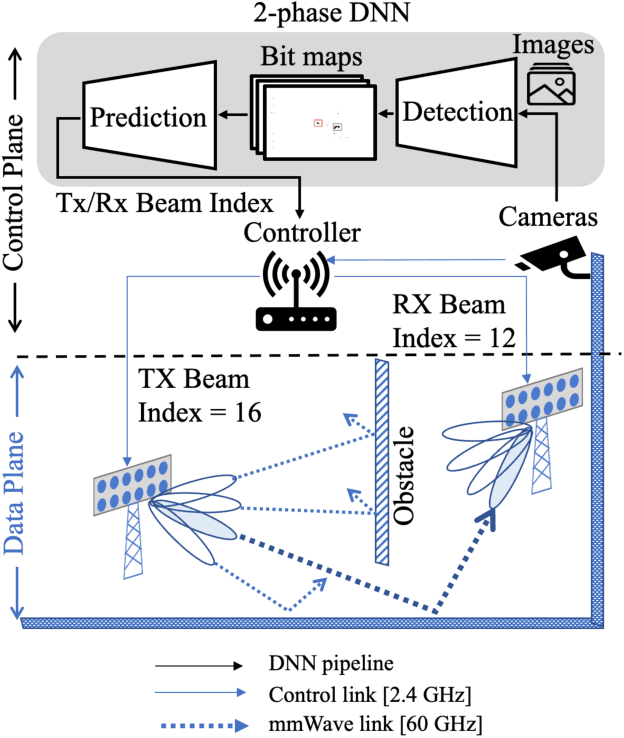

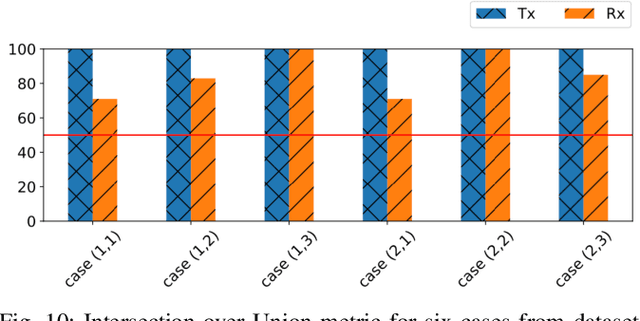

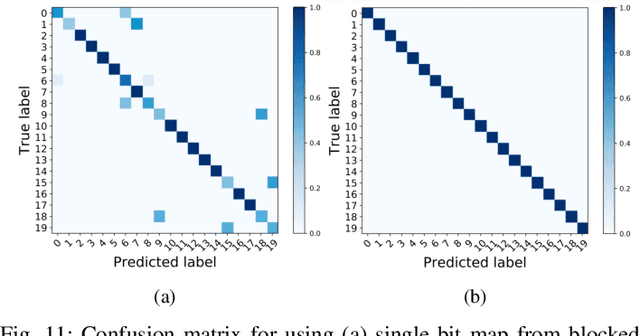

Machine Learning on Camera Images for Fast mmWave Beamforming

Feb 15, 2021

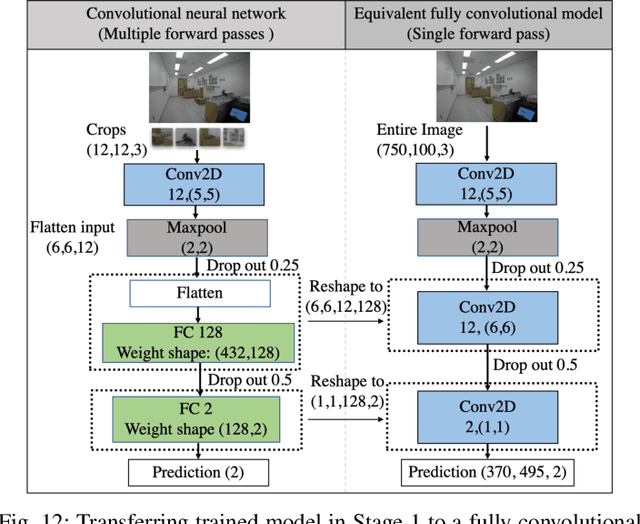

Abstract: Perfect alignment in chosen beam sectors at both transmit and receive nodes is required for beamforming in mmWave bands. Current 802.11ad WiFi and emerging 5G cellular standards spend up to several milliseconds exploring different sector combinations to identify the beam pair with the highest SNR. In this paper, we propose a machine learning (ML) approach with two sequential convolutional neural networks (CNNs) that uses out-of-band information, in the form of camera images, to (i) rapidly identify the locations of the transmitter and receiver nodes, and then (ii) return the optimal beam pair. We experimentally validate this intriguing concept for indoor settings using the NI 60 GHz mmWave transceiver. Our results reveal that our ML approach reduces beamforming-related exploration time by 93% under different ambient lighting conditions, with an error of less than 1% compared to the time-intensive deterministic method defined by the current standards.
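To make the cost of sector exploration concrete, the sketch below contrasts an exhaustive sweep over every transmit/receive sector pair with a sweep restricted to a short candidate list, such as one a predictor might supply. It is a simplified illustration and not the procedure defined by 802.11ad or used in the paper: the SNR table, the candidate list, and both function names are assumed for the example.

```python
def exhaustive_beam_search(snr_db):
    """Probe every (tx_sector, rx_sector) pair and keep the highest SNR.

    `snr_db[tx][rx]` is an assumed table of measured SNR values in dB.
    Returns ((tx, rx, snr), number_of_probes).
    """
    best = None
    probes = 0
    for tx, row in enumerate(snr_db):
        for rx, snr in enumerate(row):
            probes += 1
            if best is None or snr > best[2]:
                best = (tx, rx, snr)
    return best, probes


def restricted_beam_search(snr_db, candidates):
    """Probe only the given (tx, rx) candidate pairs."""
    best = None
    for tx, rx in candidates:
        snr = snr_db[tx][rx]
        if best is None or snr > best[2]:
            best = (tx, rx, snr)
    return best, len(candidates)


# Made-up SNR table: 2 transmit sectors by 3 receive sectors.
toy_snr = [
    [1.0, 5.0, 2.0],
    [3.0, 9.5, 0.5],
]
full_best, full_probes = exhaustive_beam_search(toy_snr)
short_best, short_probes = restricted_beam_search(toy_snr, [(1, 1), (0, 1)])
```

The number of probes in the exhaustive sweep grows with the product of the sector counts at both ends, so any method that narrows the candidate set in advance cuts exploration time roughly in proportion.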

ORACLE: Optimized Radio clAssification through Convolutional neuraL nEtworks

Dec 03, 2018

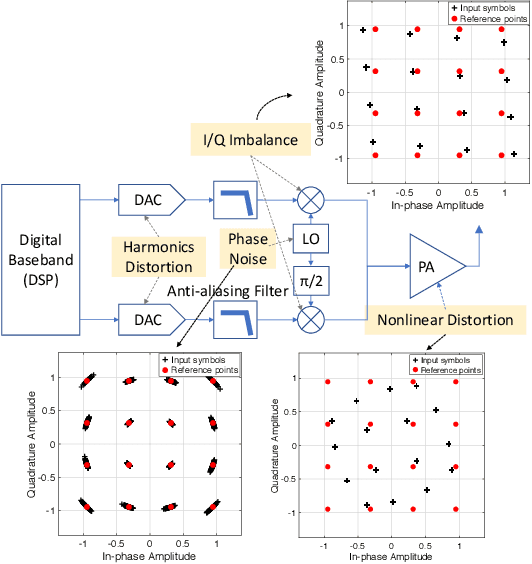

Abstract: This paper describes the architecture and performance of ORACLE, an approach for detecting a unique radio from a large pool of bit-similar devices (same hardware, protocol, physical address, MAC ID) using only IQ samples at the physical layer. ORACLE trains a convolutional neural network (CNN) that balances computational time and accuracy, showing 99% classification accuracy for a 16-node USRP X310 SDR testbed and an external database of >100 COTS WiFi devices. Our work makes the following contributions: (i) it studies the hardware-centric features within the transmitter chain that cause IQ sample variations; (ii) for an idealized static channel environment, it proposes a CNN architecture requiring only raw IQ samples accessible at the front-end, without channel estimation or prior knowledge of the communication protocol; (iii) for dynamic channels, it demonstrates a principled method of feedback-driven transmitter-side modifications that uses channel estimation at the receiver to increase differentiability for the CNN classifier. The key innovation here is to intentionally introduce controlled imperfections on the transmitter side through software directives, while minimizing the change in bit error rate. Unlike previous work that imposes constant environmental conditions, ORACLE adopts the 'train once, deploy anywhere' paradigm with near-perfect device classification accuracy.
