Carlos Bocanegra

Going Beyond RF: How AI-enabled Multimodal Beamforming will Shape the NextG Standard

Mar 30, 2022

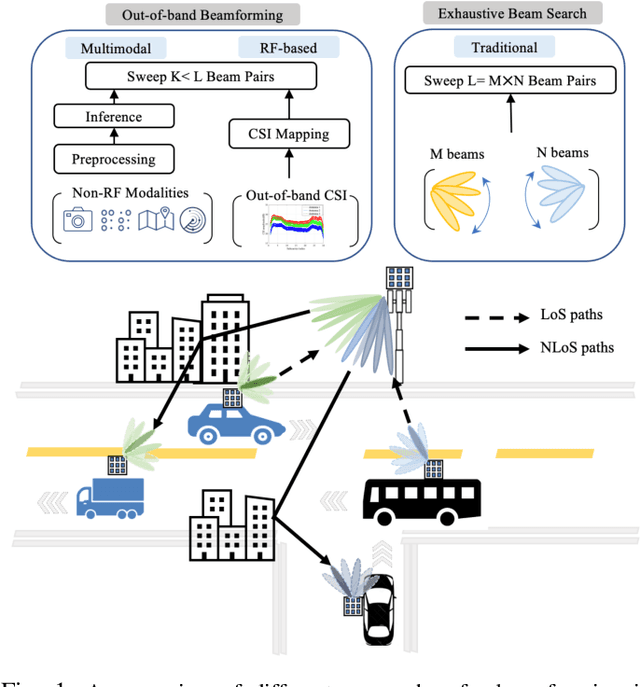

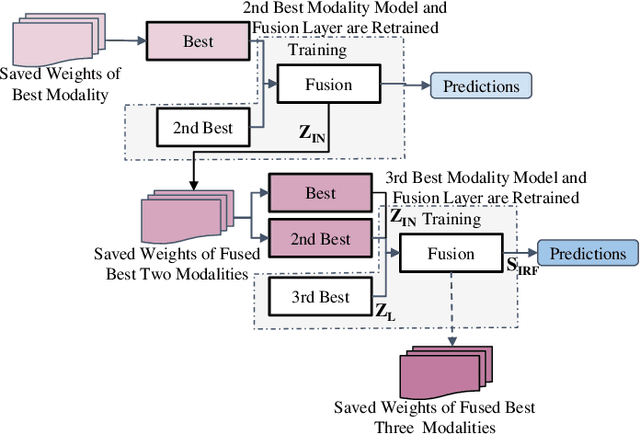

Abstract: Incorporating artificial intelligence and machine learning (AI/ML) methods within the 5G wireless standard promises autonomous network behavior and ultra-low-latency reconfiguration. However, the effort so far has focused purely on learning from radio frequency (RF) signals. Future standards and next-generation (nextG) networks beyond 5G will have two significant evolutions over state-of-the-art 5G implementations: (i) a massive number of antenna elements, scaling up to hundreds or thousands, and (ii) the inclusion of AI/ML in the critical path of the network reconfiguration process, with access to sensor feeds from a variety of RF and non-RF sources. While the former allows unprecedented flexibility in 'beamforming', where signals combine constructively at a target receiver, the latter gives the network enhanced situational awareness that no single, isolated data modality captures. This survey presents a thorough analysis of the different approaches used for beamforming today, focusing on mmWave bands, and then makes a compelling case for using non-RF sensor data from multiple modalities, such as LiDAR, radar, and GPS, to increase beamforming directional accuracy and reduce processing time. This so-called multimodal beamforming will require deep-learning-based fusion techniques, which will augment the current RF-only and classical signal processing methods that do not scale well to massive antenna arrays. The survey describes relevant deep learning architectures for multimodal beamforming and dataset generation tools, identifies computational challenges and the role of edge computing in this process, and finally lists open challenges that the community should tackle to realize this transformative vision of the future of beamforming.
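
To make the beamforming idea concrete, here is a minimal Python sketch that is not taken from the survey. It assumes a half-wavelength uniform linear array (ULA), a hypothetical codebook with one beam every 2° between -60° and 60°, and noise-free received power. It shows that a beam matched to the user's direction reaches a gain equal to the number of elements N (the per-element signals add constructively), and it contrasts an exhaustive beam sweep with a hypothetical sensor-aided sweep that only tests beams near a coarse angle estimate, such as one derived from GPS. The names `steering_vector`, `beam_gain`, `sweep`, `sensor_aided_sweep`, and `CODEBOOK` are illustrative only.

```python
import cmath
import math


def steering_vector(n_elements, angle_rad, spacing=0.5):
    """Per-element phase response of a ULA toward angle_rad.

    spacing is the element spacing in wavelengths (0.5 = half-wavelength).
    """
    return [cmath.exp(1j * 2 * math.pi * spacing * k * math.sin(angle_rad))
            for k in range(n_elements)]


def beam_gain(weights, angle_rad):
    """Normalized array gain |w^H a(theta)|^2 / N for unit-modulus weights w."""
    a = steering_vector(len(weights), angle_rad)
    s = sum(w.conjugate() * ak for w, ak in zip(weights, a))
    return abs(s) ** 2 / len(weights)


def sweep(codebook_deg, user_angle_deg, n_elements):
    """Try every candidate beam and keep the one with the highest simulated power.

    Returns (best_beam_deg, number_of_beams_measured).
    """
    user = math.radians(user_angle_deg)
    best = max(codebook_deg,
               key=lambda b: beam_gain(steering_vector(n_elements, math.radians(b)), user))
    return best, len(codebook_deg)


def sensor_aided_sweep(codebook_deg, user_angle_deg, n_elements, est_deg, window_deg):
    """Only measure beams within window_deg of a coarse side-information estimate.

    Falls back to the full codebook if no beam lies inside the window.
    """
    candidates = [b for b in codebook_deg if abs(b - est_deg) <= window_deg]
    return sweep(candidates or codebook_deg, user_angle_deg, n_elements)


# Hypothetical codebook: one beam every 2 degrees from -60 to 60 (61 beams).
CODEBOOK = list(range(-60, 61, 2))

if __name__ == "__main__":
    print(sweep(CODEBOOK, 20, 32))  # (20, 61)
    print(sensor_aided_sweep(CODEBOOK, 20, 32, est_deg=23, window_deg=6))  # (20, 6)
```

In this toy setting both searches select the 20° beam, but the sensor-aided variant measures 6 beams instead of 61. A real system would also have to handle noise, errors in the side information, and multipath, which this sketch ignores.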

AirNN: Neural Networks with Over-the-Air Convolution via Reconfigurable Intelligent Surfaces

Feb 07, 2022

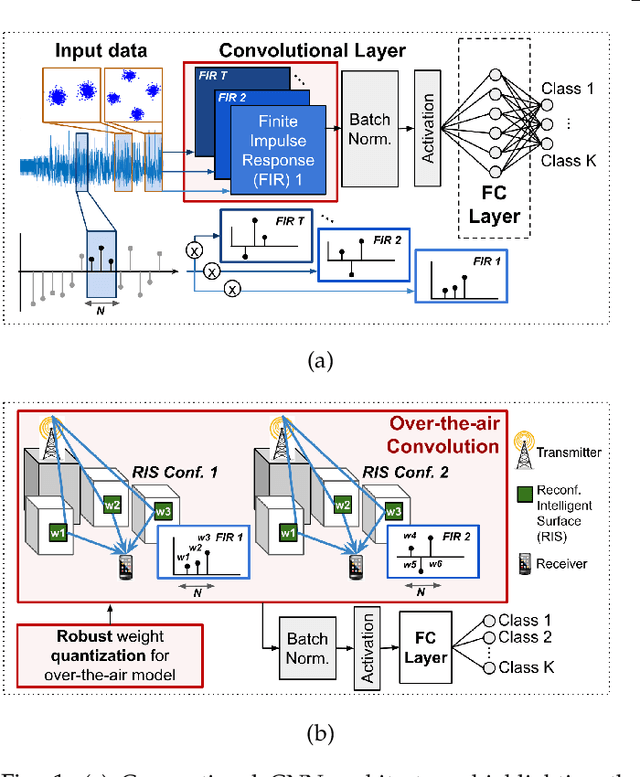

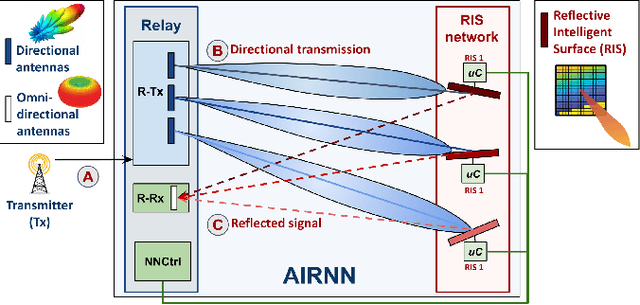

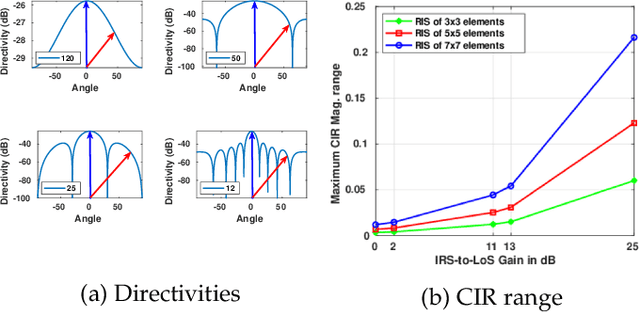

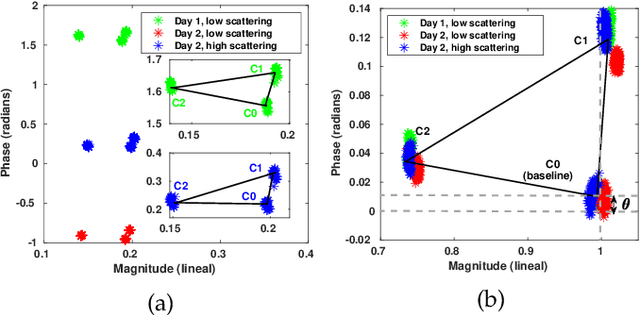

Abstract: Over-the-air analog computation allows computation to be offloaded to the wireless environment through carefully constructed transmitted signals. In this paper, we design and implement a first-of-its-kind over-the-air convolution and demonstrate it for inference tasks in a convolutional neural network (CNN). To design such an architecture, which we call 'AirNN', we engineer the ambient wireless propagation environment through reconfigurable intelligent surfaces (RIS). AirNN leverages the physics of wave reflection to represent a digital convolution, an essential part of a CNN architecture, in the analog domain. In contrast to classical communication, where the receiver must react to the channel-induced transformation, generally represented as a finite impulse response (FIR) filter, AirNN proactively creates signal reflections through RIS to emulate specific FIR filters. AirNN involves two steps. First, the weights of the neurons in the CNN are drawn from a finite set of channel impulse responses (CIRs) that correspond to realizable FIR filters. Second, each CIR is engineered through RIS, and the reflected signals combine at the receiver to determine the output of the convolution. This paper presents a proof-of-concept of AirNN by experimentally demonstrating over-the-air convolutions. We then validate the accuracy of the resulting CNN model via simulations for an example task, modulation classification.
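
The two AirNN steps can be illustrated with a short Python sketch. This is not the AirNN implementation: it assumes noise-free propagation, real-valued path gains, integer-sample delays, and a small hypothetical codebook `REALIZABLE_CIRS` standing in for the finite set of CIRs an RIS can realize. A trained kernel is snapped to its nearest realizable CIR (step one), each nonzero tap becomes one reflected path, and the superposition of the reflections at the receiver reproduces the digital convolution (step two). All function names are illustrative.

```python
def digital_conv(x, h):
    """Reference digital convolution: y[n] = sum_k h[k] * x[n - k] (full length)."""
    y = [0.0] * (len(x) + len(h) - 1)
    for n in range(len(y)):
        for k, hk in enumerate(h):
            if 0 <= n - k < len(x):
                y[n] += hk * x[n - k]
    return y


def cir_to_paths(h):
    """Map each nonzero CIR tap to one reflected path: (delay in samples, path gain)."""
    return [(k, g) for k, g in enumerate(h) if g != 0]


def over_the_air(x, paths, n_taps):
    """Idealized receiver: delayed, scaled reflections of x simply add up.

    Assumes no noise, integer-sample delays (< n_taps), and real-valued gains.
    """
    y = [0.0] * (len(x) + n_taps - 1)
    for delay, gain in paths:
        for i, xi in enumerate(x):
            y[i + delay] += gain * xi
    return y


def nearest_realizable(kernel, codebook):
    """Snap a trained kernel to the closest realizable CIR (squared Euclidean distance)."""
    return min(codebook, key=lambda c: sum((a - b) ** 2 for a, b in zip(kernel, c)))


# Hypothetical set of CIRs that the RIS is assumed to be able to produce.
REALIZABLE_CIRS = [
    [1.0, 0.5, 0.25],
    [0.5, 1.0, 0.5],
    [1.0, -1.0, 0.0],
    [0.25, 0.5, 1.0],
]

if __name__ == "__main__":
    h = nearest_realizable([0.9, -0.8, 0.1], REALIZABLE_CIRS)
    x = [1.0, 2.0, 3.0]
    print(h)                                         # [1.0, -1.0, 0.0]
    print(over_the_air(x, cir_to_paths(h), len(h)))  # [1.0, 1.0, 1.0, -3.0, 0.0]
    print(digital_conv(x, h))                        # [1.0, 1.0, 1.0, -3.0, 0.0]
```

Restricting kernels to a finite codebook trades some model expressiveness for weights the propagation environment can physically realize, which is why the snapping step precedes the over-the-air computation.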
