Danijela Cabric

Millimeter-Wave True-Time Delay Array Beamforming with Robustness to Mobility

Dec 09, 2025

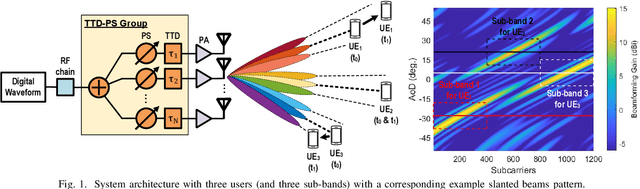

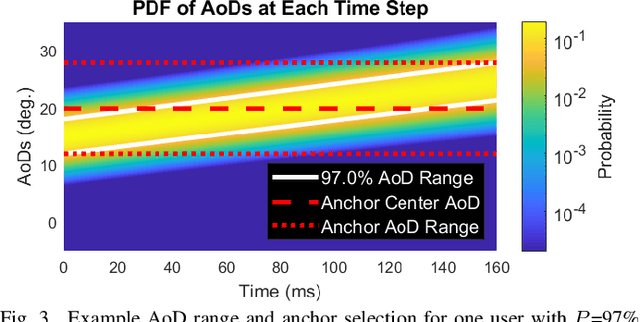

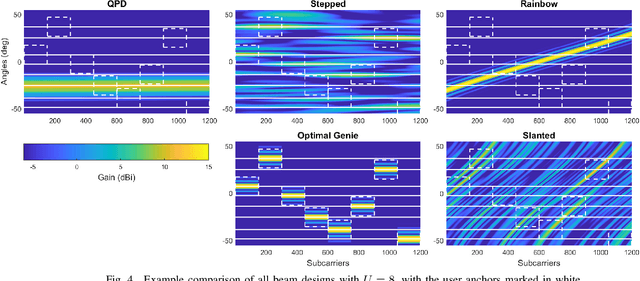

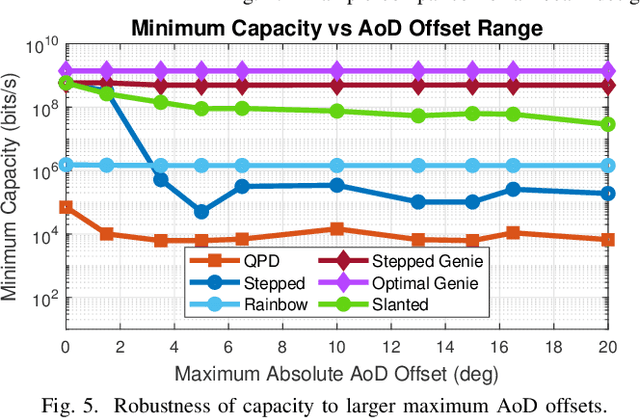

Abstract:Ultra-reliable and low-latency connectivity is required for real-time and latency-sensitive applications, like wireless augmented and virtual reality streaming. Millimeter-wave (mmW) networks have enabled extremely high data rates through large available bandwidths but struggle to maintain continuous connectivity with mobile users. Achieving the required beamforming gain from large antenna arrays with minimal disruption is particularly challenging with fast-moving users and practical analog mmW array architectures. In this work, we propose frequency-dependent slanted beams from true-time delay (TTD) analog arrays to achieve robust beamforming in wideband, multi-user downlink scenarios. Novel beams with linear angle-frequency relationships for different users and sub-bands provide a trade-off between instantaneous capacity and angular coverage. Compared to alternative analog array beamforming designs, slanted beams provide higher reliability to angle offsets and greater adaptability to varied user movement statistics.
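As background for the true-time delay (TTD) arrays mentioned in this abstract, the following is a minimal sketch of how a per-element time delay steers a uniform linear array across a wide band. It is not the slanted-beam design proposed in the paper. The function name `ttd_beam_gain`, the half-wavelength spacing, the 60 GHz carrier, and the 2 GHz bandwidth are all assumptions chosen for illustration.

```python
import numpy as np

SPEED_OF_LIGHT = 3.0e8  # m/s


def ttd_beam_gain(num_antennas, fc, freqs, delay_step, angles_deg):
    """Normalized gain of a uniform linear array with pure time delays.

    Element n applies a delay of n * delay_step (hypothetical model; no phase
    shifters, no delay quantization). Returns an array of shape
    (len(freqs), len(angles_deg)) with values in [0, 1].
    """
    spacing = SPEED_OF_LIGHT / (2.0 * fc)  # half wavelength at the carrier
    n = np.arange(num_antennas)
    sin_theta = np.sin(np.deg2rad(angles_deg))
    # Per-element propagation delay minus the applied TTD delay, per angle.
    residual = spacing * sin_theta / SPEED_OF_LIGHT - delay_step
    phase = (2.0 * np.pi * freqs[:, None, None]
             * residual[None, :, None] * n[None, None, :])
    return np.abs(np.exp(1j * phase).sum(axis=-1)) ** 2 / num_antennas ** 2


# Assumed example values: 16 elements, 60 GHz carrier, 2 GHz bandwidth.
fc, bandwidth = 60e9, 2e9
freqs = fc + np.linspace(-bandwidth / 2, bandwidth / 2, 9)
angles_deg = np.linspace(-90.0, 90.0, 361)
steer_deg = 20.0
delay_step = np.sin(np.deg2rad(steer_deg)) / (2.0 * fc)

gain = ttd_beam_gain(16, fc, freqs, delay_step, angles_deg)
peak_angles = angles_deg[np.argmax(gain, axis=1)]
```

With the delay matched to the steering angle, the beam peak stays at the same angle on every subcarrier. Frequency-dependent beams such as those described in the abstract need additional design freedom (for example, phase shifts combined with larger delays), which this sketch does not model.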

Structured Two-Stage True-Time-Delay Array Codebook Design for Multi-User Data Communication

Nov 15, 2023

Abstract:Wideband millimeter-wave and terahertz (THz) systems can facilitate simultaneous data communication with multiple spatially separated users. It is desirable to orthogonalize users across sub-bands by deploying frequency-dependent beams with a sub-band-specific spatial response. True-Time-Delay (TTD) antenna arrays are a promising wideband architecture to implement sub-band-specific dispersion of beams across space using a single radio frequency (RF) chain. This paper proposes a structured design of analog TTD codebooks to generate beams that exhibit quantized sub-band-to-angle mapping. We introduce a structured Staircase TTD codebook and analyze the frequency-spatial behaviour of the resulting beam patterns. We develop the closed-form two-stage design of the proposed codebook to achieve the desired sub-band-specific beams and evaluate their performance in multi-user communication networks.

A Coordinate Descent Approach to Atomic Norm Minimization

Oct 22, 2023

Abstract:Atomic norm minimization is of great interest in various applications of sparse signal processing, including super-resolution line-spectral estimation and signal denoising. In practice, atomic norm minimization (ANM) is formulated as a semidefinite program (SDP), which is generally hard to solve. This work introduces a low-complexity, matrix-free method for solving ANM. The method uses the framework of coordinate descent and exploits the sparsity-inducing nature of atomic-norm regularization. Specifically, an equivalent, non-convex formulation of ANM is first proposed. It is then proved that applying the coordinate descent framework to the non-convex formulation leads to convergence to the global optimal point. For the case of a single measurement vector of length N in the discrete Fourier transform (DFT) basis, the complexity of each iteration in the coordinate descent procedure is O(N log N), rendering the proposed method efficient even for large-scale problems. The proposed coordinate descent framework can be readily modified to solve a variety of ANM problems, including multi-dimensional ANM with multiple measurement vectors. It is easy to implement and can essentially be applied to any atomic set as long as a corresponding rank-1 problem can be solved. Through extensive numerical simulations, it is verified that for solving sparse problems the proposed method is much faster than the alternating direction method of multipliers (ADMM) or the customized interior point SDP solver.
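For readers unfamiliar with the SDP mentioned in this abstract, the block below recalls the atomic norm for line-spectral atoms and its commonly used semidefinite characterization. The notation (atoms $a(f)$ with unit-modulus entries, Hermitian Toeplitz matrix $T(u)$ with first column $u$) is an assumption taken from the standard line-spectral estimation literature, and the paper's own notation and its non-convex reformulation may differ.

```latex
\|x\|_{\mathcal{A}}
  = \inf\Big\{ \sum_k c_k \;:\; x = \sum_k c_k e^{j\phi_k} a(f_k),\ c_k \ge 0 \Big\},
\qquad
a(f) = \big[1,\ e^{j2\pi f},\ \dots,\ e^{j2\pi (N-1) f}\big]^{\mathsf{T}}

\|x\|_{\mathcal{A}}
  = \min_{u,\,t}\ \frac{1}{2N}\operatorname{tr}\big(T(u)\big) + \frac{t}{2}
\quad \text{s.t.} \quad
\begin{bmatrix} T(u) & x \\ x^{\mathsf{H}} & t \end{bmatrix} \succeq 0
```

The positive semidefinite constraint involves an $(N+1) \times (N+1)$ matrix, which is the part that becomes expensive for generic SDP solvers as $N$ grows.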

Deep Learning Based Active Spatial Channel Gain Prediction Using a Swarm of Unmanned Aerial Vehicles

Oct 06, 2023

Abstract:Prediction of wireless channel gain (CG) across space is a necessary tool for many important wireless network design problems. In this paper, we develop prediction methods that use environment-specific features, namely building maps and CG measurements, to achieve high prediction accuracy. We assume that measurements are collected using a swarm of coordinated unmanned aerial vehicles (UAVs). We develop novel active prediction approaches which consist of both methods for UAV path planning for optimal measurement collection and methods for prediction of CG across space based on the collected measurements. We propose two active prediction approaches based on deep learning (DL) and Kriging interpolation. The first approach does not rely on the location of the transmitter and utilizes 3D maps to compensate for the lack of it. We utilize DL to incorporate 3D maps into prediction and reinforcement learning for optimal path planning for the UAVs based on DL prediction. The second active prediction approach is based on Kriging interpolation, which requires known transmitter location and cannot utilize 3D maps. We train and evaluate the two proposed approaches in a ray-tracing-based channel simulator. Using simulations, we demonstrate the importance of active prediction compared to prediction based on randomly collected measurements of channel gain. Furthermore, we show that using DL and 3D maps, we can achieve high prediction accuracy even without knowing the transmitter location. We also demonstrate the importance of coordinated path planning for active prediction when using multiple UAVs compared to UAVs collecting measurements independently in a greedy manner.
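To make the Kriging interpolation mentioned in this abstract concrete, here is a minimal sketch of textbook ordinary kriging applied to scattered channel gain samples. It is not the authors' pipeline: it works on raw values without any transmitter-location-based detrending, and the exponential variogram, its parameters, and the sample coordinates and gains are all made-up assumptions.

```python
import numpy as np


def exponential_variogram(h, sill=1.0, corr_range=50.0):
    """Assumed variogram model; sill and corr_range are illustrative values."""
    return sill * (1.0 - np.exp(-h / corr_range))


def ordinary_kriging(locations, values, query, variogram=exponential_variogram):
    """Predict the value at `query` from scattered samples.

    Solves the ordinary kriging system [[G, 1], [1^T, 0]] [w; mu] = [g0; 1],
    where G holds variogram values between samples and g0 between samples
    and the query point. Returns (prediction, weights).
    """
    locations = np.asarray(locations, dtype=float)
    values = np.asarray(values, dtype=float)
    query = np.asarray(query, dtype=float)
    m = len(values)

    pairwise = np.linalg.norm(locations[:, None, :] - locations[None, :, :], axis=-1)
    to_query = np.linalg.norm(locations - query, axis=-1)

    system = np.ones((m + 1, m + 1))
    system[:m, :m] = variogram(pairwise)
    system[m, m] = 0.0
    rhs = np.append(variogram(to_query), 1.0)

    weights = np.linalg.solve(system, rhs)[:m]  # drop the Lagrange multiplier
    return float(weights @ values), weights


# Hypothetical measurement positions (meters) and channel gains (dB).
sample_xy = [[0, 0], [100, 0], [0, 100], [100, 100], [50, 40]]
sample_gain_db = [-80.0, -95.0, -90.0, -105.0, -88.0]

predicted_db, kriging_weights = ordinary_kriging(sample_xy, sample_gain_db, [30, 60])
```

The weights sum to one by construction, and without a nugget term the predictor reproduces the measured value at any sampled location.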

REM-U-net: Deep Learning Based Agile REM Prediction with Energy-Efficient Cell-Free Use Case

Sep 21, 2023

Abstract:Radio environment maps (REMs) hold a central role in optimizing wireless network deployment, enhancing network performance, and ensuring effective spectrum management. Conventional REM prediction methods are either excessively time-consuming, e.g., ray tracing, or inaccurate, e.g., statistical models, limiting their adoption in modern inherently dynamic wireless networks. Deep-learning-based REM prediction has recently attracted considerable attention as an appealing, accurate, and time-efficient alternative. However, existing works on REM prediction using deep learning are either confined to 2D maps or use a limited dataset. In this paper, we introduce a runtime-efficient REM prediction framework based on u-nets, trained on a large-scale 3D maps dataset. In addition, data preprocessing steps are investigated to further refine the REM prediction accuracy. The proposed u-net framework, along with the preprocessing steps, is evaluated in the context of the 2023 IEEE ICASSP Signal Processing Grand Challenge, namely, the First Pathloss Radio Map Prediction Challenge. The evaluation results demonstrate that the proposed method achieves an average normalized root-mean-square error (RMSE) of 0.045 with an average runtime of 14 milliseconds (ms). Finally, we position our achieved REM prediction accuracy in the context of a relevant cell-free massive multiple-input multiple-output (CF-mMIMO) use case. We demonstrate that one can avoid spending energy on large-scale fading measurements and rely on the predicted REM instead to decide which sleep access points (APs) to switch on in a CF-mMIMO network that adopts a minimum propagation loss AP switch ON/OFF strategy.

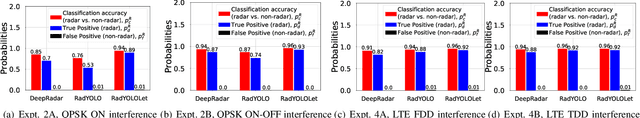

RadYOLOLet: Radar Detection and Parameter Estimation Using YOLO and WaveLet

Sep 21, 2023

Abstract:Detection of radar signals without assistance from the radar transmitter is a crucial requirement for emerging and future shared-spectrum wireless networks like Citizens Broadband Radio Service (CBRS). In this paper, we propose a supervised deep learning-based spectrum sensing approach called RadYOLOLet that can detect low-power radar signals in the presence of interference and estimate the radar signal parameters. The core of RadYOLOLet consists of two different convolutional neural networks (CNNs), RadYOLO and Wavelet-CNN, that are trained independently. RadYOLO operates on spectrograms and provides most of the capabilities of RadYOLOLet. However, it suffers from low radar detection accuracy in the low signal-to-noise ratio (SNR) regime. We develop Wavelet-CNN specifically to deal with this limitation of RadYOLO. Wavelet-CNN operates on the continuous wavelet transform of the captured signals, and we use it only when RadYOLO fails to detect any radar signal. We thoroughly evaluate RadYOLOLet using different experiments corresponding to different types of interference signals. Based on our evaluations, we find that RadYOLOLet can achieve 100% radar detection accuracy for our considered radar types up to 16 dB SNR, which cannot be guaranteed by other comparable methods. RadYOLOLet can also function accurately under interference up to 16 dB SINR.

ProSpire: Proactive Spatial Prediction of Radio Environment Using Deep Learning

Aug 20, 2023

Abstract:Spatial prediction of the radio propagation environment of a transmitter can assist and improve various aspects of wireless networks. The majority of research in this domain can be categorized as 'reactive' spatial prediction, where the predictions are made based on a small set of measurements from an active transmitter whose radio environment is to be predicted. Emerging spectrum-sharing paradigms would benefit from 'proactive' spatial prediction of the radio environment, where the spatial predictions must be done for a transmitter for which no measurement has been collected. This paper proposes a novel, supervised deep learning-based framework, ProSpire, that enables spectrum sharing by leveraging the idea of proactive spatial prediction. We carefully address several challenges in ProSpire, such as designing a framework that conveniently collects training data for learning, performing the predictions quickly, enabling operations without an area map, and ensuring that the predictions do not lead to undesired interference. ProSpire relies on the crowdsourcing of transmitters and receivers during their normal operations to address some of the aforementioned challenges. The core component of ProSpire is a deep learning-based image-to-image translation method, which we call RSSu-net. We generate several diverse datasets using ray tracing software and numerically evaluate ProSpire. Our evaluations show that RSSu-net performs reasonably well in terms of signal strength prediction, with a 5 dB mean absolute error, which is comparable to the average error of other relevant methods. Importantly, due to the merits of RSSu-net, ProSpire creates proactive boundaries around transmitters such that they can be activated with 97% probability of not causing interference. In this regard, the performance of RSSu-net is 19% better than that of other comparable methods.

GAN-RXA: A Practical Scalable Solution to Receiver-Agnostic Transmitter Fingerprinting

Mar 25, 2023

Abstract:Radio frequency fingerprinting has been proposed for device identification. However, experimental studies also demonstrated its sensitivity to deployment changes. Recent works have addressed channel impacts by developing robust algorithms accounting for time and location variability, but the impacts of receiver impairments on transmitter fingerprints are yet to be solved. In this work, we investigate the receiver-agnostic transmitter fingerprinting problem and propose a novel two-stage supervised learning framework (RXA) to address it. In the first stage, our approach calibrates a receiver-agnostic transmitter feature-extractor. We also propose two deep-learning approaches (SD-RXA and GAN-RXA) in this first stage to improve the receiver-agnostic property of the RXA framework. In the second stage, the calibrated feature-extractor is utilized to train a transmitter classifier with only one receiver. We evaluate the proposed approaches on the transmitter identification problem using a large-scale WiFi dataset. We show that when a trained transmitter-classifier is deployed on new receivers, the RXA framework can improve the classification accuracy by 19.5%, and the outlier detection rate by 10.0% compared to a naive approach without calibration. Moreover, GAN-RXA can further increase the closed-set classification accuracy by 5.0%, and the outlier detection rate by 7.5% compared to the RXA approach.

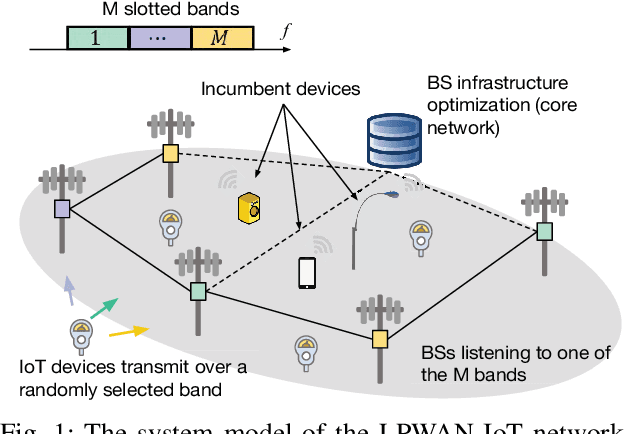

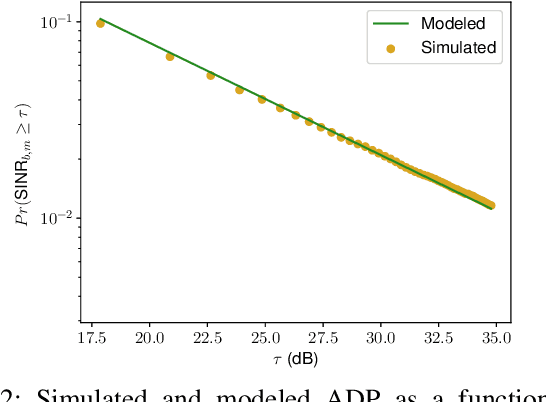

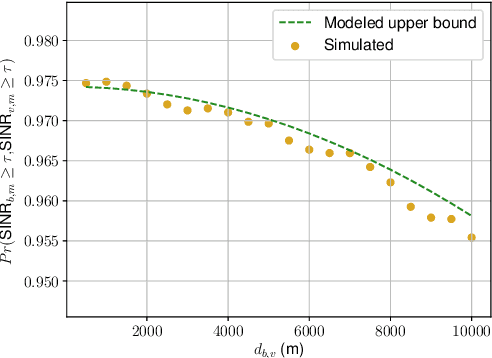

Multiband Massive IoT: A Learning Approach to Infrastructure Deployment

Jun 13, 2022

Abstract:We consider a novel ultra-narrowband (UNB) low-power wide-area network (LPWAN) architecture design for uplink transmission of a massive number of Internet of Things (IoT) devices over multiple multiplexing bands. An IoT device can randomly choose any of the multiplexing bands to transmit its packet. Due to hardware constraints, a base station (BS) is able to listen to only one multiplexing band. Our main objective is to maximize the packet decoding probability (PDP) by optimizing the placement of the BSs and frequency assignment of BSs to multiplexing bands. We develop two online approaches that adapt to the environment based on the statistics of (un)successful packets at the BSs. The first approach is based on a predefined model of the environment, while the second is a measurement-based, model-free approach that is applicable to any environment. The benefit of the model-based approach is a lower training complexity, at the risk of a poor fit in a model-incompatible environment. The simulation results show that our proposed approaches to band assignment and BS placement offer significant improvement in PDP over baseline random approaches and perform close to the theoretical upper bound.

Machine Learning Prediction for Phase-less Millimeter-Wave Beam Tracking

Jun 06, 2022

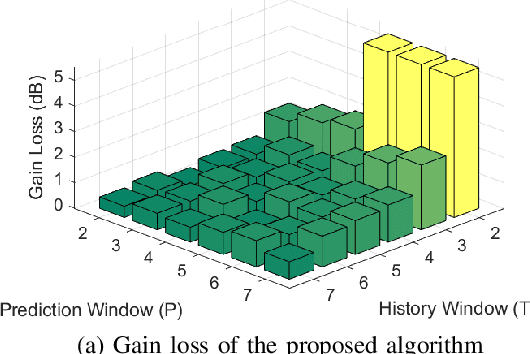

Abstract:Future wireless networks may operate at millimeter-wave (mmW) and sub-terahertz (sub-THz) frequencies to meet high data rate requirements. While large antenna arrays are critical for reliable communications at mmW and sub-THz bands, these antenna arrays would also mandate efficient and scalable initial beam alignment and link maintenance algorithms for mobile devices. Low-power phased-array architectures and phase-less power measurements due to high-frequency oscillator phase noise pose additional challenges for practical beam tracking algorithms. Traditional beam tracking protocols require exhaustive sweeps of all possible beam directions and scale poorly with high mobility and large arrays. Compressive sensing and machine learning designs have been proposed to improve measurement scaling with array size but commonly degrade under hardware impairments or require raw samples, respectively. In this work, we introduce a novel long short-term memory (LSTM) network assisted beam tracking and prediction algorithm utilizing only phase-less measurements from fixed compressive codebooks. We demonstrate comparable beam alignment accuracy to state-of-the-art phase-less beam alignment algorithms, while reducing the average number of required measurements over time.
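The phrase "phase-less measurements from fixed compressive codebooks" can be illustrated with a small sketch of the measurement model alone: a few pseudo-random phased-array beams probe the channel and only received power is kept. The codebook construction, the array size, the single-path channel, and the function names are assumptions for illustration, and the LSTM tracking stage described in the abstract is not modeled.

```python
import numpy as np


def random_phase_codebook(num_beams, num_antennas, rng):
    """Fixed codebook of unit-norm beams with pseudo-random element phases."""
    phases = rng.uniform(0.0, 2.0 * np.pi, size=(num_beams, num_antennas))
    return np.exp(1j * phases) / np.sqrt(num_antennas)


def phaseless_measurements(codebook, channel):
    """Received power |w_m^H h|^2 for every beam w_m (rows of `codebook`)."""
    return np.abs(codebook.conj() @ channel) ** 2


rng = np.random.default_rng(0)
num_antennas, num_beams = 32, 8  # 8 measurements instead of a full sweep

# Hypothetical single-path channel arriving from 25 degrees on a
# half-wavelength-spaced uniform linear array.
n = np.arange(num_antennas)
channel = np.exp(1j * np.pi * n * np.sin(np.deg2rad(25.0)))

codebook = random_phase_codebook(num_beams, num_antennas, rng)
power = phaseless_measurements(codebook, channel)

# A common phase rotation (e.g., oscillator phase drift) leaves power unchanged.
power_rotated = phaseless_measurements(codebook, channel * np.exp(1j * 1.234))
```

Because only magnitudes are retained, any common phase rotation of the channel is invisible to the measurements, which is why such measurements tolerate the oscillator phase noise the abstract mentions.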
