Benjamin W. Domae

Millimeter-Wave True-Time Delay Array Beamforming with Robustness to Mobility

Dec 09, 2025

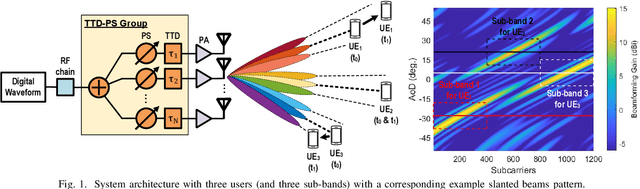

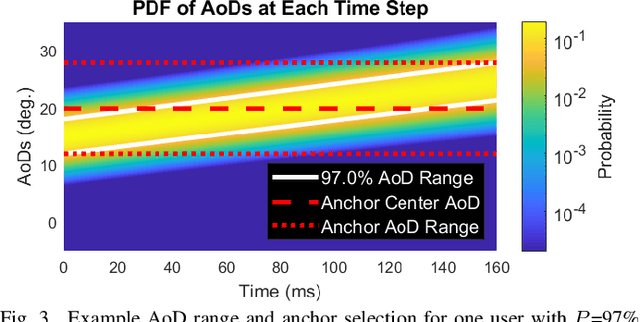

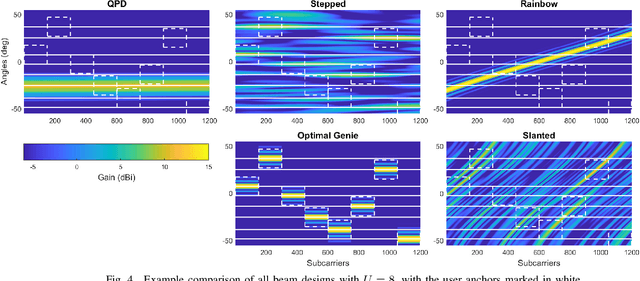

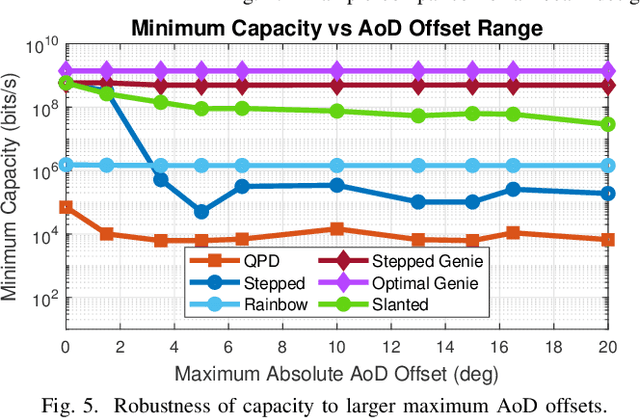

Abstract: Ultra-reliable and low-latency connectivity is required for real-time and latency-sensitive applications, like wireless augmented and virtual reality streaming. Millimeter-wave (mmW) networks have enabled extremely high data rates through large available bandwidths but struggle to maintain continuous connectivity with mobile users. Achieving the required beamforming gain from large antenna arrays with minimal disruption is particularly challenging with fast-moving users and practical analog mmW array architectures. In this work, we propose frequency-dependent slanted beams from true-time delay (TTD) analog arrays to achieve robust beamforming in wideband, multi-user downlink scenarios. Novel beams with linear angle-frequency relationships for different users and sub-bands provide a trade-off between instantaneous capacity and angular coverage. Compared to alternative analog array beamforming designs, slanted beams provide higher reliability to angle offsets and greater adaptability to varied user movement statistics.
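To make the idea of a frequency-dependent beam concrete, the following is a minimal sketch, not the paper's design. It assumes a uniform linear array with half-wavelength spacing at the carrier, where element `n` applies a delay `n * tau` and a phase shift `n * phi`. Under that assumption the beam peak satisfies `sin(theta) = 2 * fc * tau + (fc / f) * phi / pi`, so a pure delay gives a frequency-flat beam and adding a phase term tilts the beam across the band. The function names and the example parameters are hypothetical.

```python
import cmath
import math

C = 299_792_458.0  # speed of light in m/s


def slanted_beam_settings(fc, f_lo, f_hi, theta_lo, theta_hi):
    """Return (tau, phi) so the beam peaks at theta_lo on f_lo and theta_hi on f_hi.

    Assumes half-wavelength element spacing at carrier fc and ignores
    grating lobes; requires f_lo != f_hi.
    """
    s_lo, s_hi = math.sin(theta_lo), math.sin(theta_hi)
    phi = math.pi * (s_lo - s_hi) / (fc * (1.0 / f_lo - 1.0 / f_hi))
    tau = (s_lo - (fc / f_lo) * phi / math.pi) / (2.0 * fc)
    return tau, phi


def array_gain(n_elem, fc, f, theta, tau, phi):
    """Magnitude of the combined array response at frequency f and angle theta."""
    d = C / (2.0 * fc)  # element spacing (assumed half wavelength at fc)
    total = 0j
    for n in range(n_elem):
        propagation = 2.0 * math.pi * f * n * d * math.sin(theta) / C
        compensation = 2.0 * math.pi * f * n * tau + n * phi
        total += cmath.exp(1j * (propagation - compensation))
    return abs(total)


if __name__ == "__main__":
    fc, f_lo, f_hi = 60e9, 59e9, 61e9  # hypothetical band
    lo, hi = math.radians(-10), math.radians(10)
    tau, phi = slanted_beam_settings(fc, f_lo, f_hi, lo, hi)
    for f, th in [(f_lo, lo), (f_hi, hi), (f_lo, hi)]:
        print(f / 1e9, math.degrees(th), array_gain(16, fc, f, th, tau, phi))
```

In this sketch the beam direction is linear in `1/f`, which is only approximately linear in frequency over a narrow band; the paper's slanted beams may be constructed differently.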

Machine Learning Prediction for Phase-less Millimeter-Wave Beam Tracking

Jun 06, 2022

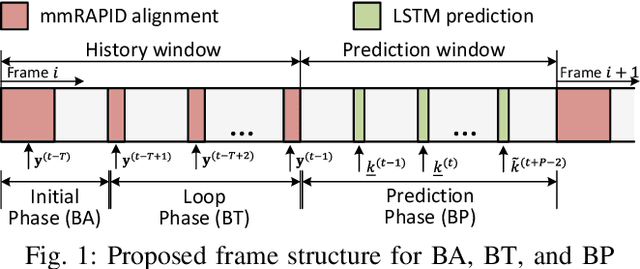

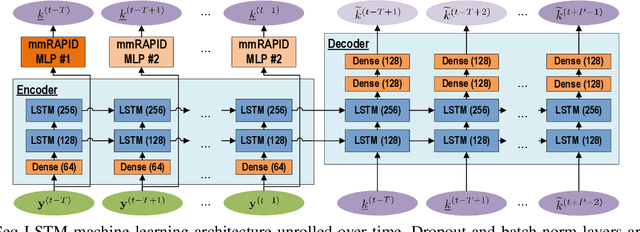

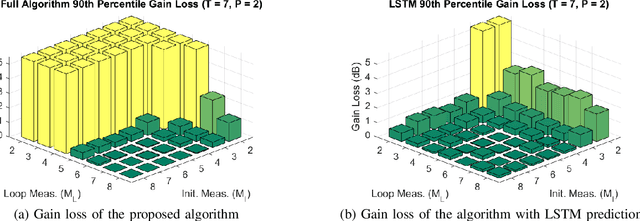

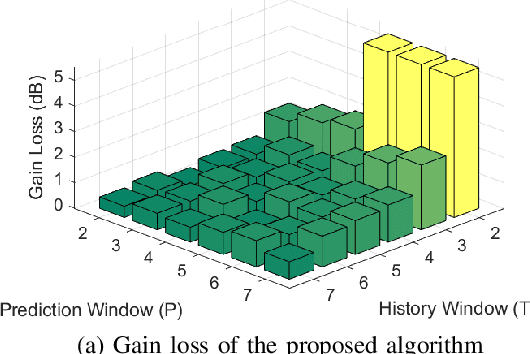

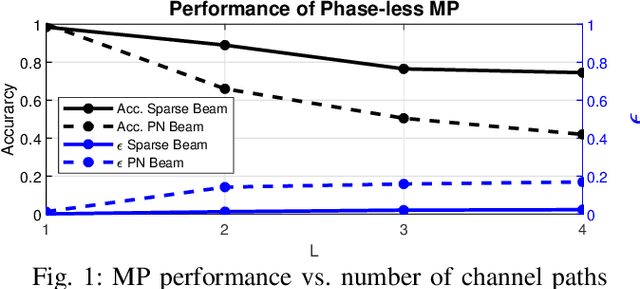

Abstract: Future wireless networks may operate at millimeter-wave (mmW) and sub-terahertz (sub-THz) frequencies to meet high data rate requirements. While large antenna arrays are critical for reliable communications at mmW and sub-THz bands, these antenna arrays would also mandate efficient and scalable initial beam alignment and link maintenance algorithms for mobile devices. Low-power phased-array architectures and phase-less power measurements due to high frequency oscillator phase noise pose additional challenges for practical beam tracking algorithms. Traditional beam tracking protocols require exhaustive sweeps of all possible beam directions and scale poorly with high mobility and large arrays. Compressive sensing and machine learning designs have been proposed to improve measurement scaling with array size but commonly degrade under hardware impairments or require raw samples, respectively. In this work, we introduce a novel long short-term memory (LSTM) network assisted beam tracking and prediction algorithm utilizing only phase-less measurements from fixed compressive codebooks. We demonstrate comparable beam alignment accuracy to state-of-the-art phase-less beam alignment algorithms, while reducing the average number of required measurements over time.
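As a rough illustration of what "phase-less measurements from a fixed codebook" means, the sketch below computes received power for a set of pseudo-random phase-only sounding beams. It is not the paper's codebook or its LSTM tracker; the half-wavelength uniform linear array, the single-path channel, and all function names are assumptions made for the example.

```python
import cmath
import math
import random


def steering_vector(n_elem, theta):
    """Single-path channel for a half-wavelength uniform linear array (assumed)."""
    return [cmath.exp(1j * math.pi * n * math.sin(theta)) for n in range(n_elem)]


def random_phase_codebook(n_beams, n_elem, seed=0):
    """Fixed codebook of unit-norm, phase-only sounding beams (hypothetical design)."""
    rng = random.Random(seed)  # fixed seed so the codebook is reproducible
    scale = 1.0 / math.sqrt(n_elem)
    return [
        [scale * cmath.exp(1j * rng.uniform(0.0, 2.0 * math.pi)) for _ in range(n_elem)]
        for _ in range(n_beams)
    ]


def power_measurements(codebook, channel):
    """Received power |w^H h|^2 per beam; the phase of w^H h is discarded."""
    return [
        abs(sum(w.conjugate() * h for w, h in zip(beam, channel))) ** 2
        for beam in codebook
    ]


if __name__ == "__main__":
    codebook = random_phase_codebook(n_beams=8, n_elem=36)
    channel = steering_vector(36, math.radians(20))
    print(power_measurements(codebook, channel))
```

Because only the magnitude is kept, a common phase rotation of the channel, such as one caused by oscillator phase noise, leaves these measurements unchanged.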

Energy Efficiency Tradeoffs for Sub-THz Multi-User MIMO Base Station Receivers

Feb 16, 2022

Abstract: Sub-terahertz (sub-THz) antenna array architectures significantly impact power usage and communications capacity in multi-user multiple-input multiple-output (MU-MIMO) systems. In this work, we compare the energy efficiency and spectral efficiency of three MU-MIMO capable array architectures for base station receivers. We provide a sub-THz circuits power analysis, based on our review of state-of-the-art D-band and G-band components, and compare communications capabilities through wideband simulations. Our analysis reveals that digital arrays can provide the highest spectral efficiency and energy efficiency, due to the high power consumption of sub-THz active phase shifters or when SNR and system spectral efficiency requirements are high.
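The relationship between the two metrics compared above can be sketched with the common definition of energy efficiency as delivered bits per joule, that is, throughput divided by consumed power. The abstract does not state which definition the paper uses, so this is an assumption, and the numbers in the usage line are placeholders rather than results from the paper.

```python
def energy_efficiency_bits_per_joule(spectral_eff_bps_per_hz, bandwidth_hz, power_w):
    """Throughput (spectral efficiency times bandwidth) divided by power draw."""
    if power_w <= 0:
        raise ValueError("power_w must be positive")
    return spectral_eff_bps_per_hz * bandwidth_hz / power_w


if __name__ == "__main__":
    # Placeholder values only: 4 bit/s/Hz over 1 GHz at 2 W.
    print(energy_efficiency_bits_per_joule(4.0, 1e9, 2.0))
```

Under this definition, an architecture with a higher power draw can still come out ahead if its spectral efficiency gain is proportionally larger.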

Machine Learning Assisted Phase-less Millimeter-Wave Beam Alignment in Multipath Channels

Sep 29, 2021

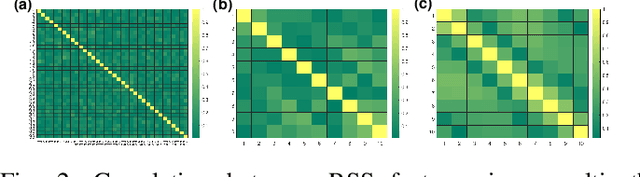

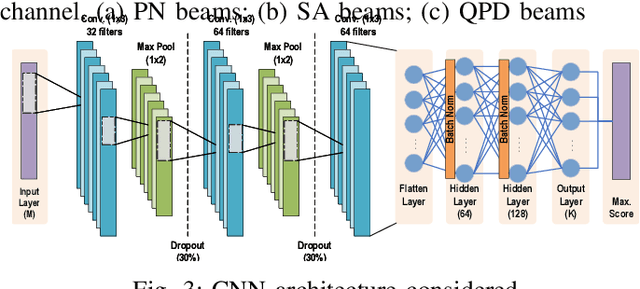

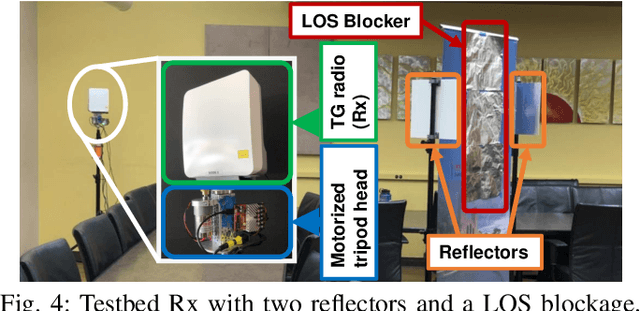

Abstract: Communication systems at millimeter-wave (mmW) and sub-terahertz frequencies are of increasing interest for future high-data-rate networks. One critical challenge faced by phased array systems at these high frequencies is the efficiency of the initial beam alignment, typically using only phase-less power measurements due to high frequency oscillator phase noise. Traditional methods for beam alignment require exhaustive sweeps of all possible beam directions, and thus scale communications overhead linearly with antenna array size. For better scaling with the large arrays required at high mmW bands, compressive sensing methods have been proposed, as their overhead scales logarithmically with the array size. However, algorithms utilizing machine learning have shown more efficient and more accurate alignment when using real hardware due to array impairments. Additionally, few existing phase-less beam alignment algorithms have been tested over varied secondary path strength in multipath channels. In this work, we introduce a novel, machine learning based algorithm for beam alignment in multipath environments using only phase-less received power measurements. We consider the impacts of phased array sounding beam design and machine learning architectures on beam alignment performance and validate our findings experimentally using 60 GHz radios with 36-element phased arrays. Using experimental data in multipath channels, our proposed algorithm demonstrates an 88% reduction in beam alignment overhead compared to an exhaustive search and at least a 62% reduction in overhead compared to existing compressive methods.
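The scaling contrast described above, linear overhead for exhaustive sweeps versus logarithmic overhead for compressive methods, can be sketched as follows. The one-beam-per-element count and the `scale` constant are illustrative assumptions, not measurement counts taken from the paper.

```python
import math


def exhaustive_overhead(n_elem):
    """Measurements for an exhaustive sweep, assuming one beam per array element."""
    return n_elem


def compressive_overhead(n_elem, scale=1.0):
    """Measurements growing as scale * log2(n_elem); `scale` is a hypothetical constant."""
    return math.ceil(scale * math.log2(n_elem))


def relative_reduction(baseline, proposed):
    """Fraction of baseline measurements saved by the proposed scheme."""
    return 1.0 - proposed / baseline


if __name__ == "__main__":
    for n in (16, 36, 64, 256):
        ex, cs = exhaustive_overhead(n), compressive_overhead(n, scale=2.0)
        print(n, ex, cs, round(relative_reduction(ex, cs), 3))
```

The gap between the two counts widens as the array grows, which is why sub-linear schemes matter most for the large arrays used at high mmW bands.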
