Ruifu Li

A Coordinate Descent Approach to Atomic Norm Minimization

Oct 22, 2023

Abstract: Atomic norm minimization is of great interest in various applications of sparse signal processing, including super-resolution line-spectral estimation and signal denoising. In practice, atomic norm minimization (ANM) is formulated as a semidefinite program (SDP), which is generally hard to solve. This work introduces a low-complexity, matrix-free method for solving ANM. The method uses the framework of coordinate descent and exploits the sparsity-inducing nature of atomic-norm regularization. Specifically, an equivalent, non-convex formulation of ANM is first proposed. It is then proved that applying the coordinate descent framework to the non-convex formulation leads to convergence to the global optimum. For the case of a single measurement vector of length N in the discrete Fourier transform (DFT) basis, the complexity of each iteration in the coordinate descent procedure is O(N log N), rendering the proposed method efficient even for large-scale problems. The proposed coordinate descent framework can be readily modified to solve a variety of ANM problems, including multi-dimensional ANM with multiple measurement vectors. It is easy to implement and can essentially be applied to any atomic set as long as a corresponding rank-1 problem can be solved. Through extensive numerical simulations, it is verified that for solving sparse problems the proposed method is much faster than the alternating direction method of multipliers (ADMM) or the customized interior point SDP solver.
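To give a feel for the coordinate descent idea mentioned in the abstract, the sketch below applies cyclic coordinate descent to a simpler, on-grid stand-in: l1-regularized least squares over a fixed grid of unit-norm complex sinusoids. This is not the paper's non-convex ANM formulation and does not reach its O(N log N) per-iteration cost; the function names (`dft_atom`, `soft_threshold`, `coordinate_descent`), the grid, and the regularization weight `lam` are all assumptions made for illustration.

```python
import cmath
import math


def dft_atom(k, n_samples, grid_size):
    """Unit-norm complex sinusoid at grid frequency k / grid_size (hypothetical helper)."""
    scale = 1.0 / math.sqrt(n_samples)
    return [scale * cmath.exp(2j * math.pi * k * n / grid_size)
            for n in range(n_samples)]


def soft_threshold(z, lam):
    """Complex soft-thresholding: shrink the magnitude by lam, keep the phase."""
    mag = abs(z)
    if mag <= lam:
        return 0j
    return z * (1.0 - lam / mag)


def coordinate_descent(y, grid_size, lam, sweeps=50):
    """Cyclic coordinate descent for 0.5*||y - A c||^2 + lam*||c||_1.

    The columns of A are the grid atoms. Assumed on-grid model, used here
    only as a stand-in for the gridless ANM problem.
    """
    n_samples = len(y)
    atoms = [dft_atom(k, n_samples, grid_size) for k in range(grid_size)]
    coeffs = [0j] * grid_size
    residual = list(y)
    for _ in range(sweeps):
        for k, atom in enumerate(atoms):
            # Correlate the atom with the residual, adding back atom k's own
            # current contribution (atoms have unit norm).
            corr = sum(a.conjugate() * r for a, r in zip(atom, residual)) + coeffs[k]
            new_c = soft_threshold(corr, lam)
            delta = new_c - coeffs[k]
            if delta != 0:
                # Keep the residual consistent with the updated coefficient.
                residual = [r - delta * a for r, a in zip(residual, atom)]
                coeffs[k] = new_c
    return coeffs


# Usage: two on-grid sinusoids observed through 16 samples, 32-point grid.
a6, a20 = dft_atom(6, 16, 32), dft_atom(20, 16, 32)
y = [2.0 * u + 1.5j * v for u, v in zip(a6, a20)]
c = coordinate_descent(y, grid_size=32, lam=0.1, sweeps=200)
strongest = sorted(range(32), key=lambda k: -abs(c[k]))[:2]
print(sorted(strongest))
```

Each coordinate update has a closed form (a one-dimensional soft-threshold), which is what makes the scheme matrix-free; the per-sweep cost here is proportional to the number of samples times the grid size because the correlations are computed directly rather than with an FFT.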

Machine Learning Prediction for Phase-less Millimeter-Wave Beam Tracking

Jun 06, 2022

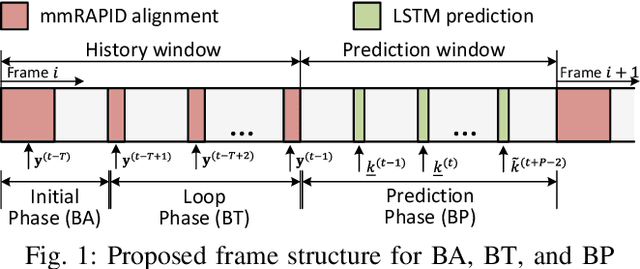

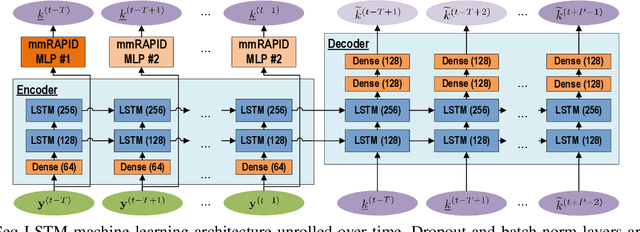

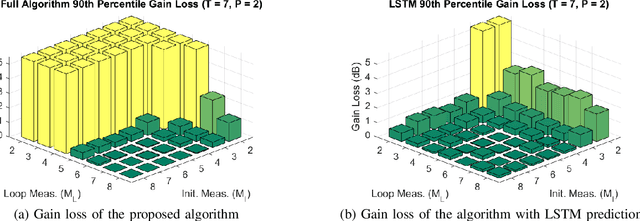

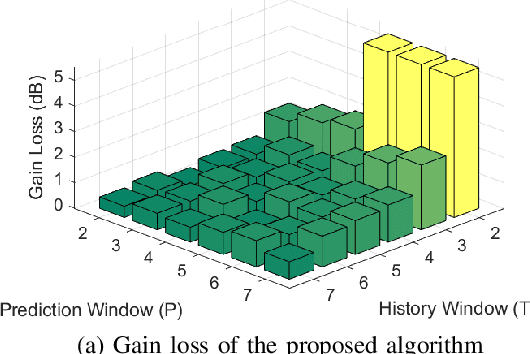

Abstract: Future wireless networks may operate at millimeter-wave (mmW) and sub-terahertz (sub-THz) frequencies to meet high data rate requirements. While large antenna arrays are critical for reliable communications at mmW and sub-THz bands, these antenna arrays would also mandate efficient and scalable initial beam alignment and link maintenance algorithms for mobile devices. Low-power phased-array architectures and phase-less power measurements due to high-frequency oscillator phase noise pose additional challenges for practical beam tracking algorithms. Traditional beam tracking protocols require exhaustive sweeps of all possible beam directions and scale poorly with high mobility and large arrays. Compressive sensing and machine learning designs have been proposed to improve measurement scaling with array size, but they commonly degrade under hardware impairments or require raw samples, respectively. In this work, we introduce a novel long short-term memory (LSTM) network assisted beam tracking and prediction algorithm utilizing only phase-less measurements from fixed compressive codebooks. We demonstrate comparable beam alignment accuracy to state-of-the-art phase-less beam alignment algorithms, while reducing the average number of required measurements over time.

Machine Learning Assisted Phase-less Millimeter-Wave Beam Alignment in Multipath Channels

Sep 29, 2021

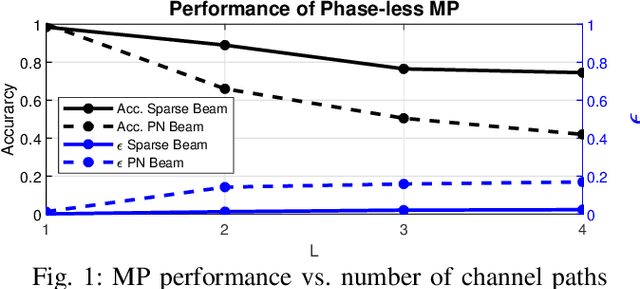

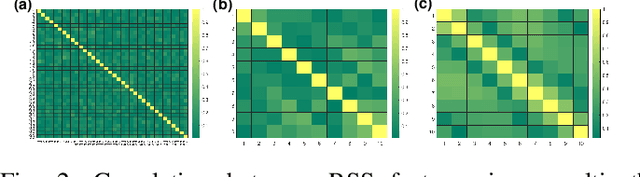

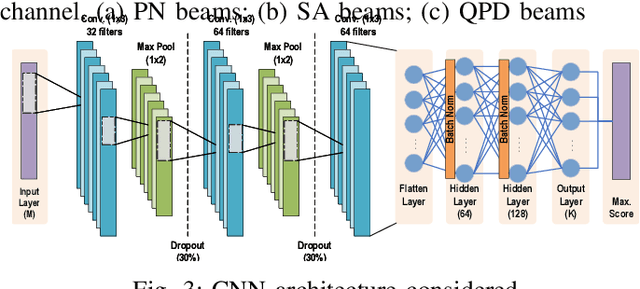

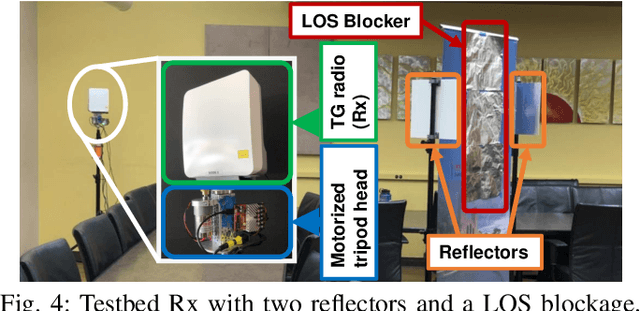

Abstract: Communication systems at millimeter-wave (mmW) and sub-terahertz frequencies are of increasing interest for future high-data-rate networks. One critical challenge faced by phased array systems at these high frequencies is the efficiency of the initial beam alignment, typically using only phase-less power measurements due to high-frequency oscillator phase noise. Traditional methods for beam alignment require exhaustive sweeps of all possible beam directions, and thus their communication overhead scales linearly with antenna array size. For better scaling with the large arrays required at high mmW bands, compressive sensing methods have been proposed, as their overhead scales logarithmically with the array size. However, algorithms utilizing machine learning have shown more efficient and more accurate alignment when using real hardware due to array impairments. Additionally, few existing phase-less beam alignment algorithms have been tested over varied secondary path strength in multipath channels. In this work, we introduce a novel, machine learning based algorithm for beam alignment in multipath environments using only phase-less received power measurements. We consider the impacts of phased array sounding beam design and machine learning architectures on beam alignment performance and validate our findings experimentally using 60 GHz radios with 36-element phased arrays. Using experimental data in multipath channels, our proposed algorithm demonstrates an 88% reduction in beam alignment overhead compared to an exhaustive search and at least a 62% reduction in overhead compared to existing compressive methods.

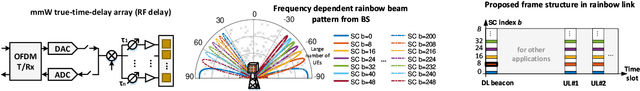

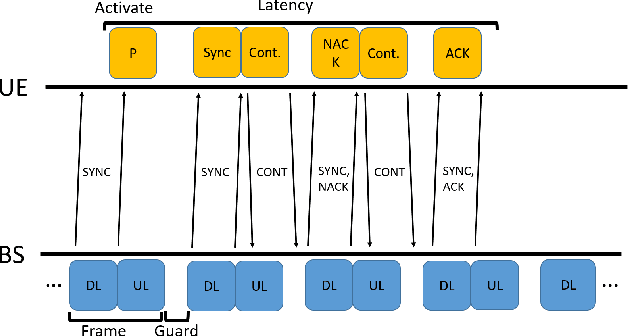

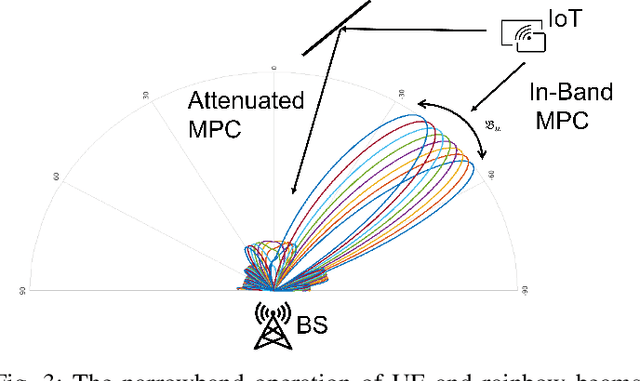

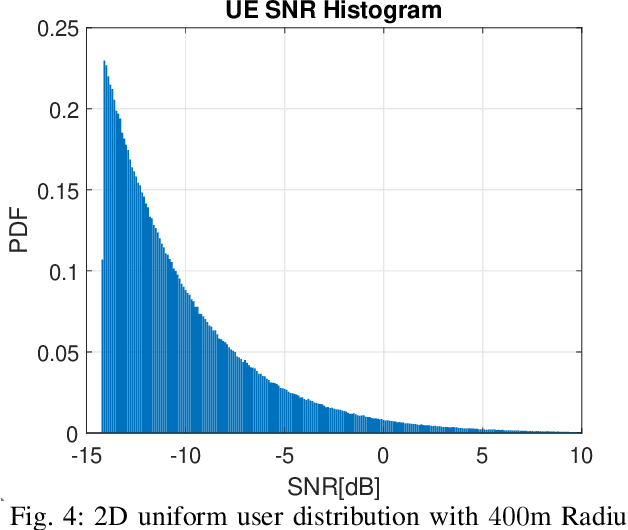

Rainbow-link: Beam-Alignment-Free and Grant-Free mmW Multiple Access using True-Time-Delay Array

Aug 21, 2021

Abstract: In this paper, we propose a novel millimeter wave (mmW) multiple access method that exploits the unique frequency-dependent beamforming capabilities of the True Time Delay (TTD) array architecture. The proposed protocol combines a contention-based grant-free access and orthogonal frequency-division multiple access (OFDMA) scheme for uplink machine type communications. By exploiting abundant time-frequency resource blocks in the mmW spectrum, we design a simple protocol that can achieve a low collision rate and high network reliability for short packets and sporadic transmissions. We analyze the impact of various system parameters on system performance during the synchronization and contention periods. We exploit the unique advantages of frequency-dependent beamforming, referred to as rainbow beam, to eliminate beam training overhead and analyze its impact on rates, latency, and coverage. The proposed system and protocol can flexibly accommodate different low-latency applications with moderate rate requirements for a very large number of narrowband single-antenna devices. By harnessing abundant resources in the mmW spectrum and the beamforming gain of TTD arrays, a rainbow-link-based system can simultaneously satisfy ultra-reliability and massive multiple access requirements.
