Machine Learning Assisted Phase-less Millimeter-Wave Beam Alignment in Multipath Channels


Sep 29, 2021

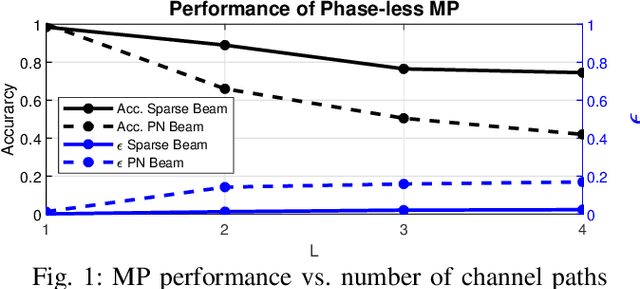

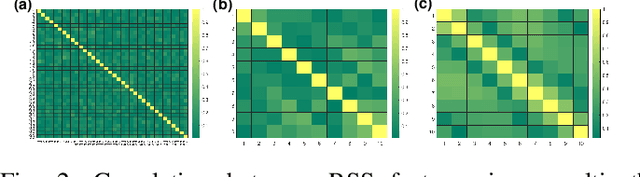

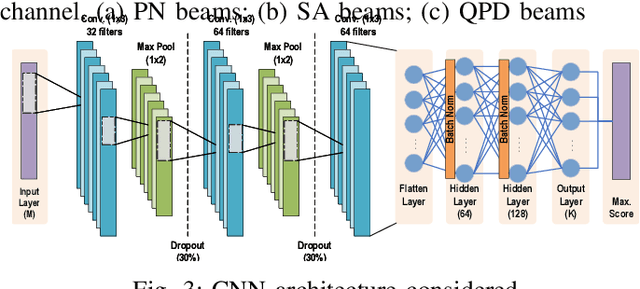

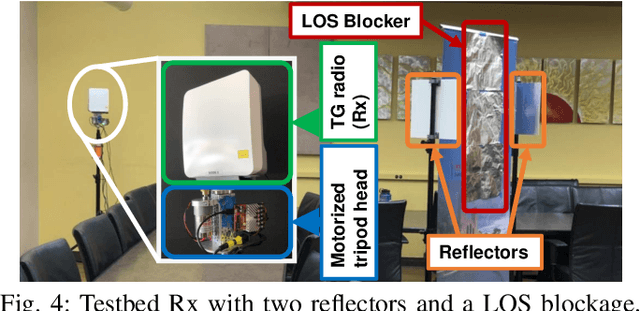

Communication systems at millimeter-wave (mmW) and sub-terahertz frequencies are of increasing interest for future high-data-rate networks. One critical challenge faced by phased array systems at these frequencies is the efficiency of initial beam alignment, which typically relies only on phase-less power measurements because of high-frequency oscillator phase noise. Traditional beam alignment methods require an exhaustive sweep of all possible beam directions, so their communication overhead scales linearly with antenna array size. To scale better with the large arrays required at high mmW bands, compressive sensing methods have been proposed, as their overhead scales logarithmically with array size. However, because real hardware has array impairments, algorithms that use machine learning have proven more efficient and more accurate in practice. Additionally, few existing phase-less beam alignment algorithms have been tested over varied secondary path strengths in multipath channels. In this work, we introduce a novel machine-learning-based algorithm for beam alignment in multipath environments using only phase-less received power measurements. We consider the impact of phased array sounding beam design and machine learning architecture on beam alignment performance, and we validate our findings experimentally using 60 GHz radios with 36-element phased arrays. On experimental data in multipath channels, our proposed algorithm demonstrates an 88% reduction in beam alignment overhead compared to an exhaustive search and at least a 62% reduction in overhead compared to existing compressive methods.
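To make the exhaustive-search baseline concrete, the following is a minimal sketch of a phase-less beam sweep, not the algorithm proposed in the paper. It assumes a half-wavelength uniform linear array, a DFT beam codebook, and a noiseless two-path channel whose paths lie exactly on the codebook grid; the function names, path gains, and beam indices are all hypothetical choices made for illustration. The sweep takes one power measurement per codebook beam, which is the linear scaling with array size that the abstract describes.

```python
import numpy as np

def steering_vector(n_elements, u):
    # Array response of a half-wavelength ULA (assumed geometry) for u = sin(angle).
    k = np.arange(n_elements)
    return np.exp(1j * np.pi * k * u)

def dft_codebook(n_elements):
    # One unit-norm beam per column, pointing at u = -1 + 2*i/N for i = 0..N-1.
    u_grid = -1 + 2 * np.arange(n_elements) / n_elements
    k = np.arange(n_elements)
    return np.exp(1j * np.pi * np.outer(k, u_grid)) / np.sqrt(n_elements)

def exhaustive_search(channel, codebook):
    # Phase-less measurement: only the received power |w^H h|^2 is kept per beam.
    powers = np.abs(codebook.conj().T @ channel) ** 2
    return int(np.argmax(powers)), powers

n = 36                                   # array size quoted in the abstract
codebook = dft_codebook(n)
u_grid = -1 + 2 * np.arange(n) / n

# Hypothetical two-path channel: a strong path on beam 10 and a weaker
# secondary path (amplitude 0.3, arbitrary phase) on beam 25.
channel = steering_vector(n, u_grid[10]) \
    + 0.3 * np.exp(1j * 0.7) * steering_vector(n, u_grid[25])

best_beam, powers = exhaustive_search(channel, codebook)
n_measurements = codebook.shape[1]       # one measurement per beam: linear in N
print(best_beam, n_measurements)
```

In this idealized setting the sweep always finds the strongest path, but it spends as many measurements as there are beams. Compressive and learning-based methods aim to reach the same decision from far fewer sounding beams.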
