Hazem Sallouha

Analytical Modeling of Batteryless IoT Sensors Powered by Ambient Energy Harvesting

Jul 28, 2025

Abstract:This paper presents a comprehensive mathematical model to characterize the energy dynamics of batteryless IoT sensor nodes powered entirely by ambient energy harvesting. The model captures both the energy harvesting and consumption phases, explicitly incorporating power management tasks to enable precise estimation of device behavior across diverse environmental conditions. The proposed model is applicable to a wide range of IoT devices and supports intelligent power management units designed to maximize harvested energy under fluctuating environmental conditions. We validated our model against a prototype batteryless IoT node, conducting experiments under three distinct illumination scenarios. Results show a strong correlation between analytical and measured supercapacitor voltage profiles, confirming the proposed model's accuracy.
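To make the idea of harvesting and consumption phases concrete, here is a minimal sketch of a generic energy-balance simulation of a supercapacitor-powered node. It is not the model from the paper: the hysteresis thresholds `v_on` and `v_off`, the constant power levels, and every parameter name are assumptions chosen for illustration. The only physics used is the stored energy E = ½CV².

```python
import math


def simulate_voltage(p_harvest_w, p_active_w, p_sleep_w, capacitance_f,
                     v_on, v_off, v_max, v_start, duration_s, dt_s=0.1):
    """Return the supercapacitor voltage after each time step (forward Euler).

    Hypothetical behavior: the node wakes when the voltage reaches v_on and
    goes back to sleep when it drops to v_off.
    """
    energy_j = 0.5 * capacitance_f * v_start ** 2
    energy_max_j = 0.5 * capacitance_f * v_max ** 2
    active = False
    trace = []
    for _ in range(int(round(duration_s / dt_s))):
        voltage = math.sqrt(2.0 * energy_j / capacitance_f)
        # Hysteresis between the wake-up and shutdown thresholds.
        if not active and voltage >= v_on:
            active = True
        elif active and voltage <= v_off:
            active = False
        p_load_w = p_active_w if active else p_sleep_w
        # Energy balance, clamped to the physically valid range.
        energy_j += (p_harvest_w - p_load_w) * dt_s
        energy_j = min(energy_max_j, max(0.0, energy_j))
        trace.append(math.sqrt(2.0 * energy_j / capacitance_f))
    return trace


if __name__ == "__main__":
    # Illustrative values only: 1 mW harvested, 5 mW active, 10 uW asleep.
    trace = simulate_voltage(1e-3, 5e-3, 1e-5, 0.1, 3.0, 2.2, 3.6, 2.0, 600.0)
    print(f"final voltage: {trace[-1]:.3f} V")
```

Comparing a trace like this against a measured voltage profile is the kind of check the abstract describes, although the paper's model also accounts for power management tasks that this sketch ignores.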

Cell-Free Massive MIMO under a Non-Linear Power Amplifier Consumption Model

Jun 07, 2025

Abstract:Existing works on Cell-Free Massive MIMO primarily focus on optimising system throughput and energy efficiency under high-traffic scenarios with only a limited focus on variable user demand as required by higher network layers. Additionally, existing works only minimise the transmitted power instead of the consumed power at the power amplifier. This work introduces a penalty-method-based approach to minimise the amplifier's power consumption while scaling much better with network size than current solutions and promoting sparsity in the power allocated to each access point. Furthermore, we demonstrate substantial reductions in power consumption (up to 24%) by considering the non-linear power consumption.

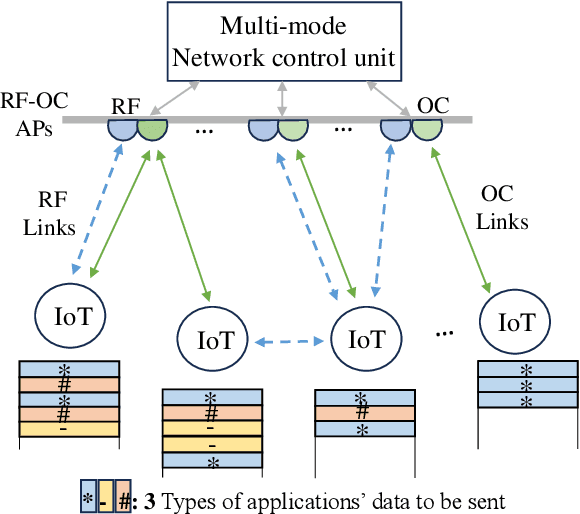

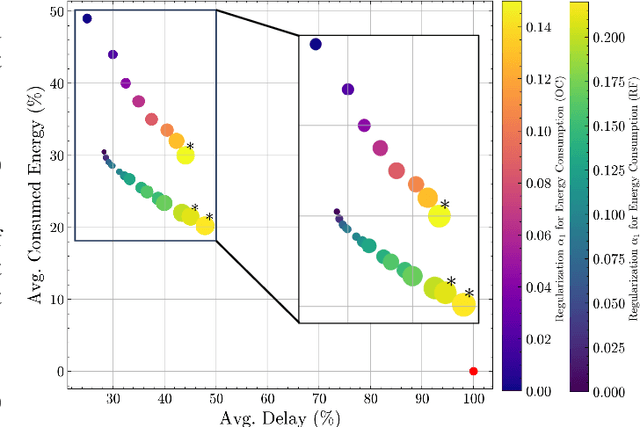

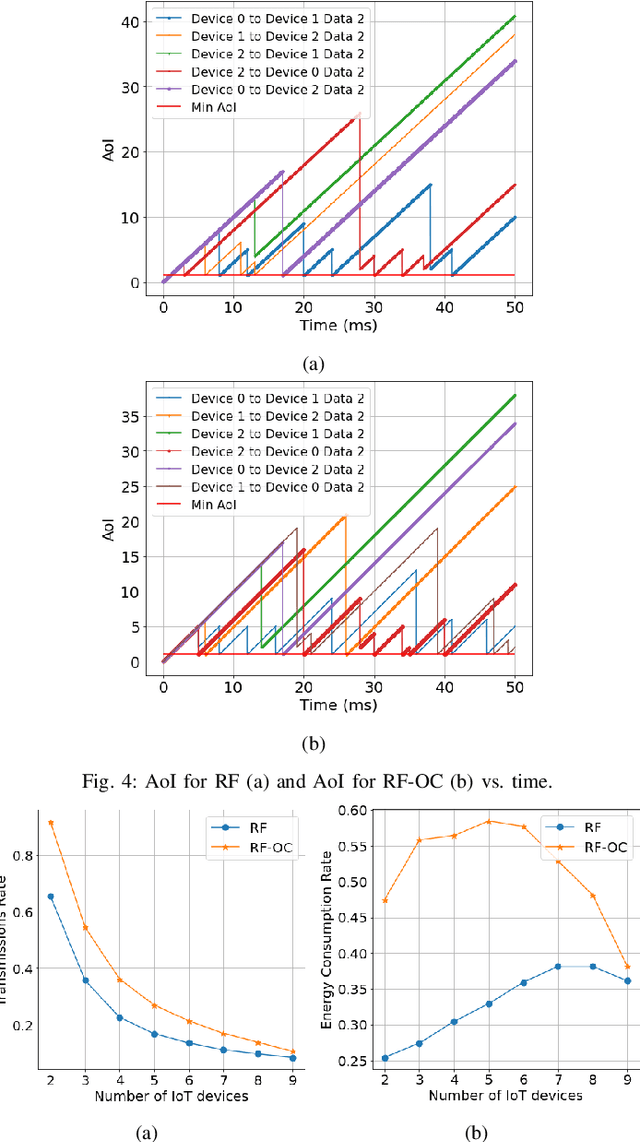

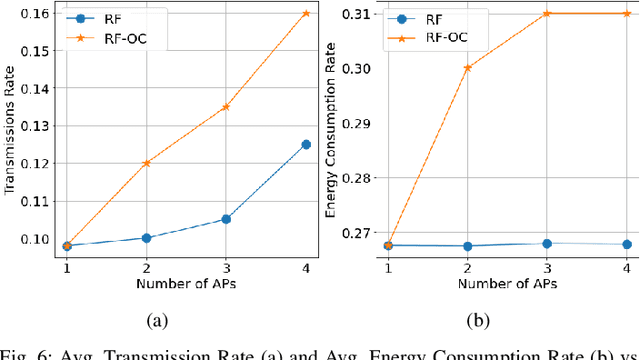

AoI in Context-Aware Hybrid Radio-Optical IoT Networks

Dec 17, 2024

Abstract:With the surge in IoT devices ranging from wearables to smart homes, prompt transmission is crucial. The Age of Information (AoI) emerges as a critical metric in this context, representing the freshness of the information transmitted across the network. This paper studies hybrid IoT networks that employ Optical Communication (OC) as a reinforcement medium to Radio Frequency (RF). We formulate a quadratic convex optimization that adopts a Pareto optimization strategy to dynamically schedule the communication between devices and select their corresponding communication technology, aiming to balance the maximization of network throughput with the minimization of energy usage and the frequency of switching between technologies. To mitigate the impact of dominant sub-objectives and their scale disparity, the designed approach employs a regularization method that approximates adequate Pareto coefficients. Simulation results show that integrating OC as a supplement to RF enhances the network's overall performance and significantly reduces the Mean AoI and Peak AoI, allowing the collection of the freshest possible data using the best available communication technology.
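As a brief illustration of the two metrics named above, the sketch below computes Mean AoI and average Peak AoI from update timestamps using the standard sawtooth definition of age. The function name `aoi_metrics` and its inputs are hypothetical, and the sketch assumes updates are delivered in the order they were generated; it does not reproduce the paper's scheduling optimization.

```python
def aoi_metrics(generated, delivered):
    """Return (mean_aoi, mean_peak_aoi) over the window between the first
    and last delivery.

    generated[i] and delivered[i] are the generation and delivery times of
    update i, in the same time unit, with in-order delivery assumed.
    """
    if len(generated) != len(delivered) or len(generated) < 2:
        raise ValueError("need at least two updates with matching timestamps")
    area = 0.0
    peaks = []
    for i in range(len(generated) - 1):
        # Age right after update i arrives, and right before update i+1 arrives.
        start_age = delivered[i] - generated[i]
        end_age = delivered[i + 1] - generated[i]
        peaks.append(end_age)
        # Age grows linearly between deliveries, so each segment is a trapezoid.
        area += 0.5 * (start_age + end_age) * (delivered[i + 1] - delivered[i])
    window = delivered[-1] - delivered[0]
    return area / window, sum(peaks) / len(peaks)


if __name__ == "__main__":
    mean_aoi, mean_peak = aoi_metrics([0.0, 4.0, 8.0], [1.0, 5.0, 9.0])
    print(mean_aoi, mean_peak)
```

Shorter delivery delays and more frequent updates both lower the two values, which is why adding a second communication technology can reduce them.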

Balanced Space- and Time-based Duty-cycle Scheduling for Light-based IoT

Oct 09, 2024

Abstract:In this work, we propose a Multiple Access Control (MAC) protocol for Light-based IoT (LIoT) networks, where the gateway node orchestrates and schedules the duty cycles of batteryless nodes based on their location and sleep time. The LIoT concept represents a sustainable solution for massive indoor IoT applications, offering an alternative communication medium through Visible Light Communication (VLC). While most existing scheduling algorithms for intermittent batteryless IoT aim to maximize data collection and enhance dataset size, our solution is tailored for environmental sensing applications, such as temperature, humidity, and air quality monitoring, optimizing measurement distribution and minimizing blind spots to achieve comprehensive and uniform environmental sensing. We propose a Balanced Space and Time-based Time Division Multiple Access scheduling (BST-TDMA) algorithm, which addresses environmental sensing challenges by balancing spatial and temporal factors to improve the environmental sensing efficiency of batteryless LIoT nodes. Our measurement-based results show that BST-TDMA was able to efficiently schedule duty cycles with given intervals.

Batteryless BLE and Light-based IoT Sensor Nodes for Reliable Environmental Sensing

May 27, 2024

Abstract:The sustainable design of Internet of Things (IoT) networks encompasses considerations related to energy efficiency and autonomy as well as considerations related to reliable communications, ensuring no energy is wasted on undelivered data. Under these considerations, this work proposes the design and implementation of energy-efficient Bluetooth Low Energy (BLE) and Light-based IoT (LIoT) batteryless IoT sensor nodes powered by an indoor light Energy Harvesting Unit (EHU). Our design intends to integrate these nodes into a sensing network to improve its reliability by combining both technologies and taking advantage of their features. The nodes incorporate state-of-the-art components, such as low-power sensors and efficient System-on-Chips (SoCs). Moreover, we design a strategy for adaptive switching between active and sleep cycles as a function of the available energy, allowing the IoT nodes to continuously operate without batteries. Our results show that by adapting the duty cycle of the BLE and LIoT nodes depending on the environment's light intensity, we can ensure a continuous and reliable node operation. In particular, measurements show that our proposed BLE and LIoT node designs are able to communicate with an IoT gateway in a bidirectional way, every 19.3 and 624.6 seconds, respectively, in an energy-autonomous and reliable manner.

Computational Efficient Width-Wise Early Exits in Modulation Classification

May 06, 2024

Abstract:Deep learning (DL) techniques are increasingly pervasive across various domains, including wireless communication, where they extract insights from raw radio signals. However, the computational demands of DL pose significant challenges, particularly in distributed wireless networks like Cell-free networks, where deploying DL models on edge devices becomes hard due to heightened computational loads. These computational loads escalate with larger input sizes, often correlating with improved model performance. To mitigate this challenge, Early Exiting (EE) techniques have been introduced in DL, primarily targeting the depth of the model. This approach enables models to exit during inference based on specified criteria, leveraging entropy measures at intermediate exits. Doing so makes less complex samples exit early, reducing computational load and inference time. In our contribution, we propose a novel width-wise exiting strategy for Convolutional Neural Network (CNN)-based architectures. By selectively adjusting the input size, we aim to regulate computational demands effectively. Our approach aims to decrease the average computational load during inference while maintaining performance levels comparable to conventional models. We specifically investigate Modulation Classification, a well-established application of DL in wireless communication. Our experimental results show substantial reductions in computational load, with an average decrease of 28%, and particularly notable reductions of 65% in high-SNR scenarios. Through this work, we present a practical solution for reducing computational demands in deep learning applications, particularly within the domain of wireless communication.
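The entropy-based exit criterion mentioned above can be sketched as follows. This is a generic illustration, not the architecture from the paper: the function names, the normalized-entropy threshold of 0.3, and the use of precomputed logits per exit are all assumptions. In the width-wise setting, successive exits would correspond to progressively larger input sizes, and later exits would only be evaluated when needed.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def normalized_entropy(probs):
    """Shannon entropy scaled to [0, 1] by the maximum value log(num_classes)."""
    entropy = -sum(p * math.log(p) for p in probs if p > 0.0)
    return entropy / math.log(len(probs))


def early_exit_predict(exit_logits, threshold=0.3):
    """Return (exit_index, predicted_class) for the first confident exit.

    exit_logits holds one logits vector per exit, cheapest exit first.
    The last exit is always accepted as a fallback.
    """
    if not exit_logits:
        raise ValueError("at least one exit is required")
    last = len(exit_logits) - 1
    for index, logits in enumerate(exit_logits):
        probs = softmax(logits)
        if index == last or normalized_entropy(probs) <= threshold:
            predicted = max(range(len(probs)), key=probs.__getitem__)
            return index, predicted


if __name__ == "__main__":
    # First exit is undecided (uniform), second is confident in class 0.
    print(early_exit_predict([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0]]))
```

A lower threshold sends more samples to the later, more expensive exits, trading computation for accuracy.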

Location-Based Load Balancing for Energy-Efficient Cell-Free Networks

Apr 29, 2024

Abstract:Cell-Free Massive MIMO (CF mMIMO) has emerged as a potential enabler for future networks. It has been shown that these networks are much more energy-efficient than classical cellular systems when they are serving users at peak capacity. However, these CF mMIMO networks are designed for peak traffic loads, and when this is not the case, they are significantly over-dimensioned and not at all energy efficient. To this end, Adaptive Access Point (AP) ON/OFF Switching (ASO) strategies have been developed to save energy when the network is not at peak traffic loads by putting unnecessary APs to sleep. Unfortunately, the existing strategies rely on measuring channel state information between every user and every access point, resulting in significant measurement energy consumption overheads. Furthermore, the current state-of-the-art approach has a computational complexity that scales exponentially with the number of APs. In this work, we present a novel convex feasibility testing method that allows checking per-user Quality-of-Service (QoS) requirements without necessarily considering all possible access point activations. We then propose an iterative algorithm for activating access points until all users' requirements are fulfilled. We show that our method has comparable performance to the optimal solution while avoiding costly mixed-integer problems and requiring channel state information measurements on only a limited subset of APs.

On the Ground and in the Sky: A Tutorial on Radio Localization in Ground-Air-Space Networks

Dec 09, 2023

Abstract:The inherent limitations in scaling up ground infrastructure for future wireless networks, combined with decreasing operational costs of aerial and space networks, are driving considerable research interest in multisegment ground-air-space (GAS) networks. In GAS networks, where ground and aerial users share network resources, ubiquitous and accurate user localization becomes indispensable, not only as an end-user service but also as an enabler for location-aware communications. This breaks the convention of having localization as a byproduct in networks primarily designed for communications. To address these imperative localization needs, the design and utilization of ground, aerial, and space anchors require thorough investigation. In this tutorial, we provide an in-depth systemic analysis of the radio localization problem in GAS networks, considering ground and aerial users as targets to be localized. Starting from a survey of the most relevant works, we then define the key characteristics of anchors and targets in GAS networks. Subsequently, we detail localization fundamentals in GAS networks, considering 3D positions and orientations. Afterward, we thoroughly analyze radio localization systems in GAS networks, detailing the system model, design aspects, and considerations for each of the three GAS anchors. Preliminary results are presented to provide a quantifiable perspective on key design aspects in GAS-based localization scenarios. We then identify the vital roles 6G enablers are expected to play in radio localization in GAS networks.

REM-U-net: Deep Learning Based Agile REM Prediction with Energy-Efficient Cell-Free Use Case

Sep 21, 2023

Abstract:Radio environment maps (REMs) hold a central role in optimizing wireless network deployment, enhancing network performance, and ensuring effective spectrum management. Conventional REM prediction methods are either excessively time-consuming, e.g., ray tracing, or inaccurate, e.g., statistical models, limiting their adoption in modern inherently dynamic wireless networks. Deep-learning-based REM prediction has recently attracted considerable attention as an appealing, accurate, and time-efficient alternative. However, existing works on REM prediction using deep learning are either confined to 2D maps or use a limited dataset. In this paper, we introduce a runtime-efficient REM prediction framework based on u-nets, trained on a large-scale 3D maps dataset. In addition, data preprocessing steps are investigated to further refine the REM prediction accuracy. The proposed u-net framework, along with the preprocessing steps, is evaluated in the context of the 2023 IEEE ICASSP Signal Processing Grand Challenge, namely, the First Pathloss Radio Map Prediction Challenge. The evaluation results demonstrate that the proposed method achieves an average normalized root-mean-square error (RMSE) of 0.045 with an average of 14 milliseconds (ms) runtime. Finally, we position our achieved REM prediction accuracy in the context of a relevant cell-free massive multiple-input multiple-output (CF-mMIMO) use case. We demonstrate that one can obviate consuming energy on large-scale fading measurements and rely on predicted REM instead to decide which sleeping access points (APs) to switch on in a CF-mMIMO network that adopts a minimum propagation loss AP switch ON/OFF strategy.

Enabling Low-Overhead Over-the-Air Synchronization Using Online Learning

Mar 02, 2023

Abstract:Accurate network synchronization is a key enabler for services such as coherent transmission, cooperative decoding, and localization in distributed and cell-free networks. Unlike centralized networks, where synchronization is generally needed between a user and a base station, synchronization in distributed networks needs to be maintained between several cooperative devices, which is an inherently challenging task due to hardware imperfections and environmental influences on the clock, such as temperature. As a result, distributed networks have to be frequently synchronized, introducing a significant synchronization overhead. In this paper, we propose an online-LSTM-based model for clock skew and drift compensation, to elongate the period at which synchronization signals are needed, decreasing the synchronization overhead. We conducted comprehensive experiments to assess the performance of the proposed model. Our measurement-based results show that the proposed model reduces the need for re-synchronization between devices by an order of magnitude, keeping devices synchronized with a precision of at least 10 microseconds with a probability of 90%.
