Dieter Verbruggen

Building a real-time physical layer labeled data logging facility for 6G research

Oct 02, 2024

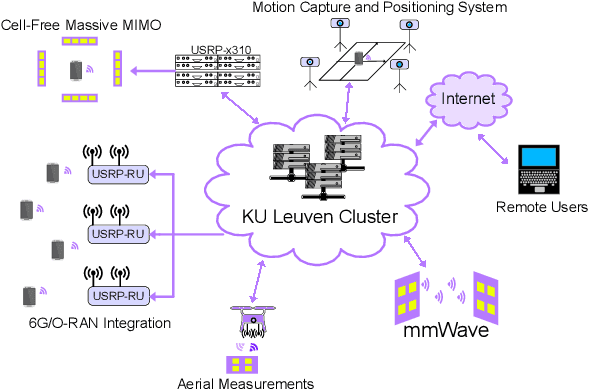

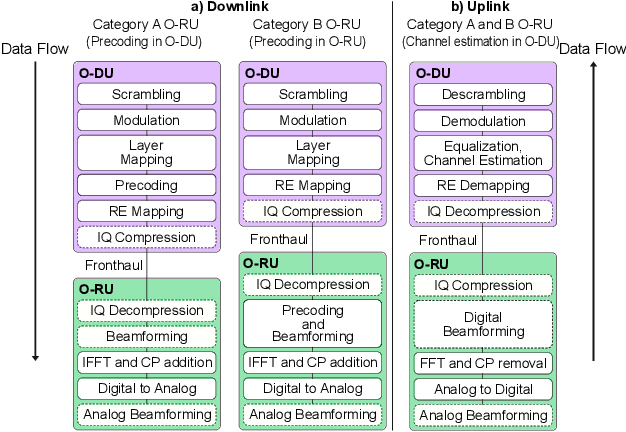

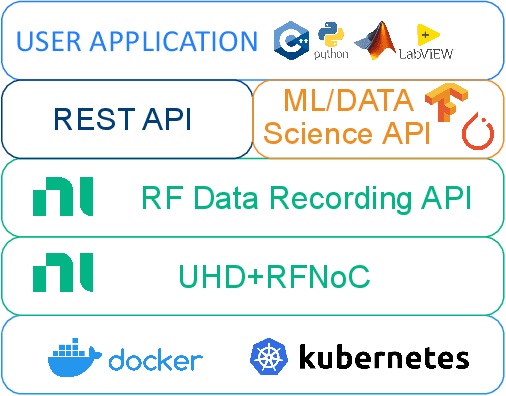

Abstract: This work describes the architecture and vision behind the design and implementation of a new test infrastructure for 6G physical layer research at KU Leuven. The testbed is designed for physical layer research and experimentation following several emerging trends, such as cell-free networking, integrated communication and sensing, open disaggregated Radio Access Networks, AI-native design, and multiband operation. The software stack is almost entirely free and open-source, making it easy to contribute to and reuse any component. The open testbed is designed to provide real-time, labeled data on all parts of the physical layer, from raw IQ data to synchronization statistics, channel state information, and symbol/bit/packet error rates. Real-time labeled datasets can be collected by synchronizing the physical layer data logging with a positioning and motion capture system. One of the main goals of the design is to make the testbed open and remotely accessible to external users. Most tests and data captures can easily be automated, and experiment code can be deployed remotely using standard containers (e.g., Docker or Podman). Finally, the paper describes how the testbed can be used for our research on joint communication and sensing, over-the-air synchronization, distributed processing, and AI in the loop.
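The abstract does not say how physical layer logs and motion-capture poses are joined into labeled records. A minimal sketch, assuming both streams are timestamped on a common clock, is a nearest-timestamp match with a tolerance. `PhyRecord`, `Pose`, `label_records`, and `max_gap` are hypothetical names for illustration, not part of the testbed's software.

```python
from __future__ import annotations

from bisect import bisect_left
from dataclasses import dataclass


@dataclass
class PhyRecord:
    t: float       # capture timestamp in seconds (assumed common time base)
    snr_db: float  # example physical-layer statistic (hypothetical field)


@dataclass
class Pose:
    t: float                         # motion-capture timestamp in seconds
    xyz: tuple[float, float, float]  # position label


def label_records(records, poses, max_gap=0.01):
    """Pair each PHY record with the nearest pose in time.

    `poses` must be sorted by timestamp. A record whose nearest pose is more
    than `max_gap` seconds away gets the label None instead of a stale position.
    """
    times = [p.t for p in poses]
    labeled = []
    for rec in records:
        i = bisect_left(times, rec.t)
        # The nearest pose is either just before or just after rec.t.
        candidates = [poses[j] for j in (i - 1, i) if 0 <= j < len(poses)]
        best = min(candidates, key=lambda p: abs(p.t - rec.t), default=None)
        if best is not None and abs(best.t - rec.t) <= max_gap:
            labeled.append((rec, best.xyz))
        else:
            labeled.append((rec, None))
    return labeled
```

Rejecting matches beyond `max_gap` keeps a dropped motion-capture frame from silently attaching an outdated position to a capture.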

Computational Efficient Width-Wise Early Exits in Modulation Classification

May 06, 2024

Abstract: Deep learning (DL) techniques are increasingly pervasive across various domains, including wireless communication, where they extract insights from raw radio signals. However, the computational demands of DL pose significant challenges, particularly in distributed wireless networks such as cell-free networks, where the heavy computational load makes deploying DL models on edge devices difficult. This load grows with input size, and larger inputs often correlate with better model performance. To mitigate this challenge, Early Exiting (EE) techniques have been introduced in DL, primarily targeting the depth of the model. EE lets a model exit during inference once a specified criterion is met, based on entropy measures at intermediate exits. Less complex samples therefore exit early, reducing computational load and inference time. In our contribution, we propose a novel width-wise exiting strategy for Convolutional Neural Network (CNN)-based architectures. By selectively adjusting the input size, we aim to regulate computational demands effectively. Our approach aims to decrease the average computational load during inference while maintaining performance comparable to conventional models. We specifically investigate Modulation Classification, a well-established application of DL in wireless communication. Our experimental results show substantial reductions in computational load, with an average decrease of 28% and particularly notable reductions of 65% in high-SNR scenarios. Through this work, we present a practical solution for reducing computational demands in deep learning applications, particularly within wireless communication.
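A minimal sketch of the width-wise exit idea, assuming a classifier that accepts inputs of several lengths and returns class probabilities: the input is widened step by step, and inference stops once the entropy of the output falls to or below a threshold. `width_wise_exit`, `classify`, `widths`, and `threshold` are hypothetical names; this illustrates the exit logic only and is not the paper's CNN architecture or exact exit criterion.

```python
from __future__ import annotations

import math
from typing import Callable, Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of a probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def width_wise_exit(
    iq: Sequence[complex],
    classify: Callable[[Sequence[complex]], list[float]],
    widths: Sequence[int],
    threshold: float,
):
    """Classify using progressively wider slices of the input.

    Returns (predicted_class, width_used). Stops at the first width whose
    output entropy is at or below `threshold`; the widest input is always
    accepted as the final exit.
    """
    for k, w in enumerate(widths):
        probs = classify(iq[:w])  # cost grows with the input width w
        if entropy(probs) <= threshold or k == len(widths) - 1:
            pred = max(range(len(probs)), key=probs.__getitem__)
            return pred, w
```

Confident (typically high-SNR) inputs exit at a narrow width, so the average cost falls, while hard inputs still reach the full width.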

Distributed Deep Learning for Modulation Classification in 6G Cell-Free Wireless Networks

Mar 13, 2024

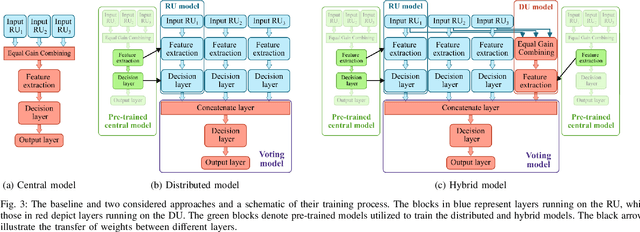

Abstract: In the evolution of 6th Generation (6G) technology, cell-free networking presents a paradigm shift, transforming user experiences in densely deployed networks where distributed access points collaborate. However, integrating intelligent mechanisms is crucial for optimizing the efficiency, scalability, and adaptability of these 6G cell-free networks. One application aimed at optimizing spectrum usage is Automatic Modulation Classification (AMC), a vital component for classifying and dynamically adjusting modulation schemes. This paper explores different distributed solutions for AMC in cell-free networks, addressing the training, computational complexity, and accuracy of two practical approaches. The first approach addresses scenarios where signal sharing is not feasible due to privacy concerns or fronthaul limitations. Our findings show that comparable accuracy can be maintained, but at the cost of increased computational demand. The second approach considers a central model and multiple distributed models that collaboratively classify the modulation. This hybrid model leverages diversity gain through signal combining and requires synchronization and signal sharing. The hybrid model demonstrates superior performance, achieving a 2.5% improvement in accuracy with equivalent total computational load. Notably, the hybrid model distributes the computational load across multiple devices, resulting in a lower computational load per device.
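For the first approach, where only model outputs rather than raw signals leave each access point, one common way to fuse local predictions is to sum log-probabilities across access points. This is a swapped-in fusion rule for illustration, not necessarily the one used in the paper, and `fuse_soft_decisions` is a hypothetical name.

```python
from __future__ import annotations

import math
from typing import Sequence


def fuse_soft_decisions(per_ap_probs: Sequence[Sequence[float]]) -> int:
    """Combine per-access-point class probabilities by summing log-probabilities.

    This equals taking the product of the local posteriors, which is the joint
    posterior if observations are conditionally independent given the class
    and the prior is uniform. Only class-probability vectors are exchanged,
    never the raw IQ samples.
    """
    n_classes = len(per_ap_probs[0])
    eps = 1e-12  # guard against log(0) for classes a local model rules out
    scores = [
        sum(math.log(max(probs[c], eps)) for probs in per_ap_probs)
        for c in range(n_classes)
    ]
    return max(range(n_classes), key=scores.__getitem__)
```

Exchanging a short probability vector per access point keeps fronthaul traffic small, which matches the constraint that motivates the first approach.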

Enabling Low-Overhead Over-the-Air Synchronization Using Online Learning

Mar 02, 2023

Abstract: Accurate network synchronization is a key enabler for services such as coherent transmission, cooperative decoding, and localization in distributed and cell-free networks. Unlike centralized networks, where synchronization is generally needed between a user and a base station, synchronization in distributed networks must be maintained among several cooperating devices. This is inherently challenging due to hardware imperfections and environmental influences on the clock, such as temperature. As a result, distributed networks must be synchronized frequently, introducing significant synchronization overhead. In this paper, we propose an online LSTM-based model for clock skew and drift compensation that extends the interval between required synchronization signals, decreasing the synchronization overhead. We conducted comprehensive experiments to assess the performance of the proposed model. Our measurement-based results show that the proposed model reduces the need for re-synchronization between devices by an order of magnitude, keeping devices synchronized with a precision of at least 10 microseconds with a probability of 90%.
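The idea of predicting the clock offset between synchronization events can be illustrated with a much simpler model than the paper's online LSTM. The sketch below swaps in a sliding-window least-squares fit of measured offset versus time, where the slope is the skew; `SkewCompensator` and `window` are hypothetical names, and a linear fit on a sliding window only follows slowly varying drift.

```python
from __future__ import annotations

from collections import deque


class SkewCompensator:
    """Predict a device's clock offset between synchronization events.

    Keeps the last `window` (time, measured_offset) pairs and fits
    offset ~ a + b * t by ordinary least squares, where b is the skew.
    Refitting on a sliding window lets b follow slow drift.
    """

    def __init__(self, window: int = 8):
        self.samples = deque(maxlen=window)  # oldest pairs are dropped

    def update(self, t: float, offset: float) -> None:
        """Record an offset measured at a synchronization event."""
        self.samples.append((t, offset))

    def predict(self, t: float) -> float:
        """Estimate the offset at time t from the current window."""
        n = len(self.samples)
        if n == 0:
            return 0.0
        if n == 1:
            return self.samples[0][1]
        mean_t = sum(s[0] for s in self.samples) / n
        mean_o = sum(s[1] for s in self.samples) / n
        sxx = sum((s[0] - mean_t) ** 2 for s in self.samples)
        sxy = sum((s[0] - mean_t) * (s[1] - mean_o) for s in self.samples)
        skew = sxy / sxx if sxx > 0 else 0.0
        return mean_o + skew * (t - mean_t)
```

Between synchronization events, a device would subtract `predict(t)` from its local timestamps; how often it must resynchronize then depends on how quickly the prediction error grows.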
