Sofie Pollin

KU Leuven

Sequential Processing Strategies in Fronthaul Constrained Cell-Free Massive MIMO Networks

Jan 28, 2026

Abstract: In a cell-free massive MIMO (CFmMIMO) network with a daisy-chain fronthaul, the amount of information that each access point (AP) must send to the next AP in the chain is determined by the AP's position in the sequential fronthaul. We therefore propose two sequential processing strategies to combat the adverse effect of fronthaul compression on the users' sum spectral efficiency (SE): 1) linearly increasing fronthaul capacity allocation among the APs and 2) Two-Path estimation of the users' signals. Both strategies achieve a higher sum SE than equal fronthaul capacity allocation and Single-Path sequential signal estimation.
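The difference between the two fronthaul allocation policies can be made concrete with a minimal sketch. It assumes a total fronthaul budget `total_capacity` (arbitrary units, e.g. bit/s) shared by `num_aps` APs indexed 1..L along the chain, with AP L attached to the central processing unit (CPU), and it assumes the linear policy gives AP l a share proportional to l. The function names and the exact proportional rule are illustrative assumptions, not the allocation derived in the paper.

```python
def equal_allocation(total_capacity: float, num_aps: int) -> list[float]:
    """Split the fronthaul budget evenly across the APs in the chain."""
    return [total_capacity / num_aps] * num_aps


def linear_allocation(total_capacity: float, num_aps: int) -> list[float]:
    """Give AP l (l = 1..L, counted from the start of the chain) a share
    proportional to l. Assumption: AP L is the one attached to the CPU, so
    links later in the chain carry more accumulated information."""
    weights = range(1, num_aps + 1)
    norm = sum(weights)  # equals L(L+1)/2
    return [total_capacity * w / norm for w in weights]


if __name__ == "__main__":
    for name, alloc in [("equal", equal_allocation(100.0, 4)),
                        ("linear", linear_allocation(100.0, 4))]:
        print(name, [round(c, 1) for c in alloc])
```

With a budget of 100 and four APs, the linear policy yields 10, 20, 30 and 40, so the links that forward the most accumulated information get the largest share, whereas the equal policy gives 25 to every link.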

Dualband OFDM Delay Estimation for Multi-Target Localization

Jan 26, 2026

Abstract: Integrated localization and communication (ILC) systems aim to reuse communication waveforms for simultaneous data transmission and localization, but their delay resolution is fundamentally limited by the available bandwidth. In practice, large contiguous bandwidths are difficult to obtain due to hardware constraints and spectrum fragmentation. Aggregating non-contiguous narrow bands can increase the effective frequency span, but a non-contiguous frequency layout introduces challenges such as elevated sidelobes and ambiguity in delay estimation. This paper introduces a point-spread-function (PSF)-centric framework for dual-band OFDM delay estimation. We model the observed delay profile as the convolution of the true target response with a PSF determined by the dual-band subcarrier selection pattern, explicitly linking the band configuration to resolution and ambiguity. To suppress PSF-induced artifacts, we adapt the RELAX algorithm for dual-band multi-target delay estimation. Simulations demonstrate improved robustness and accuracy in dual-band scenarios, supporting ILC under fragmented spectrum.
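The convolution model and a RELAX-style estimator can be sketched in a few lines. The sketch assumes normalized units (subcarrier spacing `delta_f = 1`, so delays are in units of 1/Δf and the unambiguous range is [0, 1)), a noiseless frequency response y_k = Σ_i a_i exp(-j2πkΔf τ_i) on the active subcarriers, and a fixed delay grid. Under these assumptions the matched-filter delay profile equals Σ_i a_i PSF(τ - τ_i), where PSF(τ) = Σ_{k∈S} exp(j2πkΔf τ) depends only on the selected subcarrier set S. The `relax` function is a simplified, grid-based version of the cyclic RELAX idea (re-estimate each target after subtracting the others); the helper names, band layout and fixed iteration count are assumptions, not the adaptation proposed in the paper.

```python
import cmath
import math


def dual_band_mask(band_width: int, second_band_start: int) -> list[int]:
    """Active subcarriers: band A = [0, band_width), band B = [second_band_start, second_band_start + band_width)."""
    return list(range(band_width)) + list(range(second_band_start, second_band_start + band_width))


def psf(active: list[int], tau: float, delta_f: float = 1.0) -> complex:
    """Point-spread function PSF(tau) = sum_{k in S} exp(j 2 pi k delta_f tau)."""
    return sum(cmath.exp(2j * math.pi * k * delta_f * tau) for k in active)


def steering(active, tau, delta_f=1.0):
    """Frequency response of a unit-amplitude target at delay tau."""
    return [cmath.exp(-2j * math.pi * k * delta_f * tau) for k in active]


def delay_profile(y, active, taus, delta_f=1.0):
    """Matched-filter delay profile |sum_k y_k exp(j 2 pi k delta_f tau)| on a delay grid."""
    return [abs(sum(yk * cmath.exp(2j * math.pi * k * delta_f * t)
                    for yk, k in zip(y, active))) for t in taus]


def relax(y, active, taus, n_targets, n_iter=10, delta_f=1.0):
    """Simplified grid-based RELAX: add one target at a time, then cyclically
    re-fit every target against the residual left by all the others."""
    n = len(active)

    def fit(residual):
        prof = delay_profile(residual, active, taus, delta_f)
        t = taus[max(range(len(taus)), key=prof.__getitem__)]
        # Least-squares amplitude for one steering vector: s^H r / ||s||^2, ||s||^2 = n
        a = sum(r * s.conjugate() for r, s in zip(residual, steering(active, t, delta_f))) / n
        return t, a

    est = []  # list of (delay, complex amplitude)
    for _ in range(n_targets):
        est.append((0.0, 0j))  # placeholder with zero amplitude
        for _ in range(n_iter):
            for i in range(len(est)):
                residual = list(y)
                for j, (t, a) in enumerate(est):
                    if j != i:
                        s = steering(active, t, delta_f)
                        residual = [r - a * sk for r, sk in zip(residual, s)]
                est[i] = fit(residual)
    return est
```

For example, with two 16-subcarrier bands starting at indices 0 and 48, the PSF factorizes as the single-band response times 1 + exp(j2π·48τ), so |PSF(0)| = 32 and strong secondary peaks appear near τ = ±1/48. Subtracting each fitted target before re-estimating the others is what lets the estimator look past those PSF-induced peaks.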

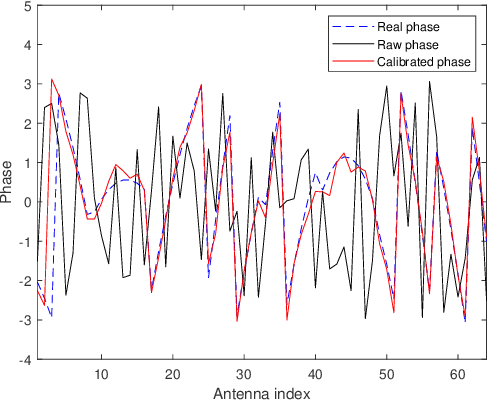

Robust Localization in OFDM-Based Massive MIMO through Phase Offset Calibration

Jan 20, 2026

Abstract: Accurate localization in Orthogonal Frequency Division Multiplexing (OFDM)-based massive Multiple-Input Multiple-Output (MIMO) systems depends critically on phase coherence across subcarriers and antennas. However, practical systems suffer from frequency-dependent and (spatial) antenna-dependent phase offsets, which degrade localization accuracy. This paper analytically studies the impact of phase incoherence on localization performance for a static User Equipment (UE) in a Line-of-Sight (LoS) scenario. We use two complementary tools. First, we derive the Cramér-Rao Lower Bound (CRLB) to quantify the theoretical limits under phase offsets. Then, we develop a Spatial Ambiguity Function (SAF)-based model to characterize ambiguity patterns. Simulation results reveal that spatial phase offsets severely degrade localization performance, while frequency phase offsets have a minor effect in the considered system configuration. To address this, we propose a robust Channel State Information (CSI) calibration framework and validate it using real-world measurements from a practical massive MIMO testbed. The experimental results confirm that the proposed calibration framework reduces the localization Root Mean Squared Error (RMSE) from 5 m to 1.2 cm, in good agreement with the theoretical predictions.
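A simple way to picture CSI phase calibration is a reference measurement taken at a known LoS position. The sketch below is an illustrative assumption, not the framework proposed in the paper: it models the measured CSI as h[m][k] = exp(j(φ_m + ψ_k)) · h_LoS[m][k], with an unknown antenna offset φ_m and subcarrier offset ψ_k, estimates both (relative to antenna 0 and subcarrier 0) from the reference, and removes them from later measurements. The remaining common phase φ_0 + ψ_0 is identical on every antenna and subcarrier, so it does not affect the geometry-dependent phase pattern that localization relies on. The free-space phase model and all function names are hypothetical.

```python
import cmath
import math

C = 299_792_458.0  # speed of light (m/s)


def los_csi(ant_pos, ue_pos, freqs):
    """Ideal LoS CSI phase model: h[m][k] = exp(-j 2 pi f_k d_m / c) (unit magnitude)."""
    csi = []
    for a in ant_pos:
        d = math.dist(a, ue_pos)  # antenna-to-UE distance
        csi.append([cmath.exp(-2j * math.pi * f * d / C) for f in freqs])
    return csi


def estimate_offsets(measured, model):
    """Estimate per-antenna and per-subcarrier phase offsets from a reference
    measurement at a known position, relative to antenna 0 and subcarrier 0."""
    # ratio[m][k] = exp(j(phi_m + psi_k)) when measured = offsets * model
    ratio = [[h * g.conjugate() for h, g in zip(h_row, g_row)]
             for h_row, g_row in zip(measured, model)]
    n_ant, n_sc = len(ratio), len(ratio[0])
    # Summing over subcarriers isolates phi_m - phi_0; over antennas, psi_k - psi_0
    ant_off = [cmath.phase(sum(ratio[m][k] * ratio[0][k].conjugate() for k in range(n_sc)))
               for m in range(n_ant)]
    sc_off = [cmath.phase(sum(ratio[m][k] * ratio[m][0].conjugate() for m in range(n_ant)))
              for k in range(n_sc)]
    return ant_off, sc_off


def apply_calibration(csi, ant_off, sc_off):
    """Remove the estimated offsets; only a common phase phi_0 + psi_0 remains."""
    return [[h * cmath.exp(-1j * (ant_off[m] + sc_off[k])) for k, h in enumerate(row)]
            for m, row in enumerate(csi)]
```

Because the offsets are modeled as fixed hardware properties, the same estimates can be reused for measurements taken at other UE positions.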

Efficient Channel Autoencoders for Wideband Communications leveraging Walsh-Hadamard interleaving

Jan 16, 2026

Abstract: This paper investigates how end-to-end (E2E) channel autoencoders (AEs) can achieve energy-efficient wideband communications by leveraging Walsh-Hadamard (WH) interleaved converters. WH interleaving enables high-sampling-rate analog-to-digital conversion with reduced power consumption by using an analog WH transformation. We demonstrate that E2E-trained neural coded modulation can transparently adapt to the WH-transceiver hardware without requiring algorithmic redesign. Focusing on the short block length regime, we train WH-domain AEs and benchmark them against standard neural and conventional baselines, including 5G Polar codes. We quantify the system-level energy tradeoffs among baseband compute, channel signal-to-noise ratio (SNR), and analog converter power. Our analysis shows that the proposed WH-AE system comes within 0.14 dB of the SNR performance of conventional Polar codes while consuming comparable or lower system power. Compared to the best neural baseline, WH-AE achieves, on average, 29% higher energy efficiency (in bit/J) for the same reliability. These findings establish WH-domain learning as a viable path to energy-efficient, high-throughput wideband communications by explicitly balancing compute complexity, SNR, and analog power consumption.
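The Walsh-Hadamard transform behind WH interleaving has only ±1 coefficients, so it needs additions and subtractions only, and applying it twice returns the input scaled by the transform size N. The paper realizes the transform in analog hardware; the digital fast WHT below is only a sketch of the same mathematical operation (natural/Hadamard ordering, unnormalized), and the function name `fwht` is illustrative.

```python
def fwht(x):
    """Unnormalized fast Walsh-Hadamard transform in natural (Hadamard) order.
    Only additions and subtractions are used; len(x) must be a power of two."""
    n = len(x)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    y = list(x)
    h = 1
    while h < n:
        # Butterfly stage: combine pairs of entries that are h apart
        for start in range(0, n, 2 * h):
            for i in range(start, start + h):
                a, b = y[i], y[i + h]
                y[i], y[i + h] = a + b, a - b
        h *= 2
    return y


if __name__ == "__main__":
    x = [3, -1, 2, 0, 5, 1, -2, 4]
    X = fwht(x)
    # Self-inverse up to scaling: applying the transform twice gives N * x
    print([v // len(x) for v in fwht(X)])  # prints [3, -1, 2, 0, 5, 1, -2, 4]
```

The butterfly structure costs N·log2(N) additions, which is why the transform is attractive for low-power front ends compared with a general linear mixing stage.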

Inter-Cell Interference Rejection Based on Ultrawideband Walsh-Domain Wireless Autoencoding

Jan 16, 2026

Abstract: This paper proposes a novel technique for rejecting partial-in-band inter-cell interference (ICI) in ultrawideband communication systems. We present the design of an end-to-end wireless autoencoder architecture that jointly optimizes the transmitter and receiver encoding/decoding in the Walsh domain to mitigate interference from coexisting narrower-band 5G base stations. By exploiting the orthogonality and self-inverse properties of Walsh functions, the system learns to distribute and encode bit-words across parallel Walsh branches. Through analytical modeling and simulation, we characterize how 5G CP-OFDM interference maps into the Walsh domain and identify the optimal ratios of transmission frequency to sampling rate at which the end-to-end autoencoder achieves the highest rejection. Experimental results show that the proposed autoencoder achieves up to 12 dB of ICI rejection while maintaining a low block error rate (BLER) for the same baseline channel noise, i.e., the baseline Signal-to-Noise Ratio (SNR) without the interference.
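The orthogonality that the autoencoder exploits can be checked directly, and the same projection shows how an interferer's energy spreads over the Walsh branches. In this sketch the interferer is assumed to be a sampled real tone (a crude stand-in for a narrower-band 5G CP-OFDM signal), the Walsh functions are rows of a Sylvester Hadamard matrix in natural order, and `quietest_branches` is a hypothetical hand-crafted heuristic; in the paper, the end-to-end autoencoder learns how to place bit-words on branches instead.

```python
import math


def hadamard(n):
    """Sylvester Hadamard matrix of order n (a power of two); row i is the
    i-th Walsh function in natural (Hadamard) order, with entries +/-1."""
    if n < 1 or n & (n - 1):
        raise ValueError("n must be a power of two")
    h = [[1]]
    while len(h) < n:
        h = [row + row for row in h] + [row + [-v for v in row] for row in h]
    return h


def walsh_energy(signal):
    """Energy falling on each Walsh branch. Dividing by n makes the branch
    energies sum to the time-domain energy, since H H^T = n I."""
    n = len(signal)
    coeffs = [sum(hv * s for hv, s in zip(row, signal)) for row in hadamard(n)]
    return [c * c / n for c in coeffs]


def quietest_branches(interference, k):
    """Hypothetical heuristic: the k Walsh branches least affected by the interferer."""
    energy = walsh_energy(interference)
    return sorted(range(len(energy)), key=energy.__getitem__)[:k]


if __name__ == "__main__":
    # A sampled tone as a stand-in interferer (assumption), 32 samples
    tone = [math.cos(2 * math.pi * 3 * t / 32) for t in range(32)]
    print(quietest_branches(tone, 8))
```

Because the per-branch energies preserve the total interference energy (Parseval), they can be compared directly with the interference power in the time domain.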

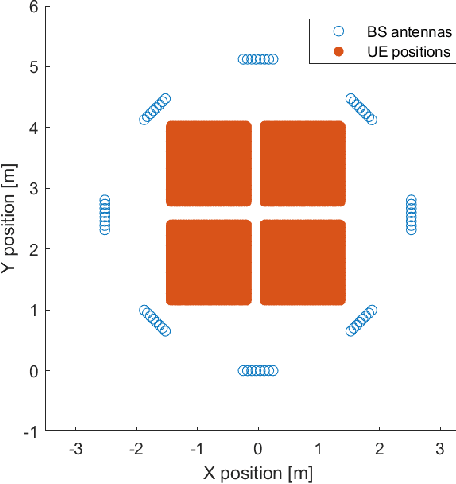

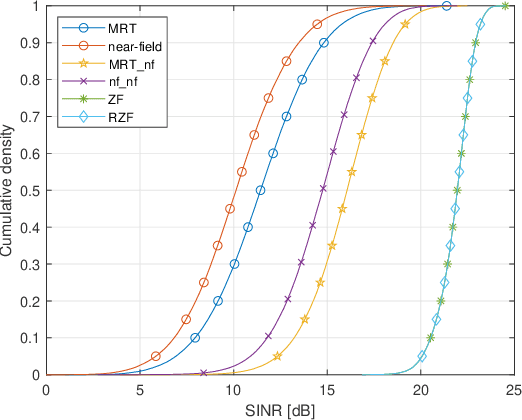

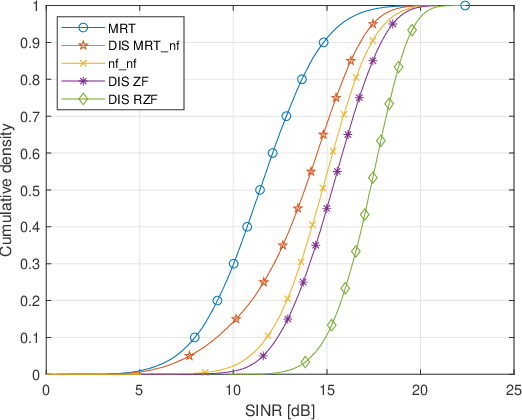

Location-Informed Interference Suppression Precoding Methods for Distributed Massive MIMO Systems

Nov 07, 2025

Abstract: The evolution of mobile networks towards user-centric cell-free distributed Massive MIMO configurations requires the development of novel signal processing techniques. More specifically, digital precoding algorithms have to be designed or adapted to enable distributed operation. Future deployments are expected to improve coexistence between cellular generations, and between mobile networks and incumbent services such as radar. In dense cell-free deployments, it might also not be possible to have full channel state information (CSI) for all users at all antennas. To leverage location information in a dense deployment area, we suggest and investigate several algorithmic alterations to existing precoding methods, aimed at location-informed interference suppression, for use in existing and emerging systems where user locations are known. The proposed algorithms are derived using a theoretical channel model and validated and numerically evaluated using an empirical dataset containing channel measurements from an indoor distributed Massive MIMO testbed. When dealing with measured CSI, the impact of the hardware, in addition to the location-based channel, needs to be compensated for. We propose a method to calibrate the hardware and achieve measurement-based evaluation of our location-based interference suppression algorithms. The results demonstrate that the proposed methods allow location-based interference suppression without explicit CSI knowledge at the transmitter, under certain realistic network conditions.
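One textbook way to suppress interference using only locations is to build LoS channel vectors from the known positions and steer a null toward the victim. The sketch assumes a free-space LoS model h_m = (λ/(4πd_m))·exp(-j2πd_m/λ), a downlink gain Σ_m h_m·w_m, and a single victim receiver (for example an incumbent radar or another user); it projects the maximum-ratio direction for the served user onto the subspace orthogonal to the victim's channel. This is a generic projection (null-steering) precoder chosen for illustration, not one of the algorithms proposed in the paper, and with measured CSI the hardware calibration described in the abstract would still be required. All function names are hypothetical.

```python
import cmath
import math


def los_channel(ant_pos, user_pos, wavelength):
    """Free-space LoS channel built from locations only:
    h_m = (lambda / (4 pi d_m)) * exp(-j 2 pi d_m / lambda)."""
    h = []
    for a in ant_pos:
        d = math.dist(a, user_pos)
        h.append(wavelength / (4 * math.pi * d) * cmath.exp(-2j * math.pi * d / wavelength))
    return h


def inner(u, v):
    """Hermitian inner product u^H v."""
    return sum(a.conjugate() * b for a, b in zip(u, v))


def received_gain(h, w):
    """Complex gain seen by a receiver with channel h for precoder w: sum_m h_m w_m."""
    return sum(hm * wm for hm, wm in zip(h, w))


def null_steering_precoder(h_user, h_victim):
    """Maximum-ratio direction for the served user, projected onto the subspace
    that produces zero gain at the victim location, normalized to unit power."""
    g_u = [x.conjugate() for x in h_user]    # MRT direction for the served user
    g_v = [x.conjugate() for x in h_victim]  # received_gain(h_victim, w) = g_v^H w
    coef = inner(g_v, g_u) / inner(g_v, g_v)
    w = [a - coef * b for a, b in zip(g_u, g_v)]
    norm = math.sqrt(sum(abs(x) ** 2 for x in w))
    return [x / norm for x in w]
```

The projection costs some gain at the served user (whatever part of its channel is aligned with the victim's), which is the usual price of zero-forcing-type suppression.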

Approximation of the Range Ambiguity Function in Near-field Sensing Systems

Sep 26, 2025

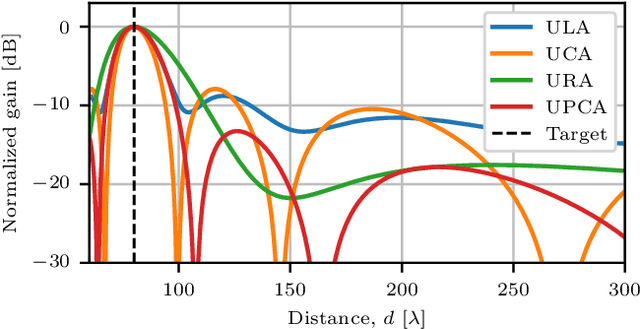

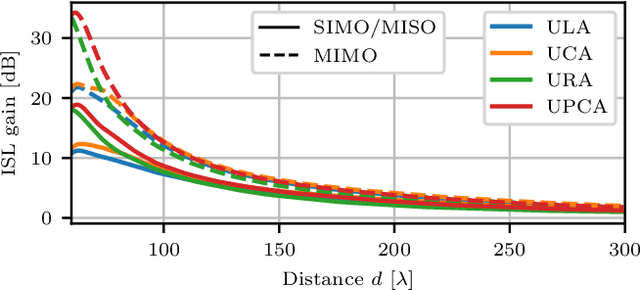

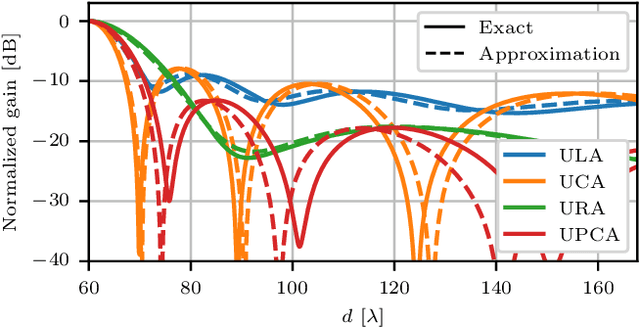

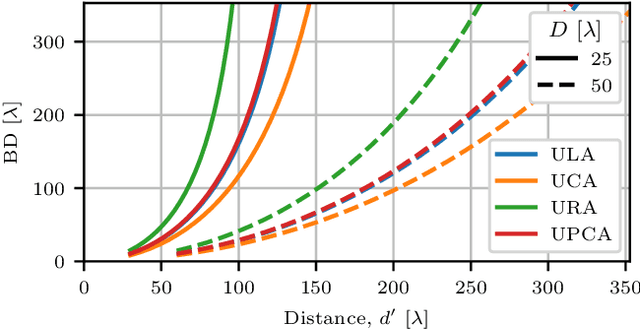

Abstract: This paper investigates the range ambiguity function of near-field systems where bandwidth and near-field beamfocusing jointly determine the resolution. First, the general matched filter ambiguity function is derived and the near-field array factors of different antenna array geometries are introduced. Next, the near-field ambiguity function is approximated as a product of the range-dependent near-field array factor and the ambiguity function due to the utilized bandwidth and waveform. An approximation criterion based on the aperture-bandwidth product is formulated, and its accuracy is examined. Finally, the improvements to the ambiguity function offered by near-field beamfocusing, as compared to the far-field case, are presented. The performance gains are evaluated in terms of the resolution improvement offered by beamfocusing and the improvements in peak-to-sidelobe and integrated-sidelobe levels. The gains offered by the near-field regime are shown to be range-dependent and substantial only in close proximity to the array.
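The product approximation can be sketched numerically. The sketch assumes a uniform linear array of `n_elems` isotropic elements centred at the origin, a target on broadside, one-way (receive-only) beamfocusing at range `r_focus`, and a flat-spectrum waveform whose matched-filter range response is |sinc(2BΔr/c)|. Both factors, the function names and the parameter values are illustrative assumptions; the aperture-bandwidth criterion from the paper is not reproduced here.

```python
import cmath
import math

C = 299_792_458.0  # speed of light (m/s)


def bandwidth_ambiguity(delta_r, bandwidth):
    """Range ambiguity of a flat-spectrum waveform after matched filtering:
    |sinc(B * tau)| with two-way delay offset tau = 2 * delta_r / c."""
    x = bandwidth * 2.0 * delta_r / C
    return 1.0 if x == 0 else abs(math.sin(math.pi * x) / (math.pi * x))


def nearfield_array_factor(r, r_focus, n_elems, spacing, wavelength):
    """Normalized one-way array factor along broadside of a ULA centred at the
    origin and focused at range r_focus, using exact spherical distances."""
    xs = [(n - (n_elems - 1) / 2) * spacing for n in range(n_elems)]
    s = sum(cmath.exp(-2j * math.pi * (math.hypot(r, x) - math.hypot(r_focus, x)) / wavelength)
            for x in xs)
    return abs(s) / n_elems


def approx_range_ambiguity(r, r_focus, bandwidth, n_elems, spacing, wavelength):
    """Product approximation: near-field array factor times bandwidth ambiguity."""
    return (nearfield_array_factor(r, r_focus, n_elems, spacing, wavelength)
            * bandwidth_ambiguity(r - r_focus, bandwidth))
```

With B = 400 MHz the bandwidth term alone gives a range resolution of c/(2B) ≈ 0.37 m at every distance. For a 256-element half-wavelength array at 28 GHz, the array-factor term drops well below 1 half a metre off focus when focusing at 2 m, but stays close to 1 for the same offset when focusing at 50 m, consistent with gains that are substantial only close to the array.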

Near-Field Spatial non-Stationary Channel Estimation: Visibility-Region-HMM-Aided Polar-Domain Simultaneous OMP

Aug 06, 2025

Abstract: This work focuses on channel estimation in extremely large aperture array (ELAA) systems, where near-field propagation and spatial non-stationarity introduce complexities that hinder the effectiveness of traditional estimation techniques. A physics-based hybrid channel model is developed, incorporating non-binary visibility region (VR) masks to simulate diffraction-induced power variations across the antenna array. To address the estimation challenges posed by these channel conditions, a novel algorithm is proposed: Visibility-Region-HMM-Aided Polar-Domain Simultaneous Orthogonal Matching Pursuit (VR-HMM-P-SOMP). The method extends a greedy sparse recovery framework by integrating VR estimation through a hidden Markov model (HMM), using a novel emission formulation and Viterbi decoding. This allows the algorithm to adaptively mask steering vectors and account for spatial non-stationarity at the antenna level. Simulation results demonstrate that the proposed method improves estimation accuracy compared to existing techniques, particularly in low-SNR and sparse scenarios, while maintaining low computational complexity. The algorithm is robust across a range of design parameters and channel conditions, offering a practical solution for ELAA systems.
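The role of the HMM can be illustrated with a two-state Viterbi decoder that runs along the antenna index. In this sketch, which is an assumption rather than the paper's emission formulation, each antenna is either visible (1) or not visible (0), the observation is the antenna's normalized received power with a Gaussian emission around 1 or 0, and a "sticky" transition probability `p_stay` encodes that visibility regions are contiguous. The decoded binary mask is a simplification of the non-binary VR masks in the channel model; in a VR-aware SOMP it could be used to zero out the corresponding entries of the polar-domain steering vectors. The function name and default parameters are hypothetical.

```python
import math


def viterbi_visibility(power, mu_vis=1.0, mu_invis=0.0, sigma=0.3, p_stay=0.9):
    """Most likely visible/not-visible state per antenna (1 = visible) for a
    two-state HMM with Gaussian emissions on normalized per-antenna power."""
    means = (mu_invis, mu_vis)
    log_stay, log_switch = math.log(p_stay), math.log(1.0 - p_stay)

    def log_emit(x, s):
        # Gaussian log-likelihood up to a constant shared by both states
        return -((x - means[s]) ** 2) / (2.0 * sigma ** 2)

    # Uniform prior over the first antenna's state
    score = [math.log(0.5) + log_emit(power[0], s) for s in (0, 1)]
    back = []
    for x in power[1:]:
        new_score, ptr = [], []
        for s in (0, 1):
            cand = [score[p] + (log_stay if p == s else log_switch) for p in (0, 1)]
            best = max((0, 1), key=cand.__getitem__)
            new_score.append(cand[best] + log_emit(x, s))
            ptr.append(best)
        score = new_score
        back.append(ptr)
    # Backtrack from the best final state
    state = max((0, 1), key=score.__getitem__)
    path = [state]
    for ptr in reversed(back):
        state = ptr[state]
        path.append(state)
    return path[::-1]
```

The transition penalty is what distinguishes HMM decoding from per-antenna thresholding: a single weak antenna inside a visible region is smoothed over unless its evidence outweighs the cost of two state switches.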

CASH: Context-Aware Smart Handover for Reliable UAV Connectivity on Aerial Corridors

Aug 05, 2025

Abstract: Urban Air Mobility (UAM) envisions aerial corridors for Unmanned Aerial Vehicles (UAVs) to reduce ground traffic congestion by supporting 3D mobility, such as air taxis. A key challenge in these high-mobility aerial corridors is ensuring reliable connectivity, where frequent handovers can degrade network performance. To resolve this, we present a Context-Aware Smart Handover (CASH) protocol that uses a forward-looking scoring mechanism based on UAV trajectory to make proactive handover decisions. We evaluate the performance of the proposed CASH against existing handover protocols in a custom-built simulator. Results show that CASH reduces handover frequency by up to 78% while maintaining low outage probability. We then investigate the impact of base station density and safety margin on handover performance, where their optimal setups are empirically obtained to ensure reliable UAM communication.
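A forward-looking handover rule of this kind can be sketched as follows. Everything here is a hypothetical stand-in for the CASH scoring mechanism: a free-space link budget (30 dBm transmit power, -90 dBm noise, 2 GHz carrier), a score equal to the fraction of look-ahead trajectory waypoints at which a base station stays above an SNR threshold raised by a safety margin, and a rule that hands over only when another base station scores strictly higher than the serving one. The function names, link-budget numbers and the role given to `safety_margin_db` are assumptions.

```python
import math


def snr_db(bs, pos, tx_dbm=30.0, noise_dbm=-90.0, f_hz=2e9):
    """Hypothetical free-space link budget: SNR = P_tx - FSPL(d, f) - N (dB),
    with FSPL = 20 log10(d) + 20 log10(f) - 147.55 (d in m, f in Hz)."""
    d = max(math.dist(bs, pos), 1.0)
    fspl = 20 * math.log10(d) + 20 * math.log10(f_hz) - 147.55
    return tx_dbm - fspl - noise_dbm


def forward_score(bs, future_positions, threshold_db):
    """Fraction of the look-ahead waypoints at which bs stays above threshold_db."""
    covered = sum(snr_db(bs, p) >= threshold_db for p in future_positions)
    return covered / len(future_positions)


def choose_bs(serving, base_stations, future_positions, threshold_db, safety_margin_db=3.0):
    """Return the base station to use for the next leg of the trajectory."""
    thr = threshold_db + safety_margin_db
    scores = {bs: forward_score(bs, future_positions, thr) for bs in base_stations}
    scores.setdefault(serving, forward_score(serving, future_positions, thr))
    best = max(scores, key=scores.get)
    # Hand over only on a strict improvement; ties keep the serving BS
    return best if scores[best] > scores[serving] else serving
```

Keeping the serving cell on ties is what suppresses unnecessary (ping-pong) handovers in this sketch; a longer look-ahead window or a larger margin makes the rule more conservative.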

Analytical Modeling of Batteryless IoT Sensors Powered by Ambient Energy Harvesting

Jul 28, 2025

Abstract: This paper presents a comprehensive mathematical model to characterize the energy dynamics of batteryless IoT sensor nodes powered entirely by ambient energy harvesting. The model captures both the energy harvesting and consumption phases, explicitly incorporating power management tasks to enable precise estimation of device behavior across diverse environmental conditions. The proposed model is applicable to a wide range of IoT devices and supports intelligent power management units designed to maximize harvested energy under fluctuating environmental conditions. We validated our model against a prototype batteryless IoT node, conducting experiments under three distinct illumination scenarios. Results show a strong correlation between analytical and measured supercapacitor voltage profiles, confirming the proposed model's accuracy.
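The charge/discharge cycle of such a node can be sketched with the supercapacitor energy E = ½CV². This is a deliberately simplified stand-in for the paper's model: it assumes an ideal lossless capacitor, constant harvested power, constant load power while active, and a power management unit that switches the load on at `v_on` and off at `v_off` (hysteresis). With constant net power P, charging from V₁ to V₂ takes t = C(V₂² - V₁²)/(2P); for C = 0.1 F, V₁ = 2 V, V₂ = 3 V and P = 1 mW this gives 0.25 J / 1 mW = 250 s. The function names and parameter values are hypothetical.

```python
import math


def charge_time(capacitance, v_start, v_target, p_harvest):
    """Time (s) to charge an ideal capacitor from v_start to v_target at constant power p_harvest (W)."""
    return capacitance * (v_target ** 2 - v_start ** 2) / (2.0 * p_harvest)


def simulate(capacitance, p_harvest, p_active, v_on, v_off, dt=0.1, t_end=1000.0):
    """Time-stepped simulation of the capacitor energy; returns (t, V, active) samples."""
    energy, active, t, trace = 0.0, False, 0.0, []
    while t < t_end:
        p_net = p_harvest - (p_active if active else 0.0)
        energy = max(energy + p_net * dt, 0.0)  # E = 1/2 C V^2 cannot go negative
        v = math.sqrt(2.0 * energy / capacitance)
        if not active and v >= v_on:
            active = True   # PMU connects the load once the turn-on threshold is reached
        elif active and v <= v_off:
            active = False  # PMU disconnects the load at the turn-off threshold
        trace.append((t, v, active))
        t += dt
    return trace
```

With 1 mW harvested and a 5 mW load, the sketch charges from 0 V to 3 V in 450 s, runs the load for about 62.5 s until the voltage falls to 2 V, and then needs 250 s to recharge, producing the sawtooth voltage profile typical of batteryless nodes.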
