Shamik Sarkar

RadYOLOLet: Radar Detection and Parameter Estimation Using YOLO and WaveLet

Sep 21, 2023

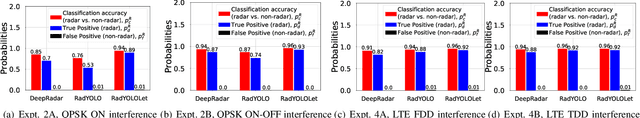

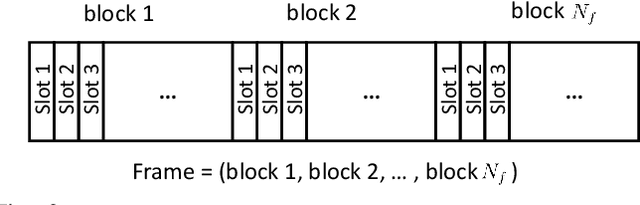

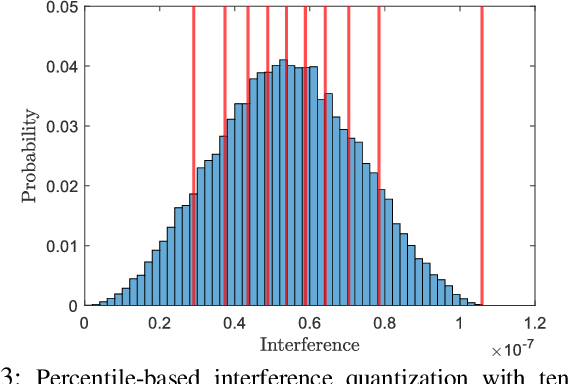

Abstract: Detection of radar signals without assistance from the radar transmitter is a crucial requirement for emerging and future shared-spectrum wireless networks like Citizens Broadband Radio Service (CBRS). In this paper, we propose a supervised deep learning-based spectrum sensing approach called RadYOLOLet that can detect low-power radar signals in the presence of interference and estimate the radar signal parameters. The core of RadYOLOLet is two different convolutional neural networks (CNNs), RadYOLO and Wavelet-CNN, that are trained independently. RadYOLO operates on spectrograms and provides most of the capabilities of RadYOLOLet. However, it suffers from low radar detection accuracy in the low signal-to-noise ratio (SNR) regime. We develop Wavelet-CNN specifically to deal with this limitation of RadYOLO. Wavelet-CNN operates on the continuous wavelet transform of the captured signals, and we use it only when RadYOLO fails to detect any radar signal. We thoroughly evaluate RadYOLOLet using different experiments corresponding to different types of interference signals. Based on our evaluations, we find that RadYOLOLet can achieve 100% radar detection accuracy for our considered radar types up to 16 dB SNR, which cannot be guaranteed by other comparable methods. RadYOLOLet can also function accurately under interference up to 16 dB SINR.
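The two-stage decision described in this abstract (run RadYOLO first, and consult Wavelet-CNN only when RadYOLO detects nothing) can be sketched as a simple cascade. This is a minimal illustration, not the authors' implementation: the function names, the return format, and the toy threshold detectors standing in for the two CNNs are all assumptions. The abstract does not say whether the second stage also estimates parameters, so the sketch returns none in that case.

```python
from typing import Callable, Optional, Sequence

# Hypothetical interfaces, for illustration only:
#   primary(samples)  -> dict of estimated radar parameters, or None if nothing is detected
#   fallback(samples) -> True if a radar is judged present, else False
Primary = Callable[[Sequence[float]], Optional[dict]]
Fallback = Callable[[Sequence[float]], bool]


def cascade_detect(samples: Sequence[float], primary: Primary, fallback: Fallback) -> dict:
    """Run the primary detector; consult the fallback only when it finds nothing."""
    params = primary(samples)
    if params is not None:
        return {"radar_present": True, "stage": "primary", "params": params}
    # The primary stage found nothing, so defer to the low-SNR stage.
    present = fallback(samples)
    return {"radar_present": present, "stage": "fallback", "params": None}


# Toy stand-ins for the two CNNs (assumed): simple peak and energy thresholds.
def toy_primary(samples):
    peak = max(abs(x) for x in samples)
    return {"peak": peak} if peak > 1.0 else None


def toy_fallback(samples):
    energy = sum(x * x for x in samples) / len(samples)
    return energy > 0.1
```

Keeping the fallback behind the primary stage means the second network only runs on captures the first one rejects, which matches the "only when RadYOLO fails" wording.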

REM-U-net: Deep Learning Based Agile REM Prediction with Energy-Efficient Cell-Free Use Case

Sep 21, 2023

Abstract: Radio environment maps (REMs) hold a central role in optimizing wireless network deployment, enhancing network performance, and ensuring effective spectrum management. Conventional REM prediction methods are either excessively time-consuming, e.g., ray tracing, or inaccurate, e.g., statistical models, limiting their adoption in modern inherently dynamic wireless networks. Deep-learning-based REM prediction has recently attracted considerable attention as an appealing, accurate, and time-efficient alternative. However, existing works on REM prediction using deep learning are either confined to 2D maps or use a limited dataset. In this paper, we introduce a runtime-efficient REM prediction framework based on u-nets, trained on a large-scale 3D maps dataset. In addition, data preprocessing steps are investigated to further refine the REM prediction accuracy. The proposed u-net framework, along with the preprocessing steps, is evaluated in the context of the 2023 IEEE ICASSP Signal Processing Grand Challenge, namely, the First Pathloss Radio Map Prediction Challenge. The evaluation results demonstrate that the proposed method achieves an average normalized root-mean-square error (RMSE) of 0.045 with an average runtime of 14 milliseconds (ms). Finally, we position our achieved REM prediction accuracy in the context of a relevant cell-free massive multiple-input multiple-output (CF-mMIMO) use case. We demonstrate that one can avoid spending energy on large-scale fading measurements and rely on the predicted REM instead to decide which sleeping access points (APs) to switch on in a CF-mMIMO network that adopts a minimum propagation loss AP switch ON/OFF strategy.
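The minimum propagation loss switch-on rule mentioned at the end of this abstract can be illustrated with a short sketch: given a predicted loss (read from the REM) between a user and each sleeping AP, wake the AP or APs with the smallest loss. The function name, the dictionary layout, and the parameter `k` are assumptions for illustration; the abstract does not specify how many APs are woken or how the REM is queried.

```python
from typing import Dict, List


def select_aps_to_wake(
    predicted_loss_db: Dict[str, float],  # assumed: AP id -> predicted propagation loss in dB
    sleeping: List[str],                  # assumed: ids of APs currently switched off
    k: int = 1,                           # assumed: number of APs to switch on
) -> List[str]:
    """Return the k sleeping APs with the smallest predicted propagation loss."""
    candidates = [ap for ap in sleeping if ap in predicted_loss_db]
    return sorted(candidates, key=lambda ap: predicted_loss_db[ap])[:k]


# Example with made-up loss values.
losses = {"ap1": 110.0, "ap2": 95.5, "ap3": 102.0}
chosen = select_aps_to_wake(losses, ["ap1", "ap2", "ap3"], k=2)
```

Because the ranking uses predicted rather than measured loss, no AP has to be powered on just to take a large-scale fading measurement.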

ProSpire: Proactive Spatial Prediction of Radio Environment Using Deep Learning

Aug 20, 2023

Abstract: Spatial prediction of the radio propagation environment of a transmitter can assist and improve various aspects of wireless networks. The majority of research in this domain can be categorized as 'reactive' spatial prediction, where the predictions are made based on a small set of measurements from an active transmitter whose radio environment is to be predicted. Emerging spectrum-sharing paradigms would benefit from 'proactive' spatial prediction of the radio environment, where the spatial predictions must be done for a transmitter for which no measurement has been collected. This paper proposes a novel, supervised deep learning-based framework, ProSpire, that enables spectrum sharing by leveraging the idea of proactive spatial prediction. We carefully address several challenges in ProSpire, such as designing a framework that conveniently collects training data for learning, performing the predictions in a fast manner, enabling operations without an area map, and ensuring that the predictions do not lead to undesired interference. ProSpire relies on the crowdsourcing of transmitters and receivers during their normal operations to address some of the aforementioned challenges. The core component of ProSpire is a deep learning-based image-to-image translation method, which we call RSSu-net. We generate several diverse datasets using ray tracing software and numerically evaluate ProSpire. Our evaluations show that RSSu-net performs reasonably well in terms of signal strength prediction, 5 dB mean absolute error, which is comparable to the average error of other relevant methods. Importantly, due to the merits of RSSu-net, ProSpire creates proactive boundaries around transmitters such that they can be activated with 97% probability of not causing interference. In this regard, the performance of RSSu-net is 19% better than that of other comparable methods.
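One plausible way to turn predicted signal strength into an activation decision is to require the prediction at every protected location to stay below an interference threshold by a safety margin. This is a hedged sketch only: the abstract does not describe how ProSpire builds its boundaries, and the function name, the threshold, and the 5 dB default margin (chosen here simply to echo the reported mean absolute error) are assumptions.

```python
from typing import Iterable


def may_activate(
    predicted_rss_dbm: Iterable[float],  # assumed: predicted RSS at each protected location
    threshold_dbm: float,                # assumed: maximum tolerable interference level
    margin_db: float = 5.0,              # assumed: safety margin covering prediction error
) -> bool:
    """Allow activation only if every prediction plus the margin stays at or below the threshold."""
    return all(rss + margin_db <= threshold_dbm for rss in predicted_rss_dbm)
```

A larger margin makes activation more conservative: fewer transmitters are allowed on, but a prediction error is less likely to cause interference.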

GAN-RXA: A Practical Scalable Solution to Receiver-Agnostic Transmitter Fingerprinting

Mar 25, 2023

Abstract: Radio frequency fingerprinting has been proposed for device identification. However, experimental studies have also demonstrated its sensitivity to deployment changes. Recent works have addressed channel impacts by developing robust algorithms accounting for time and location variability, but the impacts of receiver impairments on transmitter fingerprints are yet to be solved. In this work, we investigate the receiver-agnostic transmitter fingerprinting problem and propose a novel two-stage supervised learning framework (RXA) to address it. In the first stage, our approach calibrates a receiver-agnostic transmitter feature-extractor. We also propose two deep-learning approaches (SD-RXA and GAN-RXA) in this first stage to improve the receiver-agnostic property of the RXA framework. In the second stage, the calibrated feature-extractor is utilized to train a transmitter classifier with only one receiver. We evaluate the proposed approaches on the transmitter identification problem using a large-scale WiFi dataset. We show that when a trained transmitter-classifier is deployed on new receivers, the RXA framework can improve the classification accuracy by 19.5%, and the outlier detection rate by 10.0% compared to a naive approach without calibration. Moreover, GAN-RXA can further increase the closed-set classification accuracy by 5.0%, and the outlier detection rate by 7.5% compared to the RXA approach.

A Q-Learning-based Approach for Distributed Beam Scheduling in mmWave Networks

Oct 17, 2021

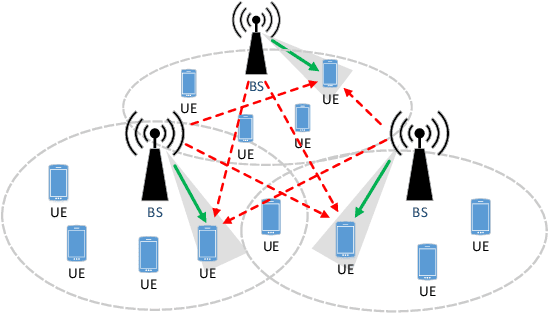

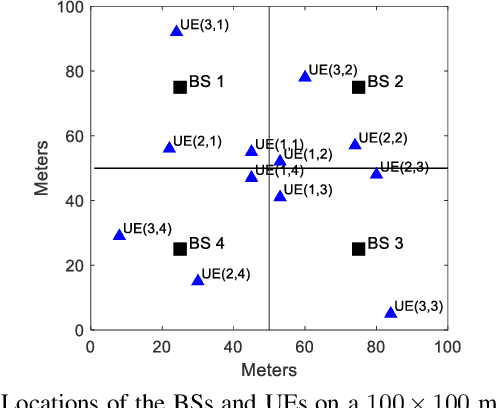

Abstract: We consider the problem of distributed downlink beam scheduling and power allocation for millimeter-Wave (mmWave) cellular networks where multiple base stations (BSs) belonging to different service operators share the same unlicensed spectrum with no central coordination or cooperation among them. Our goal is to design efficient distributed beam scheduling and power allocation algorithms such that the network-level payoff, defined as the weighted sum of the total throughput and a power penalization term, can be maximized. To this end, we propose a distributed scheduling approach to power allocation and adaptation for efficient interference management over the shared spectrum by modeling each BS as an independent Q-learning agent. As a baseline, we compare the proposed approach to the state-of-the-art non-cooperative game-based approach which was previously developed for the same problem. We conduct extensive experiments under various scenarios to verify the effect of multiple factors on the performance of both approaches. Experimental results show that the proposed approach adapts well to different interference situations by learning from experience and can achieve a higher payoff than the game-based approach. The proposed approach can also be integrated into our previously developed Lyapunov stochastic optimization framework for the purpose of network utility maximization with an optimality guarantee. As a result, the weights in the payoff function can be automatically and optimally determined by the virtual queue values from the sub-problems derived from the Lyapunov optimization framework.
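To make "each BS as an independent Q-learning agent" concrete, the following is a minimal tabular Q-learning sketch with a payoff of the form described above (weighted throughput minus a weighted power penalty). It is not the paper's algorithm: the state and action encodings, the weights `w_rate` and `w_power`, and the hyperparameters are all assumed for illustration. Only the standard Q-learning update rule is used.

```python
import random
from collections import defaultdict


def payoff(throughput: float, power: float, w_rate: float = 1.0, w_power: float = 0.1) -> float:
    """Weighted throughput minus a weighted power penalty (weights are assumed values)."""
    return w_rate * throughput - w_power * power


class QAgent:
    """One independent tabular Q-learning agent, e.g. one per base station."""

    def __init__(self, actions, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
        self.actions = list(actions)      # assumed: discrete power levels or beam choices
        self.alpha = alpha                # learning rate
        self.gamma = gamma                # discount factor
        self.epsilon = epsilon            # exploration probability
        self.q = defaultdict(float)       # maps (state, action) -> estimated value
        self.rng = random.Random(seed)

    def act(self, state):
        """Epsilon-greedy action selection."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.q[(state, a)])

    def update(self, state, action, reward, next_state):
        """Standard update: Q += alpha * (r + gamma * max_a' Q(s', a') - Q)."""
        best_next = max(self.q[(next_state, a)] for a in self.actions)
        target = reward + self.gamma * best_next
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])
```

Each agent learns only from its own observed payoff, so no message exchange between base stations is needed, which fits the no-coordination setting of the abstract.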
