Chenyang Shao

Diffuse Thinking: Exploring Diffusion Language Models as Efficient Thought Proposers for Reasoning

Oct 31, 2025

Abstract:In recent years, large language models (LLMs) have witnessed remarkable advancements, with the test-time scaling law consistently enhancing the reasoning capabilities. Through systematic evaluation and exploration of a diverse spectrum of intermediate thoughts, LLMs demonstrate the potential to generate deliberate reasoning steps, thereby substantially enhancing reasoning accuracy. However, LLMs' autoregressive generation paradigm results in reasoning performance scaling sub-optimally with test-time computation, often requiring excessive computational overhead to propose thoughts while yielding only marginal performance gains. In contrast, diffusion language models (DLMs) can efficiently produce diverse samples through parallel denoising in a single forward pass, inspiring us to leverage them for proposing intermediate thoughts, thereby alleviating the computational burden associated with autoregressive generation while maintaining quality. In this work, we propose an efficient collaborative reasoning framework, leveraging DLMs to generate candidate thoughts and LLMs to evaluate their quality. Experiments across diverse benchmarks demonstrate that our framework achieves strong performance in complex reasoning tasks, offering a promising direction for future research. Our code is open-source at https://anonymous.4open.science/r/Diffuse-Thinking-EC60.

Simulating Generative Social Agents via Theory-Informed Workflow Design

Aug 12, 2025

Abstract:Recent advances in large language models have demonstrated strong reasoning and role-playing capabilities, opening new opportunities for agent-based social simulations. However, most existing agents' implementations are scenario-tailored, without a unified framework to guide the design. This lack of a general social agent limits their ability to generalize across different social contexts and to produce consistent, realistic behaviors. To address this challenge, we propose a theory-informed framework that provides a systematic design process for LLM-based social agents. Our framework is grounded in principles from Social Cognition Theory and introduces three key modules: motivation, action planning, and learning. These modules jointly enable agents to reason about their goals, plan coherent actions, and adapt their behavior over time, leading to more flexible and contextually appropriate responses. Comprehensive experiments demonstrate that our theory-driven agents reproduce realistic human behavior patterns under complex conditions, achieving up to 75% lower deviation from real-world behavioral data across multiple fidelity metrics compared to classical generative baselines. Ablation studies further show that removing motivation, planning, or learning modules increases errors by 1.5 to 3.2 times, confirming their distinct and essential contributions to generating realistic and coherent social behaviors.

Route-and-Reason: Scaling Large Language Model Reasoning with Reinforced Model Router

Jun 06, 2025

Abstract:Multi-step reasoning has proven essential for enhancing the problem-solving capabilities of Large Language Models (LLMs) by decomposing complex tasks into intermediate steps, either explicitly or implicitly. Extending the reasoning chain at test time, through deeper thought processes or broader exploration, can further improve performance, but often incurs substantial costs due to the explosion in token usage. Yet, many reasoning steps are relatively simple and can be handled by more efficient smaller-scale language models (SLMs). This motivates hybrid approaches that allocate subtasks across models of varying capacities. However, realizing such collaboration requires accurate task decomposition and difficulty-aware subtask allocation, which is challenging. To address this, we propose R2-Reasoner, a novel framework that enables collaborative reasoning across heterogeneous LLMs by dynamically routing subtasks based on estimated complexity. At the core of our framework is a Reinforced Model Router, composed of a task decomposer and a subtask allocator. The task decomposer segments complex input queries into logically ordered subtasks, while the subtask allocator assigns each subtask to the most appropriate model, ranging from lightweight SLMs to powerful LLMs, balancing accuracy and efficiency. To train this router, we introduce a staged pipeline that combines supervised fine-tuning on task-specific datasets with the Group Relative Policy Optimization algorithm, enabling self-supervised refinement through iterative reinforcement learning. Extensive experiments across four challenging benchmarks demonstrate that R2-Reasoner reduces API costs by 86.85% while maintaining or surpassing baseline accuracy. Our framework paves the way for more cost-effective and adaptive LLM reasoning. The code is open-source at https://anonymous.4open.science/r/R2_Reasoner .

Division-of-Thoughts: Harnessing Hybrid Language Model Synergy for Efficient On-Device Agents

Feb 06, 2025

Abstract:The rapid expansion of web content has made on-device AI assistants indispensable for helping users manage the increasing complexity of online tasks. The emergent reasoning ability in large language models offers a promising path for next-generation on-device AI agents. However, deploying full-scale Large Language Models (LLMs) on resource-limited local devices is challenging. In this paper, we propose Division-of-Thoughts (DoT), a collaborative reasoning framework leveraging the synergy between locally deployed Smaller-scale Language Models (SLMs) and cloud-based LLMs. DoT leverages a Task Decomposer to elicit the inherent planning abilities in language models to decompose user queries into smaller sub-tasks, which allows hybrid language models to fully exploit their respective strengths. Besides, DoT employs a Task Scheduler to analyze the pair-wise dependency of sub-tasks and create a dependency graph, facilitating parallel reasoning of sub-tasks and the identification of key steps. To allocate the appropriate model based on the difficulty of sub-tasks, DoT leverages a Plug-and-Play Adapter, which is an additional task head attached to the SLM that does not alter the SLM's parameters. To boost the adapter's task allocation capability, we propose a self-reinforced training method that relies solely on task execution feedback. Extensive experiments on various benchmarks demonstrate that our DoT significantly reduces LLM costs while maintaining competitive reasoning accuracy. Specifically, DoT reduces the average reasoning time and API costs by 66.12% and 83.57%, respectively, while achieving reasoning accuracy comparable to the best baseline methods.
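The idea of using a dependency graph to run sub-tasks in parallel can be illustrated with a minimal sketch. This is not the DoT implementation: the function name `parallel_levels`, the dictionary input format, and the sub-task names are all hypothetical, and the grouping is a standard topological layering (Kahn's algorithm), assumed here only as one plausible way to schedule independent sub-tasks together.

```python
from collections import defaultdict


def parallel_levels(deps):
    """Group sub-tasks into levels that could run in parallel.

    `deps` maps each sub-task name to the list of sub-tasks it depends on
    (a hypothetical input format, not taken from the paper).
    """
    # Every dependency must itself be a known sub-task.
    for task, parents in deps.items():
        for parent in parents:
            if parent not in deps:
                raise ValueError(f"unknown dependency {parent!r} of {task!r}")

    indegree = {task: len(set(parents)) for task, parents in deps.items()}
    children = defaultdict(list)
    for task, parents in deps.items():
        for parent in set(parents):
            children[parent].append(task)

    # Sub-tasks with no unmet dependencies form the first level.
    ready = sorted(task for task, degree in indegree.items() if degree == 0)
    levels = []
    scheduled = 0
    while ready:
        levels.append(ready)
        scheduled += len(ready)
        next_ready = []
        for task in ready:
            for child in children[task]:
                indegree[child] -= 1
                if indegree[child] == 0:
                    next_ready.append(child)
        ready = sorted(next_ready)

    if scheduled != len(deps):
        raise ValueError("dependency graph contains a cycle")
    return levels


# Hypothetical sub-tasks for a single user query.
deps = {
    "parse_query": [],
    "lookup_a": ["parse_query"],
    "lookup_b": ["parse_query"],
    "combine": ["lookup_a", "lookup_b"],
}
levels = parallel_levels(deps)
```

In this toy example the two lookups land in the same level, so they could be dispatched concurrently, while `combine` waits for both.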

Towards Large Reasoning Models: A Survey on Scaling LLM Reasoning Capabilities

Jan 17, 2025

Abstract:Language has long been conceived as an essential tool for human reasoning. The breakthrough of Large Language Models (LLMs) has sparked significant research interest in leveraging these models to tackle complex reasoning tasks. Researchers have moved beyond simple autoregressive token generation by introducing the concept of "thought" -- a sequence of tokens representing intermediate steps in the reasoning process. This innovative paradigm enables LLMs to mimic complex human reasoning processes, such as tree search and reflective thinking. Recently, an emerging trend of learning to reason has applied reinforcement learning (RL) to train LLMs to master reasoning processes. This approach enables the automatic generation of high-quality reasoning trajectories through trial-and-error search algorithms, significantly expanding LLMs' reasoning capacity by providing substantially more training data. Furthermore, recent studies demonstrate that encouraging LLMs to "think" with more tokens during test-time inference can significantly boost reasoning accuracy. Therefore, train-time and test-time scaling combine to show a new research frontier -- a path toward Large Reasoning Models. The introduction of OpenAI's o1 series marks a significant milestone in this research direction. In this survey, we present a comprehensive review of recent progress in LLM reasoning. We begin by introducing the foundational background of LLMs and then explore the key technical components driving the development of large reasoning models, with a focus on automated data construction, learning-to-reason techniques, and test-time scaling. We also analyze popular open-source projects aimed at building large reasoning models, and conclude with open challenges and future research directions.

Towards Large Reasoning Models: A Survey of Reinforced Reasoning with Large Language Models

Jan 16, 2025

Abstract:Language has long been conceived as an essential tool for human reasoning. The breakthrough of Large Language Models (LLMs) has sparked significant research interest in leveraging these models to tackle complex reasoning tasks. Researchers have moved beyond simple autoregressive token generation by introducing the concept of "thought" -- a sequence of tokens representing intermediate steps in the reasoning process. This innovative paradigm enables LLMs to mimic complex human reasoning processes, such as tree search and reflective thinking. Recently, an emerging trend of learning to reason has applied reinforcement learning (RL) to train LLMs to master reasoning processes. This approach enables the automatic generation of high-quality reasoning trajectories through trial-and-error search algorithms, significantly expanding LLMs' reasoning capacity by providing substantially more training data. Furthermore, recent studies demonstrate that encouraging LLMs to "think" with more tokens during test-time inference can significantly boost reasoning accuracy. Therefore, train-time and test-time scaling combine to show a new research frontier -- a path toward Large Reasoning Models. The introduction of OpenAI's o1 series marks a significant milestone in this research direction. In this survey, we present a comprehensive review of recent progress in LLM reasoning. We begin by introducing the foundational background of LLMs and then explore the key technical components driving the development of large reasoning models, with a focus on automated data construction, learning-to-reason techniques, and test-time scaling. We also analyze popular open-source projects aimed at building large reasoning models, and conclude with open challenges and future research directions.

A Generative Pre-Training Framework for Spatio-Temporal Graph Transfer Learning

Feb 20, 2024

Abstract:Spatio-temporal graph (STG) learning is foundational for smart city applications, yet it is often hindered by data scarcity in many cities and regions. To bridge this gap, we propose a novel generative pre-training framework, GPDiff, for STG transfer learning. Unlike conventional approaches that heavily rely on common feature extraction or intricate transfer learning designs, our solution takes a novel approach by performing generative pre-training on a collection of model parameters optimized with data from source cities. We recast STG transfer learning as pre-training a generative hypernetwork, which generates tailored model parameters guided by prompts, allowing for adaptability to diverse data distributions and city-specific characteristics. GPDiff employs a diffusion model with a transformer-based denoising network, which is model-agnostic to integrate with powerful STG models. By addressing challenges arising from data gaps and the complexity of generalizing knowledge across cities, our framework consistently outperforms state-of-the-art baselines on multiple real-world datasets for tasks such as traffic speed prediction and crowd flow prediction. The implementation of our approach is available: https://github.com/PLUTO-SCY/GPDiff.

Beyond Imitation: Generating Human Mobility from Context-aware Reasoning with Large Language Models

Feb 15, 2024

Abstract:Human mobility behaviours are closely linked to various important societal problems such as traffic congestion and epidemic control. However, collecting mobility data can be prohibitively expensive and involves serious privacy issues, posing a pressing need for high-quality generative mobility models. Previous efforts focus on learning the behaviour distribution from training samples, and generate new mobility data by sampling from the learned distributions. They cannot effectively capture the coherent intentions that drive mobility behaviour, leading to low sample efficiency and poor semantic-awareness. Inspired by the emergent reasoning ability in LLMs, we propose a radical perspective shift that reformulates mobility generation as a commonsense reasoning problem. In this paper, we design a novel Mobility Generation as Reasoning (MobiGeaR) framework that prompts an LLM to recursively generate mobility behaviour. Specifically, we design a context-aware chain-of-thoughts prompting technique to align LLMs with context-aware mobility behaviour by few-shot in-context learning. Besides, MobiGeaR employs a divide-and-coordinate mechanism to exploit the synergistic effect between LLM reasoning and a mechanistic gravity model. It leverages the step-by-step LLM reasoning to recursively generate a temporal template of activity intentions, which are then mapped to physical locations with a mechanistic gravity model. Experiments on two real-world datasets show MobiGeaR achieves state-of-the-art performance across all metrics, and substantially reduces the size of training samples at the same time. MobiGeaR also significantly improves the semantic-awareness of mobility generation, improving the intention accuracy by 62.23%, and the generated mobility data is proven effective in boosting the performance of downstream applications. The implementation of our approach is available in the paper.

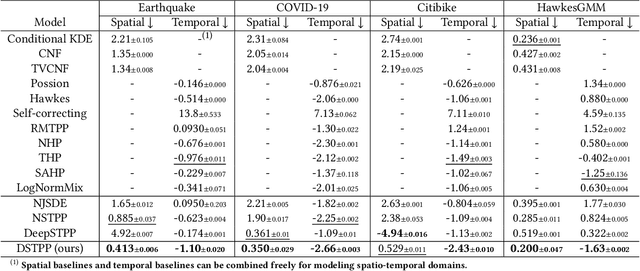

Spatio-temporal Diffusion Point Processes

May 21, 2023

Abstract:A spatio-temporal point process (STPP) is a stochastic collection of events associated with time and space. Due to computational complexities, existing solutions for STPPs compromise by assuming conditional independence between time and space, modeling the temporal and spatial distributions separately. The failure to model the joint distribution leads to limited capacities in characterizing the spatio-temporal entangled interactions given past events. In this work, we propose a novel parameterization framework for STPPs, which leverages diffusion models to learn complex spatio-temporal joint distributions. We decompose the learning of the target joint distribution into multiple steps, where each step can be faithfully described by a Gaussian distribution. To enhance the learning of each step, an elaborated spatio-temporal co-attention module is proposed to capture the interdependence between the event time and space adaptively. For the first time, we break the restrictions on spatio-temporal dependencies in existing solutions, and enable a flexible and accurate modeling paradigm for STPPs. Extensive experiments from a wide range of fields, such as epidemiology, seismology, crime, and urban mobility, demonstrate that our framework outperforms the state-of-the-art baselines remarkably, with an average improvement of over 50%. Further in-depth analyses validate its ability to capture spatio-temporal interactions, which can learn adaptively for different scenarios. The datasets and source code are available online: https://github.com/tsinghua-fib-lab/Spatio-temporal-Diffusion-Point-Processes.
