Carsten Steger

The MVTec AD 2 Dataset: Advanced Scenarios for Unsupervised Anomaly Detection

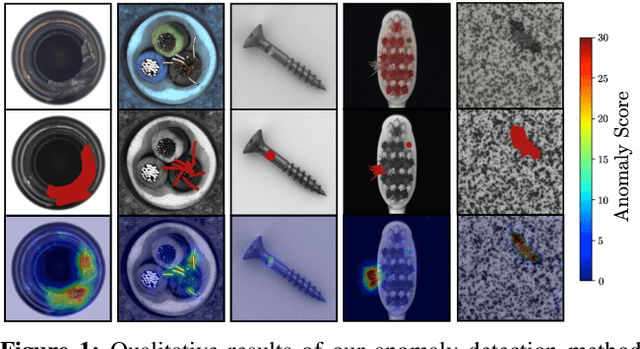

Mar 27, 2025

Abstract: In recent years, performance on existing anomaly detection benchmarks like MVTec AD and VisA has started to saturate in terms of segmentation AU-PRO, with state-of-the-art models often separated by less than one percentage point. This lack of discriminatory power prevents a meaningful comparison of models and thus hinders progress in the field, especially when considering the inherent stochastic nature of machine learning results. We present MVTec AD 2, a collection of eight anomaly detection scenarios with more than 8000 high-resolution images. It comprises challenging and highly relevant industrial inspection use cases that have not been considered in previous datasets, including transparent and overlapping objects, dark-field and back light illumination, objects with high variance in the normal data, and extremely small defects. We provide comprehensive evaluations of state-of-the-art methods and show that their performance remains below 60% average AU-PRO. Additionally, our dataset provides test scenarios with lighting condition changes to assess the robustness of methods under real-world distribution shifts. We host a publicly accessible evaluation server that holds the pixel-precise ground truth of the test set (https://benchmark.mvtec.com/). All image data is available at https://www.mvtec.com/company/research/datasets/mvtec-ad-2.

The MVTec 3D-AD Dataset for Unsupervised 3D Anomaly Detection and Localization

Dec 16, 2021

Abstract: We introduce the first comprehensive 3D dataset for the task of unsupervised anomaly detection and localization. It is inspired by real-world visual inspection scenarios in which a model has to detect various types of defects on manufactured products, even if it is trained only on anomaly-free data. Some defects manifest themselves as anomalies in the geometric structure of an object and therefore cause significant deviations in a 3D representation of the data. We employed a high-resolution industrial 3D sensor to acquire depth scans of 10 different object categories. For all object categories, we provide a training and a validation set, each of which consists solely of scans of anomaly-free samples. The corresponding test sets contain samples showing various defects such as scratches, dents, holes, contaminations, or deformations. Precise ground-truth annotations are provided for every anomalous test sample. An initial benchmark of 3D anomaly detection methods on our dataset indicates considerable room for improvement.

Uninformed Students: Student-Teacher Anomaly Detection with Discriminative Latent Embeddings

Nov 06, 2019

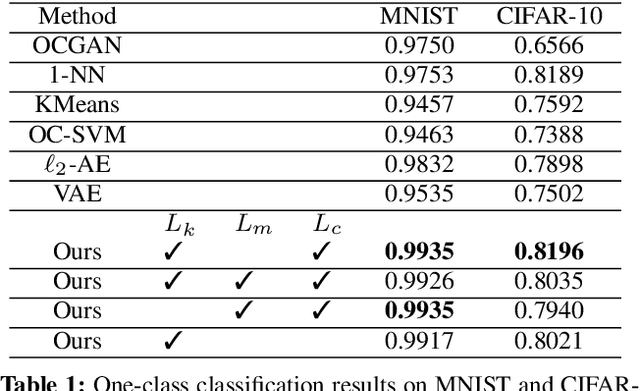

Abstract: We introduce a simple yet powerful student-teacher framework for the challenging problem of unsupervised anomaly detection and pixel-precise anomaly segmentation in high-resolution images. To circumvent the need for prior data labeling, student networks are trained to regress the output of a descriptive teacher network that was pretrained on a large dataset of patches from natural images. Anomalies are detected when the student networks fail to generalize outside the manifold of anomaly-free training data, i.e., when the outputs of the student networks differ from that of the teacher network. Additionally, the intrinsic uncertainty in the student networks can be used as a scoring function that indicates anomalies. We compare our method to a large number of existing deep-learning-based methods for unsupervised anomaly detection. Our experiments demonstrate improvements over state-of-the-art methods on a number of real-world datasets, including the recently introduced MVTec Anomaly Detection dataset that was specifically designed to benchmark anomaly segmentation algorithms.
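The two scoring signals named in the abstract can be illustrated with a minimal NumPy sketch. It assumes the teacher and an ensemble of students each produce a dense descriptor map; the function name `anomaly_maps`, the array shapes, and the toy data are hypothetical and not taken from the paper. The regression error is computed here as the squared distance between the ensemble mean and the teacher output, and the uncertainty as the variance across students. How the paper normalizes and combines these scores is not reproduced.

```python
import numpy as np

def anomaly_maps(teacher_out, student_outs):
    """Return (regression_error, uncertainty) maps of shape (H, W).

    teacher_out:  (H, W, D) descriptor map of the teacher (assumed layout).
    student_outs: (M, H, W, D) descriptor maps of M students (assumed layout).
    """
    teacher_out = np.asarray(teacher_out, dtype=float)
    student_outs = np.asarray(student_outs, dtype=float)
    # Regression error: squared distance of the ensemble mean to the teacher.
    mean_student = student_outs.mean(axis=0)
    regression_error = ((mean_student - teacher_out) ** 2).sum(axis=-1)
    # Uncertainty: disagreement among students, summed over descriptor dims.
    uncertainty = student_outs.var(axis=0).sum(axis=-1)
    return regression_error, uncertainty

# Toy data: students reproduce the teacher everywhere except at pixel (2, 1),
# where one student deviates, mimicking a failure to generalize.
rng = np.random.default_rng(0)
teacher = rng.normal(size=(4, 4, 8))
students = np.repeat(teacher[None], 3, axis=0)
students[0, 2, 1] += 1.0

err, unc = anomaly_maps(teacher, students)
peak_err = tuple(int(i) for i in np.unravel_index(err.argmax(), err.shape))
peak_unc = tuple(int(i) for i in np.unravel_index(unc.argmax(), unc.shape))
print(peak_err, peak_unc)
```

In this toy setup both maps peak at the pixel where the students stop agreeing with the teacher and with each other, which is the behavior the abstract relies on for anomalous regions.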

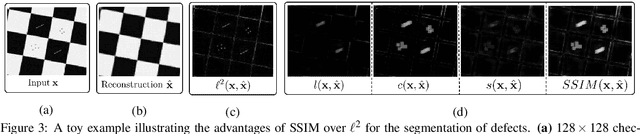

Improving Unsupervised Defect Segmentation by Applying Structural Similarity to Autoencoders

Oct 20, 2018

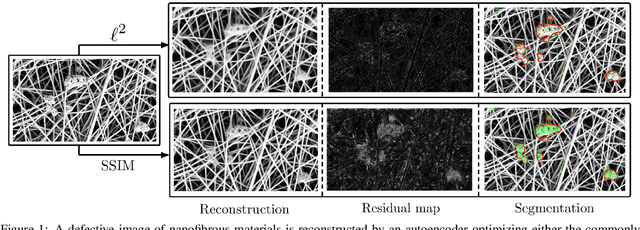

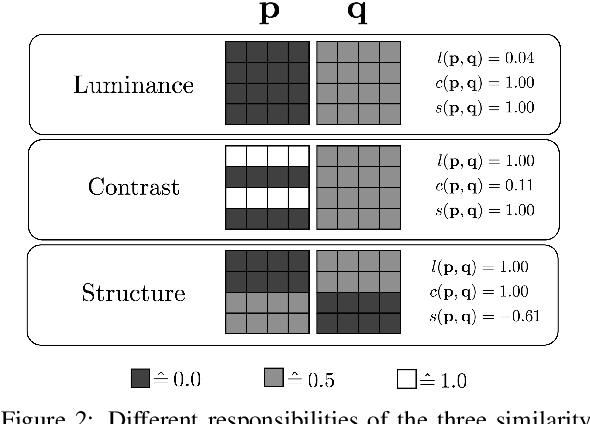

Abstract: Convolutional autoencoders have emerged as popular methods for unsupervised defect segmentation on image data. Most commonly, this task is performed by thresholding a pixel-wise reconstruction error based on an $\ell^p$ distance. This procedure, however, leads to large residuals whenever the reconstruction encompasses slight localization inaccuracies around edges. It also fails to reveal defective regions that have been visually altered when intensity values stay roughly consistent. We show that these problems prevent these approaches from being applied to complex real-world scenarios and that they cannot be easily avoided by employing more elaborate architectures such as variational or feature matching autoencoders. We propose to use a perceptual loss function based on structural similarity which examines inter-dependencies between local image regions, taking into account luminance, contrast, and structural information, instead of simply comparing single pixel values. It achieves significant performance gains on a challenging real-world dataset of nanofibrous materials and a novel dataset of two woven fabrics over the state-of-the-art approaches for unsupervised defect segmentation that use pixel-wise reconstruction error metrics.
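To make the contrast between a pixel-wise error and structural similarity concrete, the sketch below evaluates the standard SSIM index on a single patch and compares it with the mean squared error. It is an illustration under stated assumptions, not the authors' implementation: the function name `ssim_patch` is hypothetical, the patch is weighted uniformly, and the stabilizing constants use the commonly cited defaults of 0.01 and 0.03 times the dynamic range.

```python
import numpy as np

def ssim_patch(p, q, dynamic_range=1.0):
    """SSIM index of two equally sized patches with uniform weighting."""
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    c1 = (0.01 * dynamic_range) ** 2  # stabilizes the luminance term
    c2 = (0.03 * dynamic_range) ** 2  # stabilizes the contrast/structure term
    mu_p, mu_q = p.mean(), q.mean()
    var_p, var_q = p.var(), q.var()
    cov = ((p - mu_p) * (q - mu_q)).mean()
    return ((2 * mu_p * mu_q + c1) * (2 * cov + c2)) / (
        (mu_p ** 2 + mu_q ** 2 + c1) * (var_p + var_q + c2)
    )

# A faint checkerboard texture versus a flat patch of the same mean intensity:
# the texture is gone, but the intensity values stay roughly consistent.
checker = np.indices((8, 8)).sum(axis=0) % 2
textured = 0.45 + 0.1 * checker
flat = np.full((8, 8), 0.5)

mse = ((textured - flat) ** 2).mean()
ssim_same = ssim_patch(textured, textured)
ssim_diff = ssim_patch(textured, flat)
print(mse, ssim_same, ssim_diff)
```

The mean squared error between the two patches is small, so a threshold on it could easily miss the change, whereas the SSIM value drops well below the value of 1 obtained for identical patches.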

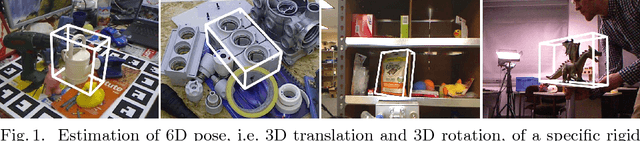

A Summary of the 4th International Workshop on Recovering 6D Object Pose

Oct 09, 2018

Abstract: This document summarizes the 4th International Workshop on Recovering 6D Object Pose, which was organized in conjunction with ECCV 2018 in Munich. The workshop featured four invited talks, oral and poster presentations of accepted workshop papers, and an introduction of the BOP benchmark for 6D object pose estimation. The workshop was attended by 100+ people working on relevant topics in both academia and industry, who shared up-to-date advances and discussed open problems.

Subpixel-Precise Tracking of Rigid Objects in Real-time

Jul 05, 2018

Abstract: We present a novel object tracking scheme that can track rigid objects in real time. The approach uses subpixel-precise image edges to track objects with high accuracy. It can determine the object position, scale, and rotation with subpixel precision at around 80 fps. The tracker returns a reliable score for each frame and is capable of self-diagnosing a tracking failure. Furthermore, the choice of the similarity measure makes the approach inherently robust against occlusion, clutter, and nonlinear illumination changes. We evaluate the method on sequences of rigid objects from the OTB-2015 and VOT2016 datasets and discuss its performance. The evaluation shows that the tracker is more accurate than state-of-the-art real-time trackers while being equally robust.
