Michael Fauser

Uninformed Students: Student-Teacher Anomaly Detection with Discriminative Latent Embeddings

Nov 06, 2019

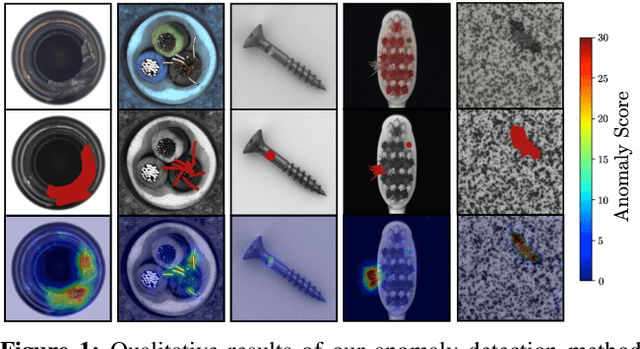

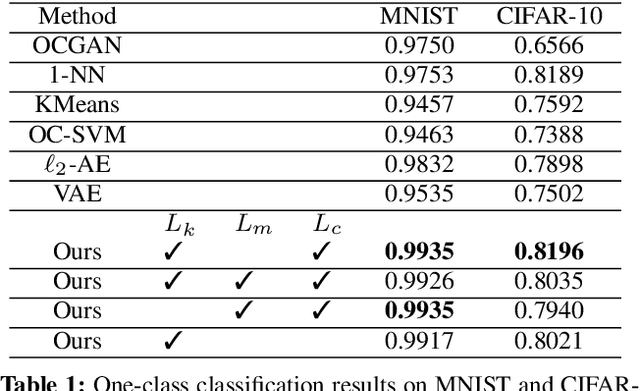

Abstract: We introduce a simple yet powerful student-teacher framework for the challenging problem of unsupervised anomaly detection and pixel-precise anomaly segmentation in high-resolution images. To circumvent the need for prior data labeling, student networks are trained to regress the output of a descriptive teacher network that was pretrained on a large dataset of patches from natural images. Anomalies are detected when the student networks fail to generalize outside the manifold of anomaly-free training data, i.e., when the output of the student networks differs from that of the teacher network. Additionally, the intrinsic uncertainty in the student networks can be used as a scoring function that indicates anomalies. We compare our method to a large number of existing deep-learning-based methods for unsupervised anomaly detection. Our experiments demonstrate improvements over state-of-the-art methods on a number of real-world datasets, including the recently introduced MVTec Anomaly Detection dataset, which was specifically designed to benchmark anomaly segmentation algorithms.
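The two scoring signals named in the abstract (student-teacher disagreement and student uncertainty) can be illustrated with a minimal sketch. It assumes per-pixel descriptors are already available as arrays; the function name `anomaly_scores`, the array shapes, and the use of ensemble variance as the uncertainty measure are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def anomaly_scores(teacher_out, student_outs):
    """Hypothetical helper: per-pixel anomaly scores from descriptor maps.

    teacher_out:  (H, W, D) descriptors from the pretrained teacher.
    student_outs: (M, H, W, D) descriptors from an ensemble of M students.
    """
    # Regression error: squared distance between the ensemble mean and the teacher.
    mean_student = student_outs.mean(axis=0)
    regression_error = np.sum((mean_student - teacher_out) ** 2, axis=-1)

    # Uncertainty (assumed here to be ensemble variance): disagreement among
    # the students, summed over descriptor dimensions.
    uncertainty = np.sum(student_outs.var(axis=0), axis=-1)
    return regression_error, uncertainty

# Toy example: students match the teacher everywhere except at pixel (0, 0).
teacher = np.zeros((2, 2, 3))
students = np.zeros((2, 2, 2, 3))
students[0, 0, 0] = 1.0
students[1, 0, 0] = 3.0
error_map, uncertainty_map = anomaly_scores(teacher, students)
```

In this toy example both maps are zero wherever the students reproduce the teacher and positive only at the pixel where they fail to, which is the behaviour the abstract relies on for anomalous regions.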

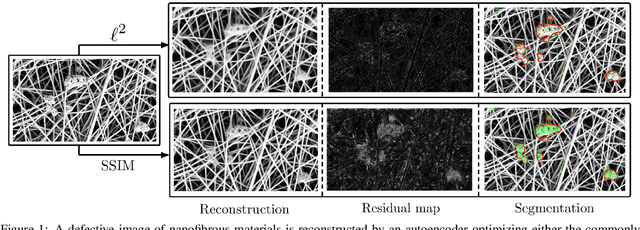

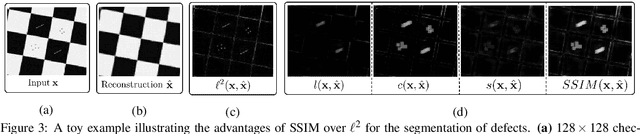

Improving Unsupervised Defect Segmentation by Applying Structural Similarity to Autoencoders

Oct 20, 2018

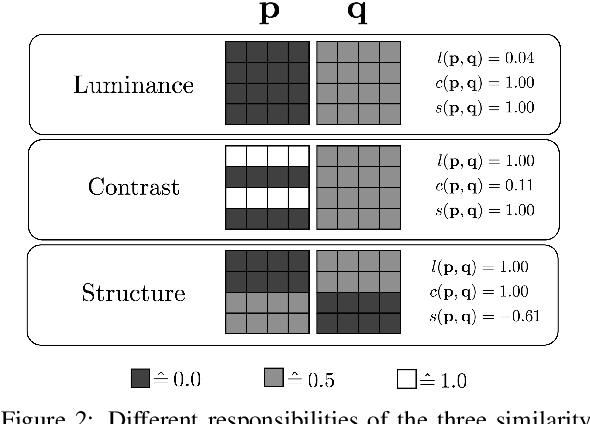

Abstract: Convolutional autoencoders have emerged as popular methods for unsupervised defect segmentation on image data. Most commonly, this task is performed by thresholding a pixel-wise reconstruction error based on an $\ell^p$ distance. This procedure, however, leads to large residuals whenever the reconstruction contains slight localization inaccuracies around edges. It also fails to reveal defective regions that have been visually altered when intensity values stay roughly consistent. We show that these problems prevent these approaches from being applied to complex real-world scenarios and that they cannot be easily avoided by employing more elaborate architectures such as variational or feature matching autoencoders. We propose to use a perceptual loss function based on structural similarity, which examines inter-dependencies between local image regions, taking into account luminance, contrast, and structural information, instead of simply comparing single pixel values. It achieves significant performance gains on a challenging real-world dataset of nanofibrous materials and a novel dataset of two woven fabrics over the state-of-the-art approaches for unsupervised defect segmentation that use pixel-wise reconstruction error metrics.
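As a rough illustration of the structural similarity measure the abstract refers to, the sketch below evaluates the standard SSIM formula on a single pair of patches. The function name `ssim_patch`, the single global window, and the constants (0.01 and 0.03 times the data range, the commonly used defaults) are assumptions made for illustration; the paper's actual loss may use different window sizes and settings.

```python
import numpy as np

def ssim_patch(x, y, data_range=1.0):
    """Hypothetical helper: SSIM between two equally sized grayscale patches."""
    # Small constants that stabilise the ratio (commonly used defaults).
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2

    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()

    # Luminance term times the combined contrast/structure term.
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator

# Toy example: a checkerboard and its inverse share the same mean intensity
# and contrast, but their structure is opposite.
checker = np.indices((4, 4)).sum(axis=0) % 2
checker = checker.astype(float)
inverted = 1.0 - checker

same_score = ssim_patch(checker, checker)
inverted_score = ssim_patch(checker, inverted)
```

Because the two patches have identical mean and variance, only the covariance term separates them, so the score falls well below that of the identical pair.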

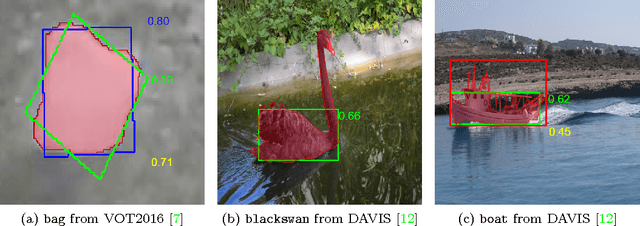

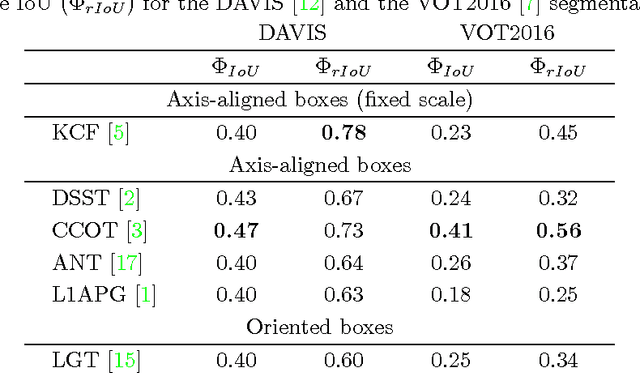

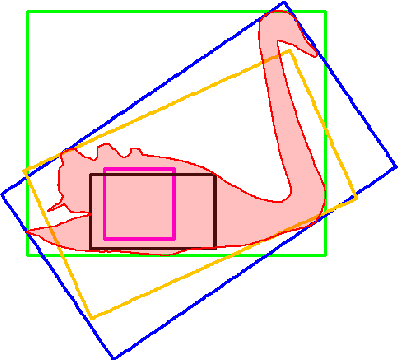

Measuring the Accuracy of Object Detectors and Trackers

Apr 24, 2017

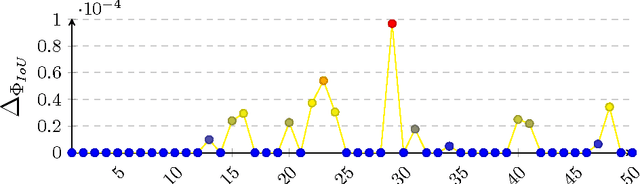

Abstract: The accuracy of object detectors and trackers is most commonly evaluated by the Intersection over Union (IoU) criterion. To date, most approaches are restricted to axis-aligned or oriented boxes and, as a consequence, many datasets are only labeled with boxes. Nevertheless, axis-aligned or oriented boxes cannot accurately capture an object's shape. To address this, a number of densely segmented datasets have started to emerge in both the object detection and the object tracking communities. However, evaluating the accuracy of object detectors and trackers that are restricted to boxes on densely segmented data is not straightforward. To close this gap, we introduce the relative Intersection over Union (rIoU) accuracy measure. The measure normalizes the IoU with the optimal box for the segmentation to generate an accuracy measure that ranges between 0 and 1 and allows a more precise measurement of accuracies. Furthermore, it enables an efficient and easy way to understand scenes and the strengths and weaknesses of an object detection or tracking approach. We show how the new measure can be efficiently calculated and present an easy-to-use evaluation framework. The framework is tested on the DAVIS and the VOT2016 segmentations and has been made available to the community.
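The normalization described in the abstract can be sketched for axis-aligned boxes on a small binary mask. The sketch assumes rIoU is the box-to-segmentation IoU divided by the best IoU any axis-aligned box can reach on that segmentation. The function names and the brute-force search over all boxes are illustrative assumptions; the paper describes an efficient calculation that is not reproduced here.

```python
import numpy as np

def box_mask_iou(box, mask):
    """IoU between an axis-aligned box (r0, c0, r1, c1), end-exclusive, and a binary mask."""
    r0, c0, r1, c1 = box
    inter = int(mask[r0:r1, c0:c1].sum())
    union = (r1 - r0) * (c1 - c0) + int(mask.sum()) - inter
    return inter / union if union > 0 else 0.0

def optimal_box_iou(mask):
    """Best IoU over all axis-aligned boxes (brute force; only for tiny masks)."""
    h, w = mask.shape
    best = 0.0
    for r0 in range(h):
        for r1 in range(r0 + 1, h + 1):
            for c0 in range(w):
                for c1 in range(c0 + 1, w + 1):
                    best = max(best, box_mask_iou((r0, c0, r1, c1), mask))
    return best

def relative_iou(box, mask):
    """IoU of the box, normalized by the best achievable box IoU for this mask."""
    best = optimal_box_iou(mask)
    return box_mask_iou(box, mask) / best if best > 0 else 0.0

# Toy example: an L-shaped object that no single box can cover exactly.
l_shape = np.array([[1, 0, 0],
                    [1, 0, 0],
                    [1, 1, 1]], dtype=bool)
full_box = (0, 0, 3, 3)
plain_iou = box_mask_iou(full_box, l_shape)     # 5/9
best_iou = optimal_box_iou(l_shape)             # 3/5
relative = relative_iou(full_box, l_shape)      # 25/27
```

For the L-shaped mask, even the best box reaches an IoU of only 0.6, so a plain IoU of about 0.56 for the bounding box understates how close that box is to optimal; the normalized value of about 0.93 reflects this.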
