Byungjun Kim

Dexterous World Models

Dec 19, 2025

Abstract: Recent progress in 3D reconstruction has made it easy to create realistic digital twins from everyday environments. However, current digital twins remain largely static and are limited to navigation and view synthesis without embodied interactivity. To bridge this gap, we introduce Dexterous World Model (DWM), a scene-action-conditioned video diffusion framework that models how dexterous human actions induce dynamic changes in static 3D scenes. Given a static 3D scene rendering and an egocentric hand motion sequence, DWM generates temporally coherent videos depicting plausible human-scene interactions. Our approach conditions video generation on (1) static scene renderings following a specified camera trajectory to ensure spatial consistency, and (2) egocentric hand mesh renderings that encode both geometry and motion cues to model action-conditioned dynamics directly. To train DWM, we construct a hybrid interaction video dataset. Synthetic egocentric interactions provide fully aligned supervision for joint locomotion and manipulation learning, while fixed-camera real-world videos contribute diverse and realistic object dynamics. Experiments demonstrate that DWM enables realistic and physically plausible interactions, such as grasping, opening, and moving objects, while maintaining camera and scene consistency. This framework represents a first step toward video diffusion-based interactive digital twins and enables embodied simulation from egocentric actions.

Durian: Dual Reference-guided Portrait Animation with Attribute Transfer

Sep 04, 2025

Abstract: We present Durian, the first method for generating portrait animation videos with facial attribute transfer from a given reference image to a target portrait in a zero-shot manner. To enable high-fidelity and spatially consistent attribute transfer across frames, we introduce dual reference networks that inject spatial features from both the portrait and attribute images into the denoising process of a diffusion model. We train the model using a self-reconstruction formulation, where two frames are sampled from the same portrait video: one is treated as the attribute reference and the other as the target portrait, and the remaining frames are reconstructed conditioned on these inputs and their corresponding masks. To support the transfer of attributes with varying spatial extent, we propose a mask expansion strategy using keypoint-conditioned image generation for training. We further augment the attribute and portrait images with spatial and appearance-level transformations to improve robustness to positional misalignment between them. These strategies allow the model to effectively generalize across diverse attributes and in-the-wild reference combinations, despite being trained without explicit triplet supervision. Durian achieves state-of-the-art performance on portrait animation with attribute transfer, and notably, its dual reference design enables multi-attribute composition in a single generation pass without additional training.

Decoding the Poetic Language of Emotion in Korean Modern Poetry: Insights from a Human-Labeled Dataset and AI Modeling

Sep 04, 2025

Abstract: This study introduces KPoEM (Korean Poetry Emotion Mapping), a novel dataset for computational emotion analysis in modern Korean poetry. Despite remarkable progress in text-based emotion classification using large language models, poetry, particularly Korean poetry, remains underexplored due to its figurative language and cultural specificity. We built a multi-label emotion dataset of 7,662 entries, including 7,007 line-level entries from 483 poems and 615 work-level entries, annotated with 44 fine-grained emotion categories from five influential Korean poets. A state-of-the-art Korean language model fine-tuned on this dataset significantly outperformed previous models, achieving 0.60 F1-micro compared to 0.34 from models trained on general corpora. The KPoEM model, trained through sequential fine-tuning (first on general corpora and then on the KPoEM dataset), demonstrates not only an enhanced ability to identify temporally and culturally specific emotional expressions, but also a strong capacity to preserve the core sentiments of modern Korean poetry. This study bridges computational methods and literary analysis, presenting new possibilities for the quantitative exploration of poetic emotions through structured data that faithfully retains the emotional and cultural nuances of Korean literature.

PHISH in MESH: Korean Adversarial Phonetic Substitution and Phonetic-Semantic Feature Integration Defense

May 27, 2025

Abstract: As malicious users increasingly employ phonetic substitution to evade hate speech detection, researchers have investigated such strategies. However, two key challenges remain. First, existing studies have overlooked the Korean language, despite its vulnerability to phonetic perturbations due to its phonographic nature. Second, prior work has primarily focused on constructing datasets rather than developing architectural defenses. To address these challenges, we propose (1) PHonetic-Informed Substitution for Hangul (PHISH), which exploits the phonological characteristics of the Korean writing system, and (2) Mixed Encoding of Semantic-pHonetic features (MESH), which enhances the detector's robustness by incorporating phonetic information at the architectural level. Our experimental results demonstrate the effectiveness of our proposed methods on both perturbed and unperturbed datasets, suggesting that they not only improve detection performance but also reflect realistic adversarial behaviors employed by malicious users.

DART: An AIGT Detector using AMR of Rephrased Text

Dec 16, 2024

Abstract: As large language models (LLMs) generate more human-like texts, concerns about the side effects of AI-generated texts (AIGT) have grown. In response, researchers have developed methods for detecting AIGT. However, two challenges remain. First, the performance on detecting black-box LLMs is low, because existing models have focused on syntactic features. Second, most AIGT detectors have been tested in a single-candidate setting, which assumes that we know the origin of an AIGT and may deviate from the real-world scenario. To resolve these challenges, we propose DART, which consists of four steps: rephrasing, semantic parsing, scoring, and multiclass classification. We conducted several experiments to test the performance of DART by following previous work. The experimental results show that DART can discriminate multiple black-box LLMs without using syntactic features or knowing the origin of the AIGT.

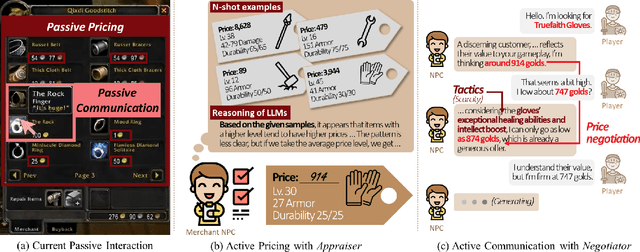

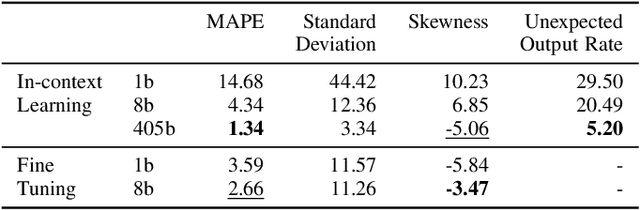

Leveraging Large Language Models for Active Merchant Non-player Characters

Dec 15, 2024

Abstract: We highlight two significant issues leading to the passivity of current merchant non-player characters (NPCs): pricing and communication. While immersive interactions have been a focus, negotiations between merchant NPCs and players on item prices have not received sufficient attention. First, we define passive pricing as the limited ability of merchants to modify predefined item prices. Second, passive communication means that merchants can only interact with players in a scripted manner. To tackle these issues and create an active merchant NPC, we propose a merchant framework based on large language models (LLMs), called MART, which consists of an appraiser module and a negotiator module. We conducted two experiments to guide game developers in selecting appropriate implementations by comparing different training methods and LLM sizes. Our findings indicate that finetuning methods, such as supervised finetuning (SFT) and knowledge distillation (KD), are effective in using smaller LLMs to implement active merchant NPCs. Additionally, we found three irregular cases arising from the responses of LLMs. We expect our findings to guide developers in using LLMs for developing active merchant NPCs.

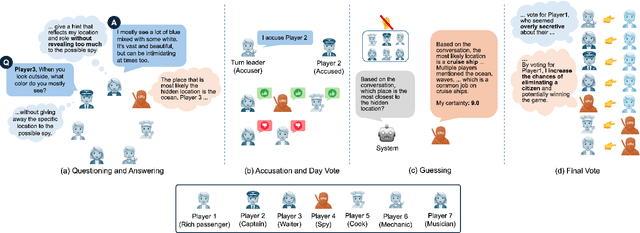

Microscopic Analysis on LLM players via Social Deduction Game

Aug 19, 2024

Abstract: Recent studies have begun developing autonomous game players for social deduction games using large language models (LLMs). When building LLM players, fine-grained evaluations are crucial for addressing weaknesses in game-playing abilities. However, existing studies have often overlooked such assessments. Specifically, we point out two issues with the evaluation methods employed. First, game-playing abilities have typically been assessed through game-level outcomes rather than specific event-level skills. Second, error analyses have lacked structured methodologies. To address these issues, we propose an approach utilizing a variant of the SpyFall game, named SpyGame. We conducted an experiment with four LLMs, analyzing their gameplay behavior in SpyGame both quantitatively and qualitatively. For the quantitative analysis, we introduced eight metrics to resolve the first issue, revealing that these metrics are more effective than existing ones for evaluating the two critical skills: intent identification and camouflage. In the qualitative analysis, we performed thematic analysis to resolve the second issue. This analysis identifies four major categories that affect the gameplay of LLMs. Additionally, we demonstrate how these categories complement and support the findings from the quantitative analysis.

Guess The Unseen: Dynamic 3D Scene Reconstruction from Partial 2D Glimpses

Apr 22, 2024

Abstract: In this paper, we present a method to reconstruct the world and multiple dynamic humans in 3D from a monocular video input. As a key idea, we represent both the world and multiple humans via the recently emerging 3D Gaussian Splatting (3D-GS) representation, which lets us compose and render them together conveniently and efficiently. In particular, we address scenarios with severely limited and sparse observations in 3D human reconstruction, a common challenge encountered in the real world. To tackle this challenge, we introduce a novel approach to optimize the 3D-GS representation in a canonical space by fusing the sparse cues in the common space, where we leverage a pre-trained 2D diffusion model to synthesize unseen views while keeping consistency with the observed 2D appearances. We demonstrate that our method can reconstruct high-quality animatable 3D humans in various challenging examples, including occlusion, image crops, few-shot settings, and extremely sparse observations. After reconstruction, our method is capable of not only rendering the scene from any novel view at arbitrary time instances, but also editing the 3D scene by removing individual humans or applying different motions to each human. Through various experiments, we demonstrate the quality and efficiency of our method over alternative existing approaches.

PEGASUS: Personalized Generative 3D Avatars with Composable Attributes

Feb 16, 2024

Abstract: We present PEGASUS, a method for constructing personalized generative 3D face avatars from monocular video sources. As a compositional generative model, our model enables disentangled controls to selectively alter the facial attributes (e.g., hair or nose) of the target individual, while preserving the identity. We present two key approaches to achieve this goal. First, we present a method to construct a person-specific generative 3D avatar by building a synthetic video collection of the target identity with varying facial attributes, where the videos are synthesized by borrowing parts from diverse individuals from other monocular videos. Through several experiments, we demonstrate the superior performance of our approach by generating unseen attributes with high realism. Subsequently, we introduce a zero-shot approach to achieve the same generative modeling more efficiently by leveraging a previously constructed personalized generative model.

GALA: Generating Animatable Layered Assets from a Single Scan

Jan 23, 2024

Abstract: We present GALA, a framework that takes as input a single-layer clothed 3D human mesh and decomposes it into complete multi-layered 3D assets. The outputs can then be combined with other assets to create novel clothed human avatars in any pose. Existing reconstruction approaches often treat clothed humans as a single layer of geometry and overlook the inherent compositionality of humans with hairstyles, clothing, and accessories, thereby limiting the utility of the meshes for downstream applications. Decomposing a single-layer mesh into separate layers is a challenging task because it requires the synthesis of plausible geometry and texture for the severely occluded regions. Moreover, even with successful decomposition, meshes are not normalized in terms of poses and body shapes, which prevents coherent composition with novel identities and poses. To address these challenges, we propose to leverage the general knowledge of a pretrained 2D diffusion model as a geometry and appearance prior for humans and other assets. We first separate the input mesh using the 3D surface segmentation extracted from multi-view 2D segmentations. Then we synthesize the missing geometry of different layers in both posed and canonical spaces using a novel pose-guided Score Distillation Sampling (SDS) loss. Once we complete inpainting high-fidelity 3D geometry, we also apply the same SDS loss to its texture to obtain the complete appearance, including the initially occluded regions. Through a series of decomposition steps, we obtain multiple layers of 3D assets in a shared canonical space normalized in terms of poses and human shapes, hence supporting effortless composition to novel identities and reanimation with novel poses. Our experiments demonstrate the effectiveness of our approach for decomposition, canonicalization, and composition tasks compared to existing solutions.
