Seongyong Ahn

UFO: Uncertainty-aware LiDAR-image Fusion for Off-road Semantic Terrain Map Estimation

Mar 05, 2024

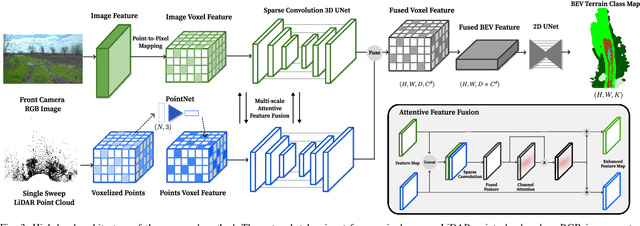

Abstract: Autonomous off-road navigation requires an accurate semantic understanding of the environment, often converted into a bird's-eye view (BEV) representation for various downstream tasks. While learning-based methods have shown success in generating local semantic terrain maps directly from sensor data, their efficacy in off-road environments is hindered by challenges in accurately representing uncertain terrain features. This paper presents a learning-based fusion method for generating dense terrain classification maps in BEV. By performing LiDAR-image fusion at multiple scales, our approach enhances the accuracy of semantic maps generated from an RGB image and a single-sweep LiDAR scan. Utilizing uncertainty-aware pseudo-labels further enhances the network's ability to learn reliably in off-road environments without requiring precise 3D annotations. By conducting thorough experiments using off-road driving datasets, we demonstrate that our method can improve accuracy in off-road terrains, validating its efficacy in facilitating reliable and safe autonomous navigation in challenging off-road settings.

METAVerse: Meta-Learning Traversability Cost Map for Off-Road Navigation

Jul 26, 2023

Abstract: Autonomous navigation in off-road conditions requires an accurate estimation of terrain traversability. However, traversability estimation in unstructured environments is subject to high uncertainty due to the variability of numerous factors that influence vehicle-terrain interaction. Consequently, it is challenging to obtain a generalizable model that can accurately predict traversability in a variety of environments. This paper presents METAVerse, a meta-learning framework for learning a global model that accurately and reliably predicts terrain traversability across diverse environments. We train the traversability prediction network to generate a dense and continuous-valued cost map from a sparse LiDAR point cloud, leveraging vehicle-terrain interaction feedback in a self-supervised manner. Meta-learning is utilized to train a global model with driving data collected from multiple environments, effectively minimizing estimation uncertainty. During deployment, online adaptation is performed to rapidly adapt the network to the local environment by exploiting recent interaction experiences. To conduct a comprehensive evaluation, we collect driving data from various terrains and demonstrate that our method can obtain a global model that minimizes uncertainty. Moreover, by integrating our model with a model predictive controller, we demonstrate that the reduced uncertainty results in safe and stable navigation in unstructured and unknown terrains.

SLiDE: Self-supervised LiDAR De-snowing through Reconstruction Difficulty

Aug 08, 2022

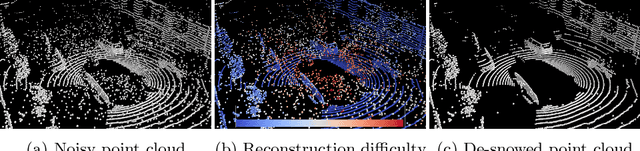

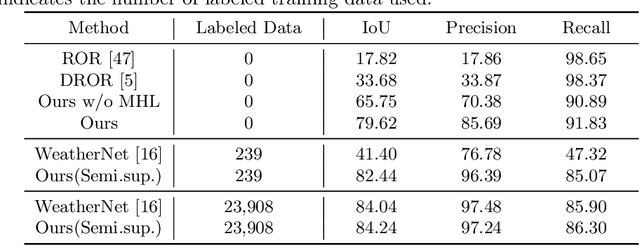

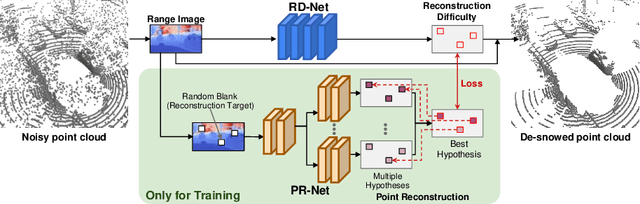

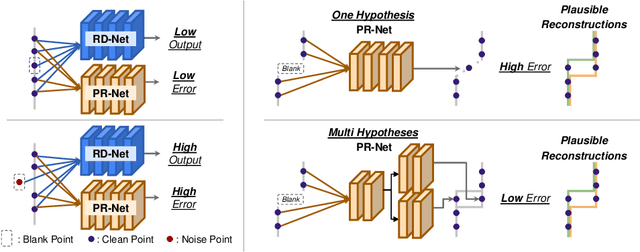

Abstract: LiDAR is widely used to capture accurate 3D outdoor scene structures. However, LiDAR produces many undesirable noise points in snowy weather, which hamper the analysis of meaningful 3D scene structures. Semantic segmentation with snow labels would be a straightforward solution for removing them, but it requires laborious point-wise annotation. To address this problem, we propose a novel self-supervised learning framework for snow point removal in LiDAR point clouds. Our method exploits the structural characteristic of the noise points: low spatial correlation with their neighbors. Our method consists of two deep neural networks: Point Reconstruction Network (PR-Net) reconstructs each point from its neighbors; Reconstruction Difficulty Network (RD-Net) predicts the point-wise difficulty of the reconstruction by PR-Net, which we call reconstruction difficulty. With simple post-processing, our method effectively detects snow points without any labels. Our method achieves state-of-the-art performance among label-free approaches and is comparable to the fully-supervised method. Moreover, we demonstrate that our method can be exploited as a pretext task to improve the label efficiency of supervised training for de-snowing.
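
The intuition that snow points correlate poorly with their neighbors can be illustrated with a small, non-learned sketch. This is not the PR-Net/RD-Net pipeline from the paper: it swaps the learned reconstruction for a plain mean of the k nearest neighbors and uses the resulting error as a rough stand-in for reconstruction difficulty. The function names, the value of `k`, the threshold, and the toy point cloud are all assumptions made for illustration.

```python
import math

def knn_reconstruction_error(points, k=3):
    """Reconstruct each 3D point as the mean of its k nearest neighbors
    and return the distance between the point and that reconstruction.
    (Hypothetical helper; a hand-written proxy, not a learned network.)"""
    errors = []
    for i, p in enumerate(points):
        # Brute-force neighbor search; acceptable for a toy example.
        dists = sorted(
            (math.dist(p, q), j) for j, q in enumerate(points) if j != i
        )
        neighbors = [points[j] for _, j in dists[:k]]
        recon = tuple(sum(c) / len(neighbors) for c in zip(*neighbors))
        errors.append(math.dist(p, recon))
    return errors

def flag_isolated(points, k=3, threshold=1.0):
    """Flag points whose neighbor-based reconstruction error exceeds
    an assumed threshold (in the same units as the coordinates)."""
    return [e > threshold for e in knn_reconstruction_error(points, k)]

# Toy scene: a dense planar patch plus two isolated points far from it.
surface = [(0.1 * x, 0.1 * y, 0.0) for x in range(10) for y in range(10)]
noise = [(0.5, 0.5, 3.0), (0.2, 0.8, -4.0)]
flags = flag_isolated(surface + noise, k=3, threshold=1.0)
```

In this toy scene, points on the planar patch are reconstructed almost exactly from their neighbors, while the two isolated points are not, so only those two are flagged. A fixed threshold on a hand-crafted error is far cruder than a learned per-point difficulty; the sketch only shows why low spatial correlation is a usable signal.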
