Chong Hui Kim

UFO: Uncertainty-aware LiDAR-image Fusion for Off-road Semantic Terrain Map Estimation

Mar 05, 2024

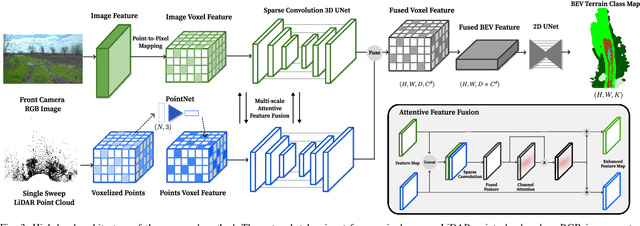

Abstract: Autonomous off-road navigation requires an accurate semantic understanding of the environment, often converted into a bird's-eye view (BEV) representation for various downstream tasks. While learning-based methods have shown success in generating local semantic terrain maps directly from sensor data, their efficacy in off-road environments is hindered by challenges in accurately representing uncertain terrain features. This paper presents a learning-based fusion method for generating dense terrain classification maps in BEV. By performing LiDAR-image fusion at multiple scales, our approach enhances the accuracy of semantic maps generated from an RGB image and a single-sweep LiDAR scan. Utilizing uncertainty-aware pseudo-labels further enhances the network's ability to learn reliably in off-road environments without requiring precise 3D annotations. By conducting thorough experiments using off-road driving datasets, we demonstrate that our method can improve accuracy in off-road terrains, validating its efficacy in facilitating reliable and safe autonomous navigation in challenging off-road settings.
