Biswa Sengupta

Learning to Communicate using Contrastive Learning

Jul 03, 2023

Abstract:Communication is a powerful tool for coordination in multi-agent RL. But inducing an effective, common language is a difficult challenge, particularly in the decentralized setting. In this work, we introduce an alternative perspective where communicative messages sent between agents are considered as different incomplete views of the environment state. By examining the relationship between messages sent and received, we propose to learn to communicate using contrastive learning to maximize the mutual information between messages of a given trajectory. In communication-essential environments, our method outperforms previous work in both performance and learning speed. Using qualitative metrics and representation probing, we show that our method induces more symmetric communication and captures global state information from the environment. Overall, we show the power of contrastive learning and the importance of leveraging messages as encodings for effective communication.
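The idea of maximizing mutual information between messages of the same trajectory can be illustrated with a generic InfoNCE-style contrastive loss. This is only a minimal sketch, not the paper's loss: the function names (`cosine`, `info_nce`), the use of cosine similarity, the temperature value, and the choice of one positive per anchor are all assumptions made for illustration. Here a message from the same trajectory plays the role of the positive, and messages from other trajectories are the negatives.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors (plain Python lists)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE loss for one anchor message (hypothetical helper).

    anchor, positive: messages assumed to come from the same trajectory.
    negatives: messages assumed to come from other trajectories.
    Returns -log( exp(s_pos) / sum_k exp(s_k) ), computed stably.
    """
    pos_logit = cosine(anchor, positive) / temperature
    logits = [pos_logit] + [cosine(anchor, n) / temperature for n in negatives]
    # Log-sum-exp with the max subtracted for numerical stability.
    top = max(logits)
    log_denominator = top + math.log(sum(math.exp(l - top) for l in logits))
    return log_denominator - pos_logit


# Toy messages: the positive points the same way as the anchor.
aligned_loss = info_nce([1.0, 0.0], [1.0, 0.0], [[0.0, 1.0]])
misaligned_loss = info_nce([1.0, 0.0], [0.0, 1.0], [[1.0, 0.0]])
```

Minimizing such a loss pulls messages from the same trajectory together in embedding space and pushes messages from different trajectories apart, which is the usual way a contrastive objective serves as a lower bound on mutual information.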

Learning to Infer Belief Embedded Communication

Mar 15, 2022

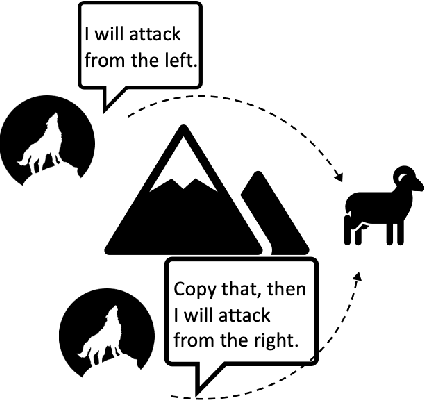

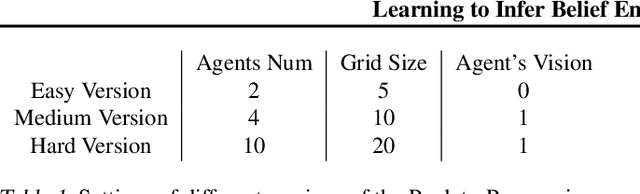

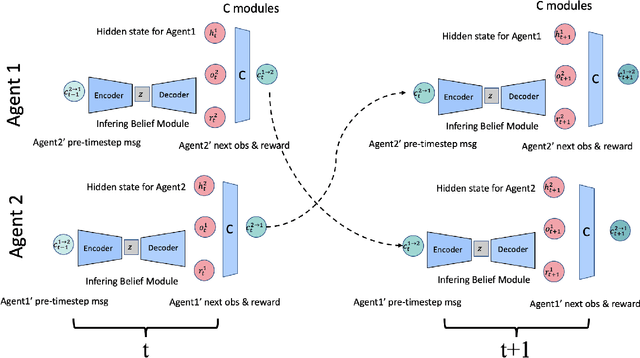

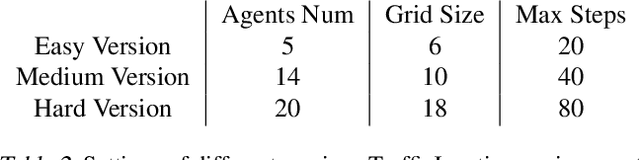

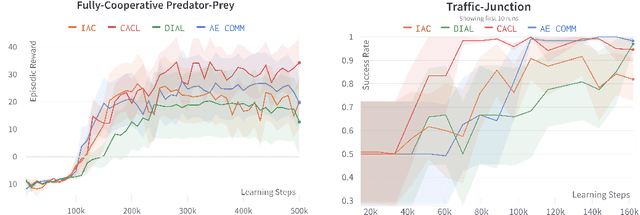

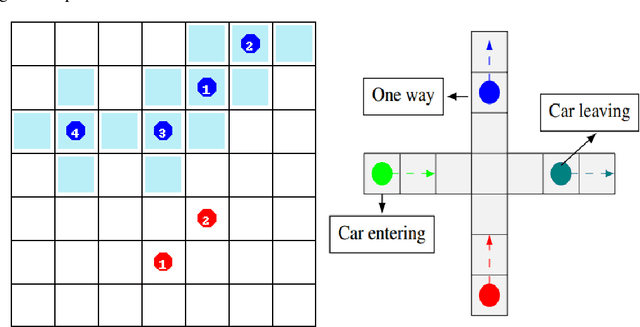

Abstract:In multi-agent collaboration problems with communication, an agent's ability to encode their intention and interpret other agents' strategies is critical for planning their future actions. This paper introduces a novel algorithm called Intention Embedded Communication (IEC) to mimic an agent's language learning ability. IEC contains a perception module for decoding other agents' intentions in response to their past actions. It also includes a language generation module for learning implicit grammar during communication with two or more agents. Such grammar, by construction, should be compact for efficient communication. Both modules undergo conjoint evolution - similar to an infant's babbling that enables it to learn a language of choice by trial and error. We utilised three multi-agent environments, namely predator/prey, traffic junction and level-based foraging and illustrate that such a co-evolution enables us to learn much quicker (50%) than state-of-the-art algorithms like MADDPG. Ablation studies further show that disabling the inferring belief module, communication module, and the hidden states reduces the model performance by 38%, 60% and 30%, respectively. Hence, we suggest that modelling other agents' behaviour accelerates another agent to learn grammar and develop a language to communicate efficiently. We evaluate our method on a set of cooperative scenarios and show its superior performance to other multi-agent baselines. We also demonstrate that it is essential for agents to reason about others' states and learn this ability by continuous communication.

Switch Trajectory Transformer with Distributional Value Approximation for Multi-Task Reinforcement Learning

Mar 14, 2022

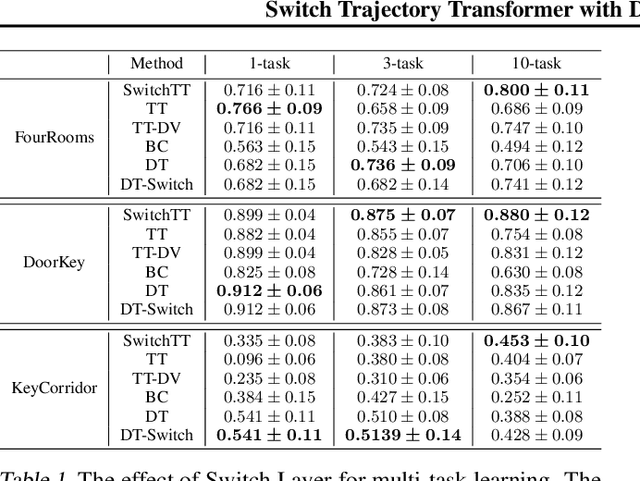

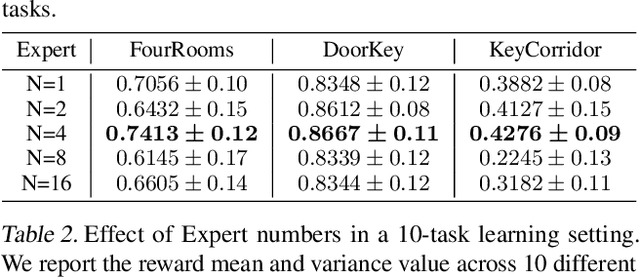

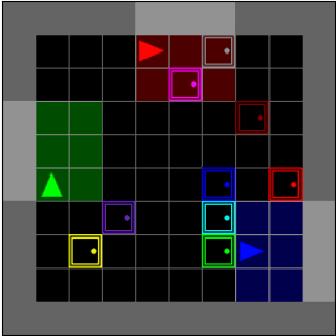

Abstract:We propose SwitchTT, a multi-task extension to Trajectory Transformer but enhanced with two striking features: (i) exploiting a sparsely activated model to reduce computation cost in multi-task offline model learning and (ii) adopting a distributional trajectory value estimator that improves policy performance, especially in sparse reward settings. These two enhancements make SwitchTT suitable for solving multi-task offline reinforcement learning problems, where model capacity is critical for absorbing the vast quantities of knowledge available in the multi-task dataset. More specifically, SwitchTT exploits switch transformer model architecture for multi-task policy learning, allowing us to improve model capacity without proportional computation cost. Also, SwitchTT approximates the distribution rather than the expectation of trajectory value, mitigating the effects of the Monte-Carlo Value estimator suffering from poor sample complexity, especially in the sparse-reward setting. We evaluate our method using the suite of ten sparse-reward tasks from the gym-mini-grid environment. We show an improvement of 10% over Trajectory Transformer across 10-task learning and obtain up to 90% increase in offline model training speed. Our results also demonstrate the advantage of the switch transformer model for absorbing expert knowledge and the importance of value distribution in evaluating the trajectory.

The Multi-Agent Pickup and Delivery Problem: MAPF, MARL and Its Warehouse Applications

Mar 14, 2022

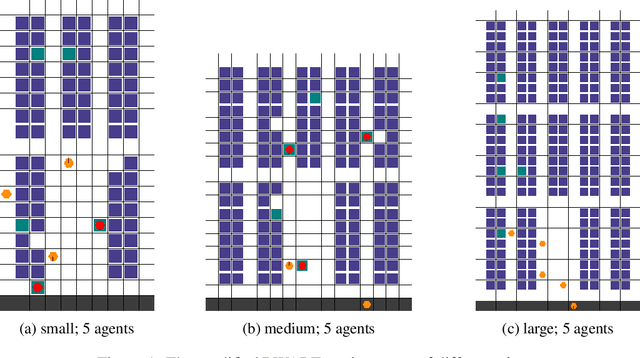

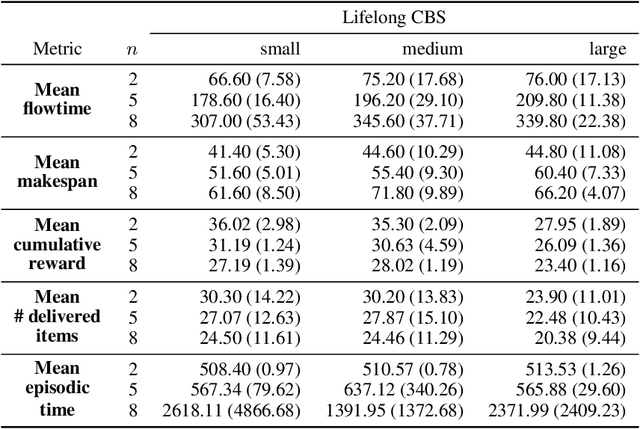

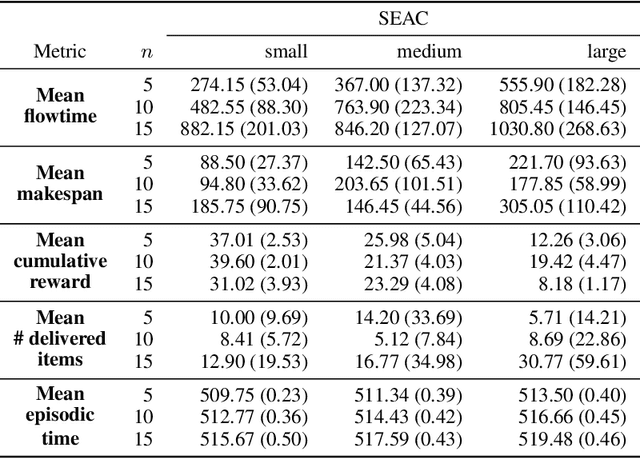

Abstract:We study two state-of-the-art solutions to the multi-agent pickup and delivery (MAPD) problem based on different principles -- multi-agent path-finding (MAPF) and multi-agent reinforcement learning (MARL). Specifically, a recent MAPF algorithm called conflict-based search (CBS) and a current MARL algorithm called shared experience actor-critic (SEAC) are studied. While the performance of these algorithms is measured using quite different metrics in their separate lines of work, we aim to benchmark these two methods comprehensively in a simulated warehouse automation environment.

Reinforcement Learning for Location-Aware Scheduling

Mar 07, 2022

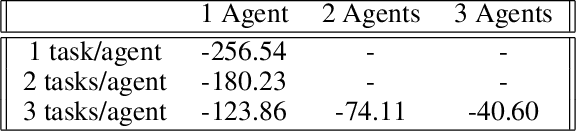

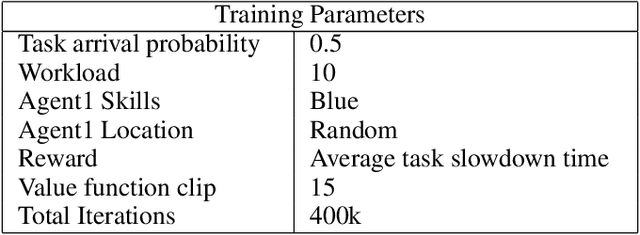

Abstract:Recent techniques in dynamical scheduling and resource management have found applications in warehouse environments due to their ability to organize and prioritize tasks in a higher temporal resolution. The rise of deep reinforcement learning, as a learning paradigm, has enabled decentralized agent populations to discover complex coordination strategies. However, training multiple agents simultaneously introduces many obstacles, as observation and action spaces become exponentially large. In our work, we experimentally quantify how various aspects of the warehouse environment (e.g., floor plan complexity, information about agents' live location, level of task parallelizability) affect performance and execution priority. To achieve efficiency, we propose a compact representation of the state and action space for location-aware multi-agent systems, wherein each agent has knowledge of only self and task coordinates, hence only partial observability of the underlying Markov Decision Process. Finally, we show how agents trained in certain environments maintain performance in completely unseen settings and also correlate performance degradation with floor plan geometry.

Learning to Ground Decentralized Multi-Agent Communication with Contrastive Learning

Mar 07, 2022

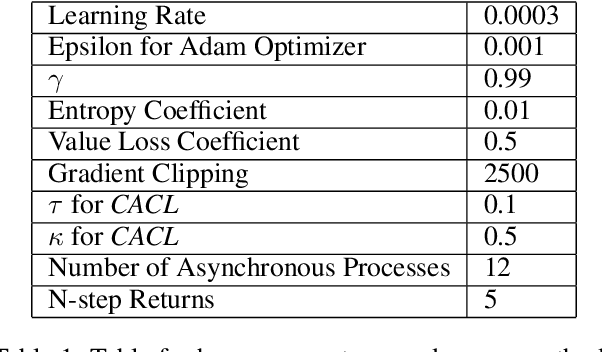

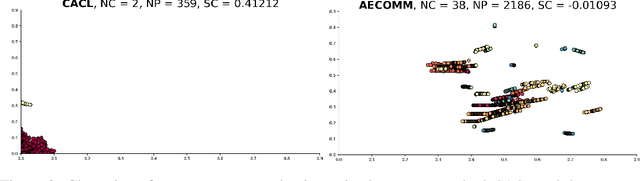

Abstract:For communication to happen successfully, a common language is required between agents to understand information communicated by one another. Inducing the emergence of a common language has been a difficult challenge to multi-agent learning systems. In this work, we introduce an alternative perspective to the communicative messages sent between agents, considering them as different incomplete views of the environment state. Based on this perspective, we propose a simple approach to induce the emergence of a common language by maximizing the mutual information between messages of a given trajectory in a self-supervised manner. By evaluating our method in communication-essential environments, we empirically show how our method leads to better learning performance and speed, and learns a more consistent common language than existing methods, without introducing additional learning parameters.

Hierarchically Structured Scheduling and Execution of Tasks in a Multi-Agent Environment

Mar 06, 2022

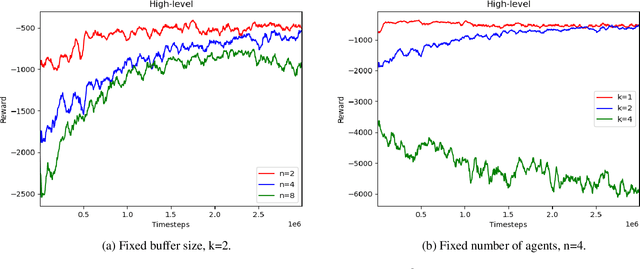

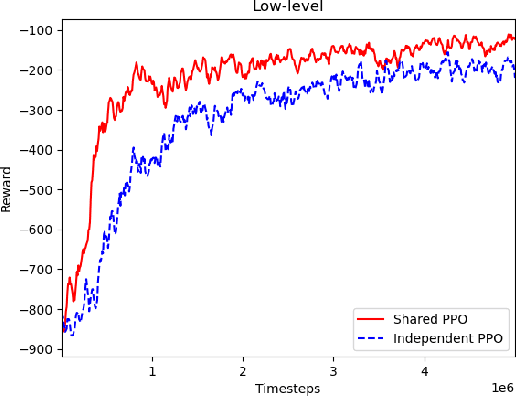

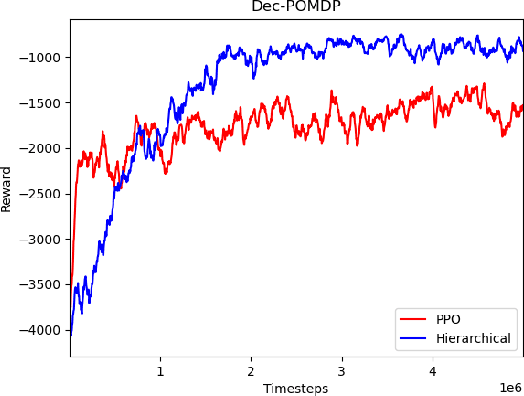

Abstract:In a warehouse environment, tasks appear dynamically. Consequently, a task management system that matches them with the workforce too early (e.g., weeks in advance) is necessarily sub-optimal. Also, the rapidly increasing size of the action space of such a system constitutes a significant problem for traditional schedulers. Reinforcement learning, however, is suited to deal with issues requiring making sequential decisions towards a long-term, often remote, goal. In this work, we address a problem with a hierarchical structure: the task-scheduling, by a centralised agent, in a dynamic warehouse multi-agent environment and the execution of one such schedule, by decentralised agents with only partial observability thereof. We propose to use deep reinforcement learning to solve both the high-level scheduling problem and the low-level multi-agent problem of schedule execution. Finally, we also consider the case where centralisation is impossible at test time and workers must learn how to cooperate in executing the tasks in an environment with no schedule and only partial observability.

On the Dark Side of Calibration for Modern Neural Networks

Jun 17, 2021

Abstract:Modern neural networks are highly uncalibrated. This poses a significant challenge for safety-critical systems that need to utilise deep neural networks (DNNs) reliably. Many recently proposed approaches have demonstrated substantial progress in improving DNN calibration. However, they hardly touch upon refinement, which historically has been an essential aspect of calibration. Refinement indicates separability of a network's correct and incorrect predictions. This paper presents a theoretically and empirically supported exposition for reviewing a model's calibration and refinement. Firstly, we show the breakdown of expected calibration error (ECE) into predicted confidence and refinement. Connecting with this result, we highlight that regularisation-based calibration only focuses on naively reducing a model's confidence. This logically has a severe downside to a model's refinement. We support our claims through rigorous empirical evaluations of many state-of-the-art calibration approaches on standard datasets. We find that many calibration approaches, such as label smoothing and mixup, lower the utility of a DNN by degrading its refinement. Even under natural data shift, this calibration-refinement trade-off holds for the majority of calibration methods. These findings call for an urgent retrospective into some popular pathways taken for modern DNN calibration.
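For readers unfamiliar with the metric, the expected calibration error mentioned above is commonly estimated by binning predictions by confidence and averaging the gap between accuracy and confidence in each bin. The sketch below shows that standard binned estimator only; it is not the paper's decomposition into confidence and refinement, and the function name, the equal-width binning, and the default of 10 bins are assumptions chosen for illustration.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Standard binned ECE estimate (illustrative helper, not from the paper).

    confidences: predicted confidence in [0, 1] for each sample.
    correct: 1 if the prediction was right, 0 otherwise.
    Returns the sample-weighted mean of |accuracy - mean confidence| per bin.
    """
    total = len(confidences)
    bins = [[] for _ in range(n_bins)]
    for conf, hit in zip(confidences, correct):
        # Equal-width bins; a confidence of exactly 1.0 goes in the last bin.
        index = min(int(conf * n_bins), n_bins - 1)
        bins[index].append((conf, hit))

    ece = 0.0
    for members in bins:
        if not members:
            continue  # Empty bins contribute nothing.
        mean_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(h for _, h in members) / len(members)
        ece += (len(members) / total) * abs(accuracy - mean_conf)
    return ece


# Overconfident toy model: 90% confidence but only 75% accuracy.
overconfident = expected_calibration_error([0.9, 0.9, 0.9, 0.9], [1, 1, 1, 0])
# Toy model whose 50% confidence matches its 50% accuracy.
calibrated = expected_calibration_error([0.5, 0.5], [1, 0])
```

Note that this estimator can be driven down simply by lowering confidence across the board, without making correct and incorrect predictions any easier to tell apart, which is the kind of blind spot the abstract attributes to regularisation-based calibration.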

Adversarial Information Factorization

Sep 28, 2018

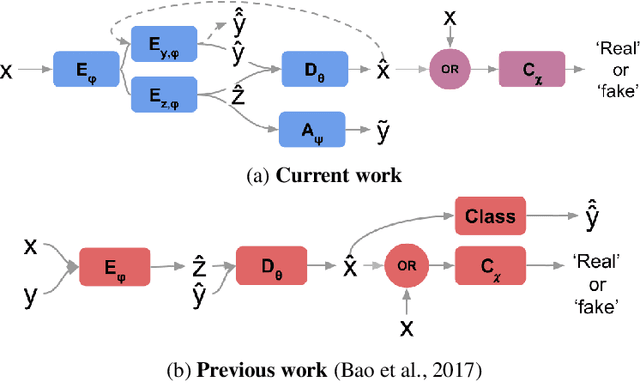

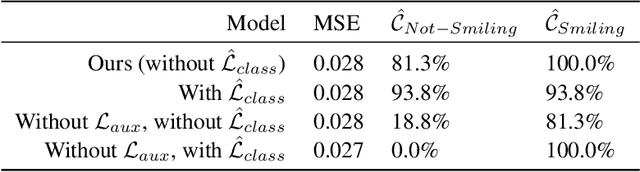

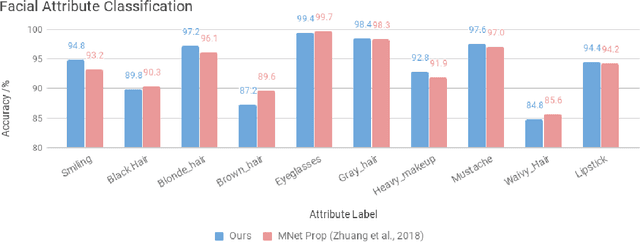

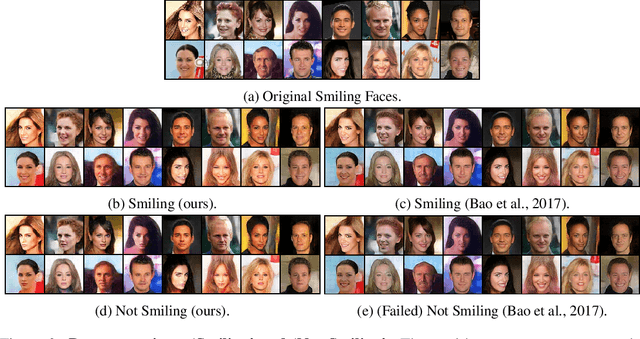

Abstract:We propose a novel generative model architecture designed to learn representations for images that factor out a single attribute from the rest of the representation. A single object may have many attributes which when altered do not change the identity of the object itself. Consider the human face; the identity of a particular person is independent of whether or not they happen to be wearing glasses. The attribute of wearing glasses can be changed without changing the identity of the person. However, the ability to manipulate and alter image attributes without altering the object identity is not a trivial task. Here, we are interested in learning a representation of the image that separates the identity of an object (such as a human face) from an attribute (such as 'wearing glasses'). We demonstrate the success of our factorization approach by using the learned representation to synthesize the same face with and without a chosen attribute. We refer to this specific synthesis process as image attribute manipulation. We further demonstrate that our model achieves scores competitive with the state of the art on a facial attribute classification task.

How Robust are Deep Neural Networks?

Apr 30, 2018

Abstract:Convolutional and recurrent deep neural networks have been successful in machine learning systems for computer vision, reinforcement learning, and other allied fields. However, the robustness of such neural networks is seldom appraised, especially after high classification accuracy has been attained. In this paper, we evaluate the robustness of three recurrent neural networks to tiny perturbations, on three widely used datasets, to argue that high accuracy does not always mean a stable and a robust (to bounded perturbations, adversarial attacks, etc.) system. In particular, normalizing the spectrum of the discrete recurrent network to bound the spectrum (using power method, Rayleigh quotient, etc.) on a unit disk produces stable, albeit highly non-robust neural networks. Furthermore, using the $\epsilon$-pseudo-spectrum, we show that training of recurrent networks, say using gradient-based methods, often results in non-normal matrices that may or may not be diagonalizable. Therefore, the open problem lies in constructing methods that optimize not only for accuracy but also for the stability and the robustness of the underlying neural network, a criterion that is distinct from the other.
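As a rough illustration of bounding a recurrent weight matrix with the power method and a Rayleigh quotient, the sketch below estimates the largest singular value of a matrix and rescales the matrix when that value exceeds one. It is an assumption-laden example rather than the paper's procedure: the helper names are hypothetical, and it bounds the spectral norm, which in turn bounds every eigenvalue's magnitude by one, rather than normalizing the eigenvalues directly.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def transpose(matrix):
    """Transpose a matrix given as a list of rows."""
    return [list(column) for column in zip(*matrix)]


def spectral_norm(w, iters=100):
    """Estimate the largest singular value of w by power iteration on w^T w.

    Starts from an all-ones vector; this assumes the start vector is not
    orthogonal to the dominant right singular vector.
    """
    w_t = transpose(w)
    v = [1.0] * len(w[0])
    for _ in range(iters):
        u = matvec(w_t, matvec(w, v))
        norm = math.sqrt(sum(x * x for x in u))
        if norm == 0.0:
            return 0.0
        v = [x / norm for x in u]
    # For unit v, sqrt(v^T w^T w v) = ||w v|| is the Rayleigh-quotient estimate.
    return math.sqrt(sum(x * x for x in matvec(w, v)))


def scale_to_unit_disk(w, iters=100):
    """Rescale w so its spectral norm is at most 1 (copy returned)."""
    sigma = spectral_norm(w, iters)
    if sigma <= 1.0:
        return [row[:] for row in w]
    return [[x / sigma for x in row] for row in w]


weights = [[3.0, 0.0], [0.0, 1.0]]
sigma_before = spectral_norm(weights)
sigma_after = spectral_norm(scale_to_unit_disk(weights))
```

A matrix scaled this way yields a stable linear recurrence, but as the abstract argues, stability of this kind says little about how sensitive the network is to small perturbations.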
