Aditya Singh

Concept Incongruence: An Exploration of Time and Death in Role Playing

May 20, 2025

Abstract: Consider the prompt "Draw a unicorn with two horns". Should large language models (LLMs) recognize that a unicorn has only one horn by definition and ask users for clarification, or proceed to generate something anyway? We introduce concept incongruence to capture such phenomena, where concept boundaries clash with each other, either in user prompts or in model representations, often leading to under-specified or mis-specified behaviors. In this work, we take the first step towards defining and analyzing model behavior under concept incongruence. Focusing on temporal boundaries in the Role-Play setting, we propose three behavioral metrics (abstention rate, conditional accuracy, and answer rate) to quantify model behavior under incongruence due to the role's death. We show that models fail to abstain after death and suffer from an accuracy drop compared to the Non-Role-Play setting. Through probing experiments, we identify two main causes: (i) unreliable encoding of the "death" state across different years, leading to unsatisfactory abstention behavior, and (ii) shifts in the model's temporal representations caused by role playing, resulting in accuracy drops. We leverage these insights to improve consistency in the model's abstention and answer behaviors. Our findings suggest that concept incongruence leads to unexpected model behaviors and point to future directions on improving model behavior under concept incongruence.
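The three behavioral metrics named in this abstract can be illustrated with a small sketch. The abstract does not give their formulas, so the definitions below are assumptions: abstention rate is the fraction of responses that decline, answer rate is the fraction that still give a concrete answer, and conditional accuracy is the accuracy among answered responses only. The `Response` type and its fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Response:
    """One model response to a question (hypothetical annotation schema)."""
    abstained: bool  # the model declined, e.g. noted the role had already died
    answered: bool   # the model still produced a concrete answer
    correct: bool    # the answer matched the reference (meaningful only if answered)


def behavioral_metrics(responses):
    """Compute the three rates under the assumed definitions above."""
    total = len(responses)
    if total == 0:
        raise ValueError("need at least one response")
    answered = [r for r in responses if r.answered]
    return {
        "abstention_rate": sum(r.abstained for r in responses) / total,
        "answer_rate": len(answered) / total,
        # Accuracy conditioned on the model having answered; undefined otherwise.
        "conditional_accuracy": (
            sum(r.correct for r in answered) / len(answered) if answered else None
        ),
    }


responses = [
    Response(abstained=True, answered=False, correct=False),
    Response(abstained=True, answered=True, correct=True),   # abstains, then answers anyway
    Response(abstained=False, answered=True, correct=False),
    Response(abstained=False, answered=True, correct=True),
]
metrics = behavioral_metrics(responses)
```

Abstention and answering are tracked separately here because a response may do both, so the two rates need not sum to one.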

Neural Control Barrier Functions from Physics Informed Neural Networks

Apr 15, 2025

Abstract: As autonomous systems become increasingly prevalent in daily life, ensuring their safety is paramount. Control Barrier Functions (CBFs) have emerged as an effective tool for guaranteeing safety; however, manually designing them for specific applications remains a significant challenge. With the advent of deep learning techniques, recent research has explored synthesizing CBFs using neural networks, commonly referred to as neural CBFs. This paper introduces a novel class of neural CBFs that leverages a physics-inspired neural network framework by incorporating Zubov's Partial Differential Equation (PDE) within the context of safety. This approach provides a scalable methodology for synthesizing neural CBFs applicable to high-dimensional systems. Furthermore, by utilizing reciprocal CBFs instead of zeroing CBFs, the proposed framework allows for the specification of flexible, user-defined safe regions. To validate the effectiveness of the approach, we present case studies on three different systems: an inverted pendulum, autonomous ground navigation, and aerial navigation in obstacle-laden environments.

Simple Unsupervised Knowledge Distillation With Space Similarity

Sep 20, 2024

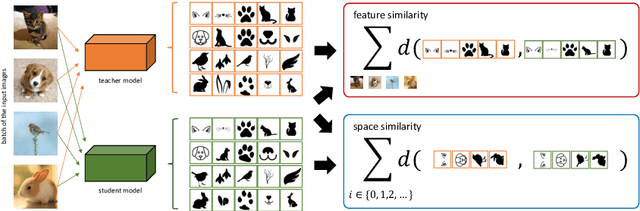

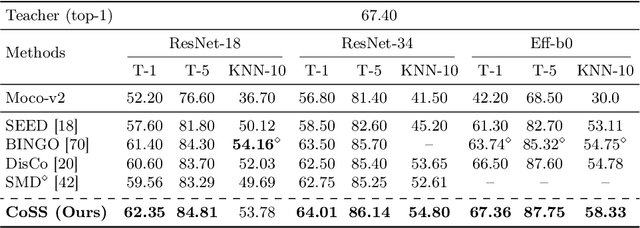

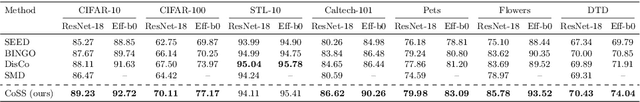

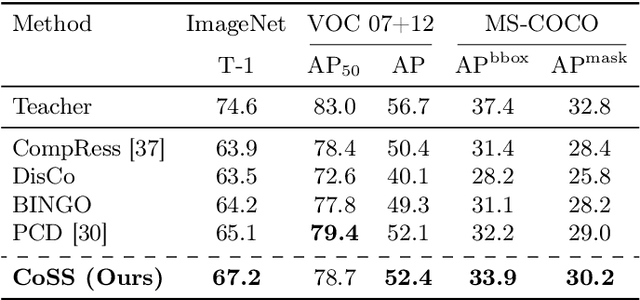

Abstract: According to recent studies, self-supervised learning (SSL) does not readily extend to smaller architectures. One direction to mitigate this shortcoming while simultaneously training a smaller network without labels is to adopt unsupervised knowledge distillation (UKD). Existing UKD approaches handcraft preservation-worthy inter/intra-sample relationships between the teacher and its student. However, this may overlook other key relationships present in the mapping of a teacher. In this paper, instead of heuristically constructing preservation-worthy relationships between samples, we directly motivate the student to model the teacher's embedding manifold. If the mapped manifold is similar, all inter/intra-sample relationships are indirectly conserved. We first demonstrate that prior methods cannot preserve the teacher's latent manifold due to their sole reliance on $L_2$ normalised embedding features. Subsequently, we propose a simple objective to capture the information lost due to normalisation. Our proposed loss component, termed **space similarity**, motivates each dimension of a student's feature space to be similar to the corresponding dimension of its teacher. We perform extensive experiments demonstrating strong performance of our proposed approach on various benchmarks.
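A minimal sketch of what a per-dimension similarity objective could look like, assuming cosine similarity is taken between corresponding feature dimensions (columns of the batch-by-dimension feature matrices) of student and teacher. The abstract does not state the exact formula, so this form and the function names are illustrative assumptions, not the authors' implementation.

```python
import math


def column(matrix, j):
    """Extract feature dimension j across the whole batch."""
    return [row[j] for row in matrix]


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def space_similarity_loss(student, teacher):
    """1 minus the mean cosine similarity between matching feature dimensions.

    Both inputs are batch x dim lists of floats with identical shapes.
    """
    dims = len(teacher[0])
    sims = [cosine(column(student, j), column(teacher, j)) for j in range(dims)]
    return 1.0 - sum(sims) / dims


teacher = [[1.0, 2.0], [3.0, 4.0]]
# Each student row is a positive rescaling of the teacher row, so per-sample
# L2-normalised embeddings coincide, yet the per-dimension view still differs.
student = [[2.0, 4.0], [3.0, 4.0]]
loss_same = space_similarity_loss(teacher, teacher)
loss_scaled = space_similarity_loss(student, teacher)
```

In this toy case a loss built only on per-sample normalised features would see no difference between `student` and `teacher`, while the per-dimension term is nonzero.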

FolkTalent: Enhancing Classification and Tagging of Indian Folk Paintings

May 14, 2024

Abstract: Indian folk paintings have a rich mosaic of symbols, colors, textures, and stories, making them an invaluable repository of cultural legacy. The paper presents a novel approach to classifying these paintings into distinct art forms and tagging them with their unique salient features. A custom dataset named FolkTalent, comprising 2279 digital images of paintings across 12 different forms, has been prepared using websites that are direct outlets of Indian folk paintings. Tags covering a wide range of attributes like color, theme, artistic style, and patterns are generated using GPT4 and verified by an expert for each painting. Classification is performed by applying the RandomForest ensemble technique to fine-tuned Convolutional Neural Network (CNN) models, achieving an accuracy of 91.83%. Tagging is accomplished via prominent fine-tuned CNN-based backbones, each with a custom classifier attached on top to perform multi-label image classification. The generated tags offer a deeper insight into the painting, enabling an enhanced search experience based on theme and visual attributes. The proposed hybrid model sets a new benchmark in folk painting classification and tagging, significantly contributing to cataloging India's folk-art heritage.

AnoGAN for Tabular Data: A Novel Approach to Anomaly Detection

May 05, 2024

Abstract: Anomaly detection, a critical facet in data analysis, involves identifying patterns that deviate from expected behavior. This research addresses the complexities inherent in anomaly detection, exploring challenges and adapting to sophisticated malicious activities. With applications spanning cybersecurity, healthcare, finance, and surveillance, anomalies often signify critical information or potential threats. Inspired by the success of Anomaly Generative Adversarial Network (AnoGAN) in image domains, our research extends its principles to tabular data. Our contributions include adapting AnoGAN's principles to a new domain and promising advancements in detecting previously undetectable anomalies. This paper delves into the multifaceted nature of anomaly detection, considering the dynamic evolution of normal behavior, context-dependent anomaly definitions, and data-related challenges like noise and imbalances.

Imposing Exact Safety Specifications in Neural Reachable Tubes

Mar 31, 2024

Abstract: Hamilton-Jacobi (HJ) reachability analysis is a verification tool that provides safety and performance guarantees for autonomous systems. It is widely adopted because of its ability to handle nonlinear dynamical systems with bounded adversarial disturbances and constraints on states and inputs. However, it involves solving a PDE to compute a safety value function, whose computational and memory complexity scales exponentially with the state dimension, making its direct usage in large-scale systems intractable. Recently, a learning-based approach called DeepReach has been proposed to approximate high-dimensional reachable tubes using neural networks. While DeepReach has been shown to be effective, the accuracy of the learned solution decreases as system complexity increases. One of the reasons for this degradation is the inexact imposition of safety constraints during the learning process, which correspond to the PDE's boundary conditions. Specifically, DeepReach imposes boundary conditions as soft constraints in the loss function, which leaves room for error during the value function learning. Moreover, one needs to carefully adjust the relative contributions from the imposition of boundary conditions and the imposition of the PDE in the loss function. This, in turn, induces errors in the overall learned solution. In this work, we propose a variant of DeepReach that exactly imposes safety constraints during the learning process by restructuring the overall value function as a weighted sum of the boundary condition and neural network output. This eliminates the need for a boundary loss during training, thus bypassing the need for loss adjustment. We demonstrate the efficacy of the proposed approach in significantly improving the accuracy of learned solutions for challenging high-dimensional reachability tasks, such as rocket-landing and multivehicle collision-avoidance problems.
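One way to read "a weighted sum of the boundary condition and neural network output" is sketched below. It assumes the form V(t, x) = l(x) + (T - t) · N(t, x), where l is the boundary (safety) function, N is the network, and T is the terminal time at which the boundary condition holds. The specific weight (T - t), the obstacle geometry, and the toy stand-in for the network are assumptions for illustration and are not taken from the abstract.

```python
import math


def boundary_value(x):
    """Hypothetical l(x): signed distance to a unit-radius obstacle at the origin."""
    return math.hypot(x[0], x[1]) - 1.0


def toy_network(t, x):
    """Stand-in for a trained neural network N(t, x); any finite function works here."""
    return math.sin(t) + 0.5 * (x[0] + x[1])


def value_function(t, x, horizon=1.0, network=toy_network):
    """V(t, x) = l(x) + (T - t) * N(t, x).

    The network's contribution is weighted by (T - t), so it vanishes at the
    terminal time and V(T, x) equals l(x) for any network weights.
    """
    weight = horizon - t
    return boundary_value(x) + weight * network(t, x)


state = (3.0, 4.0)
terminal_value = value_function(1.0, state)  # boundary condition holds exactly
earlier_value = value_function(0.0, state)   # network output contributes here
```

Because the boundary condition is satisfied by construction, a training loss in this setup would only need the PDE residual term, which matches the motivation given in the abstract.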

Approximate Nullspace Augmented Finetuning for Robust Vision Transformers

Mar 15, 2024

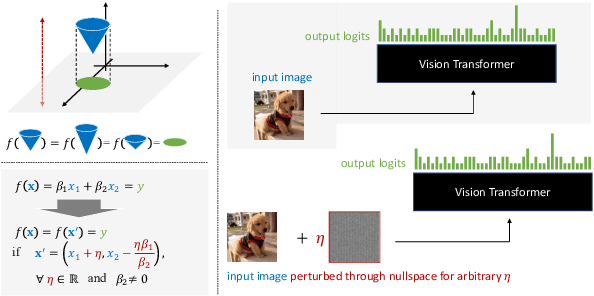

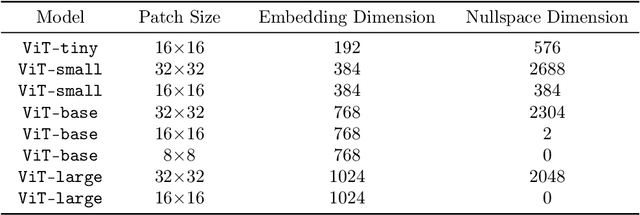

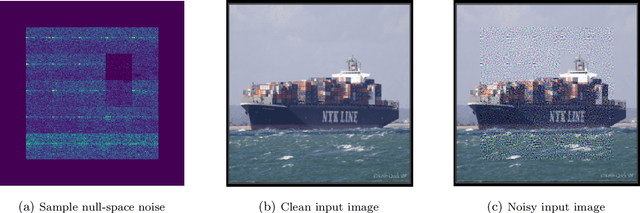

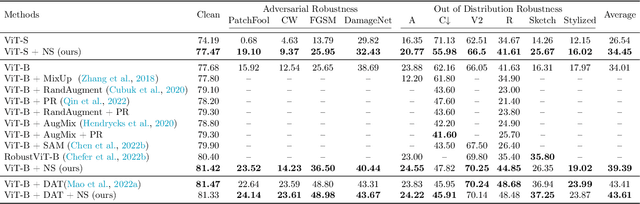

Abstract: Enhancing the robustness of deep learning models, particularly in the realm of vision transformers (ViTs), is crucial for their real-world deployment. In this work, we provide a finetuning approach to enhance the robustness of vision transformers inspired by the concept of nullspace from linear algebra. Our investigation centers on whether a vision transformer can exhibit resilience to input variations akin to the nullspace property in linear mappings, implying that perturbations sampled from this nullspace do not influence the model's output when added to the input. Firstly, we show that for many pretrained ViTs, a non-trivial nullspace exists due to the presence of the patch embedding layer. Secondly, as nullspace is a concept associated with linear algebra, we demonstrate that it is possible to synthesize approximate nullspace elements for the non-linear blocks of ViTs employing an optimisation strategy. Finally, we propose a finetuning strategy for ViTs wherein we augment the training data with synthesized approximate nullspace noise. After finetuning, we find that the model demonstrates robustness to adversarial and natural image perturbations alike.

A bi-objective $ε$-constrained framework for quality-cost optimization in language model ensembles

Dec 26, 2023

Abstract: We propose an ensembling framework that uses diverse open-sourced Large Language Models (LLMs) to achieve high response quality while maintaining cost efficiency. We formulate a bi-objective optimization problem to represent the quality-cost tradeoff and then introduce an additional budget constraint that reduces the problem to a straightforward 0/1 knapsack problem. We empirically demonstrate that our framework outperforms the existing ensembling approaches in response quality while significantly reducing costs.
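The reduction to a 0/1 knapsack problem mentioned in this abstract can be sketched with the standard dynamic program. The sketch assumes each candidate model has a scalar quality score and an integer cost, that quality adds up across selected models, and that the budget plays the role of the ε-constraint on cost. The model names, scores, and costs are hypothetical, and the abstract does not say how the paper defines its knapsack items.

```python
def select_models(candidates, budget):
    """Pick a subset of models maximizing total quality with total cost <= budget.

    candidates: list of (name, quality, cost) tuples with non-negative integer cost.
    Returns (best_total_quality, list_of_selected_names).
    """
    # best[b] holds the best (quality, selection) achievable with cost at most b.
    best = [(0.0, []) for _ in range(budget + 1)]
    for name, quality, cost in candidates:
        # Iterate budgets downward so each model is used at most once (0/1 knapsack).
        for b in range(budget, cost - 1, -1):
            with_model = best[b - cost][0] + quality
            if with_model > best[b][0]:
                best[b] = (with_model, best[b - cost][1] + [name])
    return best[budget]


# Hypothetical candidates: (name, quality score, cost in budget units).
candidates = [
    ("model_a", 0.6, 3),
    ("model_b", 0.5, 2),
    ("model_c", 0.4, 2),
]
best_quality, chosen = select_models(candidates, budget=4)
```

With a budget of 4, the two cheaper models together beat the single most capable one, which is the kind of quality-cost tradeoff the budget constraint is meant to expose.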

Understanding EEG signals for subject-wise Definition of Armoni Activities

Jan 03, 2023

Abstract: In a growing world of technology, psychological disorders have become a challenge to be solved. The methods used for cognitive stimulation are very conventional and based on one-way communication, which relies only on the material or method used for training an individual. They do not use any kind of feedback from the individual to analyze the progress of the training process. We propose a closed-loop methodology to improve the cognitive state of a person with ID (intellectual disability). We use a platform named 'Armoni' to provide training to intellectually disabled individuals. The learning is performed in a closed loop by using feedback in the form of change in affective state. For feedback to Armoni, an EEG (Electroencephalograph) headband is used. All changes in the EEG are observed and classified against the change in the mean and standard deviation of all frequency bands of the signal. This comparison helps define every activity with respect to changes in brain signals. In this paper, we discuss the processing of the EEG signal and its definition against the different activities of Armoni. We have tested it on 6 different systems with different age groups and cognitive levels.

Machine Learning Framework: Competitive Intelligence and Key Drivers Identification of Market Share Trends Among Healthcare Facilities

Dec 12, 2022

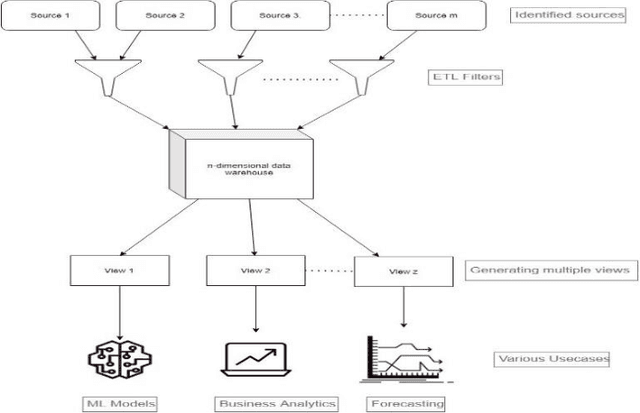

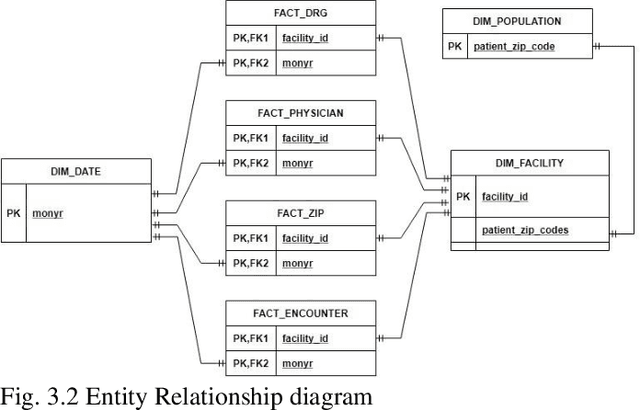

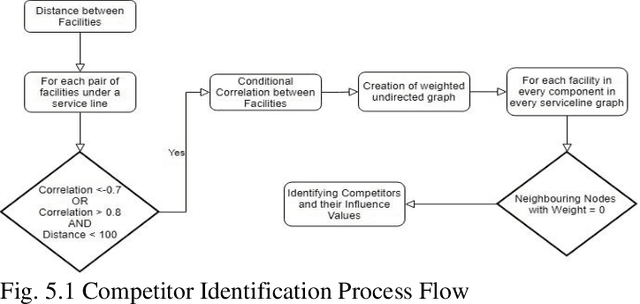

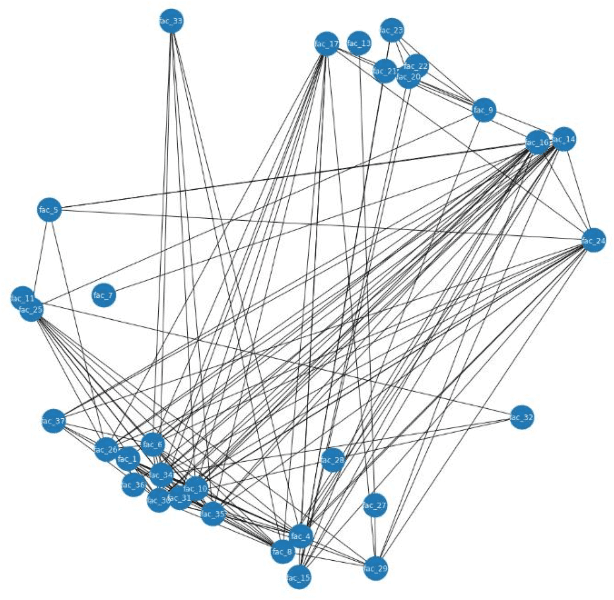

Abstract: The necessity of data-driven decisions in healthcare strategy formulation is rapidly increasing. A reliable framework that helps identify factors impacting the Market Share of a Healthcare Provider Facility or Hospital (from here on termed Facility) is of key importance. This pilot study aims at developing a data-driven Machine Learning regression framework that aids strategists in formulating key decisions to improve the Facility's Market Share, which in turn improves the quality of healthcare services. The US (United States) healthcare business is chosen for the study; data spanning 60 key Facilities in Washington State and about 3 years of history are considered. In the current analysis, Market Share is defined as the ratio of facility encounters to the total encounters among the group of potential competitor facilities. The current study proposes a novel two-pronged approach of competitor identification and regression to evaluate and predict market share, respectively. We leverage SHAP, a model-agnostic technique, to quantify the relative importance of features impacting the market share. The proposed method for identifying the pool of competitors develops Directed Acyclic Graphs (DAGs) and feature-level word vectors, and evaluates the key connected components at the facility level. This technique is robust because it is data driven, which minimizes the bias from empirical techniques. After identifying the set of competitors among facilities, we develop a regression model to predict the Market Share. For relative quantification of features at the facility level, we incorporate SHAP, a model-agnostic explainer. This helps identify and rank the attributes at each facility that impact the market share.
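The Market Share definition in this abstract (facility encounters divided by total encounters among the group of potential competitor facilities) amounts to a simple ratio. The sketch below assumes the facility itself is counted in the group total; the facility names and encounter counts are made up for illustration.

```python
def market_share(encounters, facility, competitors):
    """Share of encounters captured by `facility` within its competitor group.

    encounters: dict mapping facility name to its encounter count.
    competitors: names of the facility's potential competitors.
    Assumption: the group total includes the facility itself.
    """
    group = set(competitors) | {facility}
    total = sum(encounters[name] for name in group)
    if total == 0:
        raise ValueError("competitor group has no encounters")
    return encounters[facility] / total


# Hypothetical encounter counts for three facilities.
encounters = {"facility_a": 300, "facility_b": 500, "facility_c": 200}
share_a = market_share(encounters, "facility_a", ["facility_b", "facility_c"])
```

Under this definition a facility's share depends on which competitors are assigned to it, which is why the competitor identification step matters before any regression is fitted.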
