Balu Adsumilli

TABES: Trajectory-Aware Backward-on-Entropy Steering for Masked Diffusion Models

Jan 30, 2026

Abstract:Masked Diffusion Models (MDMs) have emerged as a promising non-autoregressive paradigm for generative tasks, offering parallel decoding and bidirectional context utilization. However, current sampling methods rely on simple confidence-based heuristics that ignore the long-term impact of local decisions, leading to trajectory lock-in where early hallucinations cascade into global incoherence. While search-based methods mitigate this, they incur prohibitive computational costs ($O(K)$ forward passes per step). In this work, we propose Backward-on-Entropy (BoE) Steering, a gradient-guided inference framework that approximates infinite-horizon lookahead via a single backward pass. We formally derive the Token Influence Score (TIS) from a first-order expansion of the trajectory cost functional, proving that the gradient of future entropy with respect to input embeddings serves as an optimal control signal for minimizing uncertainty. To ensure scalability, we introduce \texttt{ActiveQueryAttention}, a sparse adjoint primitive that exploits the structure of the masking objective to reduce backward pass complexity. BoE achieves a superior Pareto frontier for inference-time scaling compared to existing unmasking methods, demonstrating that gradient-guided steering offers a mathematically principled and efficient path to robust non-autoregressive generation. We will release the code.

Rate-Distortion Optimization with Non-Reference Metrics for UGC Compression

May 21, 2025

Abstract:Service providers must encode a large volume of noisy videos to meet the demand for user-generated content (UGC) in online video-sharing platforms. However, low-quality UGC challenges conventional codecs based on rate-distortion optimization (RDO) with full-reference metrics (FRMs). While effective for pristine videos, FRMs drive codecs to preserve artifacts when the input is degraded, resulting in suboptimal compression. A more suitable approach used to assess UGC quality is based on non-reference metrics (NRMs). However, RDO with NRMs as a measure of distortion requires an iterative workflow of encoding, decoding, and metric evaluation, which is computationally impractical. This paper overcomes this limitation by linearizing the NRM around the uncompressed video. The resulting cost function enables block-wise bit allocation in the transform domain by estimating the alignment of the quantization error with the gradient of the NRM. To avoid large deviations from the input, we add sum of squared errors (SSE) regularization. We derive expressions for both the SSE regularization parameter and the Lagrangian, akin to the relationship used for SSE-RDO. Experiments with images and videos show bitrate savings of more than 30% over SSE-RDO using the target NRM, with no decoder complexity overhead and minimal encoder complexity increase.
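
A minimal sketch of the linearization described in the abstract above, written in assumed notation that is not taken from the paper: $x$ is the input video, $\hat{x} = x + e$ its reconstruction with quantization error $e$, $Q$ a differentiable NRM taken here to be lower-is-better, $R$ the rate, $\lambda$ the Lagrangian, and $\mu$ the SSE regularization weight. A first-order Taylor expansion around $x$ gives

$$Q(\hat{x}) \approx Q(x) + \nabla Q(x)^\top e.$$

Since $Q(x)$ does not depend on the coding decision, a regularized cost of the following form could then be minimized:

$$J(e) = \nabla Q(x)^\top e + \mu \lVert e \rVert_2^2 + \lambda R.$$

The inner product $\nabla Q(x)^\top e$ is the alignment of the quantization error with the gradient of the NRM. Under an orthonormal transform it can be computed directly on transform coefficients, which is consistent with the block-wise, transform-domain allocation the abstract mentions. The exact cost and the expressions for $\mu$ and $\lambda$ are derived in the paper and are not reproduced here.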

CP-LLM: Context and Pixel Aware Large Language Model for Video Quality Assessment

May 21, 2025

Abstract:Video quality assessment (VQA) is a challenging research topic with broad applications. Effective VQA necessitates sensitivity to pixel-level distortions and a comprehensive understanding of video context to accurately determine the perceptual impact of distortions. Traditional hand-crafted and learning-based VQA models mainly focus on pixel-level distortions and lack contextual understanding, while recent LLM-based models struggle with sensitivity to small distortions or handle quality scoring and description as separate tasks. To address these shortcomings, we introduce CP-LLM: a Context and Pixel aware Large Language Model. CP-LLM is a novel multimodal LLM architecture featuring dual vision encoders designed to independently analyze perceptual quality at both high-level (video context) and low-level (pixel distortion) granularity, along with a language decoder that subsequently reasons about the interplay between these aspects. This design enables CP-LLM to simultaneously produce robust quality scores and interpretable quality descriptions, with enhanced sensitivity to pixel distortions (e.g. compression artifacts). The model is trained via a multi-task pipeline optimizing for score prediction, description generation, and pairwise comparisons. Experimental results demonstrate that CP-LLM achieves state-of-the-art cross-dataset performance on established VQA benchmarks and superior robustness to pixel distortions, confirming its efficacy for comprehensive and practical video quality assessment in real-world scenarios.

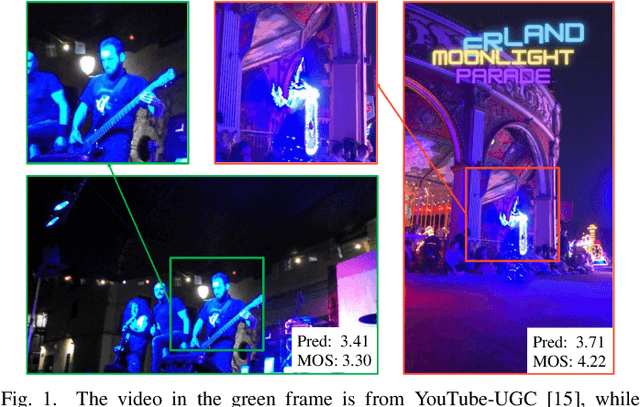

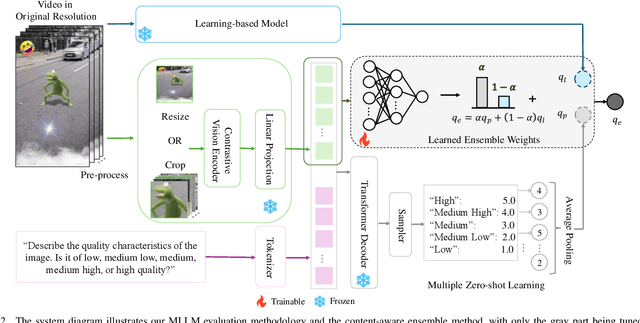

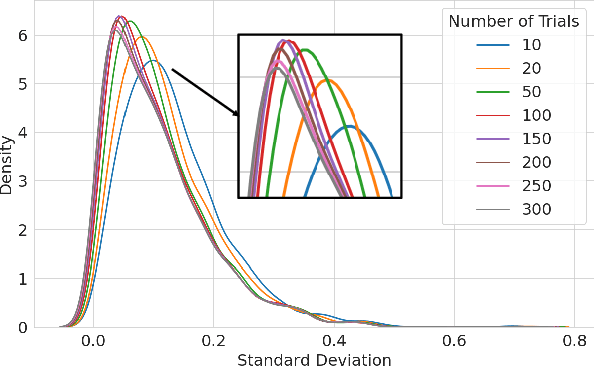

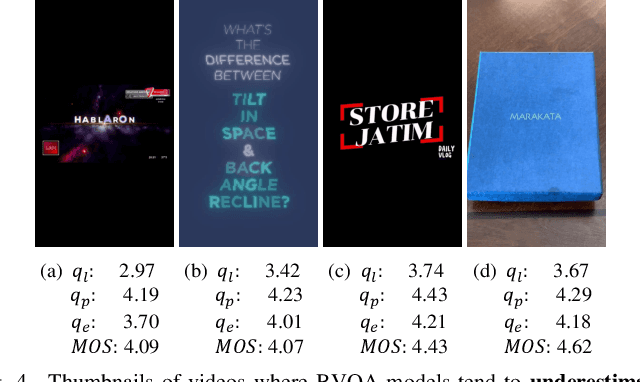

An Ensemble Approach to Short-form Video Quality Assessment Using Multimodal LLM

Dec 24, 2024

Abstract:The rise of short-form videos, characterized by diverse content, editing styles, and artifacts, poses substantial challenges for learning-based blind video quality assessment (BVQA) models. Multimodal large language models (MLLMs), renowned for their superior generalization capabilities, present a promising solution. This paper focuses on effectively leveraging a pretrained MLLM for short-form video quality assessment, examining the impacts of pre-processing and response variability and offering insights on combining the MLLM with BVQA models. We first investigate how frame pre-processing and sampling techniques influence the MLLM's performance. Then, we introduce a lightweight learning-based ensemble method that adaptively integrates predictions from the MLLM and state-of-the-art BVQA models. Our results demonstrate superior generalization performance with the proposed ensemble approach. Furthermore, the analysis of content-aware ensemble weights highlights that some video characteristics are not fully represented by existing BVQA models, revealing potential directions to improve BVQA models further.

YouTube SFV+HDR Quality Dataset

Jun 08, 2024

Abstract:Short-form video (SFV) has grown dramatically in popularity in the past few years and has become a phenomenal video category with billions of viewers. Meanwhile, High Dynamic Range (HDR), as an advanced feature, is also becoming more and more popular on video sharing platforms. As a hot topic with huge impact, SFV and HDR bring new questions to video quality research: 1) is SFV+HDR quality assessment significantly different from traditional User Generated Content (UGC) quality assessment? 2) do objective quality metrics designed for traditional UGC still work well for SFV+HDR? To answer the above questions, we created the first large-scale SFV+HDR dataset with reliable subjective quality scores, covering 10 popular content categories. Further, we introduce a general sampling framework to maximize the representativeness of the dataset. We provide a comprehensive analysis of subjective quality scores for short-form SDR and HDR videos, and discuss the reliability of state-of-the-art UGC quality metrics and potential improvements.

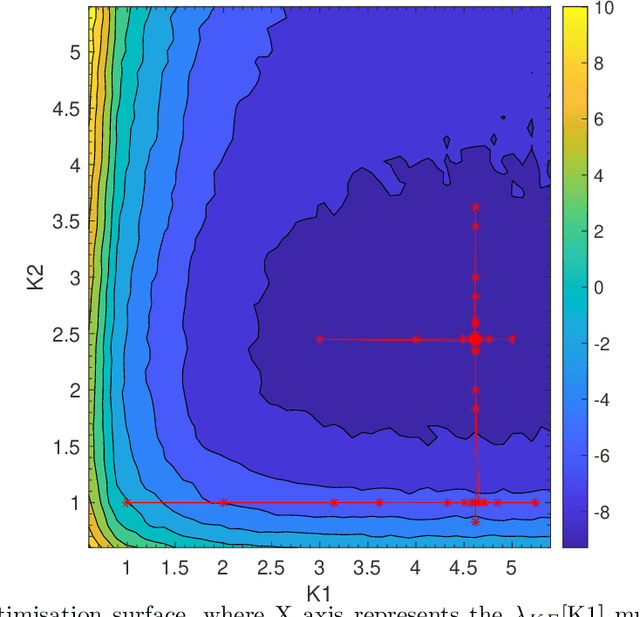

Comparison of HDR quality metrics in Per-Clip Lagrangian multiplier optimisation with AV1

Mar 28, 2023

Abstract:The complexity of modern codecs, along with the increased need to deliver high-quality videos at low bitrates, has reinforced the idea of a per-clip tailoring of parameters for optimised rate-distortion performance. While the objective quality metrics used for Standard Dynamic Range (SDR) videos have been well studied, the transitioning of consumer displays to support High Dynamic Range (HDR) videos poses a new challenge to rate-distortion optimisation. In this paper, we review the popular HDR metrics DeltaE100 (DE100), PSNRL100, wPSNR, and HDR-VQM. We measure the impact of employing these metrics in per-clip direct search optimisation of the rate-distortion Lagrange multiplier in AV1. We report, on 35 HDR videos, average Bjontegaard Delta Rate (BD-Rate) gains of 4.675%, 2.226%, and 7.253% in terms of DE100, PSNRL100, and HDR-VQM. We also show that the inclusion of chroma in the quality metrics has a significant impact on optimisation, which can only be partially addressed by the use of chroma offsets.

Rate-Distortion Optimization With Alternative References For UGC Video Compression

Mar 11, 2023

Abstract:User generated content (UGC) refers to videos that are uploaded by users and shared over the Internet. UGC may have low quality due to noise and previous compression. When re-encoding UGC for streaming or downloading, a traditional video coding pipeline will perform rate-distortion (RD) optimization to choose coding parameters. However, in the UGC video coding case, since the input is not pristine, quality "saturation" (or even degradation) can be observed, i.e., increased bitrate only leads to improved representation of coding artifacts and noise present in the UGC input. In this paper, we study the saturation problem in UGC compression, where the goal is to identify and avoid, during encoding, the coding parameters and rates that lead to quality saturation. We propose a geometric criterion for saturation detection that works with rate-distortion optimization and only requires a few frames from the UGC video. In addition, we show how to combine the proposed saturation detection method with existing video coding systems that implement rate-distortion optimization for efficient compression of UGC videos.

Direct Optimisation of $\boldsymbol{\lambda}$ for HDR Content Adaptive Transcoding in AV1

Aug 23, 2022

Abstract:Since the adoption of VP9 by Netflix in 2016, royalty-free coding standards have continued to gain prominence through the activities of the AOMedia consortium. AV1, the latest open source standard, is now widely supported. In the early years after standardisation, HDR video tends to be underserved in open source encoders for a variety of reasons, including the relatively small amount of true HDR content being broadcast and the challenges in RD optimisation with that material. AV1 codec optimisation has been ongoing since 2020, including consideration of the computational load. In this paper, we explore the idea of direct optimisation of the Lagrangian $\lambda$ parameter used in the rate control of the encoders to estimate the optimal Rate-Distortion trade-off achievable for a High Dynamic Range signalled video clip. We show that by adjusting the Lagrange multiplier in the RD optimisation process on a frame-hierarchy basis, we are able to increase the Bjontegaard difference rate gains by more than 3.98$\times$ on average without visually affecting the quality.
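
To illustrate why adjusting the Lagrange multiplier changes encoder decisions, here is a minimal sketch of the standard Lagrangian rate-distortion choice $J = D + \lambda R$. It is not the paper's method or any encoder's API: the function names, the operating points, and the multiplier values are hypothetical and chosen only for illustration.

```python
from typing import Dict, List, Tuple

def rd_choice(candidates: List[Tuple[float, float]], lam: float) -> int:
    """Return the index of the (rate, distortion) pair minimising J = D + lam * R."""
    costs = [d + lam * r for r, d in candidates]
    return min(range(len(costs)), key=costs.__getitem__)

def choices_for_multipliers(
    candidates: List[Tuple[float, float]],
    base_lambda: float,
    multipliers: List[float],
) -> Dict[float, int]:
    """Evaluate the RD choice for each scaling k applied to a base lambda."""
    return {k: rd_choice(candidates, k * base_lambda) for k in multipliers}

# Hypothetical operating points: (rate in bits, distortion as SSE).
points = [(100.0, 50.0), (200.0, 20.0), (400.0, 10.0)]

# A smaller multiplier favours low distortion (high rate);
# a larger multiplier favours low rate.
selected = choices_for_multipliers(points, 0.1, [0.25, 1.0, 4.0])
```

A direct search over the multiplier, as described in the abstract, would wrap an evaluation like this in an outer loop that re-encodes the clip and scores the result with a chosen quality metric; that outer loop is omitted here because it depends on the encoder.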

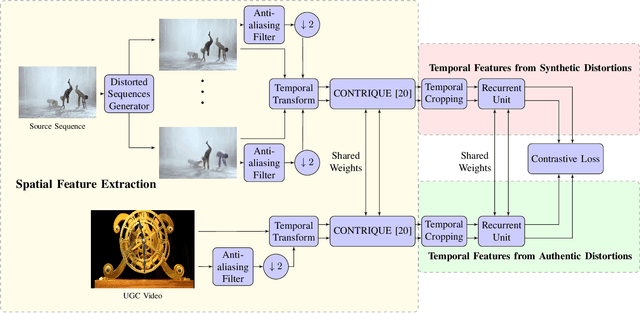

CONVIQT: Contrastive Video Quality Estimator

Jun 29, 2022

Abstract:Perceptual video quality assessment (VQA) is an integral component of many streaming and video sharing platforms. Here we consider the problem of learning perceptually relevant video quality representations in a self-supervised manner. Distortion type identification and degradation level determination are employed as auxiliary tasks to train a deep learning model containing a deep Convolutional Neural Network (CNN) that extracts spatial features, as well as a recurrent unit that captures temporal information. The model is trained using a contrastive loss, and we therefore refer to this training framework and resulting model as CONtrastive VIdeo Quality EstimaTor (CONVIQT). During testing, the weights of the trained model are frozen, and a linear regressor maps the learned features to quality scores in a no-reference (NR) setting. We conduct comprehensive evaluations of the proposed model on multiple VQA databases by analyzing the correlations between model predictions and ground-truth quality ratings, and achieve competitive performance when compared to state-of-the-art NR-VQA models, even though the model is not trained on those databases. Our ablation experiments demonstrate that the learned representations are highly robust and generalize well across synthetic and realistic distortions. Our results indicate that compelling representations with perceptual bearing can be obtained using self-supervised learning. The implementations used in this work have been made available at https://github.com/pavancm/CONVIQT.

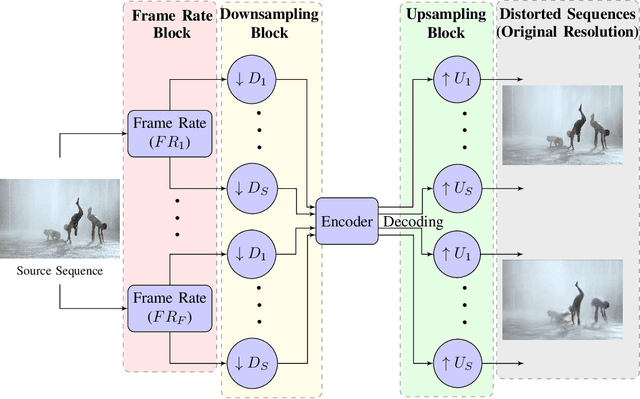

Making Video Quality Assessment Models Sensitive to Frame Rate Distortions

May 21, 2022

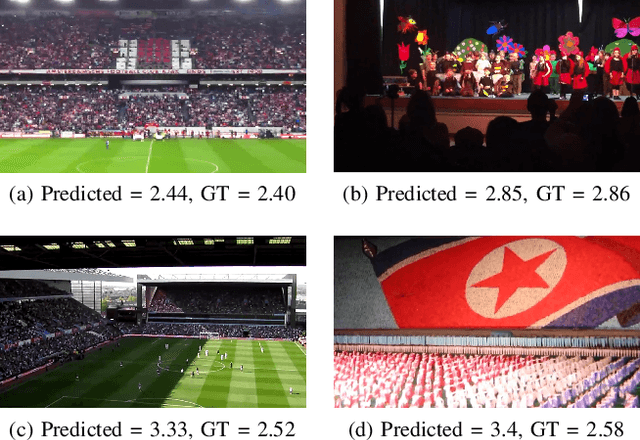

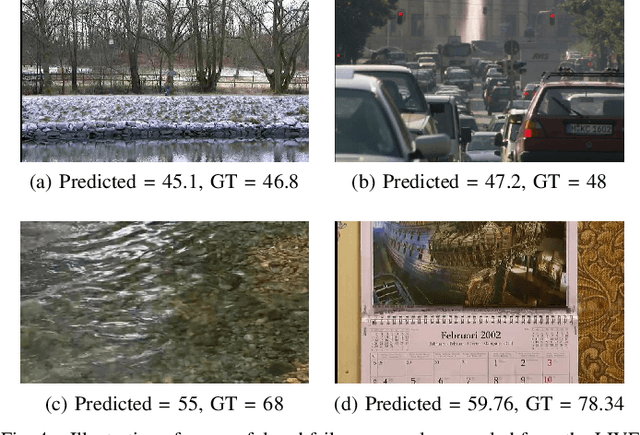

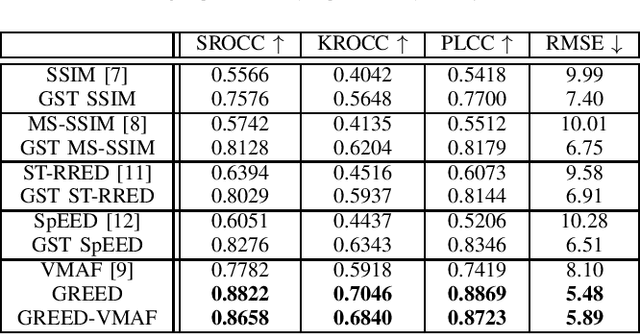

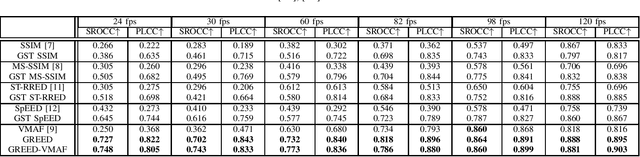

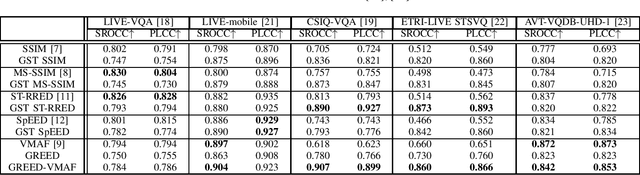

Abstract:We consider the problem of capturing distortions arising from changes in frame rate as part of Video Quality Assessment (VQA). Variable frame rate (VFR) videos have become much more common, and streamed videos commonly range from 30 frames per second (fps) up to 120 fps. VFR-VQA offers unique challenges in terms of distortion types as well as in making non-uniform comparisons of reference and distorted videos having different frame rates. The majority of current VQA models require compared videos to be of the same frame rate and are unable to adequately account for frame rate artifacts. The recently proposed Generalized Entropic Difference (GREED) VQA model succeeds at this task, using natural video statistics models of entropic differences of temporal band-pass coefficients, delivering superior performance on predicting video quality changes arising from frame rate distortions. Here we propose a simple fusion framework, whereby temporal features from GREED are combined with existing VQA models to improve model sensitivity to frame rate distortions. We find through extensive experiments that this feature fusion significantly boosts model performance on both HFR/VFR datasets and fixed frame rate (FFR) VQA databases. Our results suggest that employing efficient temporal representations can result in much more robust and accurate VQA models when frame rate variations can occur.