François Pitiè

Direct Optimisation of $\boldsymbolλ$ for HDR Content Adaptive Transcoding in AV1

Aug 23, 2022

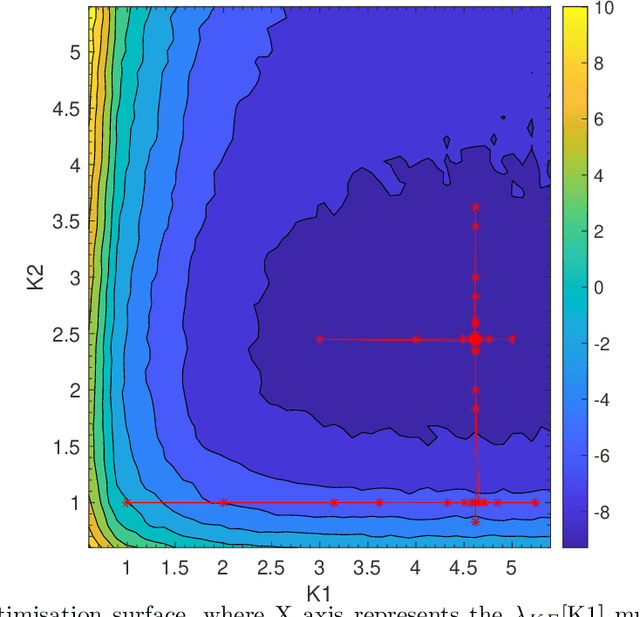

Abstract:Since the adoption of VP9 by Netflix in 2016, royalty-free coding standards continued to gain prominence through the activities of the AOMedia consortium. AV1, the latest open source standard, is now widely supported. In the early years after standardisation, HDR video tends to be under served in open source encoders for a variety of reasons including the relatively small amount of true HDR content being broadcast and the challenges in RD optimisation with that material. AV1 codec optimisation has been ongoing since 2020 including consideration of the computational load. In this paper, we explore the idea of direct optimisation of the Lagrangian $\lambda$ parameter used in the rate control of the encoders to estimate the optimal Rate-Distortion trade-off achievable for a High Dynamic Range signalled video clip. We show that by adjusting the Lagrange multiplier in the RD optimisation process on a frame-hierarchy basis, we are able to increase the Bjontegaard difference rate gains by more than 3.98$\times$ on average without visually affecting the quality.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge