Augusto Luis Ballardini

Human-like Semantic Navigation for Autonomous Driving using Knowledge Representation and Large Language Models

May 22, 2025

Abstract:Achieving full automation in self-driving vehicles remains a challenge, especially in dynamic urban environments where navigation requires real-time adaptability. Existing systems struggle to handle navigation plans when faced with unpredictable changes in road layouts, spontaneous detours, or missing map data, due to their heavy reliance on predefined cartographic information. In this work, we explore the use of Large Language Models to generate Answer Set Programming rules by translating informal navigation instructions into structured, logic-based reasoning. ASP provides non-monotonic reasoning, allowing autonomous vehicles to adapt to evolving scenarios without relying on predefined maps. We present an experimental evaluation in which LLMs generate ASP constraints that encode real-world urban driving logic into a formal knowledge representation. By automating the translation of informal navigation instructions into logical rules, our method improves adaptability and explainability in autonomous navigation. Results show that LLM-driven ASP rule generation supports semantic-based decision-making, offering an explainable framework for dynamic navigation planning that aligns closely with how humans communicate navigational intent.

RAG-based Explainable Prediction of Road Users Behaviors for Automated Driving using Knowledge Graphs and Large Language Models

May 01, 2024

Abstract:Prediction of road users' behaviors in the context of autonomous driving has gained considerable attention from the scientific community in recent years. Most works focus on predicting behaviors based on kinematic information alone, a simplification of reality since road users are humans, and as such they are highly influenced by their surrounding context. In addition, a large body of research relies on powerful Deep Learning techniques, which exhibit high performance metrics in prediction tasks but may lack the ability to fully understand and exploit the contextual semantic information contained in the road scene, not to mention their inability to provide explainable predictions that can be understood by humans. In this work, we propose an explainable road users' behavior prediction system that integrates the reasoning abilities of Knowledge Graphs (KG) and the expressiveness capabilities of Large Language Models (LLM) by using Retrieval Augmented Generation (RAG) techniques. For that purpose, Knowledge Graph Embeddings (KGE) and Bayesian inference are combined to allow the deployment of a fully inductive reasoning system that enables the issuing of predictions that rely on legacy information contained in the graph as well as on current evidence gathered in real time by onboard sensors. Two use cases have been implemented following the proposed approach: 1) Prediction of pedestrians' crossing actions; 2) Prediction of lane change maneuvers. In both cases, the performance attained surpasses the current state of the art in terms of anticipation and F1-score, showing a promising avenue for future research in this field.

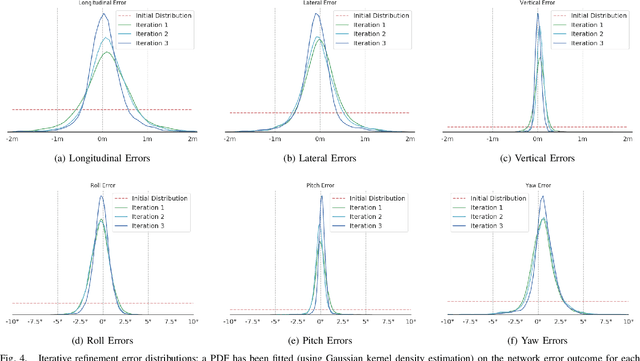

A comparison of uncertainty estimation approaches for DNN-based camera localization

Nov 02, 2022

Abstract:Camera localization, i.e., camera pose regression, represents a very important task in computer vision, since it has many practical applications, such as autonomous driving. A reliable estimation of the uncertainties in camera localization is also important, as it would make it possible to intercept localization failures, which would be dangerous. Even though the literature presents some uncertainty estimation methods, to the best of our knowledge their effectiveness has not been thoroughly examined. This work compares the performance of three consolidated epistemic uncertainty estimation methods: Monte Carlo Dropout (MCD), Deep Ensemble (DE), and Deep Evidential Regression (DER), in the specific context of camera localization. We exploited CMRNet, a DNN approach for multi-modal image to LiDAR map registration, by modifying its internal configuration to allow for an extensive experimental activity with the three methods on the KITTI dataset. Particularly significant has been the application of DER. We achieve accurate camera localization and a calibrated uncertainty, to the point that some of the methods can be used for detecting localization failures.
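As a rough illustration of how sampling-based methods such as MCD and DE turn several predictions into an uncertainty estimate, the sketch below computes a per-coordinate mean and variance over a set of sampled positions and flags a possible failure when the total variance is large. The function names, the sample values, and the threshold are hypothetical; this is not the configuration used with CMRNet in the paper.

```python
from statistics import fmean, pvariance


def summarize_samples(samples):
    """Return per-coordinate mean and variance over sampled predictions.

    `samples` holds one position prediction per ensemble member (DE) or per
    stochastic forward pass (MCD). All values here are hypothetical.
    """
    dims = list(zip(*samples))  # group the values coordinate by coordinate
    mean = [fmean(d) for d in dims]
    var = [pvariance(d) for d in dims]
    return mean, var


def is_failure(var, threshold):
    """Flag a localization as unreliable when the total variance is too large."""
    return sum(var) > threshold


# Hypothetical (x, y, z) predictions in metres from five samples.
samples = [
    (10.0, 2.0, 0.5),
    (10.2, 2.1, 0.5),
    (9.8, 1.9, 0.5),
    (10.1, 2.0, 0.5),
    (9.9, 2.0, 0.5),
]
mean, var = summarize_samples(samples)
print(mean, var, is_failure(var, threshold=0.5))
```

The spread of the samples stands in for epistemic uncertainty here; DER works differently, since it predicts the parameters of an evidential distribution in a single forward pass.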

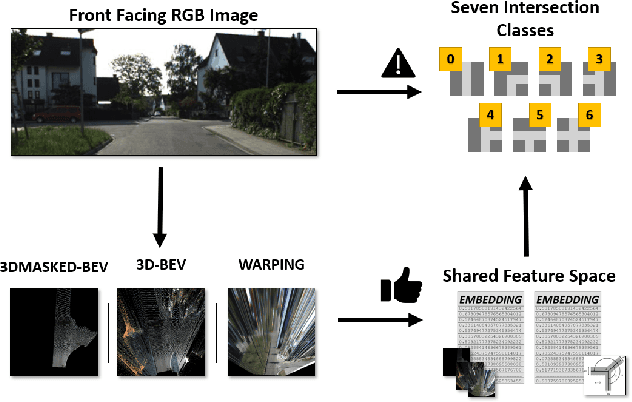

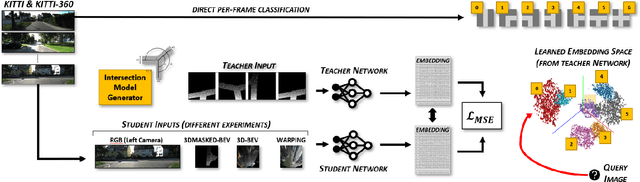

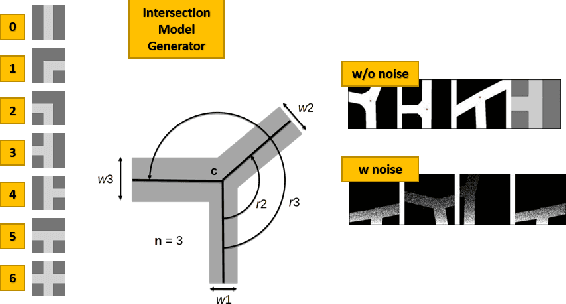

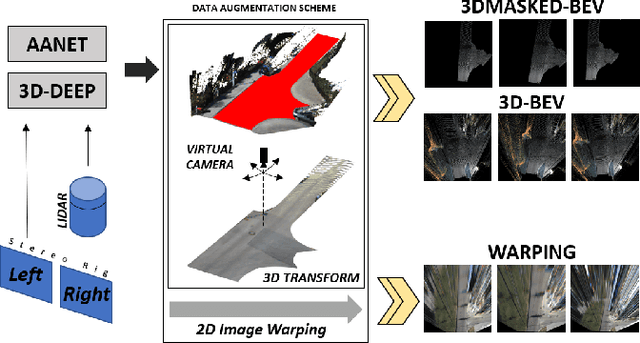

Model Guided Road Intersection Classification

Apr 26, 2021

Abstract:Understanding complex scenarios from in-vehicle cameras is essential for safely operating autonomous driving systems in densely populated areas. Among these, intersection areas are one of the most critical as they concentrate a considerable number of traffic accidents and fatalities. Detecting and understanding the scene configuration of these usually crowded areas is then of extreme importance for both autonomous vehicles and modern ADAS aimed at preventing road crashes and increasing the safety of vulnerable road users. This work investigates intersection classification from RGB images using well-consolidated neural network approaches along with a method to enhance the results based on the teacher/student training paradigm. An extensive experimental activity aimed at identifying the best input configuration and evaluating different network parameters on both the well-known KITTI dataset and the new KITTI-360 sequences shows that our method outperforms current state-of-the-art approaches on a per-frame basis and proves the effectiveness of the proposed learning scheme.

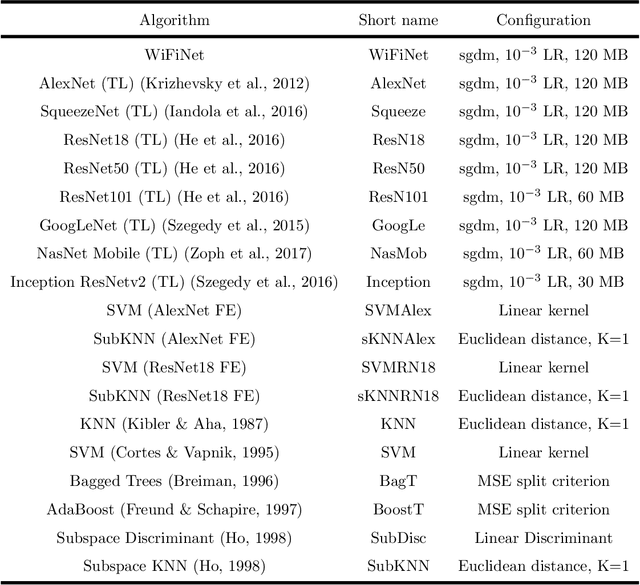

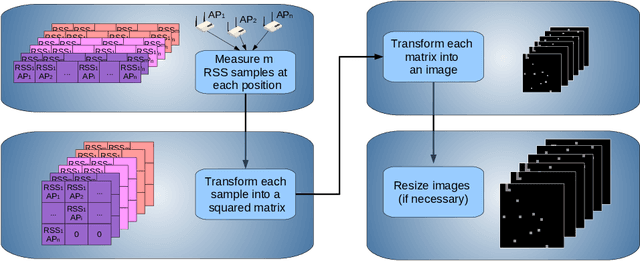

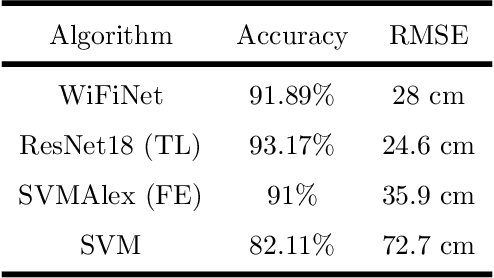

WiFiNet: WiFi-based indoor localisation using CNNs

Apr 14, 2021

Abstract:Different technologies have been proposed to provide indoor localisation: magnetic field, Bluetooth, WiFi, etc. Among them, WiFi is the one with the highest availability and highest accuracy. This fact allows for a ubiquitous, accurate localisation available for almost any environment and any device. However, WiFi-based localisation is still an open problem. In this article, we propose a new WiFi-based indoor localisation system that takes advantage of the great ability of Convolutional Neural Networks in classification problems. Three different approaches were used to achieve this goal: a custom architecture called WiFiNet designed and trained specifically to solve this problem and the most popular pre-trained networks using both transfer learning and feature extraction. Results indicate that WiFiNet is a great approach for indoor localisation in a medium-sized environment (30 positions and 113 access points) as it reduces the mean localisation error (33%) and the processing time when compared with state-of-the-art WiFi indoor localisation algorithms such as SVM.

Fail-Aware LIDAR-Based Odometry for Autonomous Vehicles

Mar 05, 2021

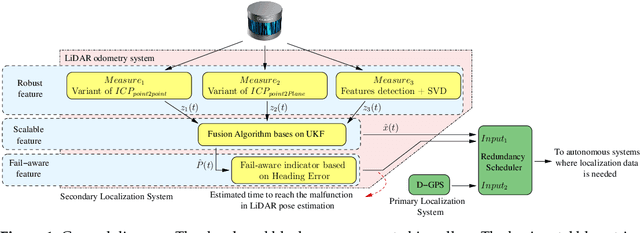

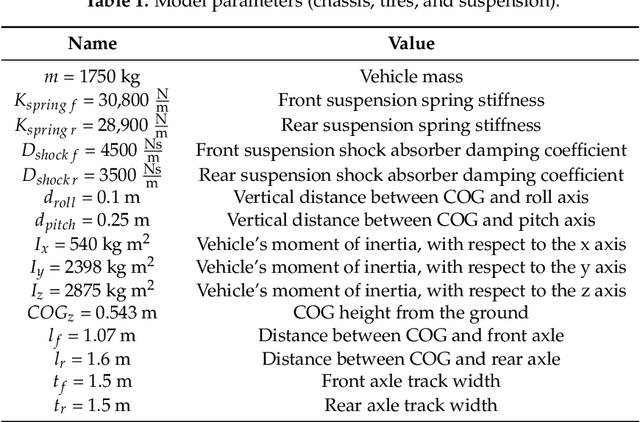

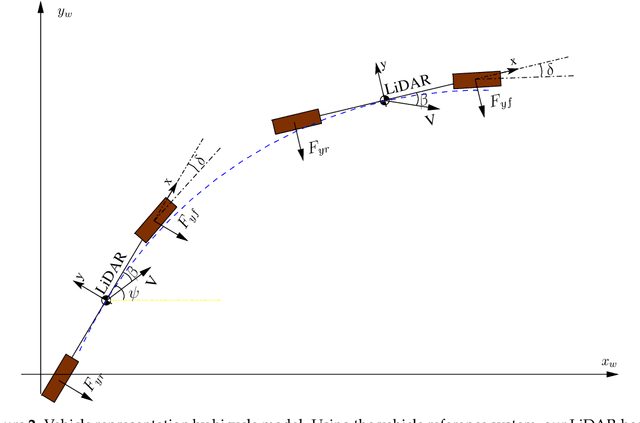

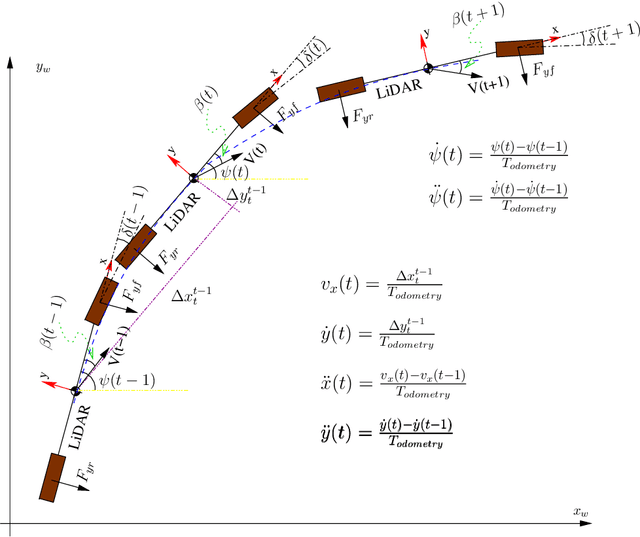

Abstract:Autonomous driving systems are set to become a reality in transport systems and, so, maximum acceptance is being sought among users. Currently, the most advanced architectures require driver intervention when functional system failures or critical sensor operations take place, presenting problems related to driver state, distractions, fatigue, and other factors that prevent safe control. Therefore, this work presents a redundant, accurate, robust, and scalable LiDAR odometry system with fail-aware system features that can allow other systems to perform a safe stop manoeuvre without driver mediation. All odometry systems have drift error, making it difficult to use them for localisation tasks over extended periods. For this reason, the paper presents an accurate LiDAR odometry system with a fail-aware indicator. This indicator estimates a time window in which the system manages the localisation tasks appropriately. The odometry error is minimised by applying a dynamic 6-DoF model and fusing measures based on the Iterative Closest Points (ICP), environment feature extraction, and Singular Value Decomposition (SVD) methods. The obtained results are promising for two reasons: First, in the KITTI odometry data set, the ranking achieved by the proposed method is twelfth, considering only LiDAR-based methods, where its translation and rotation errors are 1.00% and 0.0041 deg/m, respectively. Second, the encouraging results of the fail-aware indicator demonstrate the safety of the proposed LiDAR odometry system. The results show that, in order to achieve an accurate odometry system, complex models and measurement fusion techniques must be used to improve its behaviour. Furthermore, if an odometry system is to be used for redundant localisation features, it must integrate a fail-aware indicator for use in a safe manner.
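To make the point-cloud alignment step behind ICP-style odometry more concrete, here is a minimal 2D sketch of the least-squares rigid alignment between two point sets with known correspondences. It is a deliberate simplification and not the paper's 6-DoF pipeline: in 3D this step is commonly solved with an SVD, while in 2D the rotation has the closed form used below. The function name `align_2d` and the sample points are assumptions made for illustration.

```python
import math


def align_2d(source, target):
    """Least-squares rotation angle and translation mapping source onto target.

    Assumes source[i] corresponds to target[i]. A full ICP loop would
    re-estimate these correspondences by nearest-neighbour search on every
    iteration and repeat this alignment until convergence.
    """
    n = len(source)
    sx = sum(p[0] for p in source) / n
    sy = sum(p[1] for p in source) / n
    tx = sum(q[0] for q in target) / n
    ty = sum(q[1] for q in target) / n

    s_dot = 0.0
    s_cross = 0.0
    for (px, py), (qx, qy) in zip(source, target):
        ax, ay = px - sx, py - sy  # centred source point
        bx, by = qx - tx, qy - ty  # centred target point
        s_dot += ax * bx + ay * by
        s_cross += ax * by - ay * bx

    theta = math.atan2(s_cross, s_dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation moves the rotated source centroid onto the target centroid.
    dx = tx - (c * sx - s * sy)
    dy = ty - (s * sx + c * sy)
    return theta, (dx, dy)


# Hypothetical scan: rotate by 30 degrees and shift by (2, -1), then recover it.
source = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
true_theta = math.radians(30.0)
c, s = math.cos(true_theta), math.sin(true_theta)
target = [(c * x - s * y + 2.0, s * x + c * y - 1.0) for x, y in source]

theta, (dx, dy) = align_2d(source, target)
print(math.degrees(theta), dx, dy)
```

Chaining such frame-to-frame transforms gives an odometry estimate, which is also why small alignment errors accumulate into the drift the abstract mentions.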

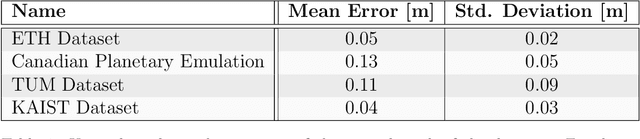

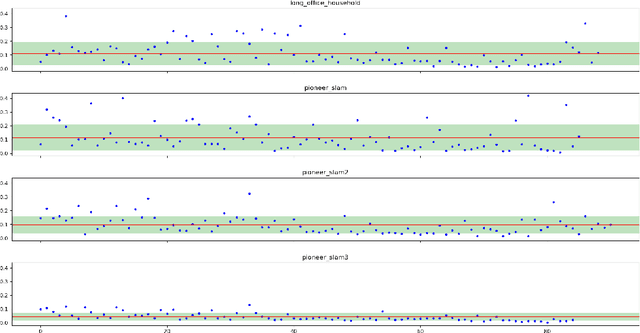

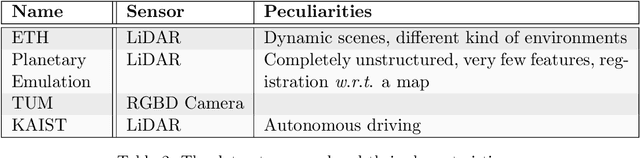

A Benchmark for Point Clouds Registration Algorithms

Apr 06, 2020

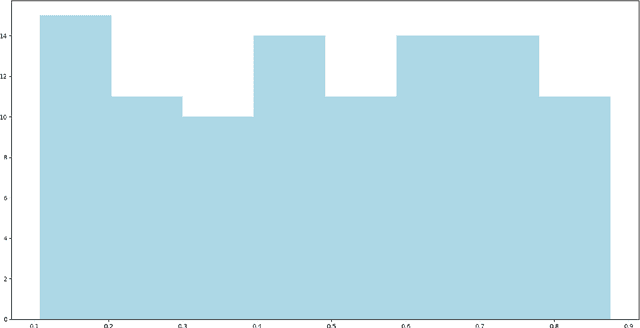

Abstract:Point clouds registration is a fundamental step of many point clouds processing pipelines; however, most algorithms are tested on data collected ad-hoc and not shared with the research community. These data often cover only a very limited set of use cases; therefore, the results cannot be generalised. Public datasets proposed until now, taken individually, cover only a few kinds of environment and mostly a single sensor. For these reasons, we developed a benchmark, for localization and mapping applications, using multiple publicly available datasets. In this way, we have been able to cover many kinds of environments and many kinds of sensors that can produce point clouds. Furthermore, the ground truth has been thoroughly inspected and evaluated to ensure its quality. For some of the datasets, the accuracy of the ground truth system was not reported by the original authors, therefore we estimated it with our own novel method, based on an iterative registration algorithm. Along with the data, we provide a broad set of registration problems, chosen to cover different types of initial misalignment, various degrees of overlap, and different kinds of registration problems. Lastly, we propose a metric to measure the performance of registration algorithms: it combines the commonly used rotation and translation errors together, to allow an objective comparison of the alignments. This work aims at encouraging authors to use a public and shared benchmark, instead of data collected ad-hoc, to ensure objectivity and repeatability, two fundamental characteristics in any scientific field.

Vehicle Ego-Lane Estimation with Sensor Failure Modeling

Feb 06, 2020

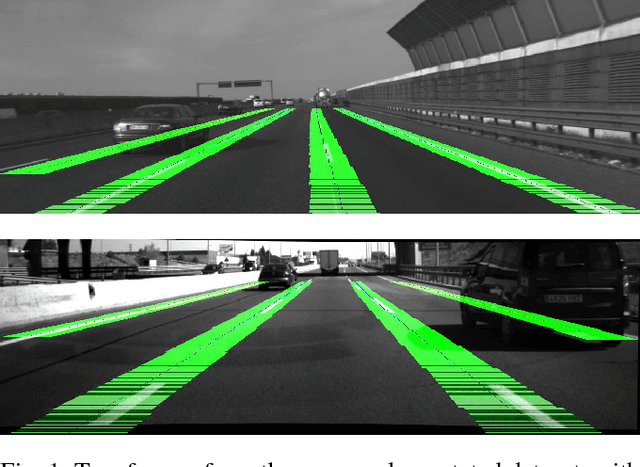

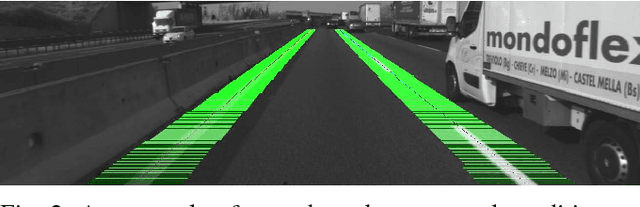

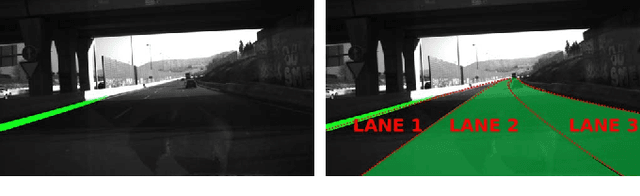

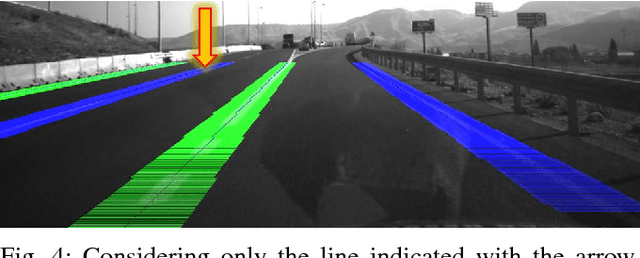

Abstract:We present a probabilistic ego-lane estimation algorithm for highway-like scenarios that is designed to increase the accuracy of the ego-lane estimate, which can be obtained relying only on a noisy line detector and tracker. The contribution relies on a Hidden Markov Model (HMM) with a transient failure model. The proposed algorithm exploits the OpenStreetMap (or other cartographic services) road property lane number as the expected number of lanes and leverages consecutive, possibly incomplete, observations. The algorithm's effectiveness is proven by employing different line detectors and showing we could achieve much more usable, i.e. stable and reliable, ego-lane estimates over more than 100 km of highway scenarios, recorded both in Italy and Spain. Moreover, as we could not find a suitable dataset for a quantitative comparison with other approaches, we collected datasets and manually annotated the Ground Truth about the vehicle ego-lane. Such datasets are made publicly available for use by the scientific community.
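The idea of filtering noisy, possibly missing lane observations with an HMM can be sketched as a small forward filter over lane indices. This is a simplified illustration under stated assumptions, not the paper's model: the transition probability `p_stay`, the failure probability `p_fail`, and the way a failure is treated (the detector output is considered uninformative) are all hypothetical choices, and the number of lanes is simply passed in where the paper takes it from the map.

```python
def predict(belief, p_stay=0.9):
    """Propagate the lane belief: stay in lane or move to an adjacent lane."""
    n = len(belief)
    new = [0.0] * n
    for i, b in enumerate(belief):
        neighbours = [j for j in (i - 1, i + 1) if 0 <= j < n]
        if not neighbours:  # single-lane road: nowhere else to go
            new[i] += b
            continue
        new[i] += b * p_stay
        for j in neighbours:
            new[j] += b * (1.0 - p_stay) / len(neighbours)
    return new


def update(belief, observed_lane, p_fail=0.2):
    """Fuse one detector reading; None means no observation was available."""
    if observed_lane is None:
        return belief  # missing observation: keep the predicted belief
    n = len(belief)
    # With probability p_fail the reading is treated as uninformative (uniform),
    # otherwise the detector is assumed to report the true lane.
    likelihood = [
        p_fail / n + ((1.0 - p_fail) if i == observed_lane else 0.0)
        for i in range(n)
    ]
    posterior = [l * b for l, b in zip(likelihood, belief)]
    total = sum(posterior)
    return [p / total for p in posterior]


def filter_lanes(n_lanes, observations):
    """Run the forward filter from a uniform prior over n_lanes lanes."""
    belief = [1.0 / n_lanes] * n_lanes
    for obs in observations:
        belief = update(predict(belief), obs)
    return belief


# Hypothetical readings on a three-lane road: one gap and one outlier.
belief = filter_lanes(3, [1, 1, None, 0, 1, 1])
best_lane = max(range(3), key=belief.__getitem__)
print(best_lane, belief)
```

Because evidence accumulates over consecutive frames, a single missing or wrong reading shifts the belief without permanently overriding it.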

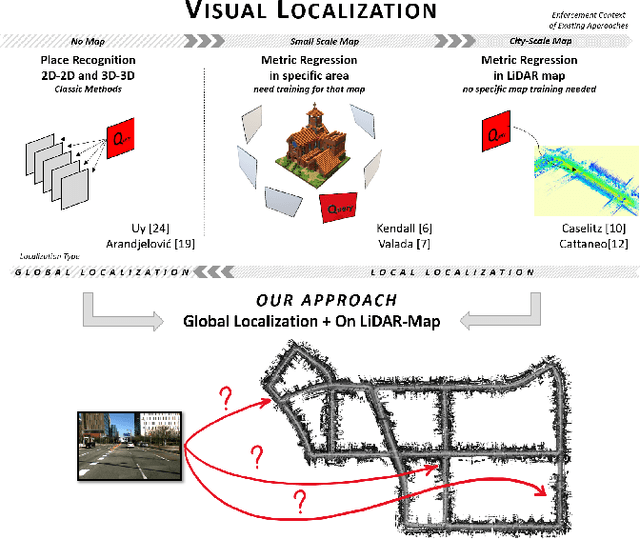

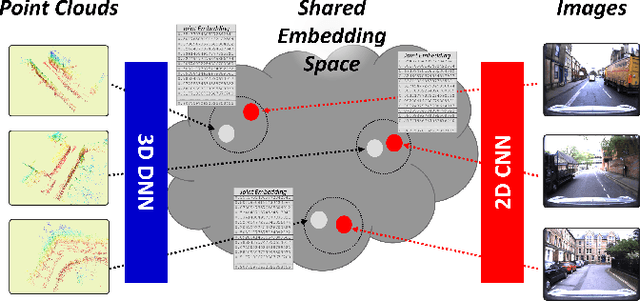

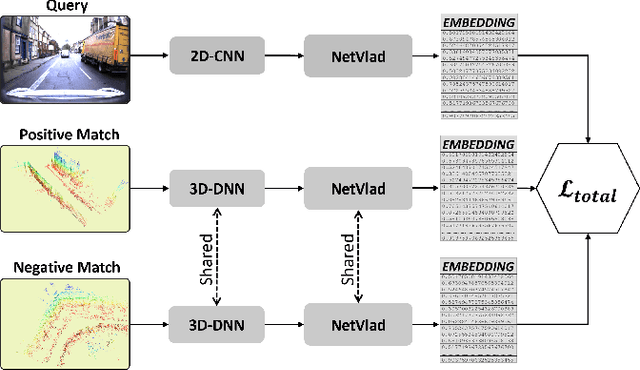

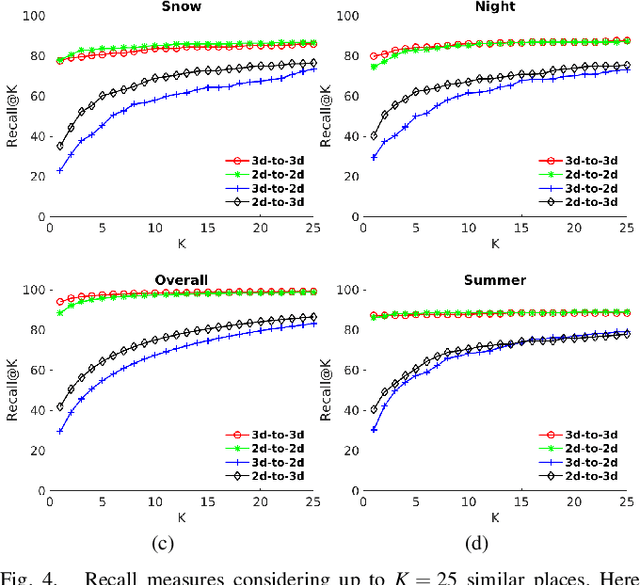

Global visual localization in LiDAR-maps through shared 2D-3D embedding space

Oct 02, 2019

Abstract:Global localization is an important and widely studied problem for many robotic applications. Place recognition approaches can be exploited to solve this task, e.g., in the autonomous driving field. While most vision-based approaches match an image w.r.t. an image database, global visual localization within LiDAR-maps remains fairly unexplored, even though the path toward high definition 3D maps, produced mainly from LiDARs, is clear. In this work we leverage DNN approaches to create a shared embedding space between images and LiDAR-maps, allowing for image to 3D-LiDAR place recognition. We trained a 2D and a 3D Deep Neural Network (DNN) that create embeddings, respectively from images and from point clouds, that are close to each other when they refer to the same place. An extensive experimental activity is presented to assess the effectiveness of the approach w.r.t. different learning methods, network architectures, and loss functions. All the evaluations have been performed using the Oxford Robotcar Dataset, which encompasses a wide range of weather and light conditions.
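Once images and point clouds live in the same embedding space, place recognition reduces to a nearest-neighbour search. The sketch below shows that retrieval step only, with made-up three-dimensional vectors standing in for the outputs of the 2D and 3D networks; the names `nearest_place` and `map_embeddings` are hypothetical, and the choice of Euclidean distance on normalized vectors is an assumption.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so distances compare direction only."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def nearest_place(query, database):
    """Return the place id whose embedding is closest to the query embedding.

    `database` maps place ids to embeddings of LiDAR submaps; `query` is the
    embedding of a camera image. Both are assumed to share one space.
    """
    q = l2_normalize(query)
    return min(database, key=lambda k: math.dist(q, l2_normalize(database[k])))


# Hypothetical embeddings; real ones would come from the trained networks.
map_embeddings = {
    "place_a": [0.9, 0.1, 0.0],
    "place_b": [0.1, 0.8, 0.3],
    "place_c": [0.0, 0.2, 0.9],
}
image_embedding = [0.2, 0.7, 0.2]
match = nearest_place(image_embedding, map_embeddings)
print(match)
```

A linear scan like this is fine for a handful of places; a large map would normally use an approximate nearest-neighbour index instead.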

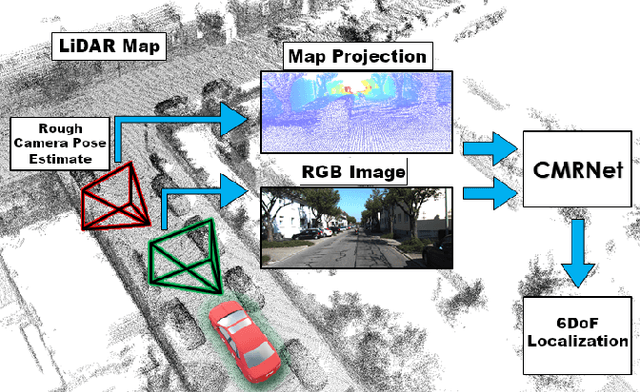

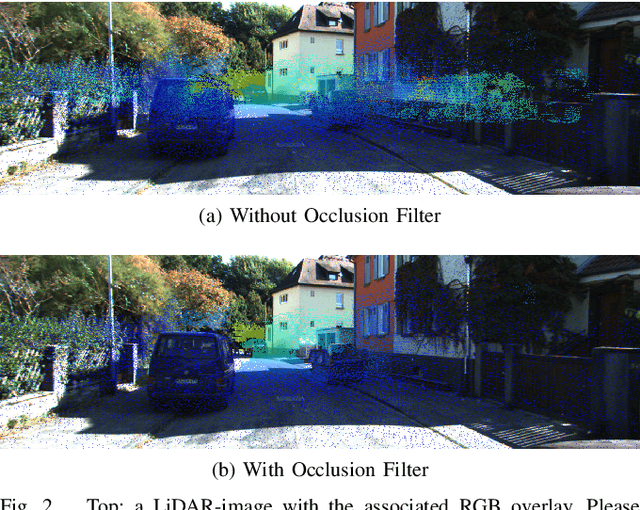

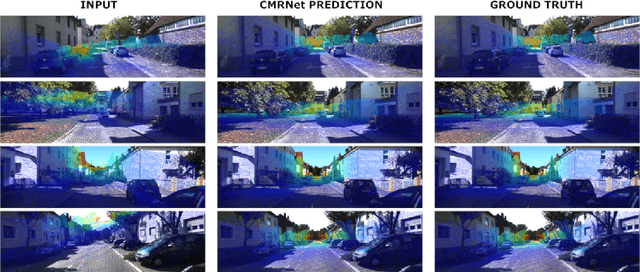

CMRNet: Camera to LiDAR-Map Registration

Jul 17, 2019

Abstract:In this paper we present CMRNet, a real-time approach based on a Convolutional Neural Network to localize an RGB image of a scene in a map built from LiDAR data. Our network is not trained in the working area, i.e. CMRNet does not learn the map. Instead, it learns to match an image to the map. We validate our approach on the KITTI dataset, processing each frame independently without any tracking procedure. CMRNet achieves 0.27m and 1.07deg median localization accuracy on the sequence 00 of the odometry dataset, starting from a rough pose estimate displaced up to 3.5m and 17deg. To the best of our knowledge this is the first CNN-based approach that learns to match images from a monocular camera to a given, preexisting 3D LiDAR-map.
