Rubén Izquierdo

Behavioural gap assessment of human-vehicle interaction in real and virtual reality-based scenarios in autonomous driving

Jul 04, 2024

Abstract:In the field of autonomous driving research, the use of immersive virtual reality (VR) techniques is widespread to enable a variety of studies under safe and controlled conditions. However, this methodology is only valid and consistent if the conduct of participants in the simulated setting mirrors their actions in an actual environment. In this paper, we present a first and innovative approach to evaluating what we term the behavioural gap, a concept that captures the disparity in a participant's conduct when engaging in a VR experiment compared to an equivalent real-world situation. To this end, we developed a digital twin of a pre-existing crosswalk and carried out a field experiment (N=18) to investigate pedestrian-autonomous vehicle interaction in both real and simulated driving conditions. In the experiment, the pedestrian attempts to cross the road in the presence of different driving styles and an external Human-Machine Interface (eHMI). By combining survey-based and behavioural analysis methodologies, we develop a quantitative approach to empirically assess the behavioural gap, as a mechanism to validate data obtained from real subjects interacting in a simulated VR-based environment. Results show that participants are more cautious and curious in VR, affecting their speed and decisions, and that VR interfaces significantly influence their actions.

RAG-based Explainable Prediction of Road Users Behaviors for Automated Driving using Knowledge Graphs and Large Language Models

May 01, 2024

Abstract:Prediction of road users' behaviors in the context of autonomous driving has gained considerable attention from the scientific community in recent years. Most works focus on predicting behaviors based on kinematic information alone, a simplification of reality, since road users are humans and as such are highly influenced by their surrounding context. In addition, a plethora of research works rely on powerful Deep Learning techniques, which exhibit high performance metrics in prediction tasks but may lack the ability to fully understand and exploit the contextual semantic information contained in the road scene, not to mention their inability to provide explainable predictions that can be understood by humans. In this work, we propose an explainable road users' behavior prediction system that integrates the reasoning abilities of Knowledge Graphs (KG) and the expressive capabilities of Large Language Models (LLM) by using Retrieval Augmented Generation (RAG) techniques. For that purpose, Knowledge Graph Embeddings (KGE) and Bayesian inference are combined to allow the deployment of a fully inductive reasoning system that enables the issuing of predictions that rely on legacy information contained in the graph as well as on current evidence gathered in real time by onboard sensors. Two use cases have been implemented following the proposed approach: 1) Prediction of pedestrians' crossing actions; 2) Prediction of lane change maneuvers. In both cases, the performance attained surpasses the current state of the art in terms of anticipation and F1-score, showing a promising avenue for future research in this field.
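As a rough illustration of how a prior drawn from stored knowledge can be combined with evidence arriving in real time, the sketch below applies Bayes' rule sequentially to a binary "will cross" hypothesis. It is not the system described in the abstract: the function name, the prior, and the likelihood values are all hypothetical, and the cues are assumed to be conditionally independent.

```python
def bayes_update(prior_cross, lik_if_cross, lik_if_not):
    """Posterior P(cross | cue) from a prior and the cue's two likelihoods."""
    numerator = prior_cross * lik_if_cross
    evidence = numerator + (1.0 - prior_cross) * lik_if_not
    return numerator / evidence


# Hypothetical prior, standing in for legacy information held in the graph.
p_cross = 0.3

# Hypothetical real-time cues as (P(cue | cross), P(cue | not cross)).
cues = [(0.8, 0.3), (0.7, 0.4)]

for lik_cross, lik_not in cues:
    # Each new cue refines the belief left by the previous ones.
    p_cross = bayes_update(p_cross, lik_cross, lik_not)

print(f"P(cross | cues) = {p_cross:.3f}")
```

Each cue that is more likely under "cross" than under "not cross" raises the posterior, so the belief moves away from the prior as evidence accumulates.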

Attribute Annotation and Bias Evaluation in Visual Datasets for Autonomous Driving

Dec 11, 2023

Abstract:This paper addresses the often overlooked issue of fairness in the autonomous driving domain, particularly in vision-based perception and prediction systems, which play a pivotal role in the overall functioning of Autonomous Vehicles (AVs). We focus our analysis on biases present in some of the most commonly used visual datasets for training person and vehicle detection systems. We introduce an annotation methodology and a specialised annotation tool, both designed to annotate protected attributes of agents in visual datasets. We validate our methodology through an inter-rater agreement analysis and provide the distribution of attributes across all datasets. These include annotations for the attributes age, sex, skin tone, group, and means of transport for more than 90K people, as well as vehicle type, colour, and car type for over 50K vehicles. Generally, diversity is very low for most attributes, with some groups, such as children, wheelchair users, or personal mobility vehicle users, being extremely underrepresented in the analysed datasets. The study contributes significantly to efforts to consider fairness in the evaluation of perception and prediction systems for AVs. This paper follows reproducibility principles. The annotation tool, scripts and the annotated attributes can be accessed publicly at https://github.com/ec-jrc/humaint_annotator.

Pedestrian and Passenger Interaction with Autonomous Vehicles: Field Study in a Crosswalk Scenario

Dec 11, 2023

Abstract:This study presents the outcomes of empirical investigations pertaining to human-vehicle interactions involving an autonomous vehicle equipped with both internal and external Human Machine Interfaces (HMIs) within a crosswalk scenario. The internal and external HMIs were integrated with implicit communication techniques, incorporating a combination of gentle and aggressive braking maneuvers within the crosswalk. Data were collected through a combination of questionnaires and quantifiable metrics, including pedestrian decision to cross related to the vehicle distance and speed. The questionnaire responses reveal that pedestrians experience enhanced safety perceptions when the external HMI and gentle braking maneuvers are used in tandem. In contrast, the measured variables demonstrate that the external HMI proves effective when complemented by the gentle braking maneuver. Furthermore, the questionnaire results highlight that the internal HMI enhances passenger confidence only when paired with the aggressive braking maneuver.

Digital twin in virtual reality for human-vehicle interactions in the context of autonomous driving

Mar 20, 2023

Abstract:Traditional simulation methods present some limitations, such as the reality gap between simulated experiences and real-world performance. In the field of autonomous driving research, we propose the use of an immersive virtual reality system for pedestrians, so that simulations include the real behaviors of agents that interact with the simulated environment in real time, improving the quality of the virtual-world data and reducing the gap. In this paper we employ a digital twin to replicate a study on communication interfaces between autonomous vehicles and pedestrians, generating an equivalent virtual scenario to compare the results and establish qualitative and quantitative measurements of the discrepancy. The goal is to evaluate the effectiveness and acceptability of implicit and explicit forms of communication in both scenarios and to verify that the behavior carried out by the pedestrian inside the simulator through a virtual reality interface is directly comparable with their role performed in a real traffic situation.

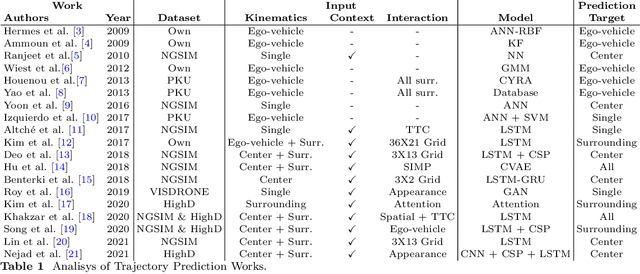

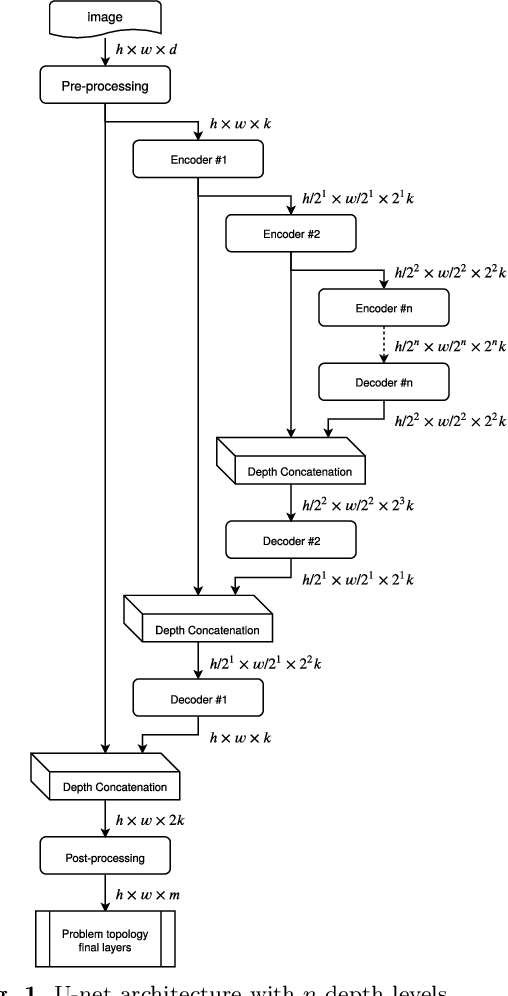

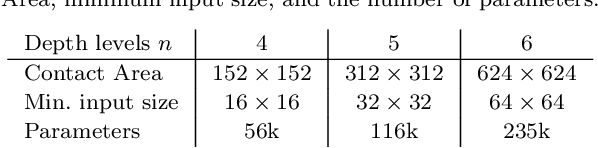

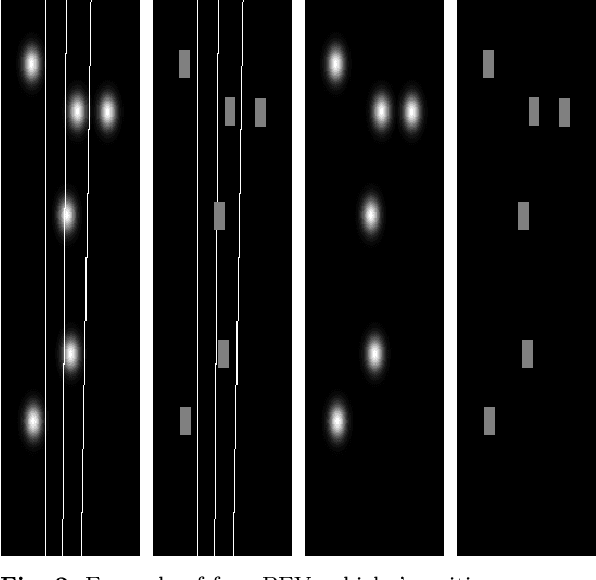

Vehicle Trajectory Prediction on Highways Using Bird Eye View Representations and Deep Learning

Jul 04, 2022

Abstract:This work presents a novel method for predicting vehicle trajectories in highway scenarios using efficient bird's eye view representations and convolutional neural networks. Vehicle positions, motion histories, road configuration, and vehicle interactions are easily included in the prediction model using basic visual representations. The U-net model has been selected as the prediction kernel to generate future visual representations of the scene using an image-to-image regression approach. A method has been implemented to extract vehicle positions from the generated graphical representations to achieve subpixel resolution. The method has been trained and evaluated using the PREVENTION dataset, an on-board sensor dataset. Different network configurations and scene representations have been evaluated. This study found that U-net with 6 depth levels using a linear terminal layer and a Gaussian representation of the vehicles is the best performing configuration. The use of lane markings was found to produce no improvement in prediction performance. The average prediction error is 0.47 and 0.38 meters and the final prediction error is 0.76 and 0.53 meters for longitudinal and lateral coordinates, respectively, for a predicted trajectory length of 2.0 seconds. The prediction error is up to 50% lower compared to the baseline method.
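One common way to recover a position with subpixel resolution from a Gaussian blob in an image is the intensity-weighted centroid. The sketch below illustrates that idea only; the abstract does not state which extraction method the authors use, and the function names, image size, and `sigma` value here are hypothetical.

```python
import math


def render_gaussian(width, height, cx, cy, sigma=1.5):
    """Render one vehicle as a 2D Gaussian blob centred at (cx, cy), in pixels."""
    return [
        [math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / (2.0 * sigma ** 2))
         for x in range(width)]
        for y in range(height)
    ]


def subpixel_centroid(image):
    """Intensity-weighted centroid (x, y) of an image given as a list of rows."""
    total = sum_x = sum_y = 0.0
    for y, row in enumerate(image):
        for x, value in enumerate(row):
            total += value
            sum_x += value * x
            sum_y += value * y
    if total == 0.0:
        raise ValueError("image has no intensity")
    return sum_x / total, sum_y / total


# The blob is centred between pixel positions; the centroid recovers the
# fractional position even though the image is sampled on an integer grid.
image = render_gaussian(32, 32, cx=12.3, cy=20.7)
x, y = subpixel_centroid(image)
print(f"estimated centre: ({x:.3f}, {y:.3f})")
```

With several vehicles in one image, the same centroid would have to be computed per blob, for example inside a window around each local maximum.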

WiFiNet: WiFi-based indoor localisation using CNNs

Apr 14, 2021

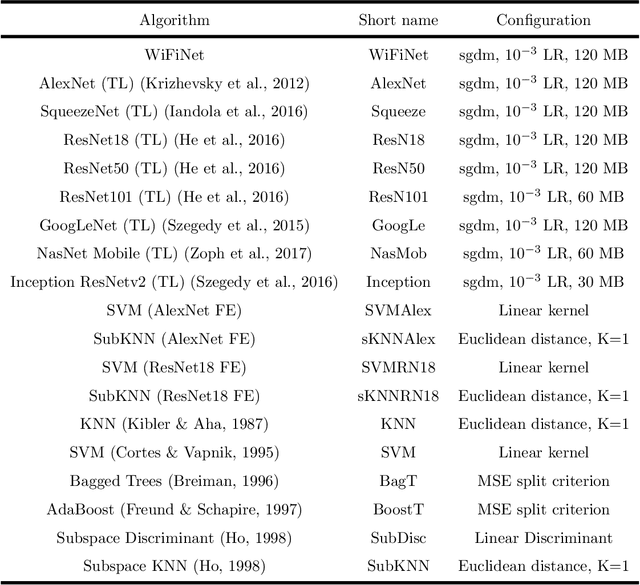

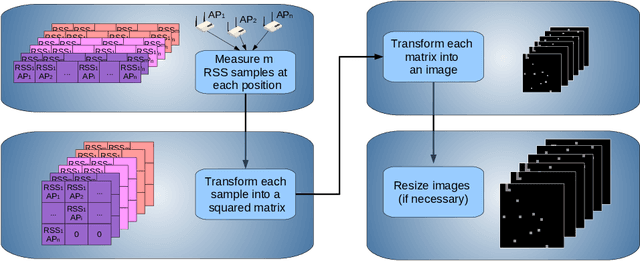

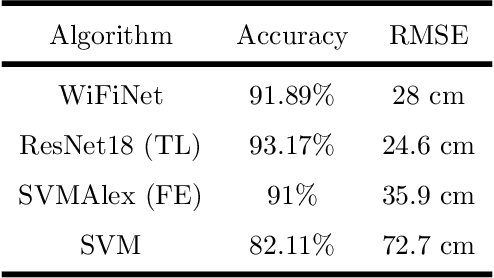

Abstract:Different technologies have been proposed to provide indoor localisation: magnetic field, Bluetooth, WiFi, etc. Among them, WiFi is the one with the highest availability and highest accuracy. This fact allows for a ubiquitous, accurate localisation available for almost any environment and any device. However, WiFi-based localisation is still an open problem. In this article, we propose a new WiFi-based indoor localisation system that takes advantage of the great ability of Convolutional Neural Networks in classification problems. Three different approaches were used to achieve this goal: a custom architecture called WiFiNet, designed and trained specifically to solve this problem, and the most popular pre-trained networks using both transfer learning and feature extraction. Results indicate that WiFiNet is a great approach for indoor localisation in a medium-sized environment (30 positions and 113 access points), as it reduces the mean localisation error (by 33%) and the processing time when compared with state-of-the-art WiFi indoor localisation algorithms such as SVM.

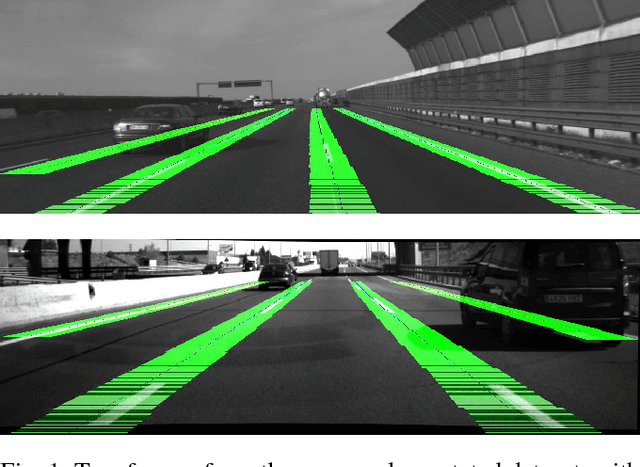

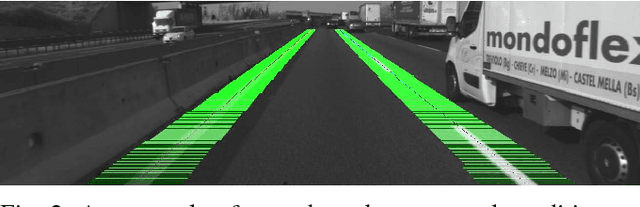

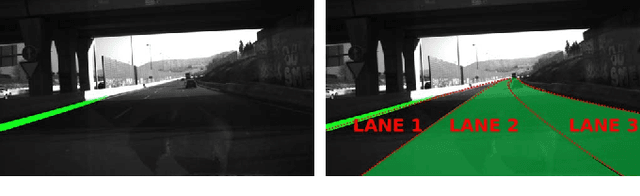

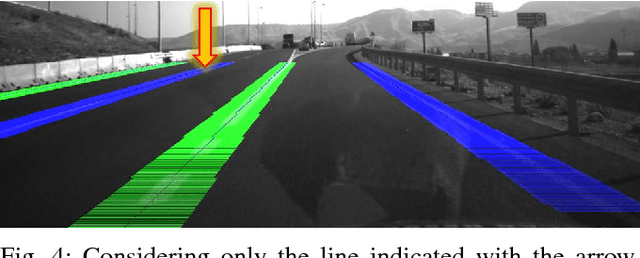

Vehicle Ego-Lane Estimation with Sensor Failure Modeling

Feb 06, 2020

Abstract:We present a probabilistic ego-lane estimation algorithm for highway-like scenarios that is designed to increase the accuracy of the ego-lane estimate, which can be obtained relying only on a noisy line detector and tracker. The contribution relies on a Hidden Markov Model (HMM) with a transient failure model. The proposed algorithm exploits the OpenStreetMap (or other cartographic services) road property lane number as the expected number of lanes and leverages consecutive, possibly incomplete, observations. The effectiveness of the algorithm is demonstrated by employing different line detectors and showing that we could achieve much more usable, i.e. stable and reliable, ego-lane estimates over more than 100 km of highway scenarios, recorded both in Italy and Spain. Moreover, as we could not find a suitable dataset for a quantitative comparison with other approaches, we collected datasets and manually annotated the Ground Truth about the vehicle ego-lane. Such datasets are made publicly available for use by the scientific community.
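The general idea of filtering a noisy lane detector with an HMM can be sketched as a discrete forward filter over lane indices. This is a minimal illustration under stated assumptions, not the authors' model: the transition probabilities, the mixture-style failure term `p_fail`, and the treatment of a missing observation (`None`) as "predict only" are all hypothetical choices.

```python
def predict(belief, p_stay=0.9):
    """Transition step: stay in the lane or move to an adjacent one."""
    n = len(belief)
    new_belief = [0.0] * n
    for i, b in enumerate(belief):
        neighbours = [j for j in (i - 1, i + 1) if 0 <= j < n]
        stay = p_stay if neighbours else 1.0  # a single lane has nowhere to go
        new_belief[i] += b * stay
        for j in neighbours:
            new_belief[j] += b * (1.0 - stay) / len(neighbours)
    return new_belief


def update(belief, observed_lane, p_fail=0.2):
    """Measurement step; with probability p_fail the detector is uninformative."""
    if observed_lane is None:  # incomplete observation: keep the prediction
        return belief
    n = len(belief)
    likelihood = [
        (1.0 - p_fail) * (1.0 if i == observed_lane else 0.0) + p_fail / n
        for i in range(n)
    ]
    posterior = [lik * b for lik, b in zip(likelihood, belief)]
    total = sum(posterior)
    return [p / total for p in posterior]


def estimate_ego_lane(observations, n_lanes):
    """Run the filter from a uniform prior over n_lanes (e.g. a map's lane count)."""
    belief = [1.0 / n_lanes] * n_lanes
    for obs in observations:
        belief = update(predict(belief), obs)
    return belief


# Hypothetical detector output: one missing frame and one outlier (lane 0).
belief = estimate_ego_lane([1, 1, None, 0, 1, 1], n_lanes=3)
ego_lane = max(range(len(belief)), key=belief.__getitem__)
print(ego_lane, [round(b, 3) for b in belief])
```

Because the failure term keeps every likelihood strictly positive, a single outlier shifts the belief without zeroing out the other lanes, and later consistent observations can pull the estimate back.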
