Carlota Salinas Maldonado

RAG-based Explainable Prediction of Road Users Behaviors for Automated Driving using Knowledge Graphs and Large Language Models

May 01, 2024

Abstract:Prediction of road users' behaviors in the context of autonomous driving has gained considerable attention by the scientific community in the last years. Most works focus on predicting behaviors based on kinematic information alone, a simplification of the reality since road users are humans, and as such they are highly influenced by their surrounding context. In addition, a large plethora of research works rely on powerful Deep Learning techniques, which exhibit high performance metrics in prediction tasks but may lack the ability to fully understand and exploit the contextual semantic information contained in the road scene, not to mention their inability to provide explainable predictions that can be understood by humans. In this work, we propose an explainable road users' behavior prediction system that integrates the reasoning abilities of Knowledge Graphs (KG) and the expressiveness capabilities of Large Language Models (LLM) by using Retrieval Augmented Generation (RAG) techniques. For that purpose, Knowledge Graph Embeddings (KGE) and Bayesian inference are combined to allow the deployment of a fully inductive reasoning system that enables the issuing of predictions that rely on legacy information contained in the graph as well as on current evidence gathered in real time by onboard sensors. Two use cases have been implemented following the proposed approach: 1) Prediction of pedestrians' crossing actions; 2) Prediction of lane change maneuvers. In both cases, the performance attained surpasses the current state of the art in terms of anticipation and F1-score, showing a promising avenue for future research in this field.

Fail-Aware LIDAR-Based Odometry for Autonomous Vehicles

Mar 05, 2021

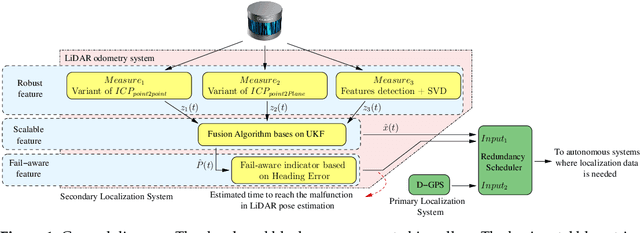

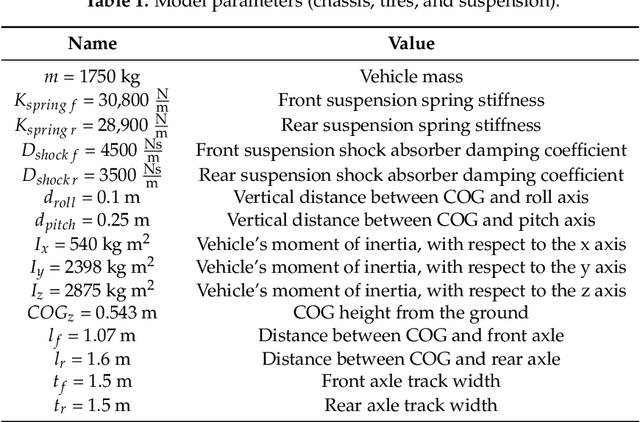

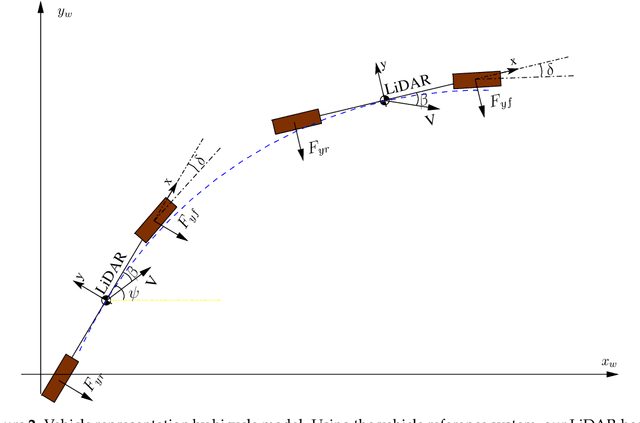

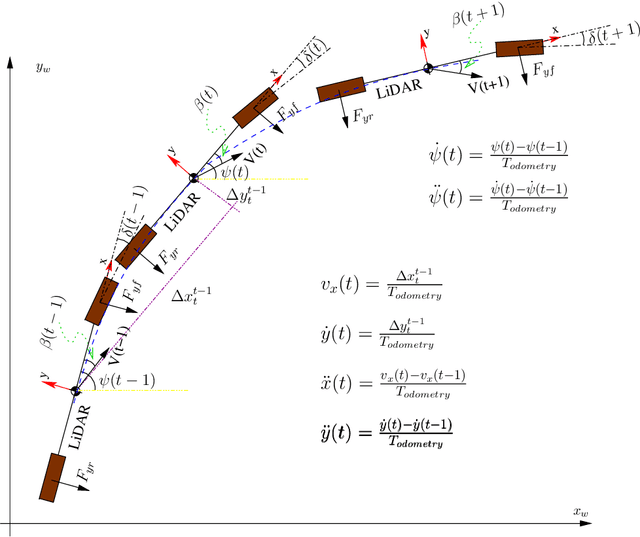

Abstract:Autonomous driving systems are set to become a reality in transport systems and, so, maximum acceptance is being sought among users. Currently, the most advanced architectures require driver intervention when functional system failures or critical sensor operations take place, presenting problems related to driver state, distractions, fatigue, and other factors that prevent safe control. Therefore, this work presents a redundant, accurate, robust, and scalable LiDAR odometry system with fail-aware system features that can allow other systems to perform a safe stop manoeuvre without driver mediation. All odometry systems have drift error, making it difficult to use them for localisation tasks over extended periods. For this reason, the paper presents an accurate LiDAR odometry system with a fail-aware indicator. This indicator estimates a time window in which the system manages the localisation tasks appropriately. The odometry error is minimised by applying a dynamic 6-DoF model and fusing measures based on the Iterative Closest Points (ICP), environment feature extraction, and Singular Value Decomposition (SVD) methods. The obtained results are promising for two reasons: First, in the KITTI odometry data set, the ranking achieved by the proposed method is twelfth, considering only LiDAR-based methods, where its translation and rotation errors are 1.00% and 0.0041 deg/m, respectively. Second, the encouraging results of the fail-aware indicator demonstrate the safety of the proposed LiDAR odometry system. The results depict that, in order to achieve an accurate odometry system, complex models and measurement fusion techniques must be used to improve its behaviour. Furthermore, if an odometry system is to be used for redundant localisation features, it must integrate a fail-aware indicator for use in a safe manner.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge