Asad Khan

SwishReLU: A Unified Approach to Activation Functions for Enhanced Deep Neural Networks Performance

Jul 11, 2024

Abstract:ReLU, a commonly used activation function in deep neural networks, is prone to the issue of "Dying ReLU". Several enhanced versions, such as ELU, SeLU, and Swish, have been introduced but remain less commonly used. However, replacing ReLU can be somewhat challenging because the advantages of doing so are inconsistent. While Swish offers a smoother transition similar to ReLU, it generally incurs a greater computational cost than ReLU. This paper proposes SwishReLU, a novel activation function combining elements of ReLU and Swish. Our findings reveal that SwishReLU outperforms ReLU at a lower computational cost than Swish. This paper examines and compares different ReLU variants with SwishReLU. Specifically, we compare ELU and SeLU along with Tanh on three datasets: CIFAR-10, CIFAR-100 and MNIST. Notably, applying SwishReLU in the VGG16 model described in Algorithm 2 yields a 6% accuracy improvement on the CIFAR-10 dataset.
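The abstract does not state the SwishReLU formula, so the following is only a minimal sketch under an assumed piecewise form: the identity (ReLU branch) for positive inputs and Swish, x·sigmoid(x), for non-positive inputs. The function names `swish`, `relu` and `swish_relu`, the `beta` parameter, and the choice of branch point are illustrative assumptions, not the paper's definition.

```python
import numpy as np


def swish(x, beta=1.0):
    """Swish: x * sigmoid(beta * x), with the sigmoid written via tanh for numerical stability."""
    x = np.asarray(x, dtype=float)
    return x * 0.5 * (1.0 + np.tanh(0.5 * beta * x))


def relu(x):
    """Standard ReLU: max(x, 0)."""
    return np.maximum(np.asarray(x, dtype=float), 0.0)


def swish_relu(x):
    """ASSUMED piecewise combination (not taken from the paper):
    identity for x > 0 (as in ReLU), Swish for x <= 0.
    """
    x = np.asarray(x, dtype=float)
    return np.where(x > 0, x, swish(x))
```

Under this assumed form, negative inputs keep a small non-zero output, which is the usual motivation for avoiding "Dying ReLU", while positive inputs skip the sigmoid evaluation that full Swish requires.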

Toward A Reinforcement-Learning-Based System for Adjusting Medication to Minimize Speech Disfluency

Dec 12, 2023

Abstract:We propose a Reinforcement-Learning-based system that would automatically prescribe a hypothetical patient medications that may help the patient with their mental-health-related speech disfluency, and adjust the medication and the dosages in response to data from the patient. We demonstrate the components of the system: a module that detects and evaluates speech disfluency on a large dataset we built, and a Reinforcement Learning algorithm that automatically finds good combinations of medications. To support the two modules, we collect data on the effect of psychiatric medications for speech disfluency from the literature, and build a plausible patient simulation system. We demonstrate that the Reinforcement Learning system is, under some circumstances, able to converge to a good medication regime. We collect and label a dataset of people with possible speech disfluency and demonstrate our methods using that dataset. Our work is a proof of concept: we show that there is promise in the idea of using automatic data collection to address disfluency.

Interpreting a Machine Learning Model for Detecting Gravitational Waves

Feb 15, 2022

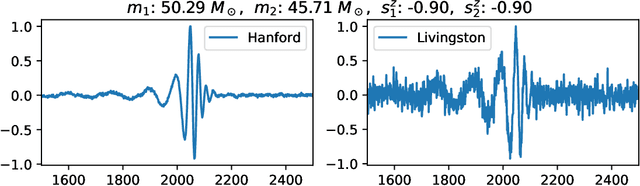

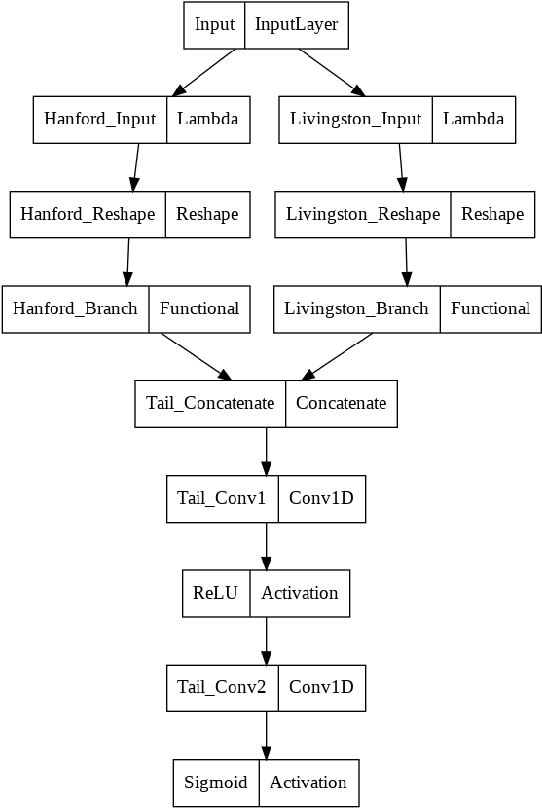

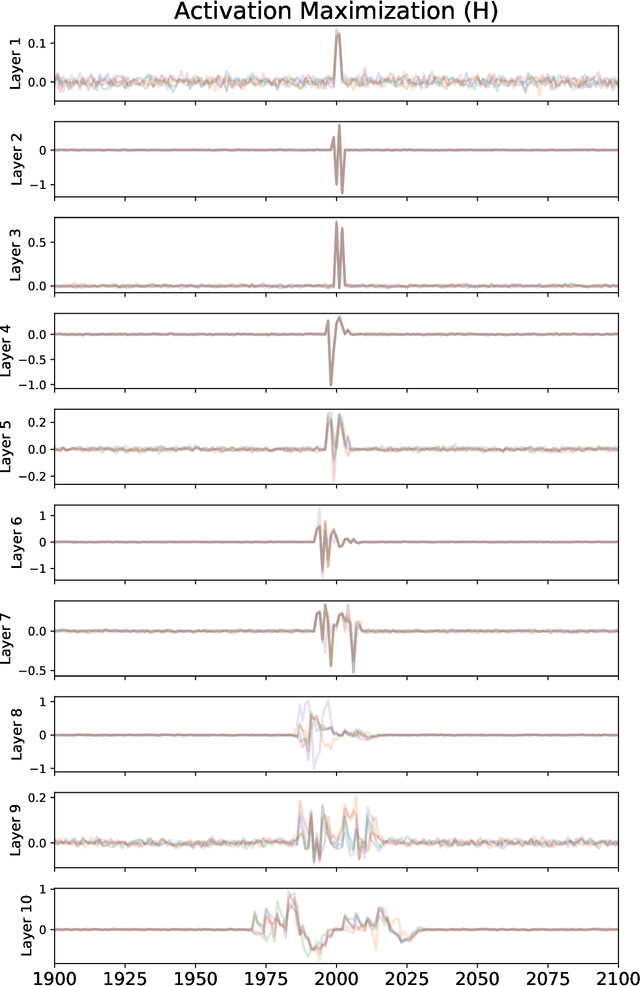

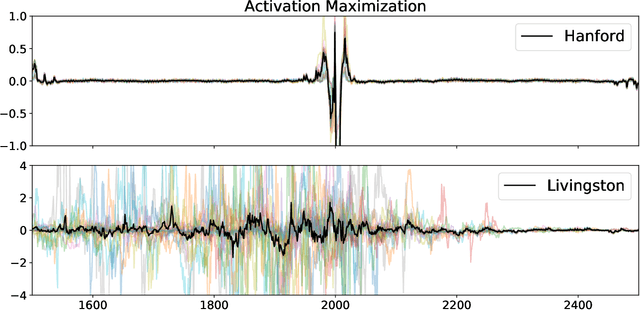

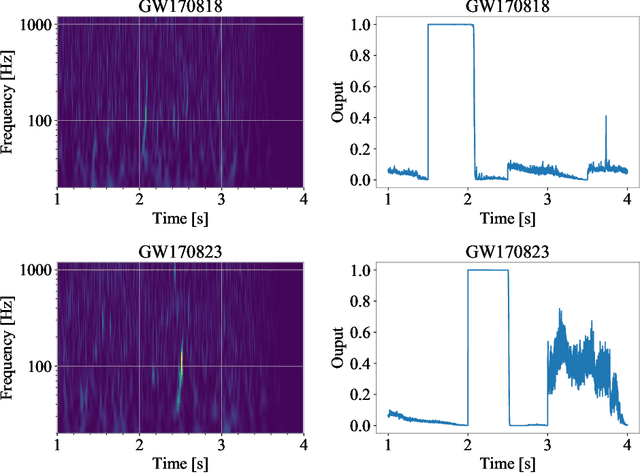

Abstract:We describe a case study of translational research, applying interpretability techniques developed for computer vision to machine learning models used to search for and find gravitational waves. The models we study are trained to detect black hole merger events in non-Gaussian and non-stationary advanced Laser Interferometer Gravitational-wave Observatory (LIGO) data. We produced visualizations of the response of machine learning models when they process advanced LIGO data that contains real gravitational wave signals, noise anomalies, and pure advanced LIGO noise. Our findings shed light on the responses of individual neurons in these machine learning models. Further analysis suggests that different parts of the network appear to specialize in local versus global features, and that this difference appears to be rooted in the branched architecture of the network as well as noise characteristics of the LIGO detectors. We believe efforts to whiten these "black box" models can suggest future avenues for research and help inform the design of interpretable machine learning models for gravitational wave astrophysics.

Inference-optimized AI and high performance computing for gravitational wave detection at scale

Jan 26, 2022

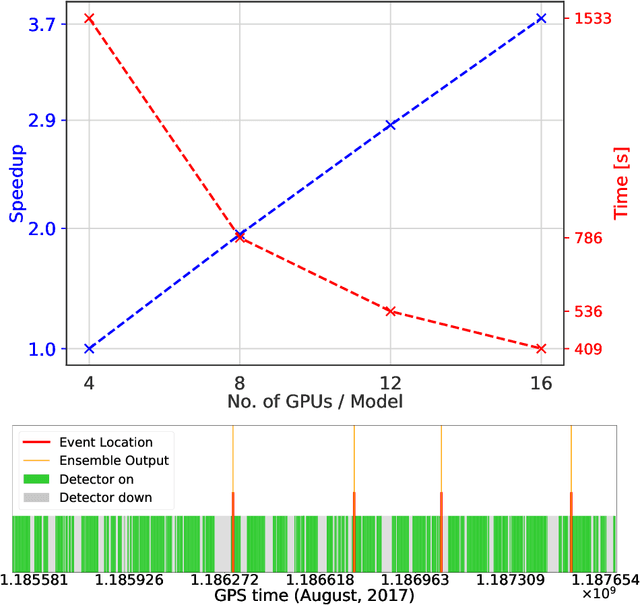

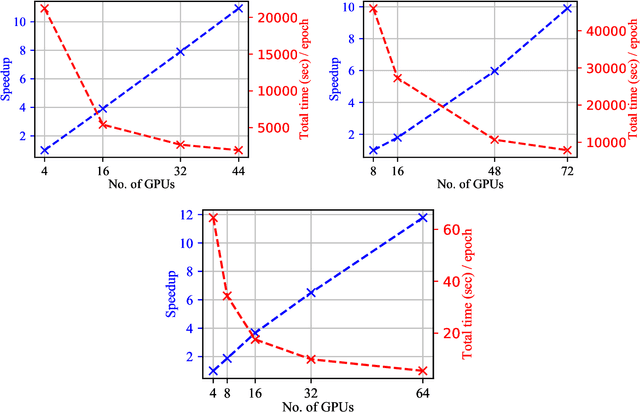

Abstract:We introduce an ensemble of artificial intelligence models for gravitational wave detection that we trained in the Summit supercomputer using 32 nodes, equivalent to 192 NVIDIA V100 GPUs, within 2 hours. Once fully trained, we optimized these models for accelerated inference using NVIDIA TensorRT. We deployed our inference-optimized AI ensemble in the ThetaGPU supercomputer at Argonne Leadership Computing Facility to conduct distributed inference. Using the entire ThetaGPU supercomputer, consisting of 20 nodes each of which has 8 NVIDIA A100 Tensor Core GPUs and 2 AMD Rome CPUs, our NVIDIA TensorRT-optimized AI ensemble processed an entire month of advanced LIGO data (including Hanford and Livingston data streams) within 50 seconds. Our inference-optimized AI ensemble retains the same sensitivity as traditional AI models, namely, it identifies all known binary black hole mergers previously identified in this advanced LIGO dataset and reports no misclassifications, while also providing a 3X inference speedup compared to traditional artificial intelligence models. We used time slides to quantify the performance of our AI ensemble to process up to 5 years' worth of advanced LIGO data. In this synthetically enhanced dataset, our AI ensemble reports an average of one misclassification for every month of searched advanced LIGO data. We also present the receiver operating characteristic curve of our AI ensemble using this 5-year-long advanced LIGO dataset. This approach provides the required tools to conduct accelerated, AI-driven gravitational wave detection at scale.
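The time-slide idea mentioned in this abstract can be illustrated with a short sketch: one detector stream is shifted relative to the other by more than the light travel time between the sites (roughly 10 ms for Hanford and Livingston), so any coincident trigger in the shifted pair cannot be a real astrophysical event. The function name `time_slides`, the use of a circular shift, and the parameters `shift_samples` and `n_slides` are assumptions for illustration; the abstract does not specify how the slides were constructed.

```python
import numpy as np


def time_slides(hanford, livingston, shift_samples, n_slides):
    """Yield (hanford, shifted_livingston) pairs of synthetic background data.

    ASSUMPTION: a circular shift of the Livingston stream by k * shift_samples
    samples for k = 1..n_slides; shift_samples should correspond to a time
    offset longer than the inter-detector light travel time.
    """
    hanford = np.asarray(hanford)
    livingston = np.asarray(livingston)
    if hanford.shape != livingston.shape:
        raise ValueError("both streams must have the same shape")
    for k in range(1, n_slides + 1):
        yield hanford, np.roll(livingston, k * shift_samples)
```

Each slide yields a data stretch as long as the original, which is how a single month of data can be extended into years of effective background for estimating misclassification rates.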

AI and extreme scale computing to learn and infer the physics of higher order gravitational wave modes of quasi-circular, spinning, non-precessing binary black hole mergers

Dec 13, 2021

Abstract:We use artificial intelligence (AI) to learn and infer the physics of higher order gravitational wave modes of quasi-circular, spinning, non-precessing binary black hole mergers. We trained AI models using 14 million waveforms, produced with the surrogate model NRHybSur3dq8, that include modes up to $\ell \leq 4$ and $(5,5)$, except for $(4,0)$ and $(4,1)$, that describe binaries with mass-ratios $q\leq8$ and individual spins $s^z_{\{1,2\}}\in[-0.8, 0.8]$. We use our AI models to obtain deterministic and probabilistic estimates of the mass-ratio, individual spins, effective spin, and inclination angle of numerical relativity waveforms that describe such a signal manifold. Our studies indicate that AI provides informative estimates for these physical parameters. This work marks the first time AI is capable of characterizing this high-dimensional signal manifold. Our AI models were trained within 3.4 hours using distributed training on 256 nodes (1,536 NVIDIA V100 GPUs) in the Summit supercomputer.

Interpretable AI forecasting for numerical relativity waveforms of quasi-circular, spinning, non-precessing binary black hole mergers

Oct 13, 2021

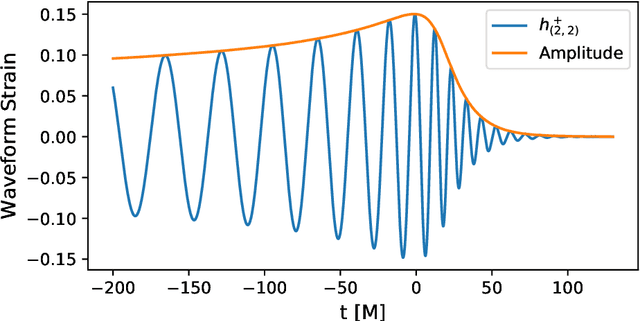

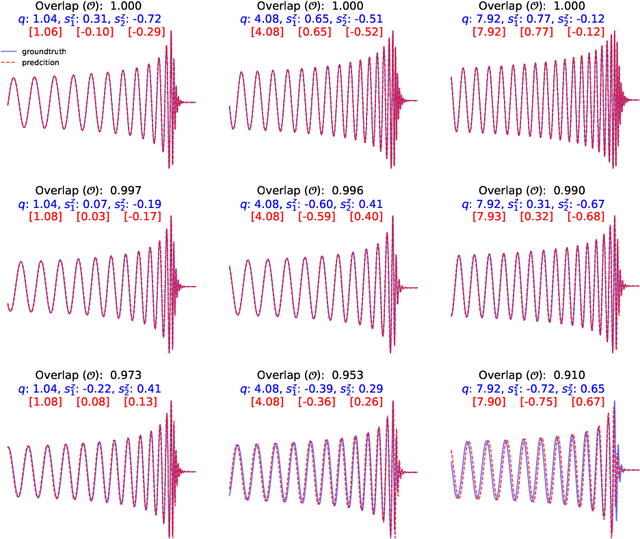

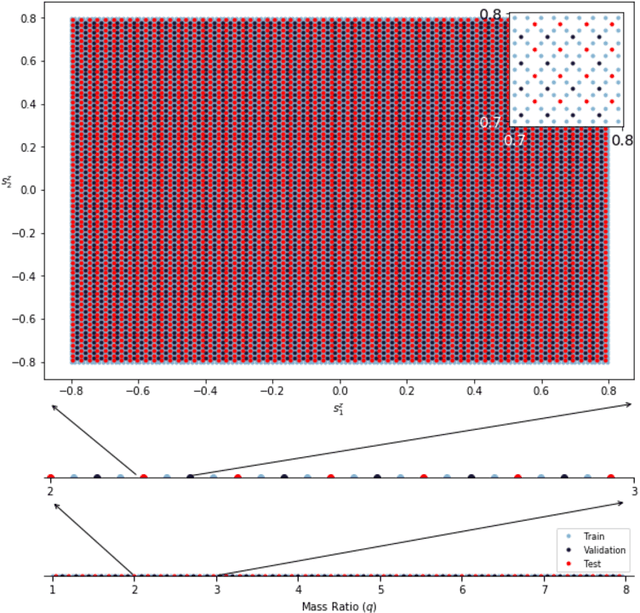

Abstract:We present a deep-learning artificial intelligence model that is capable of learning and forecasting the late-inspiral, merger and ringdown of numerical relativity waveforms that describe quasi-circular, spinning, non-precessing binary black hole mergers. We used the NRHybSur3dq8 surrogate model to produce training, validation and test sets of $\ell=|m|=2$ waveforms that cover the parameter space of binary black hole mergers with mass-ratios $q\leq8$ and individual spins $|s^z_{\{1,2\}}| \leq 0.8$. These waveforms cover the time range $t\in[-5000\textrm{M}, 130\textrm{M}]$, where $t=0\textrm{M}$ marks the merger event, defined as the maximum value of the waveform amplitude. We harnessed the ThetaGPU supercomputer at the Argonne Leadership Computing Facility to train our AI model using a training set of 1.5 million waveforms. We used 16 NVIDIA DGX A100 nodes, each consisting of 8 NVIDIA A100 Tensor Core GPUs and 2 AMD Rome CPUs, to fully train our model within 3.5 hours. Our findings show that artificial intelligence can accurately forecast the dynamical evolution of numerical relativity waveforms in the time range $t\in[-100\textrm{M}, 130\textrm{M}]$. Sampling a test set of 190,000 waveforms, we find that the average overlap between target and predicted waveforms is $\gtrsim99\%$ over the entire parameter space under consideration. We also combined scientific visualization and accelerated computing to identify what components of our model take in knowledge from the early and late-time waveform evolution to accurately forecast the latter part of numerical relativity waveforms. This work aims to accelerate the creation of scalable, computationally efficient and interpretable artificial intelligence models for gravitational wave astrophysics.

Confluence of Artificial Intelligence and High Performance Computing for Accelerated, Scalable and Reproducible Gravitational Wave Detection

Dec 15, 2020

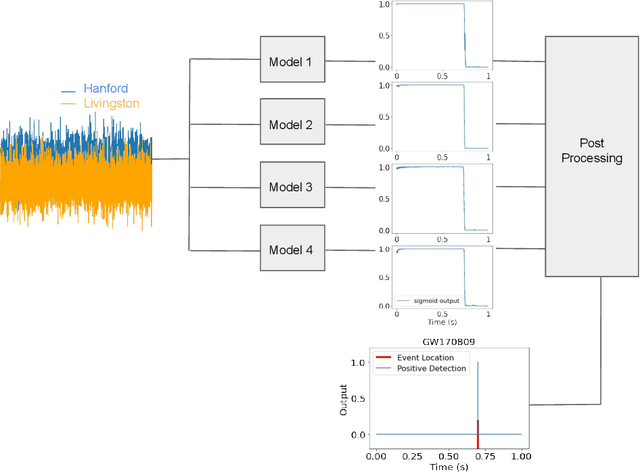

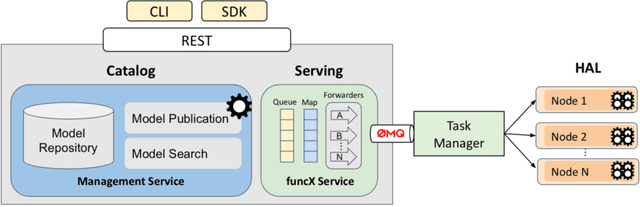

Abstract:Finding new ways to use artificial intelligence (AI) to accelerate the analysis of gravitational wave data, and ensuring the developed models are easily reusable, promises to unlock new opportunities in multi-messenger astrophysics (MMA), and to enable wider use, rigorous validation, and sharing of developed models by the community. In this work, we demonstrate how connecting recently deployed DOE and NSF-sponsored cyberinfrastructure allows for new ways to publish models, and to subsequently deploy these models into applications using computing platforms ranging from laptops to high performance computing clusters. We develop a workflow that connects the Data and Learning Hub for Science (DLHub), a repository for publishing machine learning models, with the Hardware Accelerated Learning (HAL) deep learning computing cluster, using funcX as a universal distributed computing service. We then use this workflow to search for binary black hole gravitational wave signals in open source advanced LIGO data. We find that using this workflow, an ensemble of four openly available deep learning models can be run on HAL and process the entire month of August 2017 of advanced LIGO data in just seven minutes, identifying all four binary black hole mergers previously identified in this dataset, and reporting no misclassifications. This approach, which combines advances in AI, distributed computing, and scientific data infrastructure, opens new pathways to conduct reproducible, accelerated, data-driven gravitational wave detection.

Physics-inspired deep learning to characterize the signal manifold of quasi-circular, spinning, non-precessing binary black hole mergers

Apr 20, 2020

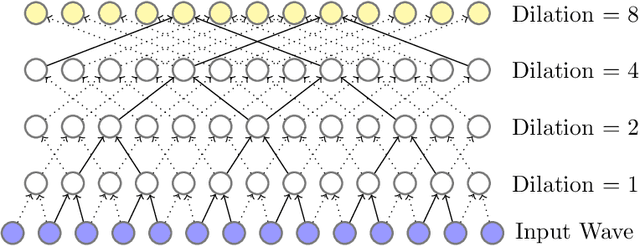

Abstract:The spin distribution of binary black hole mergers contains key information concerning the formation channels of these objects, and the astrophysical environments where they form, evolve and coalesce. To quantify the suitability of deep learning to characterize the signal manifold of quasi-circular, spinning, non-precessing binary black hole mergers, we introduce a modified version of WaveNet trained with a novel optimization scheme that incorporates general relativistic constraints of the spin properties of astrophysical black holes. The neural network model is trained, validated and tested with 1.5 million $\ell=|m|=2$ waveforms generated within the regime of validity of NRHybSur3dq8, i.e., mass-ratios $q\leq8$ and individual black hole spins $ | s^z_{\{1,\,2\}} | \leq 0.8$. Using this neural network model, we quantify how accurately we can infer the astrophysical parameters of black hole mergers in the absence of noise. We do this by computing the overlap between waveforms in the testing data set and the corresponding signals whose mass-ratio and individual spins are predicted by our neural network. We find that the convergence of high performance computing and physics-inspired optimization algorithms enables an accurate reconstruction of the mass-ratio and individual spins of binary black hole mergers across the parameter space under consideration. This is a significant step towards an informed utilization of physics-inspired deep learning models to reconstruct the spin distribution of binary black hole mergers in realistic detection scenarios.
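The overlap used in this abstract as an accuracy measure is, in its simplest noise-free form, a normalized inner product between two waveforms. The sketch below assumes real-valued, equally sampled time series and flat (unweighted) noise, and it omits the maximization over time and phase shifts that gravitational wave analyses commonly apply; the function name `overlap` and these simplifications are assumptions, not the authors' exact procedure.

```python
import numpy as np


def overlap(h1, h2):
    """Normalized inner product <h1|h2> / sqrt(<h1|h1> <h2|h2>).

    ASSUMPTIONS: real-valued waveforms on the same time grid, flat noise
    weighting, and no maximization over relative time or phase shifts.
    Returns a value in [-1, 1]; 1 means identical up to overall amplitude.
    """
    h1 = np.asarray(h1, dtype=float)
    h2 = np.asarray(h2, dtype=float)
    if h1.shape != h2.shape:
        raise ValueError("waveforms must be sampled on the same grid")
    norm = np.sqrt(np.dot(h1, h1) * np.dot(h2, h2))
    if norm == 0.0:
        raise ValueError("overlap is undefined for an all-zero waveform")
    return float(np.dot(h1, h2) / norm)
```

Because of the normalization, the measure is insensitive to overall amplitude, so it isolates how well the predicted parameters reproduce the phase evolution and shape of the target waveform.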

Convergence of Artificial Intelligence and High Performance Computing on NSF-supported Cyberinfrastructure

Mar 18, 2020

Abstract:Significant investments to upgrade or construct large-scale scientific facilities demand commensurate investments in R&D to design algorithms and computing approaches to enable scientific and engineering breakthroughs in the big data era. The remarkable success of Artificial Intelligence (AI) algorithms to turn big-data challenges in industry and technology into transformational digital solutions that drive a multi-billion dollar industry, which play an ever increasing role shaping human social patterns, has promoted AI as the most sought after signal processing tool in big-data research. As AI continues to evolve into a computing tool endowed with statistical and mathematical rigor, and which encodes domain expertise to inform and inspire AI architectures and optimization algorithms, it has become apparent that single-GPU solutions for training, validation, and testing are no longer sufficient. This realization has been driving the confluence of AI and high performance computing (HPC) to reduce time-to-insight and to produce robust, reliable, trustworthy, and computationally efficient AI solutions. In this white paper, we present a summary of recent developments in this field, and discuss avenues to accelerate and streamline the use of HPC platforms to design accelerated AI algorithms.

Enabling real-time multi-messenger astrophysics discoveries with deep learning

Nov 26, 2019

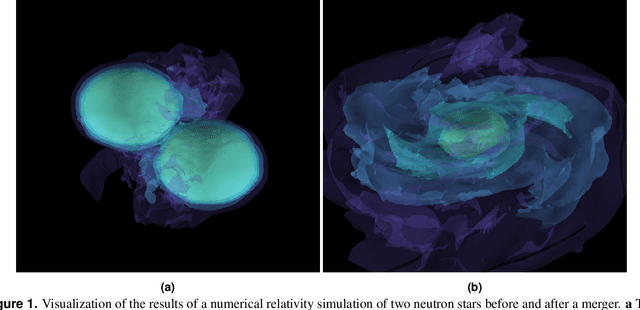

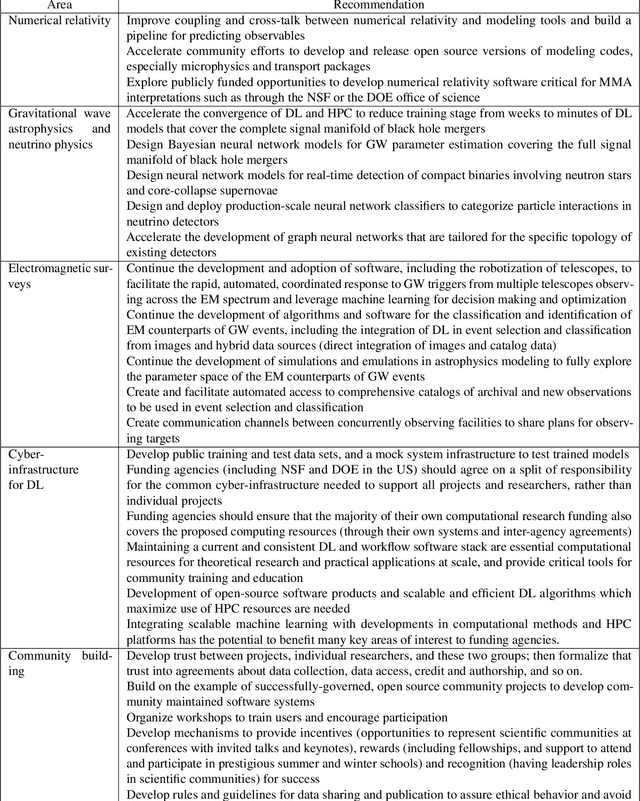

Abstract:Multi-messenger astrophysics is a fast-growing, interdisciplinary field that combines data, which vary in volume and speed of data processing, from many different instruments that probe the Universe using different cosmic messengers: electromagnetic waves, cosmic rays, gravitational waves and neutrinos. In this Expert Recommendation, we review the key challenges of real-time observations of gravitational wave sources and their electromagnetic and astroparticle counterparts, and make a number of recommendations to maximize their potential for scientific discovery. These recommendations refer to the design of scalable and computationally efficient machine learning algorithms; the cyber-infrastructure to numerically simulate astrophysical sources, and to process and interpret multi-messenger astrophysics data; the management of gravitational wave detections to trigger real-time alerts for electromagnetic and astroparticle follow-ups; a vision to harness future developments of machine learning and cyber-infrastructure resources to cope with the big-data requirements; and the need to build a community of experts to realize the goals of multi-messenger astrophysics.

* Invited Expert Recommendation for Nature Reviews Physics. The artwork produced by E. A. Huerta and Shawn Rosofsky for this article was used by Carl Conway to design the cover of the October 2019 issue of Nature Reviews Physics.
