Andrey Somov

FS-Net: Full Scale Network and Adaptive Threshold for Improving Extraction of Micro-Retinal Vessel Structures

Nov 19, 2023

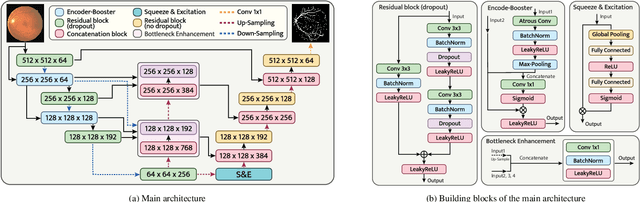

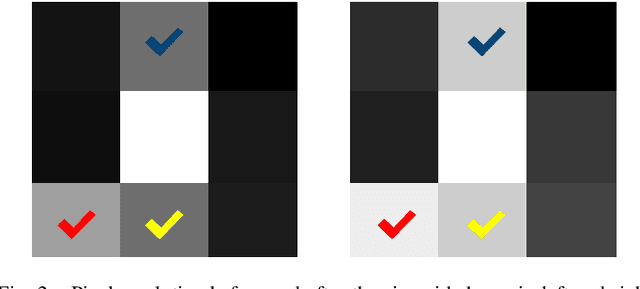

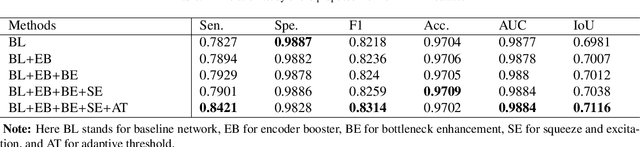

Abstract: Retinal vascular segmentation, a widely researched subject in biomedical image processing, aims to relieve ophthalmologists' workload when detecting and treating retinal disorders. However, segmenting retinal vessels has its own set of challenges: prior techniques fail to generate adequate results when segmenting branches and microvascular structures. Recent neural network approaches struggle to preserve local and global properties together and fail to capture tiny end vessels, which makes it challenging to attain the desired result. To address this problem, we propose a full-scale micro-vessel extraction mechanism based on an encoder-decoder neural network architecture, sigmoid smoothing, and an adaptive threshold method. The network consists of residual, encoder booster, bottleneck enhancement, and squeeze-and-excitation building blocks. Together, these blocks improve feature extraction and the prediction of the segmentation map. The proposed solution has been evaluated on the DRIVE, CHASE-DB1, and STARE datasets and achieves competitive results compared with previous studies. The AUC and accuracy on the DRIVE dataset are 0.9884 and 0.9702, respectively; on CHASE-DB1, 0.9903 and 0.9755; and on STARE, 0.9916 and 0.9750. This performance is a step ahead of previous studies and increases the likelihood of adopting this solution in real-life diagnostic centers that require ophthalmologists' attention.
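The abstract names sigmoid smoothing followed by an adaptive threshold but does not say which thresholding rule FS-Net uses. The sketch below is an illustration only: it assumes the network emits raw logits, applies a sigmoid to obtain per-pixel vessel probabilities, and swaps in Otsu's method as one common adaptive threshold. The function names `sigmoid`, `otsu_threshold`, and `segment` are hypothetical; this is not the authors' implementation.

```python
import numpy as np


def sigmoid(logits: np.ndarray) -> np.ndarray:
    """Map raw network outputs (assumed logits) to vessel probabilities in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-logits))


def otsu_threshold(prob: np.ndarray, bins: int = 256) -> float:
    """Otsu's method (a stand-in adaptive threshold): maximize between-class variance."""
    hist, edges = np.histogram(prob.ravel(), bins=bins, range=(0.0, 1.0))
    centers = (edges[:-1] + edges[1:]) / 2
    weights = hist.astype(np.float64) / hist.sum()
    w0 = np.cumsum(weights)                 # mass of the background class (bins 0..k)
    w1 = 1.0 - w0                           # mass of the vessel class (bins k+1..end)
    cum_mean = np.cumsum(weights * centers)
    total_mean = cum_mean[-1]
    # Between-class variance for a split after bin k; empty classes give 0/0 -> 0.
    with np.errstate(divide="ignore", invalid="ignore"):
        between = (total_mean * w0 - cum_mean) ** 2 / (w0 * w1)
    between = np.nan_to_num(between, nan=0.0, posinf=0.0, neginf=0.0)
    # The threshold is the upper edge of the last background bin.
    return float(edges[np.argmax(between) + 1])


def segment(logits: np.ndarray) -> np.ndarray:
    """Binary vessel mask: sigmoid, then a per-image adaptive threshold."""
    prob = sigmoid(logits)
    return prob >= otsu_threshold(prob)
```

Because the threshold is recomputed from each image's own probability histogram, faint micro-vessels are not forced through a single global cut-off such as 0.5, which is the general motivation for adaptive thresholding.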

NTIRE 2021 Challenge on Quality Enhancement of Compressed Video: Methods and Results

May 02, 2021

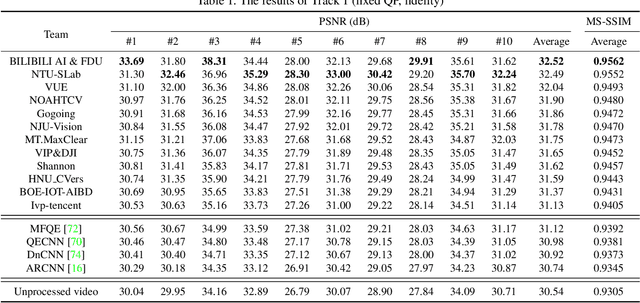

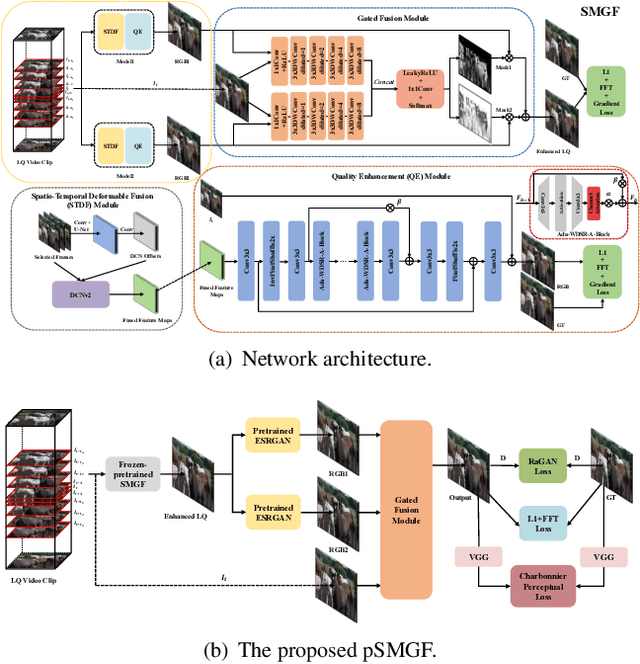

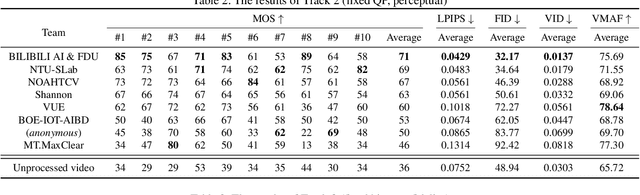

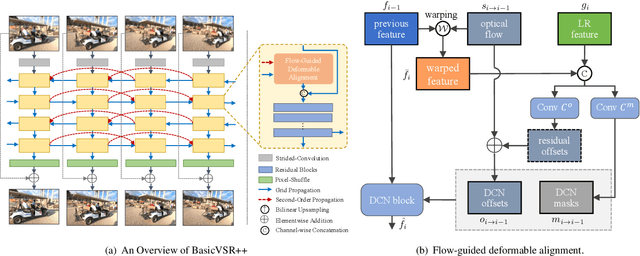

Abstract: This paper reviews the first NTIRE challenge on quality enhancement of compressed video, with a focus on the proposed methods and results. The challenge employs the new Large-scale Diverse Video (LDV) dataset and has three tracks. Tracks 1 and 2 aim at enhancing videos compressed by HEVC at a fixed QP, while Track 3 is designed for enhancing videos compressed by x265 at a fixed bit-rate. In addition, Tracks 1 and 3 target improving fidelity (PSNR), while Track 2 targets enhancing perceptual quality. The three tracks attracted 482 registrations in total. In the test phase, 12, 8, and 11 teams submitted final results for Tracks 1, 2, and 3, respectively. The proposed methods and solutions gauge the state of the art in video quality enhancement. The homepage of the challenge is https://github.com/RenYang-home/NTIRE21_VEnh
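Tracks 1 and 3 are scored by fidelity, measured as PSNR = 10 · log10(MAX² / MSE). A minimal NumPy sketch of that metric follows, assuming 8-bit frames (MAX = 255) and a per-video score obtained by averaging per-frame PSNR. The abstract does not state the challenge's exact protocol (color space, aggregation), so those choices are assumptions, and `video_psnr` is a hypothetical helper.

```python
import numpy as np


def psnr(reference: np.ndarray, enhanced: np.ndarray, max_value: float = 255.0) -> float:
    """PSNR in dB between two frames of equal shape: 10 * log10(max_value**2 / MSE)."""
    if reference.shape != enhanced.shape:
        raise ValueError("frames must have the same shape")
    # Cast to float first so uint8 subtraction cannot wrap around.
    diff = reference.astype(np.float64) - enhanced.astype(np.float64)
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return float("inf")  # identical frames
    return 10.0 * float(np.log10(max_value ** 2 / mse))


def video_psnr(ref_frames, enhanced_frames, max_value: float = 255.0) -> float:
    """Average of per-frame PSNR over a sequence (assumed aggregation rule)."""
    scores = [psnr(r, e, max_value) for r, e in zip(ref_frames, enhanced_frames)]
    return float(np.mean(scores))


ref = np.zeros((4, 4), dtype=np.uint8)
out = np.ones((4, 4), dtype=np.uint8)  # every pixel off by 1, so MSE = 1
print(round(psnr(ref, out), 2))  # 48.13
```

Higher PSNR means the enhanced frame is closer to the uncompressed reference; Track 2 deliberately uses a perceptual criterion instead, since PSNR does not always track perceived quality.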

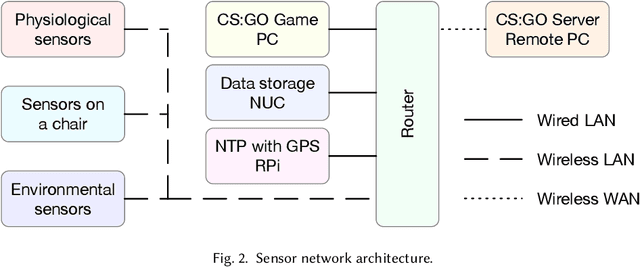

AI-enabled Prediction of eSports Player Performance Using the Data from Heterogeneous Sensors

Dec 07, 2020

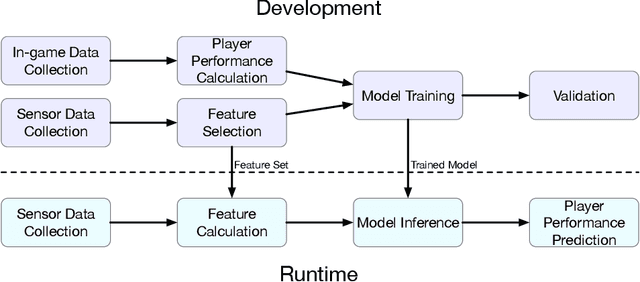

Abstract: The rapidly growing field of eSports lacks tools for ensuring high-quality analytics and training in Pro and amateur eSports teams. We report on an Artificial Intelligence (AI) enabled solution for predicting an eSports player's in-game performance using exclusively sensor data. To this end, we collected physiological, environmental, and game chair data from Pro and amateur players. Player performance is assessed from the logs of a multiplayer game for each moment in time using a recurrent neural network. We found that an attention mechanism improves the generalization of the network and also provides straightforward feature importance. The best model achieves a ROC AUC score of 0.73. The model predicts the performance of a particular player even when that player's data are not used in the training set. The proposed solution has a number of promising applications for Pro eSports teams and can also serve as a learning tool for amateur players.
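The reported ROC AUC of 0.73 has a concrete reading: it is the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one (0.5 is chance, 1.0 is perfect ranking). The sketch below computes AUC directly from that pairwise (Mann-Whitney) definition. It is a generic illustration with a hypothetical function name, not the authors' evaluation code; for large datasets, scikit-learn's `roc_auc_score` is the usual choice, since this pairwise loop is O(n²).

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """ROC AUC as P(score of a random positive > score of a random negative).

    Ties count as half a win (Mann-Whitney U formulation).
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("ROC AUC needs at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```

In the example, three of the four positive/negative pairs are ranked correctly, giving 0.75.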

Detecting Video Game Player Burnout with the Use of Sensor Data and Machine Learning

Nov 29, 2020

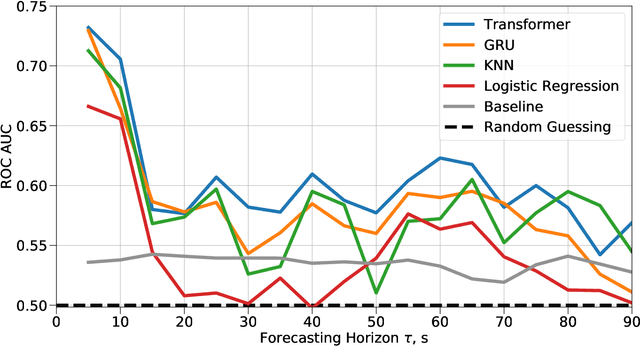

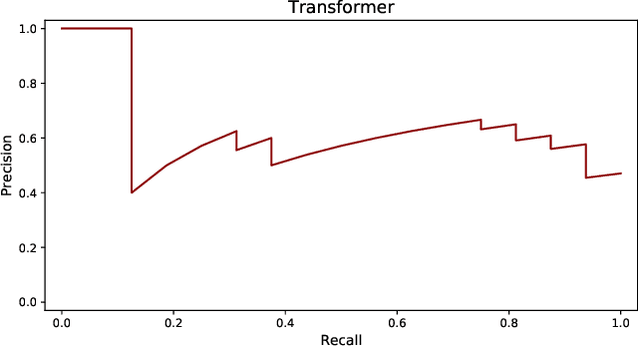

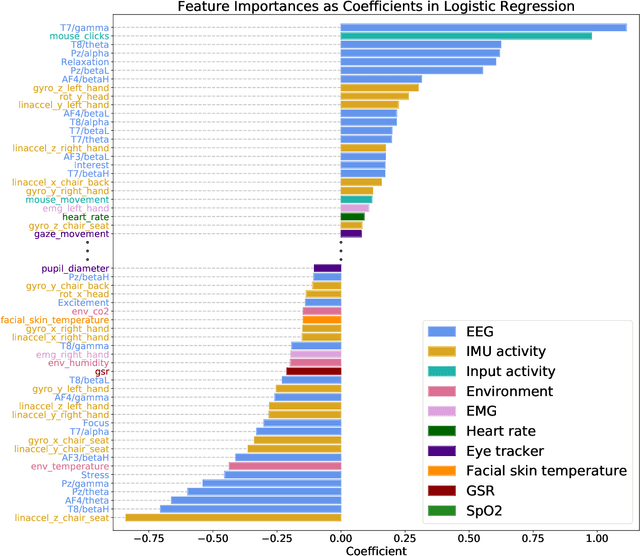

Abstract: Current research in eSports lacks tools for proper game practice and performance analytics. The majority of prior work relied only on in-game data to advise players on how to perform better. However, new patches frequently change in-game mechanics and trends, limiting the lifespan of models trained exclusively on in-game logs. In this article, we propose methods based on sensor data analysis for predicting whether a player will win a future encounter. The sensor data were collected from 10 participants in 22 matches of the League of Legends video game. We trained machine learning models, including a Transformer and a Gated Recurrent Unit (GRU), to predict whether the player wins an encounter taking place a fixed time in the future. For a 10-second forecasting horizon, the Transformer architecture achieves a ROC AUC score of 0.706. This model is further developed into a detector capable of predicting that a player will lose the encounter occurring in 10 seconds in 88.3% of cases, with 73.5% accuracy. This could serve as a player burnout or fatigue detector that advises players to retreat. We also investigated which physiological features affect the chance of winning or losing the next in-game encounter.
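The forecasting setup pairs each encounter's outcome with sensor data that ends a fixed horizon (here 10 seconds) before the encounter, so the model never sees the moments it is asked to predict. One way to build such training pairs is sketched below, assuming a (time, channels) sensor array at a known sampling rate and a list of (time in seconds, won) encounter records. The function name and the 20-second input window length are hypothetical; the abstract does not specify the authors' windowing.

```python
from typing import List, Tuple

import numpy as np


def make_forecast_samples(
    signal: np.ndarray,
    sample_rate_hz: float,
    encounters: List[Tuple[float, bool]],
    horizon_s: float = 10.0,
    window_s: float = 20.0,  # hypothetical input window length
) -> Tuple[np.ndarray, np.ndarray]:
    """Pair each encounter outcome with the sensor window ending `horizon_s` before it.

    Returns (X, y): X has shape (n, window_samples, channels); y is 1 for a win, 0 for a loss.
    Encounters whose window falls outside the recording are skipped.
    """
    win = int(round(window_s * sample_rate_hz))
    xs, ys = [], []
    for t, won in encounters:
        end = int(round((t - horizon_s) * sample_rate_hz))  # last sample the model may see
        start = end - win
        if start < 0 or end > len(signal):
            continue
        xs.append(signal[start:end])
        ys.append(1 if won else 0)
    X = np.stack(xs) if xs else np.empty((0, win, signal.shape[1]))
    return X, np.array(ys, dtype=int)
```

Changing `horizon_s` is how one would compare forecasting horizons; longer horizons leave a larger gap between the last observed sample and the encounter.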

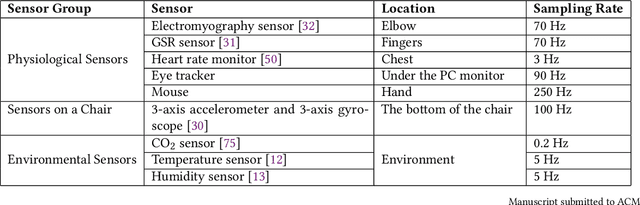

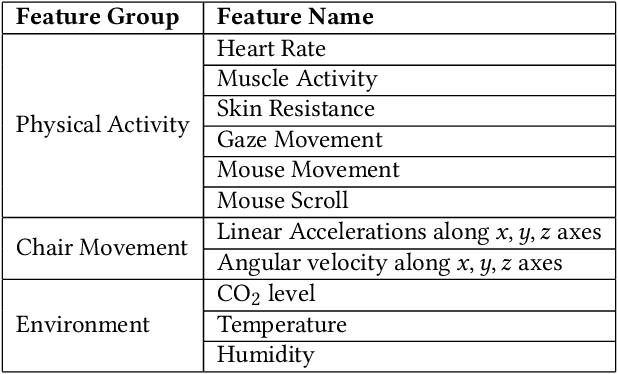

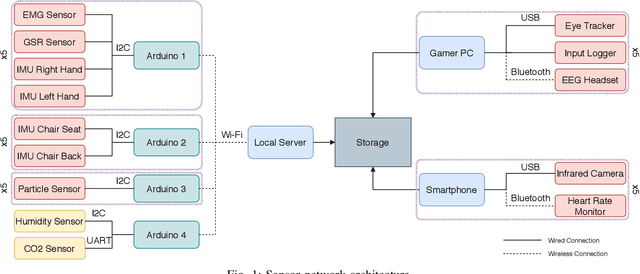

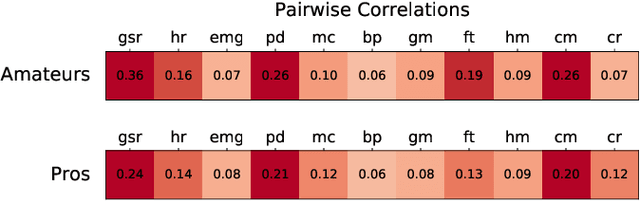

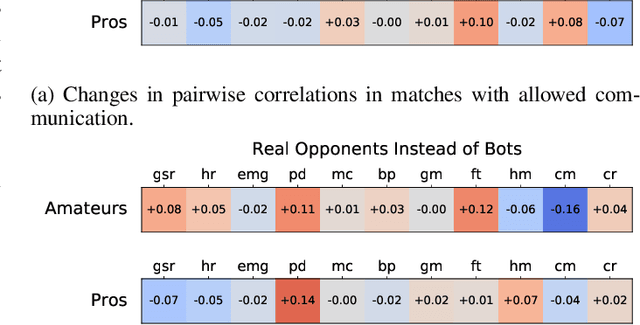

Collection and Validation of Psychophysiological Data from Professional and Amateur Players: a Multimodal eSports Dataset

Nov 02, 2020

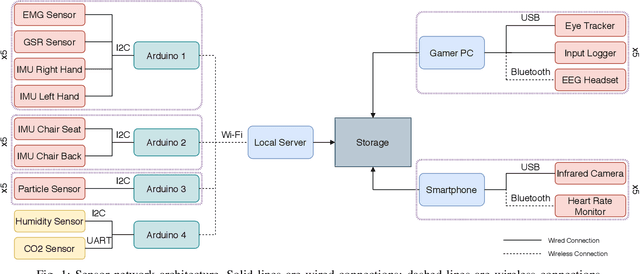

Abstract: Proper training and analytics in eSports require accurately collected and annotated data. Most eSports research focuses exclusively on in-game data analysis, and there is a lack of prior work involving eSports athletes' psychophysiological data. In this paper, we present a dataset collected from professional and amateur teams in 22 matches of the League of Legends video game. The recorded data include the players' physiological activity (e.g., movements, pulse, and saccades) obtained from various sensors, self-reported after-match surveys, and in-game data. An important feature of the dataset is simultaneous data collection from five players, which facilitates the analysis of sensor data at the team level. After collecting the dataset, we validated it. In particular, we demonstrate that stress and concentration levels are less correlated for professional players, indicating a more independent playstyle. We also show that the absence of team communication affects professional players less than amateur ones. To investigate other possible use cases of the dataset, we trained classical machine learning algorithms for skill prediction and player re-identification using 3-minute sessions of sensor data. The best models achieved accuracy scores of 0.856 and 0.521 (versus a chance level of 0.10) on a validation set for skill prediction and player re-identification, respectively. The dataset is available at https://github.com/asmerdov/eSports_Sensors_Dataset.
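A chance level of 0.10 corresponds to guessing uniformly among 10 classes, so the re-identification accuracy of 0.521 is roughly five times chance. The abstract also describes classifying 3-minute sessions of sensor data with classical machine learning. A minimal sketch of that preprocessing follows, assuming a (time, channels) array at a known sampling rate and using per-channel mean and standard deviation as stand-in features. The function names are hypothetical, and the paper's actual feature set is not given here.

```python
import numpy as np


def split_into_sessions(signal: np.ndarray, sample_rate_hz: float, session_s: float = 180.0) -> np.ndarray:
    """Split a (time, channels) recording into non-overlapping fixed-length sessions.

    Trailing samples that do not fill a whole session are dropped.
    Returns an array of shape (n_sessions, samples_per_session, channels).
    """
    samples_per_session = int(round(session_s * sample_rate_hz))
    n_sessions = signal.shape[0] // samples_per_session
    trimmed = signal[: n_sessions * samples_per_session]
    return trimmed.reshape(n_sessions, samples_per_session, signal.shape[1])


def session_features(sessions: np.ndarray) -> np.ndarray:
    """Per-channel mean and standard deviation: one flat feature vector per session."""
    return np.concatenate([sessions.mean(axis=1), sessions.std(axis=1)], axis=1)
```

Each row of the feature matrix can then be labeled with the player's identity (for re-identification) or skill group (for skill prediction) and fed to any standard classifier.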

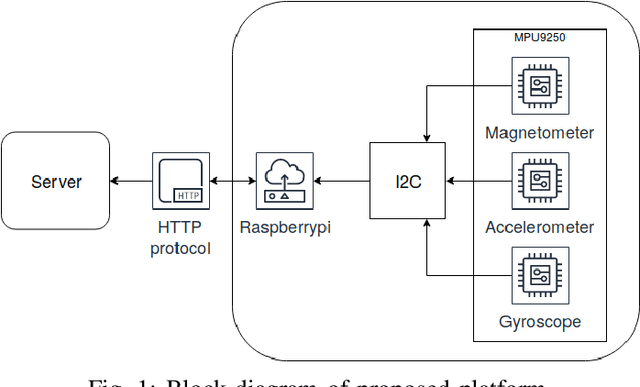

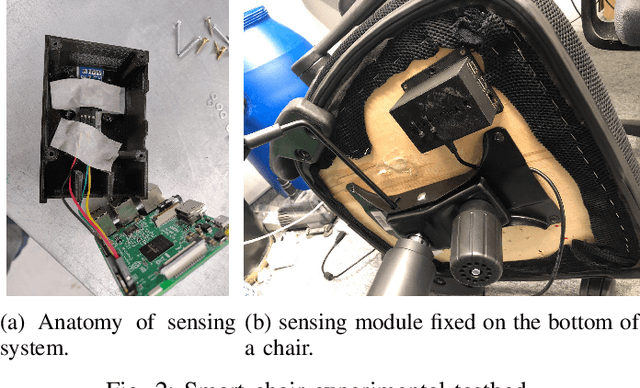

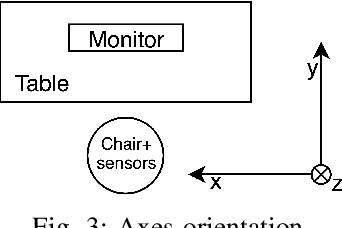

Understanding Cyber Athletes Behaviour Through a Smart Chair: CS:GO and Monolith Team Scenario

Aug 18, 2019

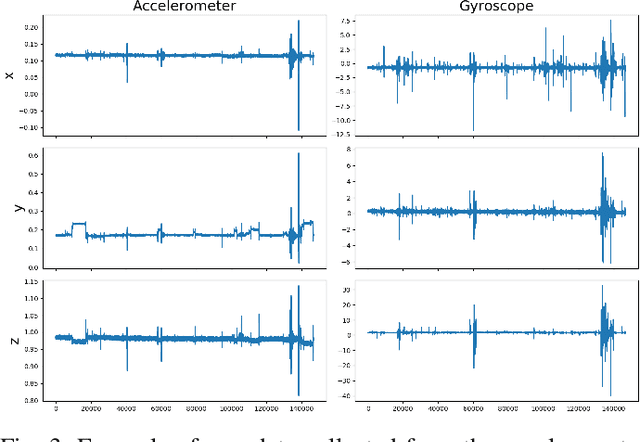

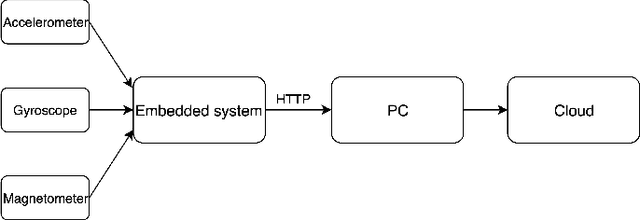

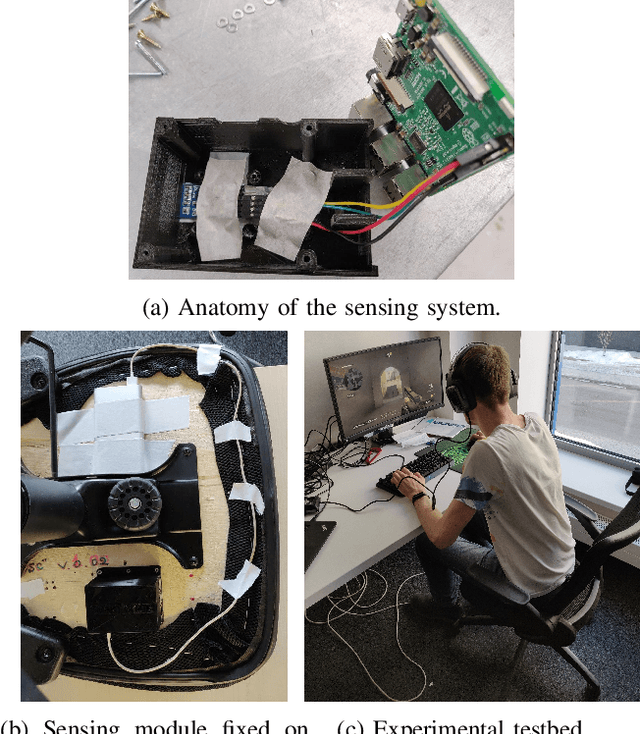

Abstract: eSports is a rapidly developing multidisciplinary domain. However, research and experimentation in eSports are still in their infancy. In this work, we propose a smart chair platform: an unobtrusive approach to collecting data on eSports athletes and further processing the data with machine learning methods. The use case scenario involves three groups of players, all playing CS:GO: "cyber athletes" (the Monolith team), semi-professional players, and newbies. In particular, we collect data from the accelerometer and gyroscope integrated into the chair and apply machine learning algorithms to analyze the data. Our results demonstrate that professional athletes can be identified by their behaviour in the chair while playing the game.

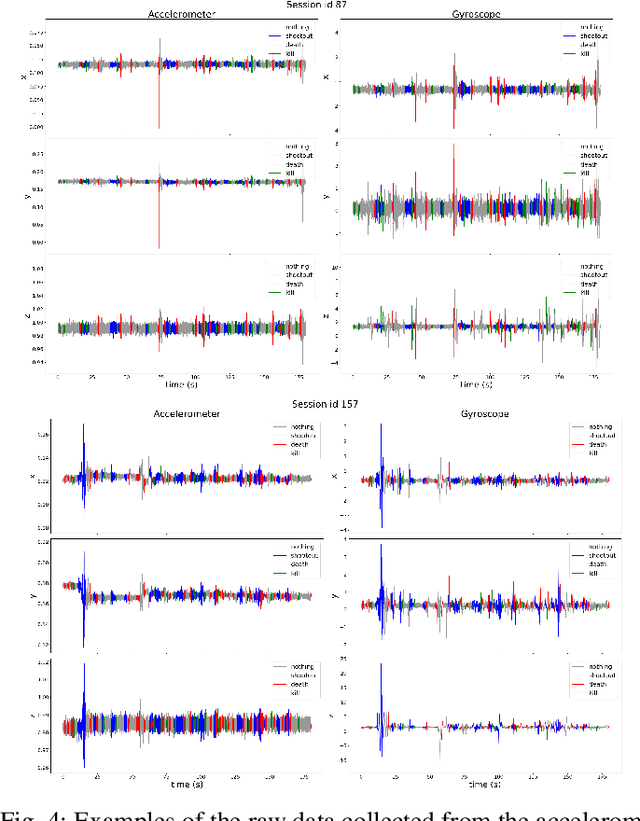

eSports Pro-Players Behavior During the Game Events: Statistical Analysis of Data Obtained Using the Smart Chair

Aug 18, 2019

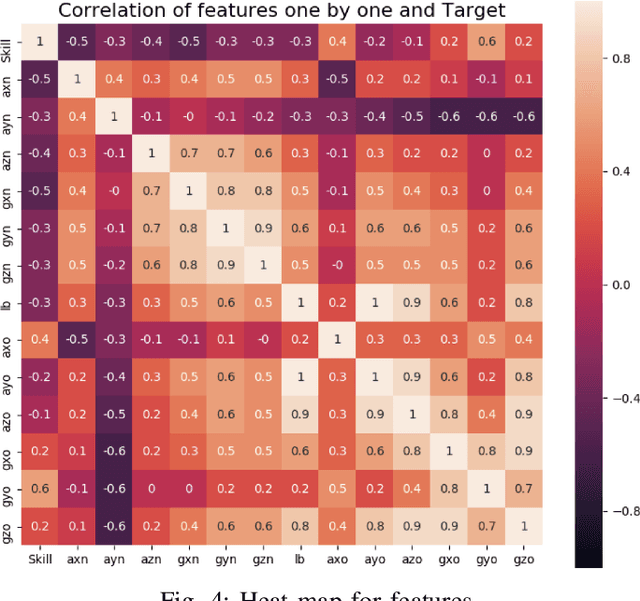

Abstract: Today's competition between professional eSports teams is so intense that in-depth analysis of players' performance is crucial for building a strong team. There are two main approaches to such an assessment: obtaining features and metrics directly from in-game data, or collecting detailed information about the player, including data on his/her physical training. While the correlation between a player's skill and in-game data has already been covered in many papers, very few works analyze an eSports athlete's skill through his/her physical behavior. We propose a smart chair platform that collects data on a person's behavior in the chair using an integrated accelerometer, gyroscope, and magnetometer. We extract important game events to characterize the players' physical reactions to them. The obtained data are used to train machine learning models that distinguish between low-skilled and high-skilled players. We identify the key features during the game and discuss the results.

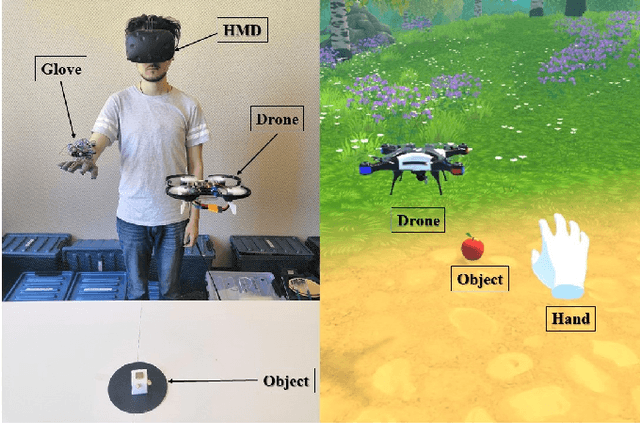

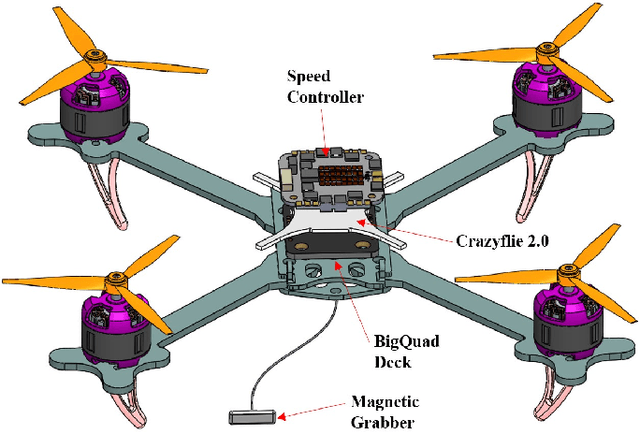

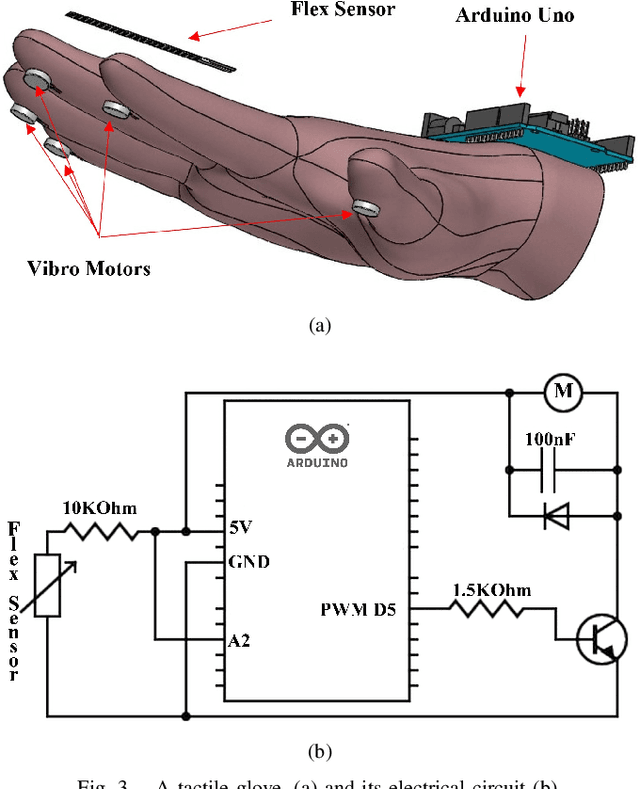

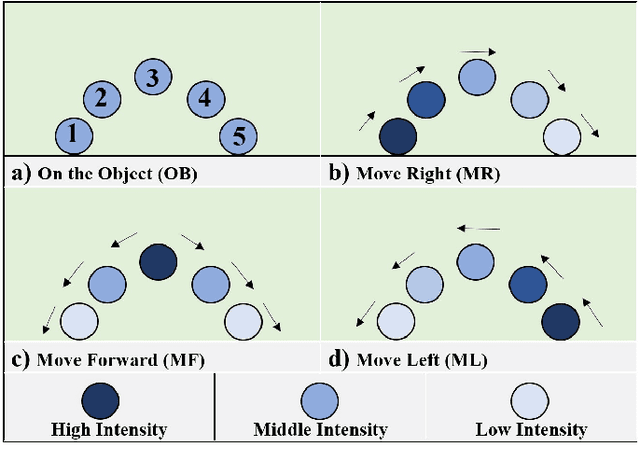

DronePick: Object Picking and Delivery Teleoperation with the Drone Controlled by a Wearable Tactile Display

Aug 07, 2019

Abstract: We report on DronePick, a teleoperation system that provides remote object picking and delivery by a human-controlled quadcopter. The main novelty of the proposed system is that the human user continuously receives visual and haptic feedback for accurate teleoperation. DronePick consists of a quadcopter equipped with a magnetic grabber, a tactile glove with a finger motion tracking sensor, a hand tracking system, and a Virtual Reality (VR) application. The human operator teleoperates the quadcopter by changing the position of his/her hand. Vibrotactile patterns representing the location of the remote object relative to the quadcopter are delivered to the glove, helping the operator determine when the quadcopter is directly above the object. When the "pick" command is sent by clasping the hand in the glove, the quadcopter descends and the magnetic grabber attaches to the target object. The whole scenario is simulated in parallel in VR. The air flow from the quadcopter and the relative positions of VR objects help the operator determine the exact position of the object to be picked and delivered. The experiments showed that users recognized the vibrotactile patterns at high rates: an average recognition rate of 99% and an average recognition time of 2.36 s. A real-life implementation of DronePick featuring object picking and delivery to a human was developed and tested.
