Paul Lukowicz

Calibration-Free Induced Magnetic Field Indoor and Outdoor Positioning via Data-Driven Modeling

Jan 31, 2026

Abstract: Induced magnetic field (IMF)-based localization offers a robust alternative to wave-based positioning technologies due to its resilience to non-line-of-sight conditions, environmental dynamics, and wireless interference. However, existing magnetic localization systems typically rely on analytical field inversion, manual calibration, or environment-specific fingerprinting, limiting their scalability and transferability. This paper presents a data-driven IMF localization framework that directly maps induced magnetic field measurements to spatial coordinates using supervised learning, eliminating explicit environment-specific calibration. By replacing explicit field modeling with learning-based inference, the proposed approach captures nonlinear field interactions and environmental effects. An orientation-invariant feature representation enables rotation-independent deployment. The system is evaluated across multiple indoor environments and an outdoor deployment. Benchmarking against classical and deep learning baselines shows that a Random Forest regressor achieves sub-20 cm accuracy in 2D and sub-30 cm in 3D localization. Cross-environment validation demonstrates that models trained indoors generalize to outdoor environments without retraining. We further analyze scalability by varying transmitter spacing, showing that coverage and accuracy can be balanced through deployment density. Overall, this work demonstrates that data-driven IMF localization is a scalable and transferable solution for real-world positioning.
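As a rough illustration of what an orientation-invariant feature can look like, the sketch below uses the magnitude of a 3-axis field reading, which does not change when the receiver is rotated. This is an assumption chosen for illustration only: the abstract does not specify the paper's actual feature representation, and the readings, function names, and rotation angle here are hypothetical.

```python
import math

def rotate_z(v, angle):
    """Rotate a 3D vector about the z-axis by `angle` radians."""
    x, y, z = v
    c, s = math.cos(angle), math.sin(angle)
    return (c * x - s * y, s * x + c * y, z)

def magnitude(v):
    """Euclidean norm of a 3D vector; unchanged by any rotation."""
    return math.sqrt(sum(c * c for c in v))

def invariant_features(per_transmitter_vectors):
    """One magnitude per transmitter (hypothetical feature choice)."""
    return [magnitude(v) for v in per_transmitter_vectors]

# Hypothetical readings (arbitrary units), one 3-axis vector per transmitter.
readings = [(0.3, -1.2, 0.5), (2.0, 0.1, -0.4)]

# Simulate the receiver being turned by 0.7 rad about the vertical axis.
rotated = [rotate_z(v, 0.7) for v in readings]

original_features = invariant_features(readings)
rotated_features = invariant_features(rotated)

# The features agree up to floating-point error despite the rotation.
features_match = all(
    math.isclose(a, b, rel_tol=1e-9)
    for a, b in zip(original_features, rotated_features)
)
print(features_match)
```

Features of this kind could then be passed to any supervised regressor, such as the Random Forest mentioned in the abstract, without the model having to learn the receiver's orientation.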

Promoting Sustainable Web Agents: Benchmarking and Estimating Energy Consumption through Empirical and Theoretical Analysis

Nov 06, 2025

Abstract: Web agents, such as OpenAI's Operator and Google's Project Mariner, are powerful agentic systems pushing the boundaries of Large Language Models (LLMs). They can autonomously interact with the internet at the user's behest, for example by navigating websites, filling in search masks, and comparing price lists. Though web agent research is thriving, the sustainability issues it induces remain largely unexplored. To highlight the urgency of this issue, we provide an initial exploration of the energy and CO$_2$ cost associated with web agents from both a theoretical perspective (via estimation) and an empirical perspective (via benchmarking). Our results show how different philosophies in web agent creation can severely impact the energy expended, and that consuming more energy does not necessarily yield better results. We highlight a lack of transparency in disclosing the model parameters and processes used by some web agents as a limiting factor when estimating energy consumption. Our work contributes towards a change in thinking about how we evaluate web agents, advocating for dedicated metrics measuring energy consumption in benchmarks.

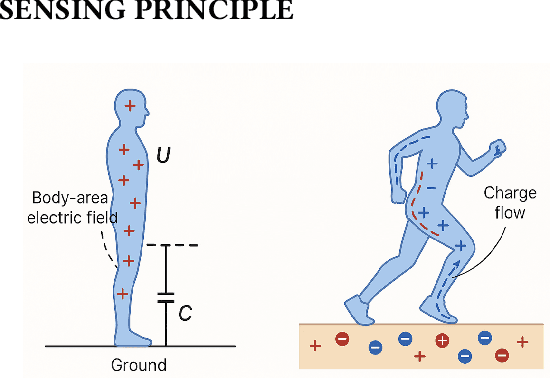

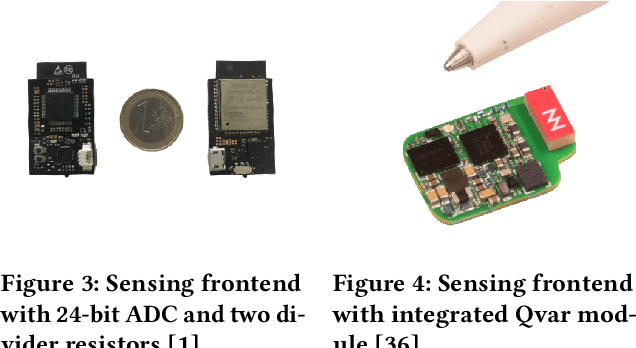

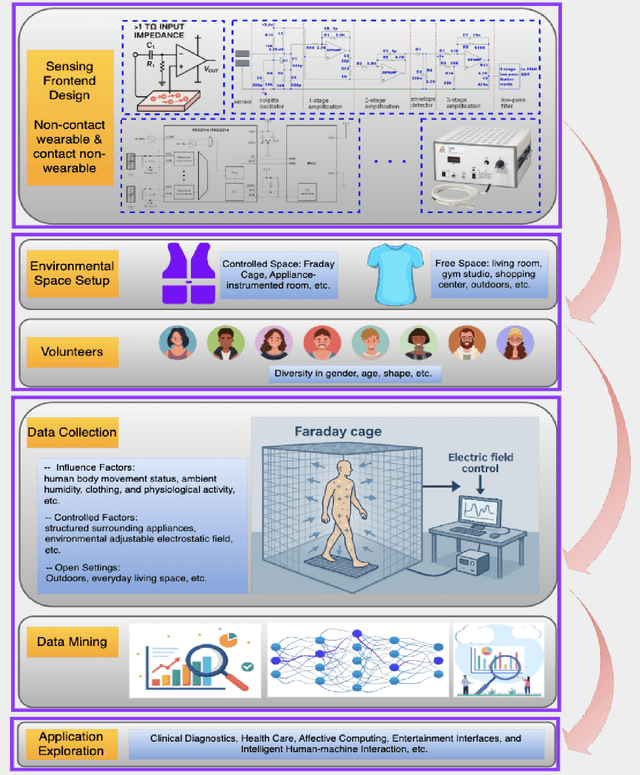

Passive Body-Area Electrostatic Field (Human Body Capacitance) for Ubiquitous Computing

Jul 17, 2025

Abstract: Passive body-area electrostatic field sensing, also referred to as human body capacitance (HBC), is an energy-efficient and non-intrusive sensing modality that exploits the human body's inherent electrostatic properties to perceive human behaviors. This paper presents a focused overview of passive HBC sensing, including its underlying principles, historical evolution, hardware architectures, and applications across research domains. Key challenges, such as susceptibility to environmental variation, are discussed to motivate mitigation techniques. Future research opportunities in sensor fusion and hardware enhancement are highlighted. To support continued innovation, this work provides open-source resources and aims to empower researchers and developers to leverage passive electrostatic sensing for next-generation wearable and ambient intelligence systems.

TinierHAR: Towards Ultra-Lightweight Deep Learning Models for Efficient Human Activity Recognition on Edge Devices

Jul 10, 2025

Abstract: Human Activity Recognition (HAR) on resource-constrained wearable devices demands inference models that balance accuracy with computational efficiency. This paper introduces TinierHAR, an ultra-lightweight deep learning architecture that combines residual depthwise separable convolutions, gated recurrent units (GRUs), and temporal aggregation to achieve SOTA efficiency without compromising performance. Evaluated across 14 public HAR datasets, TinierHAR reduces parameters by 2.7x (vs. TinyHAR) and 43.3x (vs. DeepConvLSTM), and MACs by 6.4x and 58.6x, respectively, while maintaining the average F1-scores. Beyond quantitative gains, this work provides the first systematic ablation study dissecting the contributions of spatial-temporal components across the proposed TinierHAR, the prior SOTA TinyHAR, and the classical DeepConvLSTM, offering actionable insights for designing efficient HAR systems. Finally, we discuss the findings and suggest principled design guidelines for future efficient HAR. To catalyze edge-HAR research, we open-source all materials in this work for future benchmarking: https://github.com/zhaxidele/TinierHAR
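To give a feel for why depthwise separable convolutions shrink a model, the sketch below compares weight counts for a standard 1D convolution and its depthwise separable counterpart. The channel counts and kernel size are hypothetical and are not TinierHAR's actual configuration; bias terms are omitted for simplicity.

```python
def standard_conv1d_params(c_in, c_out, kernel):
    """Weights in a standard 1D convolution (bias omitted)."""
    return c_in * c_out * kernel

def depthwise_separable_conv1d_params(c_in, c_out, kernel):
    """Weights in a depthwise conv followed by a 1x1 pointwise conv (bias omitted)."""
    depthwise = c_in * kernel   # one kernel per input channel
    pointwise = c_in * c_out    # 1x1 convolution that mixes channels
    return depthwise + pointwise

# Hypothetical layer sizes, chosen only for illustration.
c_in, c_out, kernel = 64, 64, 5

standard = standard_conv1d_params(c_in, c_out, kernel)
separable = depthwise_separable_conv1d_params(c_in, c_out, kernel)

print(standard, separable, round(standard / separable, 2))
# prints: 20480 4416 4.64
```

The per-layer saving grows with the kernel size and the number of output channels, which is one reason this building block is common in edge-oriented architectures.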

SImpHAR: Advancing impedance-based human activity recognition using 3D simulation and text-to-motion models

Jul 08, 2025

Abstract: Human Activity Recognition (HAR) with wearable sensors is essential for applications in healthcare, fitness, and human-computer interaction. Bio-impedance sensing offers unique advantages for fine-grained motion capture but remains underutilized due to the scarcity of labeled data. We introduce SImpHAR, a novel framework addressing this limitation through two core contributions. First, we propose a simulation pipeline that generates realistic bio-impedance signals from 3D human meshes using shortest-path estimation, soft-body physics, and text-to-motion generation, serving as a digital twin for data augmentation. Second, we design a decoupled two-stage training strategy that enables broader activity coverage without requiring label-aligned synthetic data. We evaluate SImpHAR on our collected ImpAct dataset and two public benchmarks, showing consistent improvements over state-of-the-art methods, with gains of up to 22.3% in accuracy and 21.8% in macro F1 score. Our results highlight the promise of simulation-driven augmentation and modular training for impedance-based HAR.
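For readers unfamiliar with the macro F1 score reported above, the following is a minimal sketch of how it is computed: the F1 score is calculated per class and then averaged with equal weight, so rare activities count as much as frequent ones. The labels and predictions are made up for illustration and are unrelated to the ImpAct dataset.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the denominator is 0.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)

# Hypothetical labels: the rare class "sit" is always missed.
y_true = ["walk", "walk", "walk", "walk", "sit", "jump"]
y_pred = ["walk", "walk", "walk", "walk", "walk", "jump"]

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(round(accuracy, 3), round(macro_f1(y_true, y_pred), 3))
# prints: 0.833 0.63
```

In this toy case accuracy looks high while macro F1 is pulled down by the missed class, which is why the two metrics are usually reported together.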

Human in the Latent Loop (HILL): Interactively Guiding Model Training Through Human Intuition

May 09, 2025

Abstract: Latent space representations are critical for understanding and improving the behavior of machine learning models, yet they often remain obscure and intricate. Understanding and exploring the latent space has the potential to contribute valuable human intuition and expertise about the respective domains. In this work, we present HILL, an interactive framework allowing users to incorporate human intuition into model training by interactively reshaping latent space representations. The modifications are infused into the model training loop via a novel approach inspired by knowledge distillation, treating the user's modifications as a teacher to guide the model in reshaping its intrinsic latent representation. The process allows the model to converge more effectively and overcome inefficiencies, and provides beneficial insights to the user. We evaluated HILL in a user study tasking participants to train an optimal model, closely observing the employed strategies. The results demonstrated that human-guided latent space modifications enhance model performance while maintaining generalization, yet also revealed the risks of including user biases. Our work introduces a novel human-AI interaction paradigm that infuses human intuition into model training and critically examines the impact of human intervention on training strategies and potential biases.

TxP: Reciprocal Generation of Ground Pressure Dynamics and Activity Descriptions for Improving Human Activity Recognition

May 04, 2025

Abstract: Sensor-based human activity recognition (HAR) has predominantly focused on Inertial Measurement Units and vision data, often overlooking the capabilities unique to pressure sensors, which capture subtle body dynamics and shifts in the center of mass. Despite their potential for postural and balance-based activities, pressure sensors remain underutilized in the HAR domain due to limited datasets. To bridge this gap, we propose to combine generative foundation models with pressure-specific HAR techniques. Specifically, we present a bidirectional Text$\times$Pressure (TxP) model that uses generative foundation models to interpret pressure data as natural language. TxP accomplishes two tasks: (1) Text2Pressure, converting activity text descriptions into pressure sequences, and (2) Pressure2Text, generating activity descriptions and classifications from dynamic pressure maps. Leveraging pre-trained models like CLIP and LLaMA 2 13B Chat, TxP is trained on our synthetic PressLang dataset, containing over 81,100 text-pressure pairs. Validated on real-world data for activities such as yoga and daily tasks, TxP provides novel approaches to data augmentation and classification grounded in atomic actions. This improves HAR performance by up to 12.4% in macro F1 score compared to the state-of-the-art, advancing pressure-based HAR with broader applications and deeper insights into human movement.

Talk2X -- An Open-Source Toolkit Facilitating Deployment of LLM-Powered Chatbots on the Web

Apr 04, 2025

Abstract: Integrated into websites, LLM-powered chatbots offer alternative means of navigation and information retrieval, leading to a shift in how users access information on the web. Yet, predominantly closed-source solutions limit proliferation among web hosts and suffer from a lack of transparency with regard to implementation details and energy efficiency. In this work, we propose our openly available agent Talk2X, which leverages an adapted retrieval-augmented generation (RAG) approach combined with an automatically generated vector database, benefiting energy efficiency. Talk2X's architecture is generalizable to arbitrary websites, offering developers a ready-to-use tool for integration. Using a mixed-methods approach, we evaluated Talk2X's usability by tasking users to acquire specific assets from an open science repository. Talk2X significantly improved task completion time, correctness, and user experience, supporting users in quickly pinpointing specific information as compared to standard user-website interaction. Our findings contribute technical advancements to an ongoing paradigm shift in how we access information on the web.

Optimization of An Induced Magnetic Field-Based Positioning System

Mar 08, 2025

Abstract: Using oscillating magnetic fields for indoor positioning is robust in dynamic environments. This work presents hardware and software optimizations of an induced magnetic field positioning system. We describe a new coil architecture for both the transmitter and receiver that reduces inter-axis crosstalk. A new analog circuit design on the receiver side attains an acceptable noise level and increases the detection range from 4 m to 8 m (the covered area increases from 50 m$^2$ to 200 m$^2$). The median positioning error is reduced from 0.56 m to 0.25 m in the near field with fingerprinting methods. Experiments in office and factory areas (including robotic and industrial equipment) demonstrate the system's robustness in large areas. This work aims to give researchers working on the same topic constructive optimization directions for their own induced magnetic field-based systems.
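The reported range and area figures are consistent with a simple scaling argument. Assuming the covered area $A$ grows with the square of the detection range $d$ (an assumption made here for illustration, not stated in the abstract), doubling the range quadruples the area:

$$\frac{A_{\text{new}}}{A_{\text{old}}} = \left(\frac{d_{\text{new}}}{d_{\text{old}}}\right)^{2} = \left(\frac{8\ \text{m}}{4\ \text{m}}\right)^{2} = 4, \qquad 50\ \text{m}^2 \times 4 = 200\ \text{m}^2.$$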

Hybrid CNN-Dilated Self-attention Model Using Inertial and Body-Area Electrostatic Sensing for Gym Workout Recognition, Counting, and User Authentification

Mar 08, 2025

Abstract: While human body capacitance (HBC) has been explored as a novel wearable motion sensing modality, its competence has never been quantitatively demonstrated against that of the dominant inertial measurement unit (IMU) in practical scenarios. This work is thus motivated to evaluate the contribution of HBC in wearable motion sensing. A real-life case study, gym workout tracking, is described to assess the effectiveness of HBC as a complement to IMU in activity recognition. Fifty gym sessions from ten volunteers were collected, yielding a fifty-hour annotated IMU and HBC dataset. With a hybrid CNN-Dilated neural network model that uses a self-attention mechanism, HBC slightly improves accuracy over the IMU for workout recognition and has substantial advantages over the IMU for repetition counting. This work helps to enhance the understanding of HBC, a novel wearable motion-sensing modality based on the body-area electrostatic field. All materials presented in this work are open-sourced to promote further study: https://github.com/zhaxidele/Toolkit-for-HBC-sensing
