Jakob Karolus

Promoting Sustainable Web Agents: Benchmarking and Estimating Energy Consumption through Empirical and Theoretical Analysis

Nov 06, 2025Abstract:Web agents, like OpenAI's Operator and Google's Project Mariner, are powerful agentic systems pushing the boundaries of Large Language Models (LLM). They can autonomously interact with the internet at the user's behest, such as navigating websites, filling search masks, and comparing price lists. Though web agent research is thriving, induced sustainability issues remain largely unexplored. To highlight the urgency of this issue, we provide an initial exploration of the energy and $CO_2$ cost associated with web agents from both a theoretical -via estimation- and an empirical perspective -by benchmarking. Our results show how different philosophies in web agent creation can severely impact the associated expended energy, and that more energy consumed does not necessarily equate to better results. We highlight a lack of transparency regarding disclosing model parameters and processes used for some web agents as a limiting factor when estimating energy consumption. Our work contributes towards a change in thinking of how we evaluate web agents, advocating for dedicated metrics measuring energy consumption in benchmarks.

Human in the Latent Loop (HILL): Interactively Guiding Model Training Through Human Intuition

May 09, 2025Abstract:Latent space representations are critical for understanding and improving the behavior of machine learning models, yet they often remain obscure and intricate. Understanding and exploring the latent space has the potential to contribute valuable human intuition and expertise about respective domains. In this work, we present HILL, an interactive framework allowing users to incorporate human intuition into the model training by interactively reshaping latent space representations. The modifications are infused into the model training loop via a novel approach inspired by knowledge distillation, treating the user's modifications as a teacher to guide the model in reshaping its intrinsic latent representation. The process allows the model to converge more effectively and overcome inefficiencies, as well as provide beneficial insights to the user. We evaluated HILL in a user study tasking participants to train an optimal model, closely observing the employed strategies. The results demonstrated that human-guided latent space modifications enhance model performance while maintaining generalization, yet also revealing the risks of including user biases. Our work introduces a novel human-AI interaction paradigm that infuses human intuition into model training and critically examines the impact of human intervention on training strategies and potential biases.

Talk2X -- An Open-Source Toolkit Facilitating Deployment of LLM-Powered Chatbots on the Web

Apr 04, 2025Abstract:Integrated into websites, LLM-powered chatbots offer alternative means of navigation and information retrieval, leading to a shift in how users access information on the web. Yet, predominantly closed-sourced solutions limit proliferation among web hosts and suffer from a lack of transparency with regard to implementation details and energy efficiency. In this work, we propose our openly available agent Talk2X leveraging an adapted retrieval-augmented generation approach (RAG) combined with an automatically generated vector database, benefiting energy efficiency. Talk2X's architecture is generalizable to arbitrary websites offering developers a ready to use tool for integration. Using a mixed-methods approach, we evaluated Talk2X's usability by tasking users to acquire specific assets from an open science repository. Talk2X significantly improved task completion time, correctness, and user experience supporting users in quickly pinpointing specific information as compared to standard user-website interaction. Our findings contribute technical advancements to an ongoing paradigm shift of how we access information on the web.

PromptMap: An Alternative Interaction Style for AI-Based Image Generation

Mar 12, 2025Abstract:Recent technological advances popularized the use of image generation among the general public. Crafting effective prompts can, however, be difficult for novice users. To tackle this challenge, we developed PromptMap, a new interaction style for text-to-image AI that allows users to freely explore a vast collection of synthetic prompts through a map-like view with semantic zoom. PromptMap groups images visually by their semantic similarity, allowing users to discover relevant examples. We evaluated PromptMap in a between-subject online study ($n=60$) and a qualitative within-subject study ($n=12$). We found that PromptMap supported users in crafting prompts by providing them with examples. We also demonstrated the feasibility of using LLMs to create vast example collections. Our work contributes a new interaction style that supports users unfamiliar with prompting in achieving a satisfactory image output.

Towards Sustainable Web Agents: A Plea for Transparency and Dedicated Metrics for Energy Consumption

Feb 25, 2025

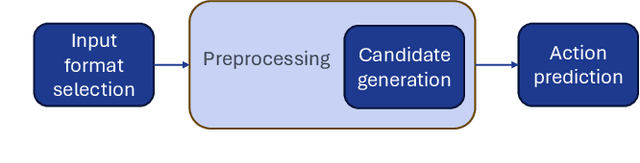

Abstract:Improvements in the area of large language models have shifted towards the construction of models capable of using external tools and interpreting their outputs. These so-called web agents have the ability to interact autonomously with the internet. This allows them to become powerful daily assistants handling time-consuming, repetitive tasks while supporting users in their daily activities. While web agent research is thriving, the sustainability aspect of this research direction remains largely unexplored. We provide an initial exploration of the energy and CO2 cost associated with web agents. Our results show how different philosophies in web agent creation can severely impact the associated expended energy. We highlight lacking transparency regarding the disclosure of model parameters and processes used for some web agents as a limiting factor when estimating energy consumption. As such, our work advocates a change in thinking when evaluating web agents, warranting dedicated metrics for energy consumption and sustainability.

Unreflected Acceptance -- Investigating the Negative Consequences of ChatGPT-Assisted Problem Solving in Physics Education

Aug 21, 2023Abstract:Large language models (LLMs) have recently gained popularity. However, the impact of their general availability through ChatGPT on sensitive areas of everyday life, such as education, remains unclear. Nevertheless, the societal impact on established educational methods is already being experienced by both students and educators. Our work focuses on higher physics education and examines problem solving strategies. In a study, students with a background in physics were assigned to solve physics exercises, with one group having access to an internet search engine (N=12) and the other group being allowed to use ChatGPT (N=27). We evaluated their performance, strategies, and interaction with the provided tools. Our results showed that nearly half of the solutions provided with the support of ChatGPT were mistakenly assumed to be correct by the students, indicating that they overly trusted ChatGPT even in their field of expertise. Likewise, in 42% of cases, students used copy & paste to query ChatGPT -- an approach only used in 4% of search engine queries -- highlighting the stark differences in interaction behavior between the groups and indicating limited reflection when using ChatGPT. In our work, we demonstrated a need to (1) guide students on how to interact with LLMs and (2) create awareness of potential shortcomings for users.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge