Vladimir Shirokun

DroneTrap: Drone Catching in Midair by Soft Robotic Hand with Color-Based Force Detection and Hand Gesture Recognition

Feb 07, 2021

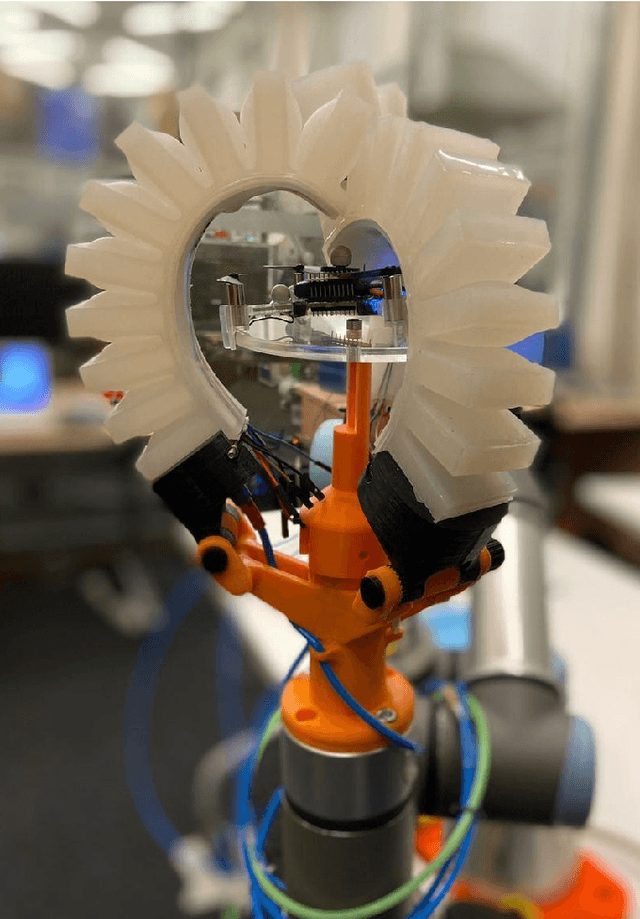

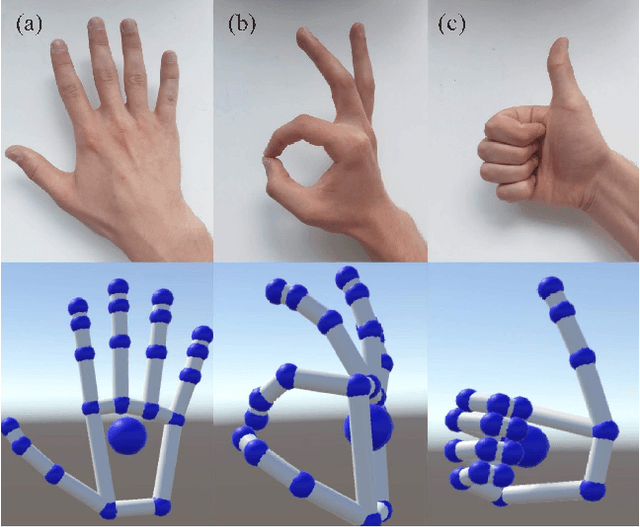

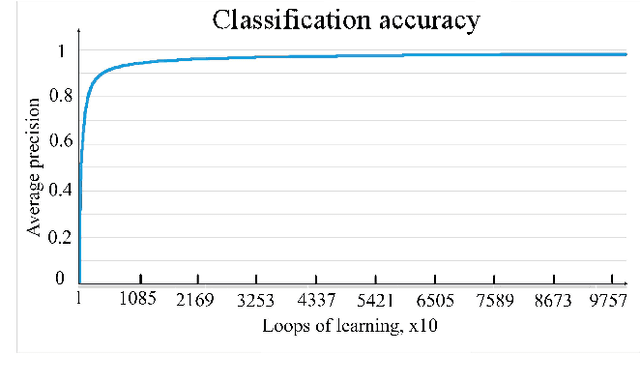

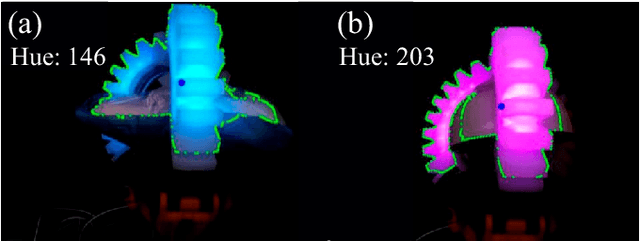

Abstract: The paper proposes a novel concept of drone docking that makes the process as safe and fast as possible. The idea behind the project is that a robot with a gripper grasps the drone in midair. The human operator navigates the robotic arm with an ML-based gesture recognition interface. The 3-finger robot hand with soft fingers and integrated touch sensors is pneumatically actuated. This makes the catch safe, avoiding damage to the drone's mechanical structure, fragile propellers, and motors. Additionally, the soft hand has a unique technology for conveying force information to the remote computer vision (CV) system through the color of the fingers. As a result, not only the control system but also the human operator can understand the force applied. By wearing a mocap glove with gesture recognition, which was developed and applied for the high-level control of DroneTrap, the operator has full control of robot motion and task execution without additional programming. The experimental results revealed that the developed color-based force estimation can be applied to capturing rigid objects with high precision (95.3%). The proposed technology can potentially revolutionize the landing and deployment of drones for parcel delivery on uneven ground, structure inspections, risky operations, etc.

DronePick: Object Picking and Delivery Teleoperation with the Drone Controlled by a Wearable Tactile Display

Aug 07, 2019

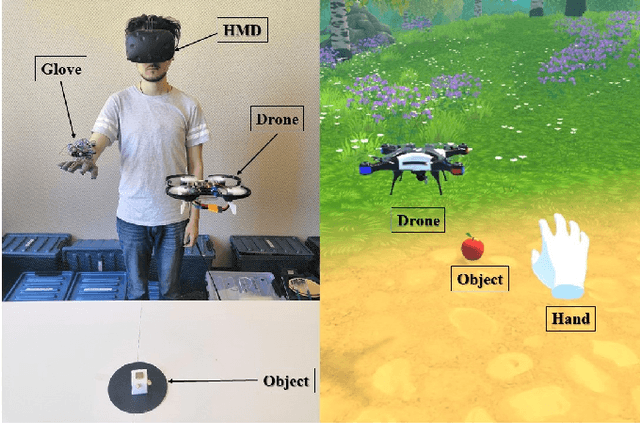

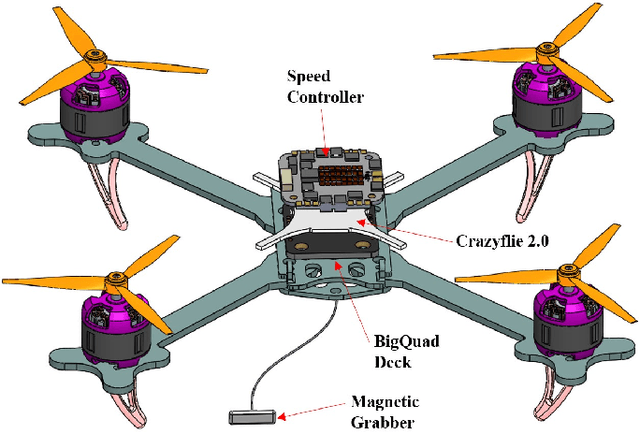

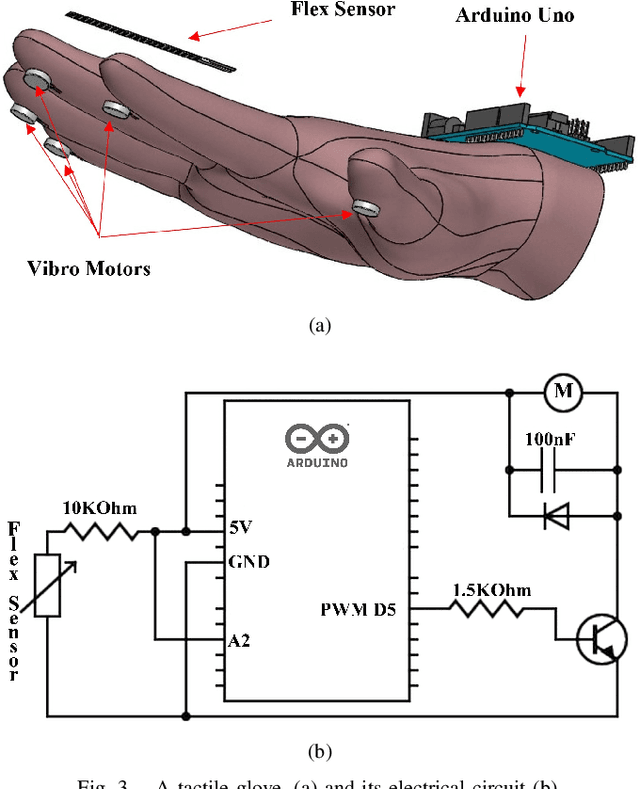

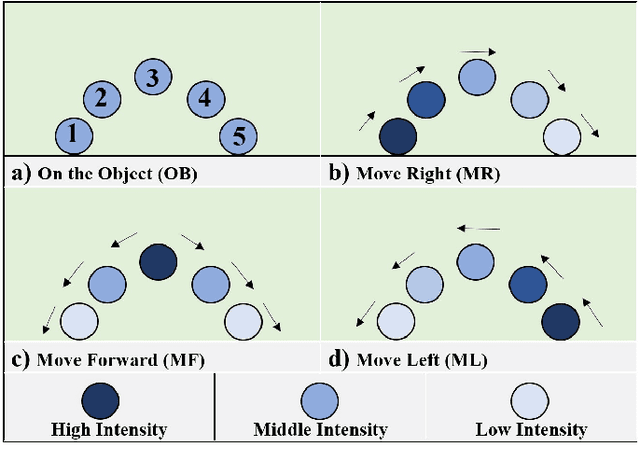

Abstract: We report on the teleoperation system DronePick, which provides remote object picking and delivery by a human-controlled quadcopter. The main novelty of the proposed system is that the human user continuously receives visual and haptic feedback for accurate teleoperation. DronePick consists of a quadcopter equipped with a magnetic grabber, a tactile glove with a finger motion tracking sensor, a hand tracking system, and a Virtual Reality (VR) application. The human operator teleoperates the quadcopter by changing the position of the hand. The proposed vibrotactile patterns, which represent the location of the remote object relative to the quadcopter, are delivered to the glove. They help the operator determine when the quadcopter is right above the object. When the "pick" command is sent by clasping the hand in the glove, the quadcopter decreases its altitude and the magnetic grabber attaches to the target object. The whole scenario is simulated in parallel in VR. The air flow from the quadcopter and the relative positions of VR objects help the operator determine the exact position of the delivered object to be picked. The experiments showed that users recognized the vibrotactile patterns with an average recognition rate of 99% and an average recognition time of 2.36 s. A real-life implementation of DronePick featuring object picking and delivery to a human was developed and tested.
