DroneTrap: Drone Catching in Midair by Soft Robotic Hand with Color-Based Force Detection and Hand Gesture Recognition


Feb 07, 2021

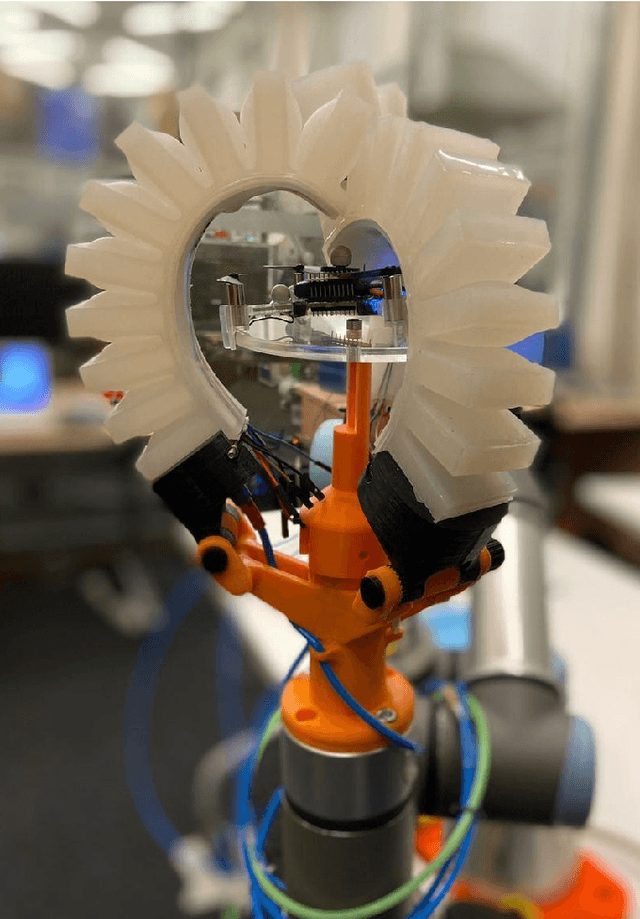

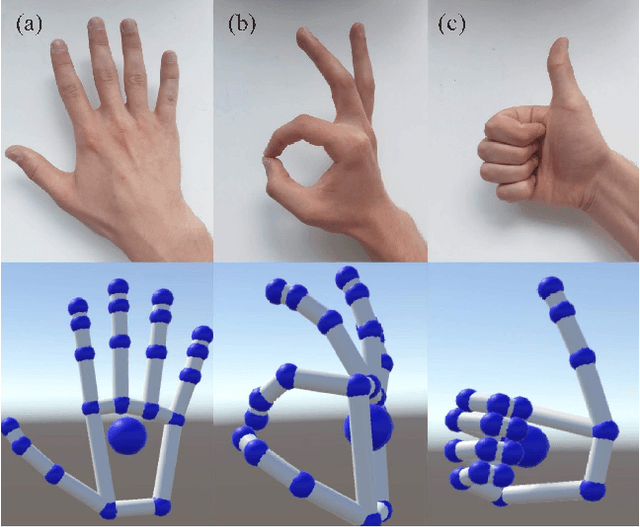

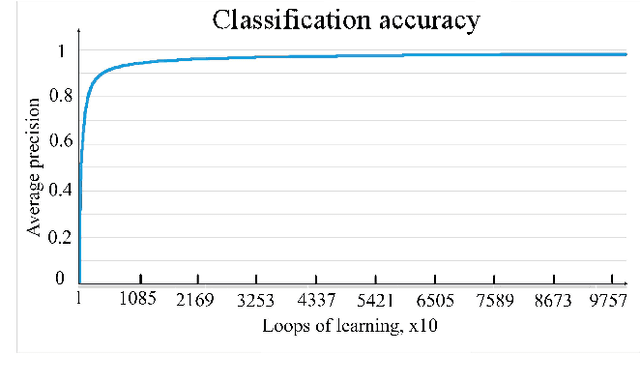

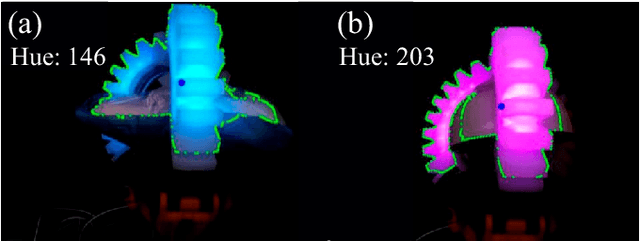

The paper proposes a novel concept for drone docking that aims to make the process as safe and fast as possible: a robotic arm equipped with a gripper grasps the drone in midair. A human operator navigates the robotic arm through an ML-based gesture recognition interface. The three-finger robotic hand, with soft fingers and integrated touch sensors, is pneumatically actuated. This makes the catch safe, avoiding damage to the drone's mechanical structure, fragile propellers, and motors. Additionally, the soft hand conveys force information to a remote computer vision (CV) system through the color of its fingers, so both the control system and the human operator can perceive the applied force. The operator has full control of robot motion and task execution, without additional programming, by wearing a mocap glove with gesture recognition, which was developed and applied for the high-level control of DroneTrap. The experimental results revealed that the developed color-based force estimation can be applied to capturing rigid objects with high precision (95.3%). The proposed technology can potentially revolutionize the landing and deployment of drones for parcel delivery on uneven ground, structure inspections, risky operations, etc.
