Amir Kheradmand

An Image-Guided Robotic System for Transcranial Magnetic Stimulation: System Development and Experimental Evaluation

Oct 20, 2024

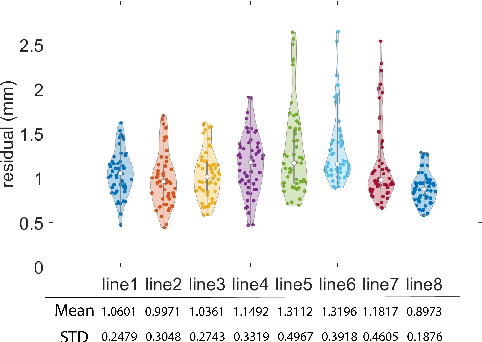

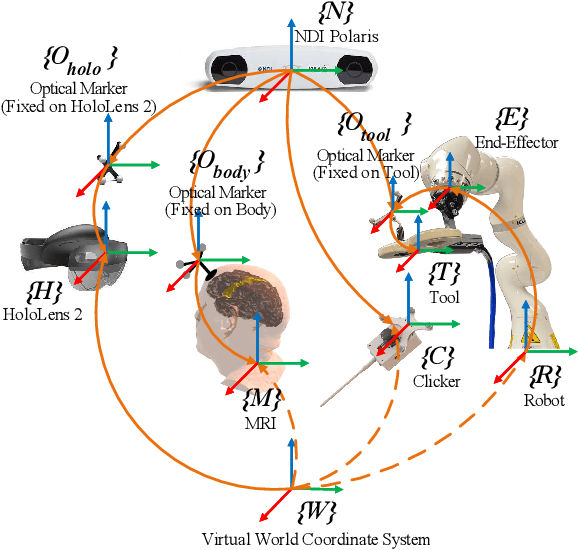

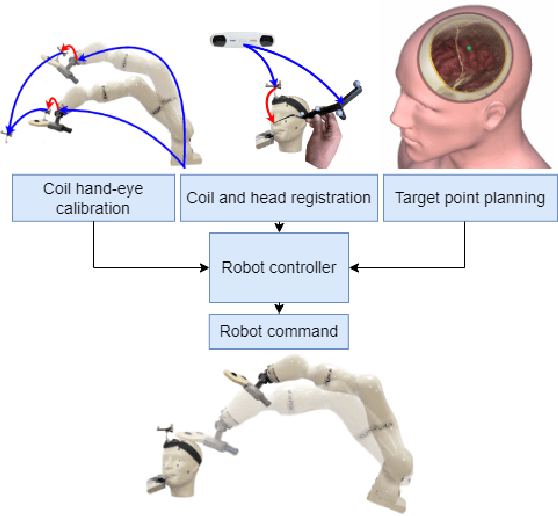

Abstract: Transcranial magnetic stimulation (TMS) is a noninvasive medical procedure that can modulate brain activity, and it is widely used in neuroscience and neurology research. Compared to manual operators, robots may improve the outcome of TMS due to their superior accuracy and repeatability. However, there has not been a widely accepted standard protocol for performing robotic TMS using fine-segmented brain images, resulting in arbitrary planned angles with respect to the true boundaries of the modulated cortex. Given that a recent TMS simulation study suggests a noticeable difference in outcomes when using different anatomical details, cortical shape should play a more significant role in deciding the optimal TMS coil pose. In this work, we introduce an image-guided robotic system for TMS that focuses on (1) establishing standardized planning methods and heuristics to define a reference (true zero) for the coil poses and (2) solving the issue that manual coil placement requires expert hand-eye coordination, which often leads to low repeatability of the experiments. To validate the design of our robotic system, a phantom study and a preliminary human subject study were performed. Our results show that the robotic method can halve the positional error and improve the rotational accuracy by up to two orders of magnitude. The accuracy is shown to be repeatable, as the standard deviation across multiple trials is lowered by an order of magnitude. The improved actuation accuracy successfully translates to the TMS application, with a higher and more stable induced voltage in magnetic field sensors.

GBEC: Geometry-Based Hand-Eye Calibration

Apr 08, 2024

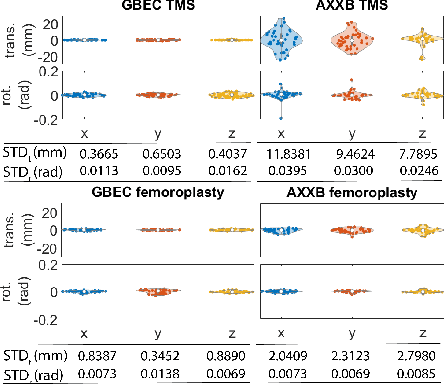

Abstract: Hand-eye calibration is the problem of solving the transformation from the end-effector of a robot to the sensor attached to it. Commonly employed techniques, such as AXXB or AXZB formulations, rely on regression methods that require collecting pose data from different robot configurations, which can produce low accuracy and repeatability. However, the derived transformation should solely depend on the geometry of the end-effector and the sensor attachment. We propose Geometry-Based End-Effector Calibration (GBEC) that enhances the repeatability and accuracy of the derived transformation compared to traditional hand-eye calibrations. To demonstrate improvements, we apply the approach to two different robot-assisted procedures: Transcranial Magnetic Stimulation (TMS) and femoroplasty. We also discuss the generalizability of GBEC for camera-in-hand and marker-in-hand sensor mounting methods. In the experiments, we perform GBEC between the robot end-effector and an optical tracker's rigid body marker attached to the TMS coil or femoroplasty drill guide. Previous research documents low repeatability and accuracy of the conventional methods for robot-assisted TMS hand-eye calibration. When compared to some existing methods, the proposed method relies solely on the geometry of the flange and the pose of the rigid-body marker, making it independent of workspace constraints or robot accuracy, without sacrificing the orthogonality of the rotation matrix. Our results validate the accuracy and applicability of the approach, providing a new and generalizable methodology for obtaining the transformation from the end-effector to a sensor.

On the Fly Robotic-Assisted Medical Instrument Planning and Execution Using Mixed Reality

Apr 08, 2024

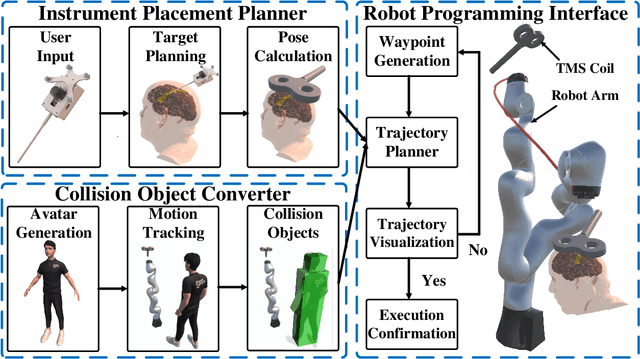

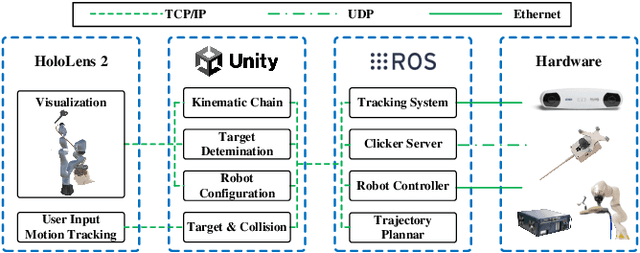

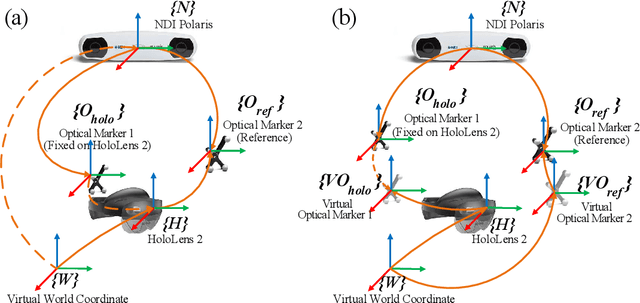

Abstract: Robotic-assisted medical systems (RAMS) have gained significant attention for their advantages in alleviating surgeons' fatigue and improving patients' outcomes. These systems comprise a range of human-computer interactions, including medical scene monitoring, anatomical target planning, and robot manipulation. However, despite their versatility and effectiveness, RAMS demand expertise in robotics, leading to a high learning cost for the operator. In this work, we introduce a novel framework using mixed reality technologies to ease the use of RAMS. The proposed framework achieves real-time planning and execution of medical instruments by providing 3D anatomical image overlay, human-robot collision detection, and a robot programming interface. These features, integrated with an easy-to-use calibration method for the head-mounted display, improve the effectiveness of human-robot interactions. To assess the feasibility of the framework, two medical applications are presented in this work: 1) coil placement during transcranial magnetic stimulation and 2) drill and injector device positioning during femoroplasty. Results from these use cases demonstrate its potential to extend to a wider range of medical scenarios.

Segment Any Medical Model Extended

Mar 26, 2024

Abstract: The Segment Anything Model (SAM) has drawn significant attention from researchers who work on medical image segmentation because of its generalizability. However, researchers have found that SAM may have limited performance on medical images compared to state-of-the-art non-foundation models. Regardless, the community sees potential in extending, fine-tuning, modifying, and evaluating SAM for analysis of medical imaging. An increasing number of works have been published focusing on the mentioned four directions, where variants of SAM are proposed. To this end, a unified platform helps push the boundary of the foundation model for medical images, facilitating the use, modification, and validation of SAM and its variants in medical image segmentation. In this work, we introduce SAMM Extended (SAMME), a platform that integrates new SAM variant models, adopts faster communication protocols, accommodates new interactive modes, and allows for fine-tuning of subcomponents of the models. These features can expand the potential of foundation models like SAM, and the results can be translated to applications such as image-guided therapy, mixed reality interaction, robotic navigation, and data augmentation.

Realtime Robust Shape Estimation of Deformable Linear Object

Mar 24, 2024

Abstract: Realtime shape estimation of continuum objects and manipulators is essential for developing accurate planning and control paradigms. The existing methods that create dense point clouds from camera images and/or use distinguishable markers on a deformable body have limitations in realtime tracking of large continuum objects/manipulators. The physical occlusion of markers can often compromise accurate shape estimation. We propose a robust method to estimate the shape of linear deformable objects in realtime using scattered and unordered key points. By utilizing a robust probability-based labeling algorithm, our approach identifies the true order of the detected key points and then reconstructs the shape using piecewise spline interpolation. The approach relies only on knowing the number of key points and the interval between two neighboring points. We demonstrate the robustness of the method when key points are partially occluded. The proposed method is also integrated into a simulation in Unity for tracking the shape of a cable with a length of 1 m and a radius of 5 mm. The simulation results show that our proposed approach achieves an average length error of 1.07% over the continuum's centerline and an average cross-section error of 2.11 mm. The real-world experiments of tracking and estimating a heavy-load cable show that the proposed approach is robust under occlusion and complex entanglement scenarios.
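
The two stages named in this abstract (ordering unordered key points, then reconstructing the shape with a piecewise spline) can be illustrated with a minimal sketch. It is not the authors' implementation: a greedy nearest-neighbor chain stands in for the probability-based labeling algorithm, which the abstract does not detail, and a uniform Catmull-Rom spline is assumed as the piecewise interpolant. The function names `order_key_points`, `reconstruct`, and `curve_length`, and the assumption that one end point of the object is known, are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def order_key_points(points: Sequence[Point], start: int = 0) -> List[Point]:
    """Greedy nearest-neighbor ordering (a stand-in, NOT the paper's
    probability-based labeling). Assumes points[start] is one end of the object."""
    remaining = list(points)
    chain = [remaining.pop(start)]
    while remaining:
        nearest = min(remaining, key=lambda p: math.dist(chain[-1], p))
        remaining.remove(nearest)
        chain.append(nearest)
    return chain


def catmull_rom(p0: Point, p1: Point, p2: Point, p3: Point, t: float) -> Point:
    """Uniform Catmull-Rom segment between p1 (t=0) and p2 (t=1)."""
    return tuple(
        0.5 * (2 * b + (c - a) * t
               + (2 * a - 5 * b + 4 * c - d) * t ** 2
               + (-a + 3 * b - 3 * c + d) * t ** 3)
        for a, b, c, d in zip(p0, p1, p2, p3)
    )


def reconstruct(ordered: Sequence[Point], samples_per_segment: int = 20) -> List[Point]:
    """Sample a piecewise spline through the ordered key points."""
    # Duplicate the end points so the first and last segments have four controls.
    padded = [ordered[0]] + list(ordered) + [ordered[-1]]
    curve = [tuple(float(v) for v in ordered[0])]
    for i in range(len(ordered) - 1):
        for k in range(1, samples_per_segment + 1):
            curve.append(catmull_rom(*padded[i:i + 4], k / samples_per_segment))
    return curve


def curve_length(curve: Sequence[Point]) -> float:
    """Approximate arc length as the sum of distances between samples."""
    return sum(math.dist(a, b) for a, b in zip(curve, curve[1:]))


# Four key points on a straight line, detected in scrambled order.
scattered = [(2.0, 0.0, 0.0), (0.0, 0.0, 0.0), (3.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
ordered = order_key_points(scattered, start=1)
curve = reconstruct(ordered)
```

A greedy chain like this can fail when the object folds back on itself, which is presumably the kind of case a probabilistic labeling that uses the known point count and spacing is meant to handle.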

Toward Process Controlled Medical Robotic System

Aug 10, 2023

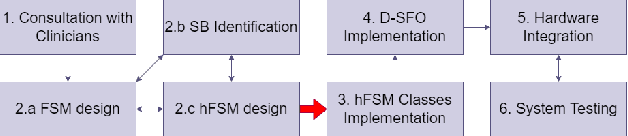

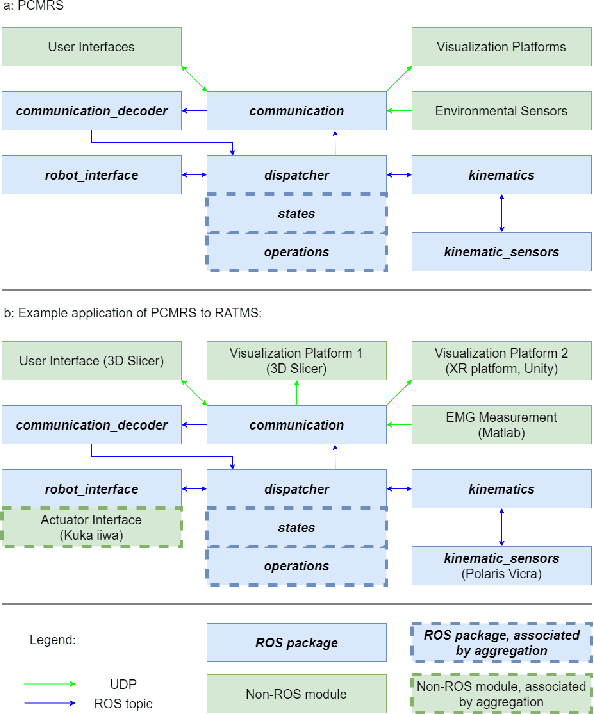

Abstract: Medical errors, defined as unintended acts either of omission or commission that cause the failure of medical actions, are the third leading cause of death in the United States. The application of autonomy and robotics can alleviate some causes of medical errors by improving accuracy and providing means to precisely follow planned procedures. However, for the robotic applications to improve safety, they must maintain constant operating conditions in the presence of disturbances, and provide reliable measurements, evaluation, and control for each state of the procedure. This article addresses the need for process control in medical robotic systems, and proposes a standardized design cycle toward its automation. Monitoring and controlling the changing conditions in a medical or surgical environment necessitates a clear definition of workflows and their procedural dependencies. We propose integrating process control into medical robotic workflows to identify changes in the states of the system and environment, possible operations, and transitions to new states. To this end, the system translates clinician experiences and procedure workflows into machine-interpretable languages. The design cycle using hFSM formulation can be a deterministic process, which opens up possibilities for higher-level automation in medical robotics. As shown in our work, a standardized design cycle and software paradigm pave the way toward controlled workflows that can be automatically generated. Additionally, a modular design for a robotic system architecture that integrates hFSM can provide easy software and hardware integration. This article discusses the system design, software implementation, and example applications to robot-assisted transcranial magnetic stimulation and robot-assisted femoroplasty. We also provide assessments of these two system examples by testing their robotic tool placement.
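
To make the idea of states, permitted operations, and transitions concrete, here is a minimal sketch of a hierarchical state machine, assuming that hFSM in this abstract stands for a hierarchical finite-state machine. It is an illustration only, not the software described in the article; the class names `State` and `HFSM`, and the state and event names such as `planning`, `registered`, and `abort`, are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class State:
    """A node in the hierarchy (all state and event names are illustrative)."""
    name: str
    parent: Optional["State"] = None
    # Maps an event name to the name of the target state.
    transitions: Dict[str, str] = field(default_factory=dict)


class HFSM:
    def __init__(self, states: List[State], initial: str) -> None:
        self.states = {s.name: s for s in states}
        self.current = self.states[initial]

    def dispatch(self, event: str) -> bool:
        """Handle an event in the current state, falling back to its ancestors."""
        node: Optional[State] = self.current
        while node is not None:
            target = node.transitions.get(event)
            if target is not None:
                self.current = self.states[target]
                return True
            node = node.parent
        return False  # event not permitted here; the state is unchanged

    def path(self) -> List[str]:
        """Names from the outermost ancestor down to the current state."""
        names = []
        node: Optional[State] = self.current
        while node is not None:
            names.append(node.name)
            node = node.parent
        return list(reversed(names))


def build_demo() -> HFSM:
    # The parent state handles "abort" once for every step nested inside it.
    procedure = State("procedure", transitions={"abort": "safe_stop"})
    planning = State("planning", procedure, {"plan_ready": "registration"})
    registration = State("registration", procedure, {"registered": "tool_placement"})
    tool_placement = State("tool_placement", procedure, {"placed": "done"})
    done = State("done")
    safe_stop = State("safe_stop")
    return HFSM(
        [procedure, planning, registration, tool_placement, done, safe_stop],
        initial="planning",
    )


machine = build_demo()
```

In this sketch an out-of-order event such as `registered` during `planning` is rejected, while `abort` is accepted from any nested step because the lookup falls back to the parent state. That fallback is the main thing the hierarchy buys over a flat state table.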

SAMM : A 3D Slicer Integration to SAM

Apr 12, 2023

Abstract: The Segment Anything Model (SAM) is a new image segmentation tool trained with the largest segmentation dataset at this time. The model has demonstrated that it can create high-quality masks for image segmentation with good promptability and generalizability. However, the performance of the model on medical images requires further validation. To assist with the development, assessment, and utilization of SAM on medical images, we introduce Segment Any Medical Model (SAMM), an extension of SAM on 3D Slicer, an open-source image processing and visualization software that is widely used in the medical imaging community. This open-source extension to 3D Slicer and its demonstrations are posted on GitHub (https://github.com/bingogome/samm). SAMM achieves a latency of 0.6 seconds for a complete cycle and can infer image masks in nearly real-time.
