Aleksandra Ćiprijanović

Opportunities in AI/ML for the Rubin LSST Dark Energy Science Collaboration

Jan 20, 2026

Abstract: The Vera C. Rubin Observatory's Legacy Survey of Space and Time (LSST) will produce unprecedented volumes of heterogeneous astronomical data (images, catalogs, and alerts) that challenge traditional analysis pipelines. The LSST Dark Energy Science Collaboration (DESC) aims to derive robust constraints on dark energy and dark matter from these data, requiring methods that are statistically powerful, scalable, and operationally reliable. Artificial intelligence and machine learning (AI/ML) are already embedded across DESC science workflows, from photometric redshifts and transient classification to weak lensing inference and cosmological simulations. Yet their utility for precision cosmology hinges on trustworthy uncertainty quantification, robustness to covariate shift and model misspecification, and reproducible integration within scientific pipelines. This white paper surveys the current landscape of AI/ML across DESC's primary cosmological probes and cross-cutting analyses, revealing that the same core methodologies and fundamental challenges recur across disparate science cases. Since progress on these cross-cutting challenges would benefit multiple probes simultaneously, we identify key methodological research priorities, including Bayesian inference at scale, physics-informed methods, validation frameworks, and active learning for discovery. With an eye on emerging techniques, we also explore the potential of the latest foundation model methodologies and LLM-driven agentic AI systems to reshape DESC workflows, provided their deployment is coupled with rigorous evaluation and governance. Finally, we discuss critical software, computing, data infrastructure, and human capital requirements for the successful deployment of these new methodologies, and consider associated risks and opportunities for broader coordination with external actors.

SIDDA: SInkhorn Dynamic Domain Adaptation for Image Classification with Equivariant Neural Networks

Jan 23, 2025

Abstract: Modern neural networks (NNs) often do not generalize well in the presence of a "covariate shift"; that is, in situations where the training and test data distributions differ, but the conditional distribution of classification labels remains unchanged. In such cases, NN generalization can be reduced to a problem of learning more domain-invariant features. Domain adaptation (DA) methods include a range of techniques aimed at achieving this; however, these methods have struggled with the need for extensive hyperparameter tuning, which then incurs significant computational costs. In this work, we introduce SIDDA, an out-of-the-box DA training algorithm built upon the Sinkhorn divergence, that can achieve effective domain alignment with minimal hyperparameter tuning and computational overhead. We demonstrate the efficacy of our method on multiple simulated and real datasets of varying complexity, including simple shapes, handwritten digits, and real astronomical observations. SIDDA is compatible with a variety of NN architectures, and it works particularly well in improving classification accuracy and model calibration when paired with equivariant neural networks (ENNs). We find that SIDDA enhances the generalization capabilities of NNs, achieving up to a $\approx 40\%$ improvement in classification accuracy on unlabeled target data. We also study the efficacy of DA on ENNs with respect to the varying group orders of the dihedral group $D_N$, and find that the model performance improves as the degree of equivariance increases. Finally, we find that SIDDA enhances model calibration on both source and target data, achieving over an order of magnitude improvement in the ECE and Brier score. SIDDA's versatility, combined with its automated approach to domain alignment, has the potential to advance multi-dataset studies by enabling the development of highly generalizable models.
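The two calibration metrics named in this abstract, the expected calibration error (ECE) and the Brier score, can be illustrated with a minimal sketch. It assumes the common textbook definitions (equal-width confidence bins for the ECE, and the multi-class Brier score as the mean squared distance to the one-hot label). The function names and the choice of 10 bins are illustrative and not taken from the SIDDA code.

```python
def brier_score(probs, labels):
    """Multi-class Brier score: mean squared distance between each predicted
    probability vector and the one-hot encoding of the true label."""
    total = 0.0
    for p, y in zip(probs, labels):
        total += sum((pk - (1.0 if k == y else 0.0)) ** 2 for k, pk in enumerate(p))
    return total / len(probs)


def expected_calibration_error(probs, labels, n_bins=10):
    """ECE: group predictions into equal-width confidence bins, then average
    |accuracy - mean confidence| weighted by the fraction of samples per bin."""
    bins = [[] for _ in range(n_bins)]
    for p, y in zip(probs, labels):
        pred = max(range(len(p)), key=lambda k: p[k])  # predicted class
        conf = p[pred]                                 # its probability
        idx = min(int(conf * n_bins), n_bins - 1)      # conf == 1.0 goes in the last bin
        bins[idx].append((conf, 1.0 if pred == y else 0.0))
    ece = 0.0
    for b in bins:
        if b:
            mean_conf = sum(c for c, _ in b) / len(b)
            accuracy = sum(a for _, a in b) / len(b)
            ece += len(b) / len(probs) * abs(accuracy - mean_conf)
    return ece


# An overconfident toy classifier: 90% confident on both samples, right on one.
toy_probs = [[0.9, 0.1], [0.9, 0.1]]
toy_labels = [0, 1]
toy_ece = expected_calibration_error(toy_probs, toy_labels)
toy_brier = brier_score(toy_probs, toy_labels)
```

Lower is better for both metrics, and a perfectly confident, perfectly correct classifier scores zero on each.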

DeepUQ: Assessing the Aleatoric Uncertainties from two Deep Learning Methods

Nov 13, 2024

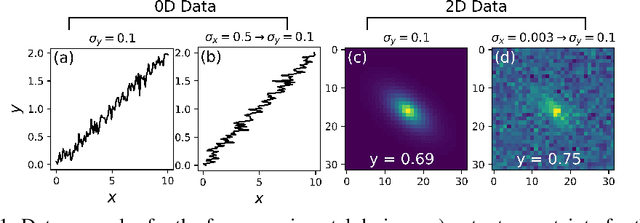

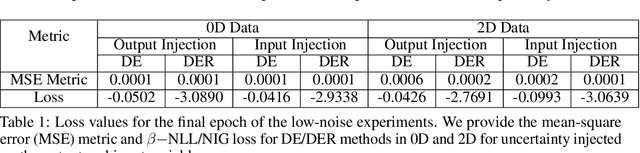

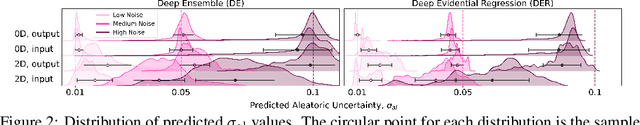

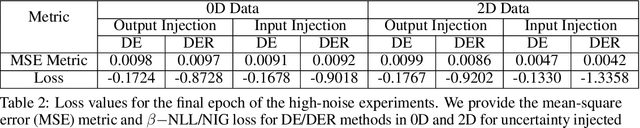

Abstract: Assessing the quality of aleatoric uncertainty estimates from uncertainty quantification (UQ) deep learning methods is important in scientific contexts, where uncertainty is physically meaningful and important to characterize and interpret exactly. We systematically compare aleatoric uncertainty measured by two UQ techniques, Deep Ensembles (DE) and Deep Evidential Regression (DER). Our method focuses on both zero-dimensional (0D) and two-dimensional (2D) data, to explore how the UQ methods function for different data dimensionalities. We investigate uncertainty injected on the input and output variables and include a method to propagate uncertainty in the case of input uncertainty so that we can compare the predicted aleatoric uncertainty to the known values. We experiment with three levels of noise. The aleatoric uncertainty predicted across all models and experiments scales with the injected noise level. However, the predicted uncertainty is miscalibrated to $\mathrm{std}(\sigma_{\rm al})$ with the true uncertainty for half of the DE experiments and almost all of the DER experiments. The predicted uncertainty is the least accurate for both UQ methods for the 2D input uncertainty experiment and the high-noise level. While these results do not apply to more complex data, they highlight that further research on post-facto calibration for these methods would be beneficial, particularly for high-noise and high-dimensional settings.
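As a rough illustration of how a Deep Ensemble yields an aleatoric uncertainty, the sketch below combines per-member Gaussian predictions using the standard mixture decomposition: the aleatoric variance is the average of the predicted variances, and the epistemic variance is the spread of the predicted means. This is an assumption about the usual recipe, not a description of the DeepUQ implementation. The function name is hypothetical.

```python
def combine_ensemble(means, variances):
    """Combine Gaussian predictions from M ensemble members for one input.

    Returns (mean, aleatoric_variance, epistemic_variance), treating the
    ensemble as a uniformly weighted mixture of Gaussians."""
    m = len(means)
    mean = sum(means) / m
    aleatoric = sum(variances) / m                     # average predicted noise
    epistemic = sum((mu - mean) ** 2 for mu in means) / m  # disagreement between members
    return mean, aleatoric, epistemic


# Two members predicting means 1 and 3 with variances 0.5 and 1.5.
combined = combine_ensemble([1.0, 3.0], [0.5, 1.5])
```

Comparing the square root of the aleatoric term against a known injected noise level is one way to check calibration of the kind the abstract discusses.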

Neural Network Prediction of Strong Lensing Systems with Domain Adaptation and Uncertainty Quantification

Oct 23, 2024

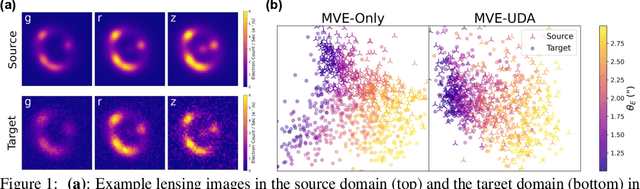

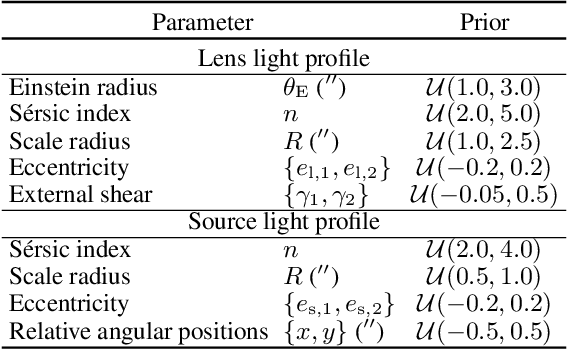

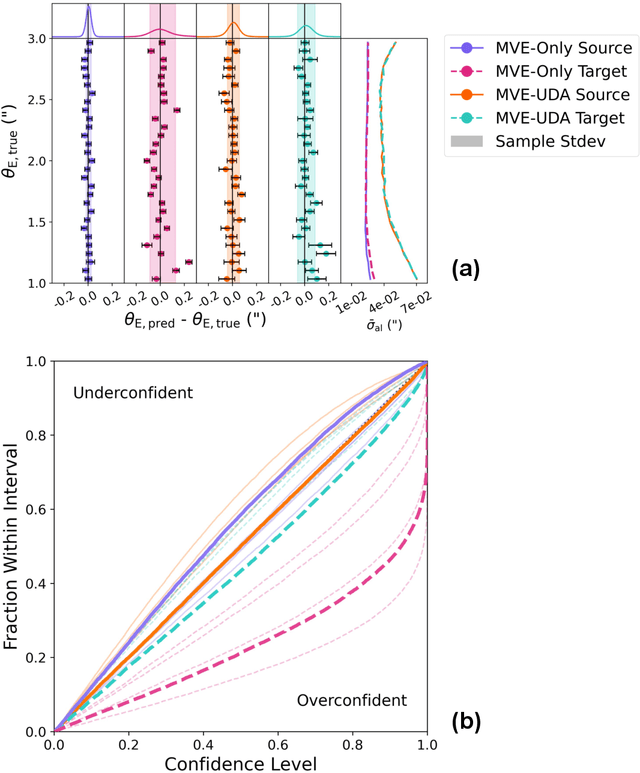

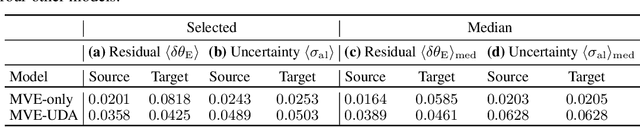

Abstract: Modeling strong gravitational lenses is computationally expensive for the complex data from modern and next-generation cosmic surveys. Deep learning has emerged as a promising approach for finding lenses and predicting lensing parameters, such as the Einstein radius. Mean-variance Estimators (MVEs) are a common approach for obtaining aleatoric (data) uncertainties from a neural network prediction. However, neural networks have not been demonstrated to perform well on out-of-domain target data, e.g., when trained on simulated data and applied to real, observational data. In this work, we perform the first study of the efficacy of MVEs in combination with unsupervised domain adaptation (UDA) on strong lensing data. The source domain data is noiseless, and the target domain data has noise mimicking modern cosmology surveys. We find that adding UDA to MVE increases the accuracy on the target data by a factor of about two over an MVE model without UDA. Including UDA also yields much better-calibrated aleatoric uncertainty predictions. Advancements in this approach may enable future applications of MVE models to real observational data.
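A mean-variance estimator is typically trained by minimizing a Gaussian negative log-likelihood in which the network outputs both a mean and a (log-)variance. The following is a minimal sketch of that loss under this assumption. Predicting the log-variance rather than the variance is a common numerical-stability choice and may differ from what the paper uses. The function names are illustrative.

```python
import math


def gaussian_nll(y_true, mean, log_var):
    """Negative log-likelihood of y_true under N(mean, exp(log_var))."""
    var = math.exp(log_var)
    return 0.5 * (math.log(2.0 * math.pi) + log_var + (y_true - mean) ** 2 / var)


def mve_loss(targets, means, log_vars):
    """Average Gaussian NLL over a batch of (target, mean, log-variance) triples."""
    losses = [gaussian_nll(y, m, s) for y, m, s in zip(targets, means, log_vars)]
    return sum(losses) / len(losses)


# For a fixed residual of 2, the loss is lowest when the predicted variance
# equals the squared residual (4): smaller or larger variances are penalized.
nll_matched = gaussian_nll(2.0, 0.0, math.log(4.0))
nll_overconfident = gaussian_nll(2.0, 0.0, math.log(1.0))
nll_underconfident = gaussian_nll(2.0, 0.0, math.log(16.0))
```

This trade-off between the residual term and the log-variance term is what lets the predicted variance act as an aleatoric uncertainty estimate.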

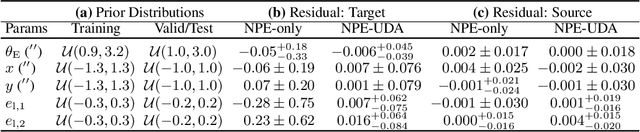

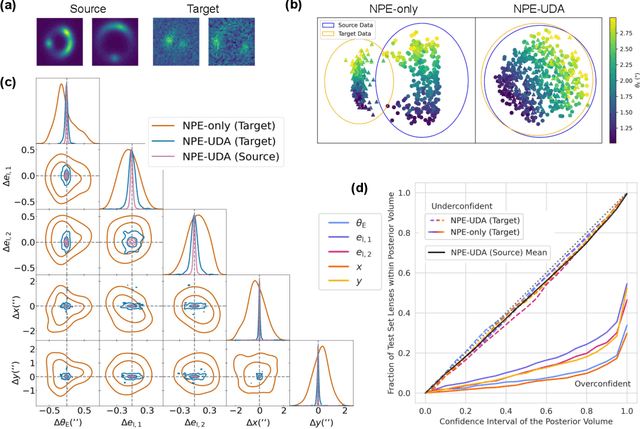

Domain-Adaptive Neural Posterior Estimation for Strong Gravitational Lens Analysis

Oct 21, 2024

Abstract: Modeling strong gravitational lenses is prohibitively expensive for modern and next-generation cosmic survey data. Neural posterior estimation (NPE), a simulation-based inference (SBI) approach, has been studied as an avenue for efficient analysis of strong lensing data. However, NPE has not been demonstrated to perform well on out-of-domain target data, e.g., when trained on simulated data and then applied to real, observational data. In this work, we perform the first study of the efficacy of NPE in combination with unsupervised domain adaptation (UDA). The source domain is noiseless, and the target domain has noise mimicking modern cosmology surveys. We find that combining UDA and NPE improves the accuracy of the inference by 1-2 orders of magnitude and significantly improves the posterior coverage over an NPE model without UDA. We anticipate that this combination of approaches will help enable future applications of NPE models to real observational data.

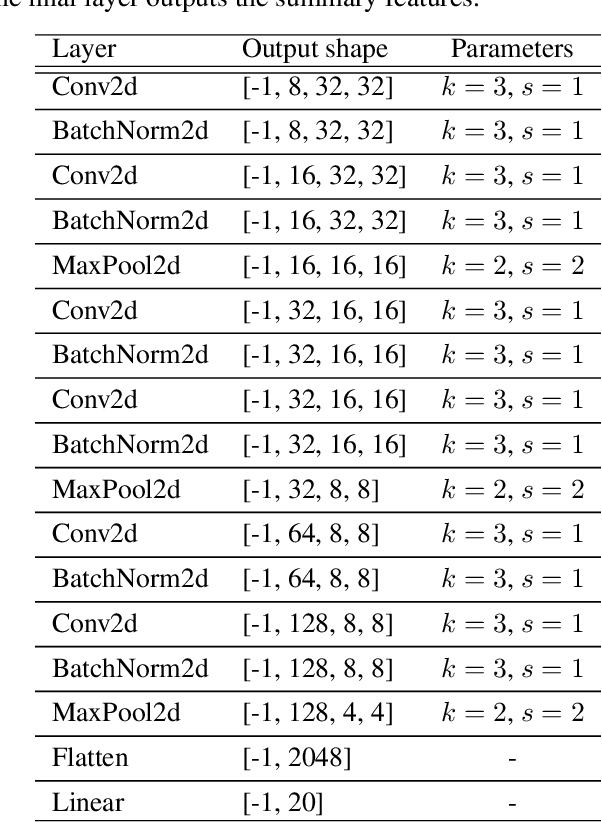

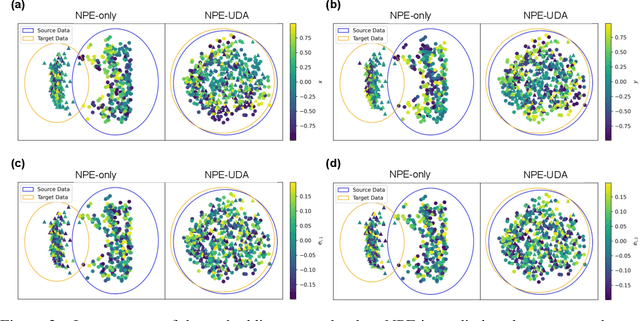

Domain Adaptive Graph Neural Networks for Constraining Cosmological Parameters Across Multiple Data Sets

Nov 02, 2023

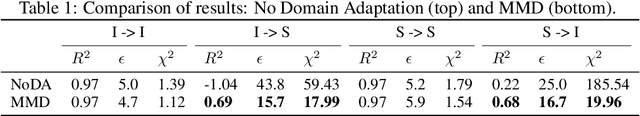

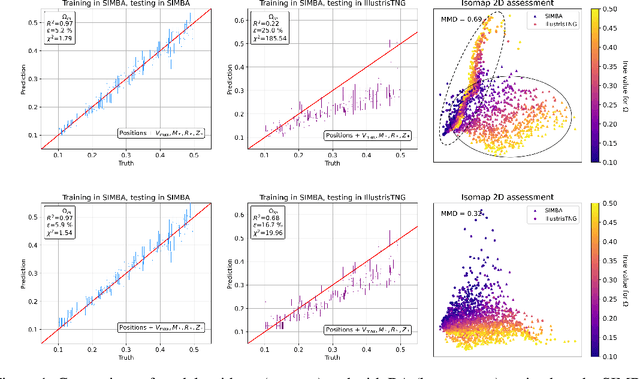

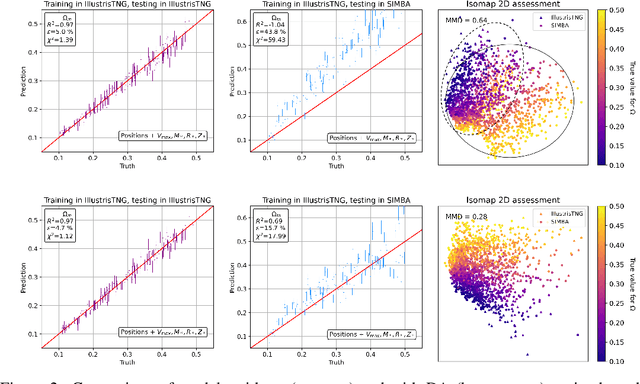

Abstract: Deep learning models have been shown to outperform methods that rely on summary statistics, like the power spectrum, in extracting information from complex cosmological data sets. However, due to differences in the subgrid physics implementation and numerical approximations across different simulation suites, models trained on data from one cosmological simulation show a drop in performance when tested on another. Similarly, models trained on any of the simulations would also likely experience a drop in performance when applied to observational data. Training on data from two different suites of the CAMELS hydrodynamic cosmological simulations, we examine the generalization capabilities of Domain Adaptive Graph Neural Networks (DA-GNNs). By utilizing GNNs, we capitalize on their capacity to capture structured scale-free cosmological information from galaxy distributions. Moreover, by including unsupervised domain adaptation via Maximum Mean Discrepancy (MMD), we enable our models to extract domain-invariant features. We demonstrate that DA-GNN achieves higher accuracy and robustness on cross-dataset tasks (up to $28\%$ better relative error and up to almost an order of magnitude better $\chi^2$). Using data visualizations, we show the effects of domain adaptation on proper latent space data alignment. This shows that DA-GNNs are a promising method for extracting domain-independent cosmological information, a vital step toward robust deep learning for real cosmic survey data.
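The Maximum Mean Discrepancy term mentioned above measures how far apart two sets of latent features are. A minimal sketch of the (biased) squared-MMD estimator with a single Gaussian RBF kernel is shown below. The single fixed bandwidth and the function names are assumptions for illustration; practical implementations often sum over several bandwidths, and the paper's exact kernel choice is not stated in the abstract.

```python
import math


def rbf_kernel(x, y, bandwidth=1.0):
    """Gaussian RBF kernel between two feature vectors."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq_dist / (2.0 * bandwidth ** 2))


def mmd_squared(source, target, bandwidth=1.0):
    """Biased estimate of MMD^2 between two samples of feature vectors:
    E[k(s, s')] + E[k(t, t')] - 2 E[k(s, t)]."""
    def mean_kernel(a_set, b_set):
        total = sum(rbf_kernel(a, b, bandwidth) for a in a_set for b in b_set)
        return total / (len(a_set) * len(b_set))

    return (mean_kernel(source, source)
            + mean_kernel(target, target)
            - 2.0 * mean_kernel(source, target))


# Toy 1D "latent features": identical samples give ~0, shifted samples do not.
features_a = [[0.0], [1.0]]
features_b = [[5.0], [6.0]]
mmd_same = mmd_squared(features_a, features_a)
mmd_shifted = mmd_squared(features_a, features_b)
```

In a domain adaptation setting, a term of this form would be added to the task loss so that training pulls the source and target feature distributions together.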

Neural Inference of Gaussian Processes for Time Series Data of Quasars

Nov 17, 2022

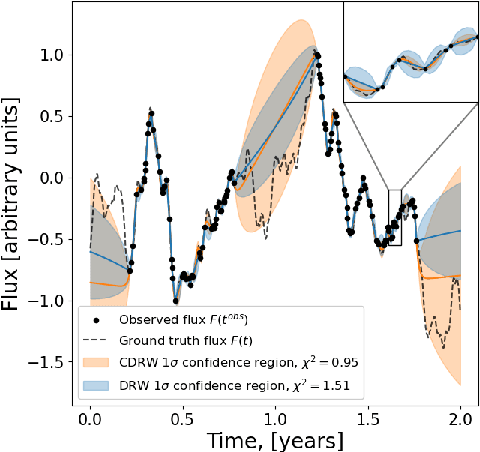

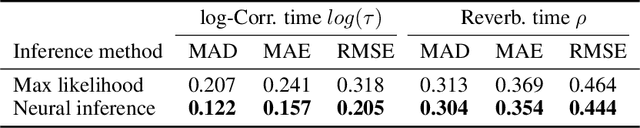

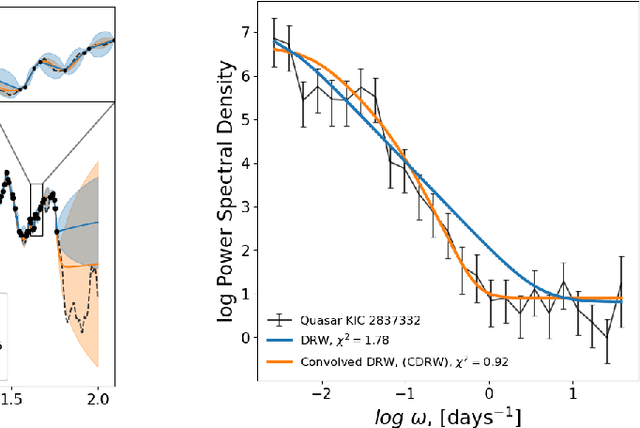

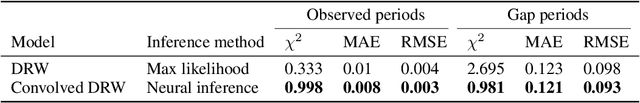

Abstract: The study of quasar light curves poses two problems: inference of the power spectrum and interpolation of an irregularly sampled time series. A baseline approach to these tasks is to interpolate a time series with a Damped Random Walk (DRW) model, in which the spectrum is inferred using Maximum Likelihood Estimation (MLE). However, the DRW model does not describe the smoothness of the time series, and MLE faces many problems in terms of optimization and numerical precision. In this work, we introduce a new stochastic model that we call $\textit{Convolved Damped Random Walk}$ (CDRW). This model introduces a concept of smoothness to a DRW, which enables it to describe quasar spectra completely. We also introduce a new method of inference of Gaussian process parameters, which we call $\textit{Neural Inference}$. This method uses the power of state-of-the-art neural networks to improve the conventional MLE inference technique. In our experiments, the Neural Inference method results in significant improvement over the baseline MLE (RMSE: $0.318 \rightarrow 0.205$, $0.464 \rightarrow 0.444$). Moreover, the combination of both the CDRW model and Neural Inference significantly outperforms the baseline DRW and MLE in interpolating a typical quasar light curve ($\chi^2$: $0.333 \rightarrow 0.998$, $2.695 \rightarrow 0.981$). The code is published on GitHub.
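For context on the baseline, a damped random walk is an Ornstein-Uhlenbeck process, i.e. a Gaussian process with an exponential covariance. The sketch below assumes the common parameterization $k(\Delta t) = \sigma^2 e^{-|\Delta t|/\tau}$ and draws an exact sample on irregularly spaced, increasing times. The function names and parameter values are illustrative. This shows the baseline DRW only, not the CDRW model or the Neural Inference method from the paper.

```python
import math
import random


def drw_covariance(dt, sigma, tau):
    """DRW (Ornstein-Uhlenbeck) covariance between two epochs separated by dt."""
    return sigma ** 2 * math.exp(-abs(dt) / tau)


def simulate_drw(times, sigma, tau, mean=0.0, seed=0):
    """Draw one exact DRW realization at increasing, possibly irregular times.

    Each step conditions on the previous value: the deviation from the mean
    decays by exp(-dt/tau), and fresh Gaussian noise restores the variance."""
    rng = random.Random(seed)
    values = [mean + rng.gauss(0.0, sigma)]  # stationary starting point
    for t_prev, t_next in zip(times, times[1:]):
        decay = math.exp(-(t_next - t_prev) / tau)
        cond_std = sigma * math.sqrt(1.0 - decay ** 2)
        values.append(mean + decay * (values[-1] - mean) + rng.gauss(0.0, cond_std))
    return values


# Irregularly sampled epochs, as in a typical survey cadence.
epochs = [0.0, 1.0, 3.5, 4.0, 10.0]
light_curve = simulate_drw(epochs, sigma=1.0, tau=2.0, seed=42)
```

Because the exponential kernel is not differentiable at zero lag, sample paths like this one are rough on all scales, which is the lack of smoothness the abstract refers to.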

Semi-Supervised Domain Adaptation for Cross-Survey Galaxy Morphology Classification and Anomaly Detection

Nov 11, 2022

Abstract: In the era of big astronomical surveys, our ability to leverage artificial intelligence algorithms simultaneously for multiple datasets will open new avenues for scientific discovery. Unfortunately, simply training a deep neural network on images from one data domain often leads to very poor performance on any other dataset. Here we develop a Universal Domain Adaptation method, DeepAstroUDA, capable of performing semi-supervised domain alignment that can be applied to datasets with different types of class overlap. Extra classes can be present in either of the two datasets, and the method can even be used in the presence of unknown classes. For the first time, we demonstrate the successful use of domain adaptation on two very different observational datasets (from SDSS and DECaLS). We show that our method is capable of bridging the gap between two astronomical surveys, and also performs well for anomaly detection and clustering of unknown data in the unlabeled dataset. We apply our model to two examples of galaxy morphology classification tasks with anomaly detection: 1) classifying spiral and elliptical galaxies with detection of merging galaxies (three classes including one unknown anomaly class); 2) a more granular problem where the classes describe more detailed morphological properties of galaxies, with the detection of gravitational lenses (ten classes including one unknown anomaly class).

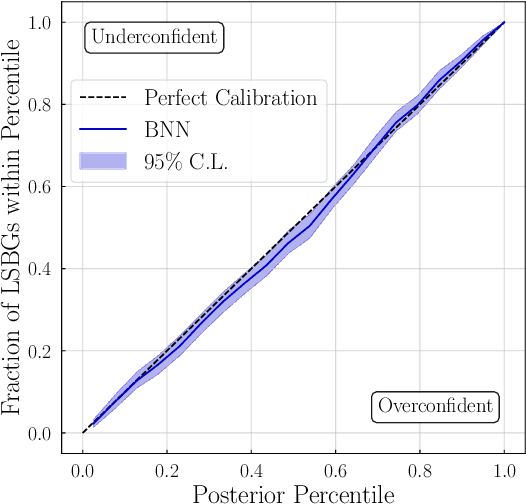

Inferring Structural Parameters of Low-Surface-Brightness-Galaxies with Uncertainty Quantification using Bayesian Neural Networks

Jul 07, 2022

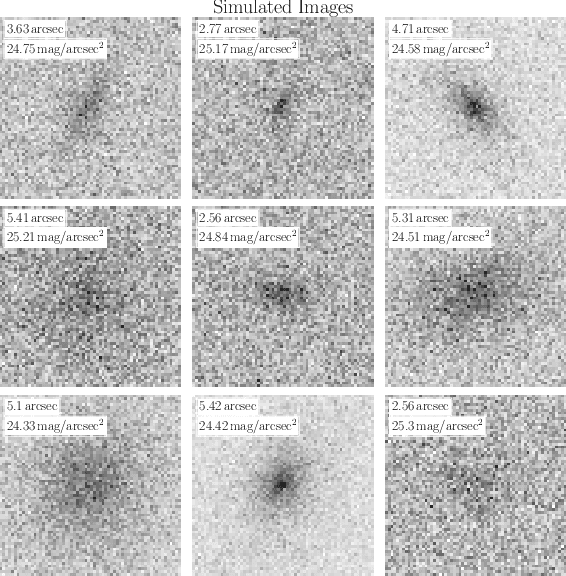

Abstract: Measuring the structural parameters (size, total brightness, light concentration, etc.) of galaxies is a significant first step towards a quantitative description of different galaxy populations. In this work, we demonstrate that a Bayesian Neural Network (BNN) can be used for the inference, with uncertainty quantification, of such morphological parameters from simulated low-surface-brightness galaxy images. Compared to traditional profile-fitting methods, we show that the uncertainties obtained using BNNs are comparable in magnitude and well-calibrated, and that the point estimates of the parameters are closer to the true values. Our method is also significantly faster, which is very important with the advent of the era of large galaxy surveys and big data in astrophysics.

Machine Learning and Cosmology

Mar 15, 2022

Abstract: Methods based on machine learning have recently made substantial inroads in many corners of cosmology. Through this process, new computational tools, new perspectives on data collection, model development, analysis, and discovery, as well as new communities and educational pathways have emerged. Despite rapid progress, substantial potential at the intersection of cosmology and machine learning remains untapped. In this white paper, we summarize current and ongoing developments relating to the application of machine learning within cosmology and provide a set of recommendations aimed at maximizing the scientific impact of these burgeoning tools over the coming decade through both technical development as well as the fostering of emerging communities.
