Gautham Narayan

Opportunities in AI/ML for the Rubin LSST Dark Energy Science Collaboration

Jan 20, 2026

Abstract:The Vera C. Rubin Observatory's Legacy Survey of Space and Time (LSST) will produce unprecedented volumes of heterogeneous astronomical data (images, catalogs, and alerts) that challenge traditional analysis pipelines. The LSST Dark Energy Science Collaboration (DESC) aims to derive robust constraints on dark energy and dark matter from these data, requiring methods that are statistically powerful, scalable, and operationally reliable. Artificial intelligence and machine learning (AI/ML) are already embedded across DESC science workflows, from photometric redshifts and transient classification to weak lensing inference and cosmological simulations. Yet their utility for precision cosmology hinges on trustworthy uncertainty quantification, robustness to covariate shift and model misspecification, and reproducible integration within scientific pipelines. This white paper surveys the current landscape of AI/ML across DESC's primary cosmological probes and cross-cutting analyses, revealing that the same core methodologies and fundamental challenges recur across disparate science cases. Since progress on these cross-cutting challenges would benefit multiple probes simultaneously, we identify key methodological research priorities, including Bayesian inference at scale, physics-informed methods, validation frameworks, and active learning for discovery. With an eye on emerging techniques, we also explore the potential of the latest foundation model methodologies and LLM-driven agentic AI systems to reshape DESC workflows, provided their deployment is coupled with rigorous evaluation and governance. Finally, we discuss critical software, computing, data infrastructure, and human capital requirements for the successful deployment of these new methodologies, and consider associated risks and opportunities for broader coordination with external actors.

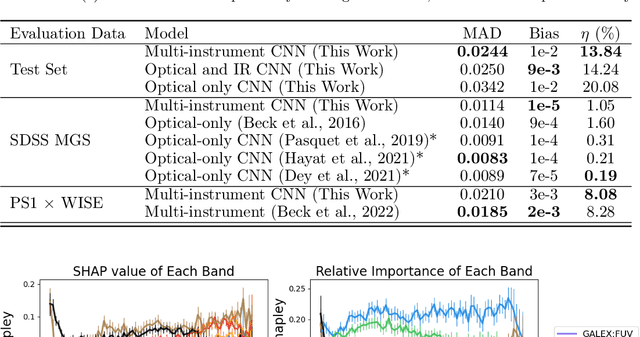

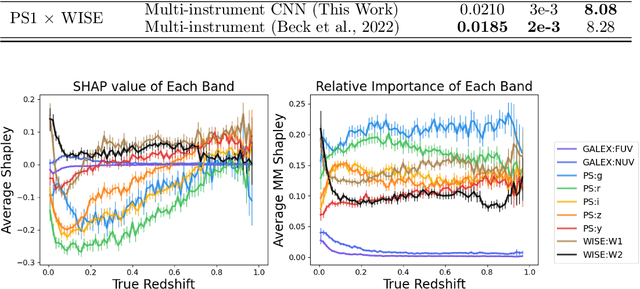

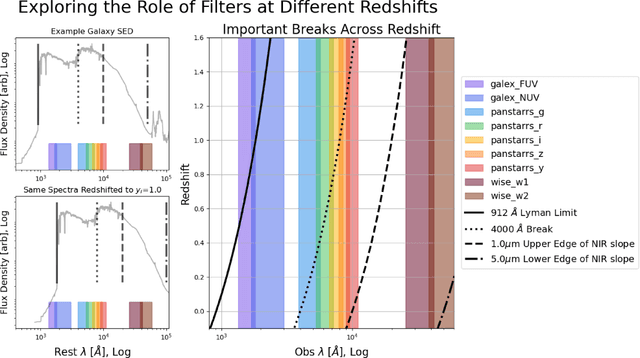

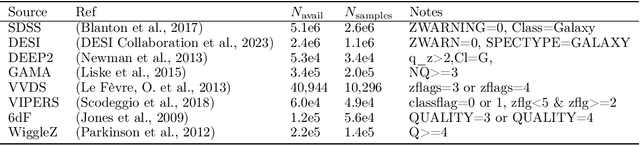

Mantis Shrimp: Exploring Photometric Band Utilization in Computer Vision Networks for Photometric Redshift Estimation

Jan 15, 2025

Abstract:We present Mantis Shrimp, a multi-survey deep learning model for photometric redshift estimation that fuses ultra-violet (GALEX), optical (PanSTARRS), and infrared (UnWISE) imagery. Machine learning is now an established approach for photometric redshift estimation, and is generally acknowledged to outperform template-based methods in regions with a high density of spectroscopically identified galaxies. Multiple works have shown that image-based convolutional neural networks can outperform tabular-based color/magnitude models. In comparison to tabular models, image models have additional design complexities: it is largely unknown how to fuse inputs from different instruments which have different resolutions or noise properties. The Mantis Shrimp model estimates the conditional density of redshift using cutout images. The density estimates are well calibrated and the point estimates perform well in the distribution of available spectroscopically confirmed galaxies, with bias (1e-2), scatter (NMAD = 2.44e-2), and catastrophic outlier rate ($\eta$ = 17.53$\%$). We find that early fusion approaches (e.g., resampling and stacking images from different instruments) match the performance of late fusion approaches (e.g., concatenating latent space representations), so that the design choice ultimately is left to the user. Finally, we study how the models learn to use information across bands, finding evidence that our models successfully incorporate information from all surveys. The applicability of our model to the analysis of large populations of galaxies is limited by the speed of downloading cutouts from external servers; however, our model could be useful in smaller studies such as generating priors over redshift for stellar population synthesis.
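The three point-estimate metrics quoted in this abstract (bias, NMAD scatter, and catastrophic outlier rate) are commonly computed from the normalized residual (z_phot - z_spec) / (1 + z_spec). The sketch below uses those common definitions; the function name `photoz_metrics`, the use of the mean for the bias, and the 0.15 outlier threshold are assumptions for illustration, since the abstract does not state the exact conventions the paper uses.

```python
import numpy as np

def photoz_metrics(z_phot, z_spec, outlier_threshold=0.15):
    """Common photo-z point-estimate metrics (conventions assumed, see lead-in)."""
    z_phot = np.asarray(z_phot, dtype=float)
    z_spec = np.asarray(z_spec, dtype=float)

    # Normalized residual: redshift error scaled by (1 + z_spec).
    dz = (z_phot - z_spec) / (1.0 + z_spec)

    # Bias: mean normalized residual (some works use the median instead).
    bias = float(np.mean(dz))

    # NMAD: 1.4826 * median absolute deviation, a robust scatter estimate
    # that matches the standard deviation for Gaussian residuals.
    nmad = float(1.4826 * np.median(np.abs(dz - np.median(dz))))

    # Catastrophic outlier rate: fraction of objects whose absolute
    # normalized residual exceeds the (assumed) threshold.
    outlier_rate = float(np.mean(np.abs(dz) > outlier_threshold))

    return {"bias": bias, "nmad": nmad, "outlier_rate": outlier_rate}


if __name__ == "__main__":
    # Toy values, not data from the paper.
    z_spec = [0.0, 0.0, 0.0, 0.0]
    z_phot = [0.0, 0.1, -0.1, 0.5]
    print(photoz_metrics(z_phot, z_spec))
```

Because the outlier rate depends directly on the chosen threshold, values from different papers are only comparable when the threshold and residual definition match.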

ORACLE: A Real-Time, Hierarchical, Deep-Learning Photometric Classifier for the LSST

Jan 02, 2025

Abstract:We present ORACLE, the first hierarchical deep-learning model for real-time, context-aware classification of transient and variable astrophysical phenomena. ORACLE is a recurrent neural network with Gated Recurrent Units (GRUs), and has been trained using a custom hierarchical cross-entropy loss function to provide high-confidence classifications along an observationally-driven taxonomy with as little as a single photometric observation. Contextual information for each object, including host galaxy photometric redshift, offset, ellipticity and brightness, is concatenated to the light curve embedding and used to make a final prediction. Training on $\sim$0.5M events from the Extended LSST Astronomical Time-Series Classification Challenge, we achieve a top-level (Transient vs Variable) macro-averaged precision of 0.96 using only 1 day of photometric observations after the first detection in addition to contextual information, for each event; this increases to $>$0.99 once 64 days of the light curve has been obtained, and 0.83 at 1024 days after first detection for 19-way classification (including supernova sub-types, active galactic nuclei, variable stars, microlensing events, and kilonovae). We also compare ORACLE with other state-of-the-art classifiers and report comparable performance for the 19-way classification task, in addition to delivering accurate top-level classifications much earlier. The code and model weights used in this work are publicly available at our associated GitHub repository (https://github.com/uiucsn/ELAsTiCC-Classification).
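For readers unfamiliar with the headline metric, macro-averaged precision is the unweighted mean of the per-class precisions, so rare classes count as much as common ones. The following is a minimal sketch of that standard definition, not code from the ORACLE repository; the function name `macro_precision` and the convention of scoring a never-predicted class as 0.0 are assumptions for illustration.

```python
from collections import Counter

def macro_precision(y_true, y_pred):
    """Unweighted mean of per-class precision over all classes seen."""
    classes = sorted(set(y_true) | set(y_pred))
    true_positives = Counter()
    predicted = Counter()

    for truth, pred in zip(y_true, y_pred):
        predicted[pred] += 1
        if truth == pred:
            true_positives[pred] += 1

    # Precision for class c = TP_c / (number of times c was predicted).
    # Assumed convention: a class that is never predicted scores 0.0.
    per_class = [
        true_positives[c] / predicted[c] if predicted[c] else 0.0
        for c in classes
    ]
    return sum(per_class) / len(per_class)


if __name__ == "__main__":
    # Toy labels for a two-way (Transient vs Variable) split.
    y_true = ["Transient", "Transient", "Transient", "Variable"]
    y_pred = ["Transient", "Transient", "Variable", "Variable"]
    print(macro_precision(y_true, y_pred))
```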

Preliminary Report on Mantis Shrimp: a Multi-Survey Computer Vision Photometric Redshift Model

Feb 05, 2024

Abstract:The availability of large, public, multi-modal astronomical datasets presents an opportunity to execute novel research that straddles the line between science of AI and science of astronomy. Photometric redshift estimation is a well-established subfield of astronomy. Prior works show that computer vision models typically outperform catalog-based models, but these models face additional complexities when incorporating images from more than one instrument or sensor. In this report, we detail our progress creating Mantis Shrimp, a multi-survey computer vision model for photometric redshift estimation that fuses ultra-violet (GALEX), optical (PanSTARRS), and infrared (UnWISE) imagery. We use deep learning interpretability diagnostics to measure how the model leverages information from the different inputs. We reason about the behavior of the CNNs from the interpretability metrics, specifically framing the result in terms of physically-grounded knowledge of galaxy properties.

Machine Learning and Cosmology

Mar 15, 2022

Abstract:Methods based on machine learning have recently made substantial inroads in many corners of cosmology. Through this process, new computational tools, new perspectives on data collection, model development, analysis, and discovery, as well as new communities and educational pathways have emerged. Despite rapid progress, substantial potential at the intersection of cosmology and machine learning remains untapped. In this white paper, we summarize current and ongoing developments relating to the application of machine learning within cosmology and provide a set of recommendations aimed at maximizing the scientific impact of these burgeoning tools over the coming decade through both technical development as well as the fostering of emerging communities.

Real-time Detection of Anomalies in Multivariate Time Series of Astronomical Data

Dec 15, 2021

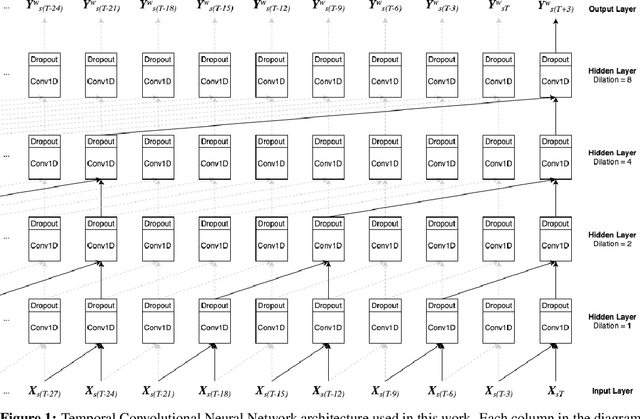

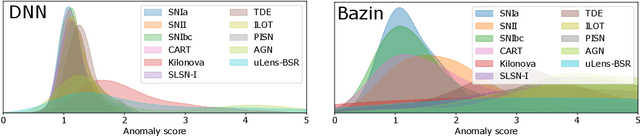

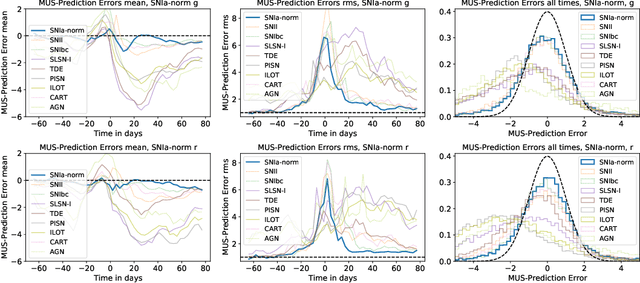

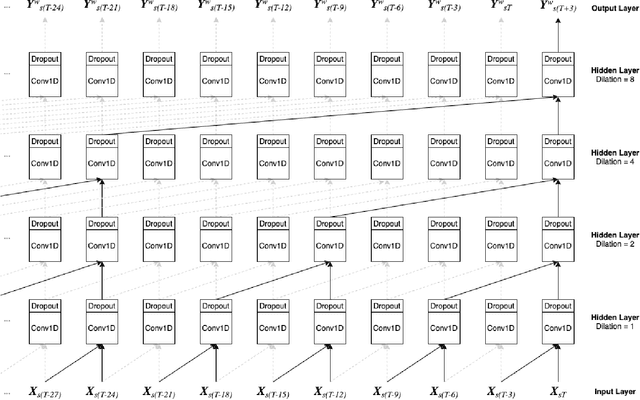

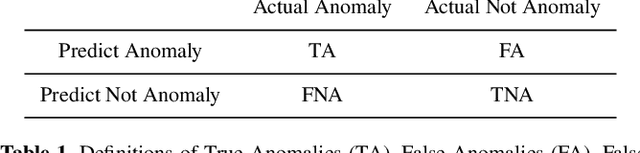

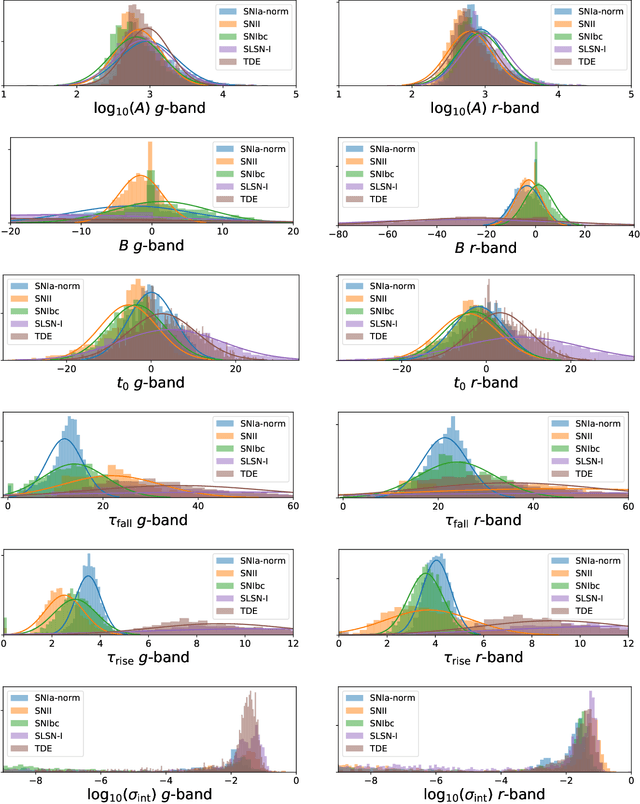

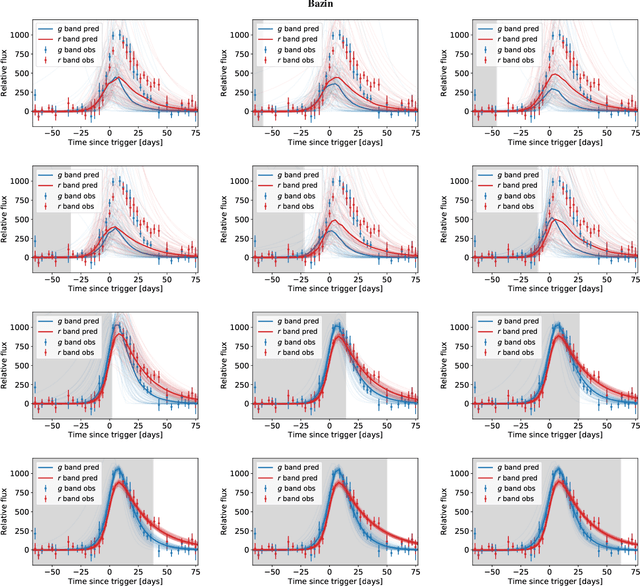

Abstract:Astronomical transients are stellar objects that become temporarily brighter on various timescales and have led to some of the most significant discoveries in cosmology and astronomy. Some of these transients are the explosive deaths of stars known as supernovae while others are rare, exotic, or entirely new kinds of exciting stellar explosions. New astronomical sky surveys are observing unprecedented numbers of multi-wavelength transients, making standard approaches of visually identifying new and interesting transients infeasible. To meet this demand, we present two novel methods that aim to quickly and automatically detect anomalous transient light curves in real-time. Both methods are based on the simple idea that if the light curves from a known population of transients can be accurately modelled, any deviations from model predictions are likely anomalies. The first approach is a probabilistic neural network built using Temporal Convolutional Networks (TCNs) and the second is an interpretable Bayesian parametric model of a transient. We show that the flexibility of neural networks, the attribute that makes them such a powerful tool for many regression tasks, is what makes them less suitable for anomaly detection when compared with our parametric model.

Real-time detection of anomalies in large-scale transient surveys

Oct 29, 2021

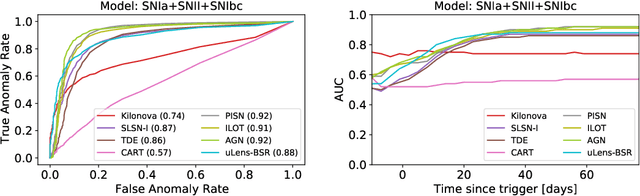

Abstract:New time-domain surveys, such as the Rubin Observatory Legacy Survey of Space and Time (LSST), will observe millions of transient alerts each night, making standard approaches of visually identifying new and interesting transients infeasible. We present two novel methods of automatically detecting anomalous transient light curves in real-time. Both methods are based on the simple idea that if the light curves from a known population of transients can be accurately modelled, any deviations from model predictions are likely anomalies. The first modelling approach is a probabilistic neural network built using Temporal Convolutional Networks (TCNs) and the second is an interpretable Bayesian parametric model of a transient. We demonstrate our methods' ability to provide anomaly scores as a function of time on light curves from the Zwicky Transient Facility. We show that the flexibility of neural networks, the attribute that makes them such a powerful tool for many regression tasks, is what makes them less suitable for anomaly detection when compared with our parametric model. The parametric model is able to identify anomalies with respect to common supernova classes with low false anomaly rates and high true anomaly rates, achieving Area Under the Receiver Operating Characteristic (ROC) Curve (AUC) scores above 0.8 for most rare classes such as kilonovae, tidal disruption events, intermediate luminosity transients, and pair-instability supernovae. Our ability to identify anomalies improves over the lifetime of the light curves. Our framework, used in conjunction with transient classifiers, will enable fast and prioritised follow-up of unusual transients from new large-scale surveys.
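The core idea in this abstract, scoring a light curve by how far its observations deviate from a model's predictions, can be illustrated with a running chi-squared-style statistic. This is only a sketch of the general idea under stated assumptions: the function name `running_anomaly_score`, its arguments, and the choice of a cumulative mean of squared standardized residuals are hypothetical and are not taken from the paper, whose exact anomaly score is not given in the abstract.

```python
import numpy as np

def running_anomaly_score(flux_obs, flux_pred, sigma_obs, sigma_pred=None):
    """Anomaly score after each observation (illustrative definition).

    The score after the n-th observation is the mean of the squared
    residuals between observed and model-predicted flux, each scaled
    by the combined observational and predictive variance.
    """
    flux_obs = np.asarray(flux_obs, dtype=float)
    flux_pred = np.asarray(flux_pred, dtype=float)
    sigma_obs = np.asarray(sigma_obs, dtype=float)
    if sigma_pred is None:
        # Assume a model with no predictive uncertainty.
        sigma_pred = np.zeros_like(flux_obs)
    else:
        sigma_pred = np.asarray(sigma_pred, dtype=float)

    variance = sigma_obs**2 + sigma_pred**2
    chi2 = (flux_obs - flux_pred) ** 2 / variance

    # Cumulative mean, so the score is defined at every epoch and can be
    # tracked as the light curve grows.
    n_obs = np.arange(1, chi2.size + 1)
    return np.cumsum(chi2) / n_obs


if __name__ == "__main__":
    # Toy light curve: the third point departs from the model prediction.
    scores = running_anomaly_score(
        flux_obs=[1.0, 2.0, 5.0],
        flux_pred=[1.0, 2.0, 3.0],
        sigma_obs=[1.0, 1.0, 1.0],
    )
    print(scores)
```

A score near 1 indicates residuals consistent with the quoted uncertainties, while persistently larger values flag light curves the model of the known population cannot explain.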

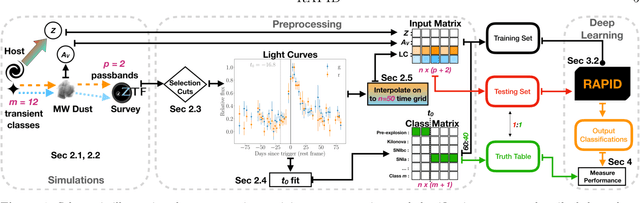

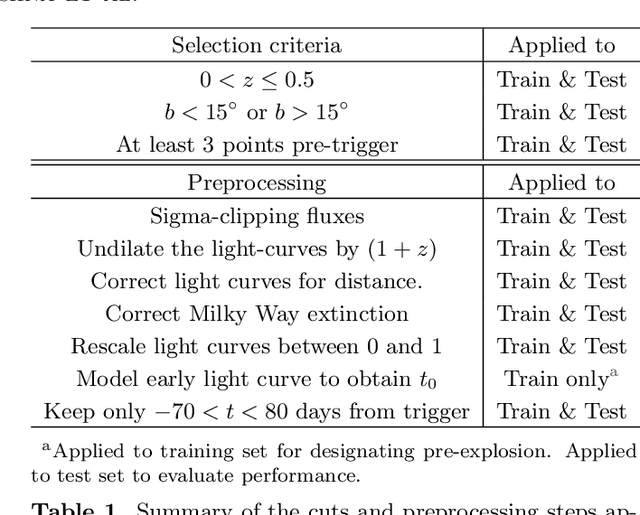

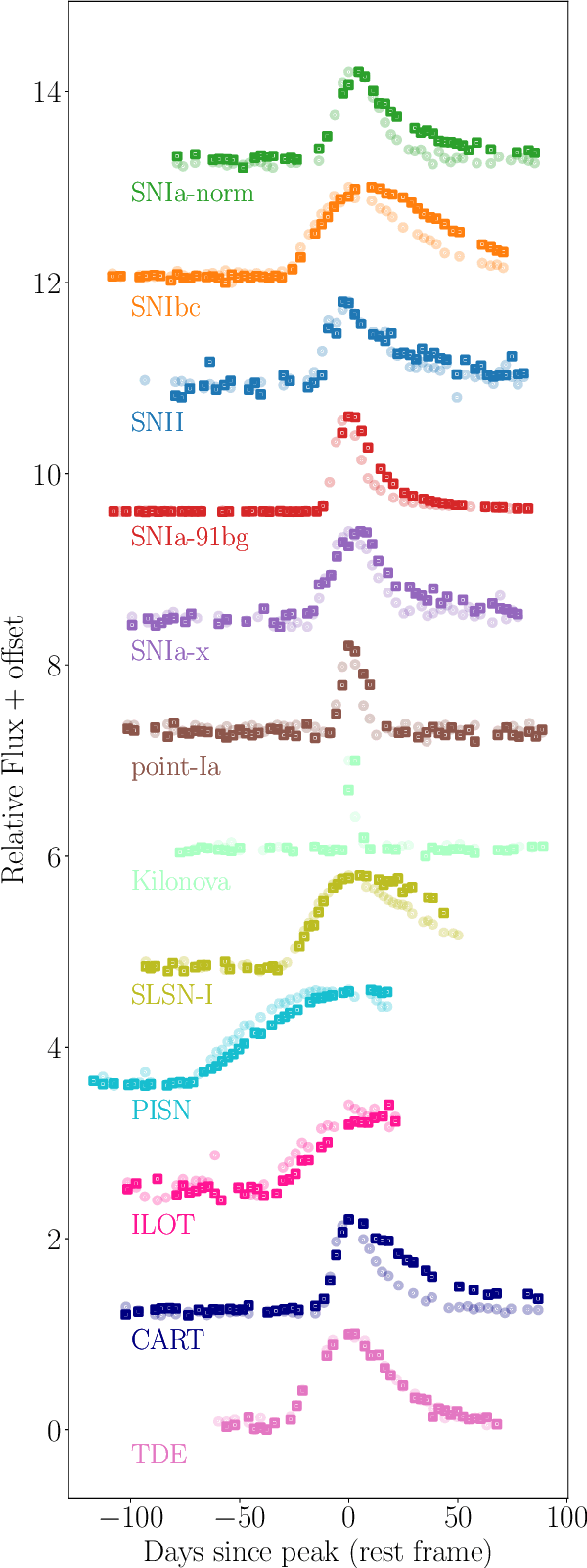

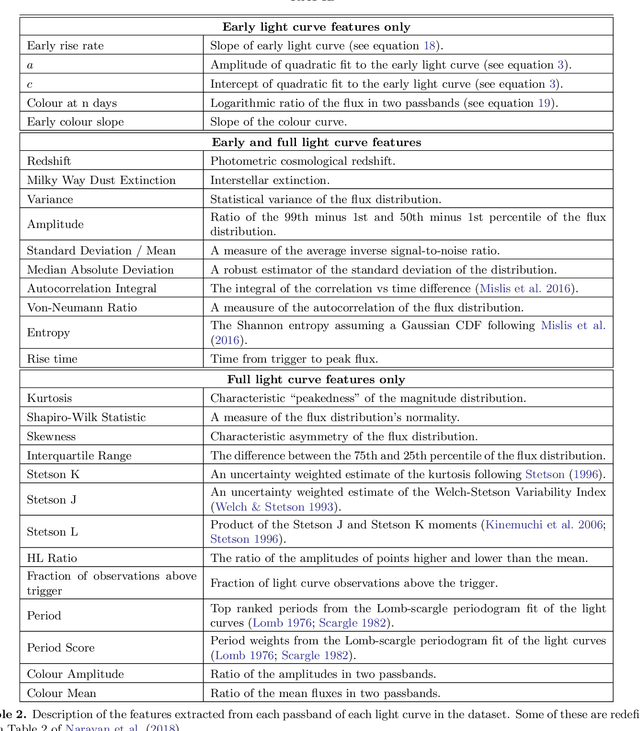

RAPID: Early Classification of Explosive Transients using Deep Learning

Mar 29, 2019

Abstract:We present RAPID (Real-time Automated Photometric IDentification), a novel time-series classification tool capable of automatically identifying transients from within a day of the initial alert, to the full lifetime of a light curve. Using a deep recurrent neural network with Gated Recurrent Units (GRUs), we present the first method specifically designed to provide early classifications of astronomical time-series data, typing 12 different transient classes. Our classifier can process light curves with any phase coverage, and it does not rely on deriving computationally expensive features from the data, making RAPID well-suited for processing the millions of alerts that ongoing and upcoming wide-field surveys such as the Zwicky Transient Facility (ZTF), and the Large Synoptic Survey Telescope (LSST) will produce. The classification accuracy improves over the lifetime of the transient as more photometric data becomes available, and across the 12 transient classes, we obtain an average area under the receiver operating characteristic curve of 0.95 and 0.98 at early and late epochs, respectively. We demonstrate RAPID's ability to effectively provide early classifications of transients from the ZTF data stream. We have made RAPID available as an open-source software package (https://astrorapid.readthedocs.io) for machine learning-based alert-brokers to use for the autonomous and quick classification of several thousand light curves within a few seconds.
