Michelle Lochner

Opportunities in AI/ML for the Rubin LSST Dark Energy Science Collaboration

Jan 20, 2026

Abstract:The Vera C. Rubin Observatory's Legacy Survey of Space and Time (LSST) will produce unprecedented volumes of heterogeneous astronomical data (images, catalogs, and alerts) that challenge traditional analysis pipelines. The LSST Dark Energy Science Collaboration (DESC) aims to derive robust constraints on dark energy and dark matter from these data, requiring methods that are statistically powerful, scalable, and operationally reliable. Artificial intelligence and machine learning (AI/ML) are already embedded across DESC science workflows, from photometric redshifts and transient classification to weak lensing inference and cosmological simulations. Yet their utility for precision cosmology hinges on trustworthy uncertainty quantification, robustness to covariate shift and model misspecification, and reproducible integration within scientific pipelines. This white paper surveys the current landscape of AI/ML across DESC's primary cosmological probes and cross-cutting analyses, revealing that the same core methodologies and fundamental challenges recur across disparate science cases. Since progress on these cross-cutting challenges would benefit multiple probes simultaneously, we identify key methodological research priorities, including Bayesian inference at scale, physics-informed methods, validation frameworks, and active learning for discovery. With an eye on emerging techniques, we also explore the potential of the latest foundation model methodologies and LLM-driven agentic AI systems to reshape DESC workflows, provided their deployment is coupled with rigorous evaluation and governance. Finally, we discuss critical software, computing, data infrastructure, and human capital requirements for the successful deployment of these new methodologies, and consider associated risks and opportunities for broader coordination with external actors.

A Classifier-Based Approach to Multi-Class Anomaly Detection for Astronomical Transients

Mar 21, 2024

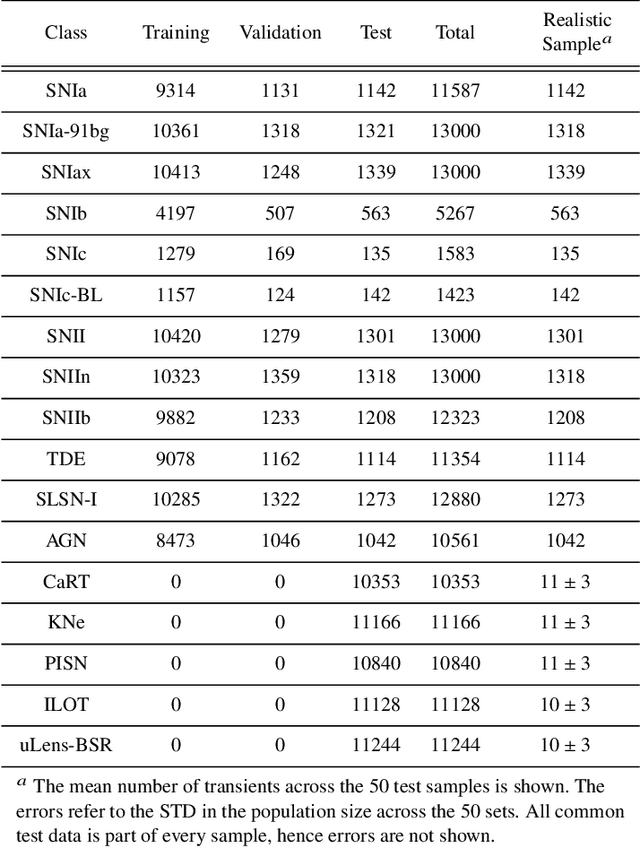

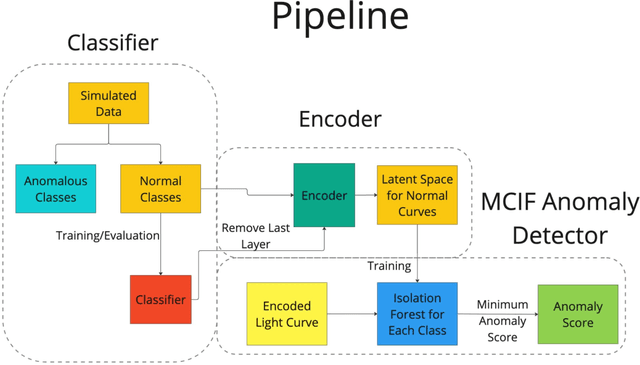

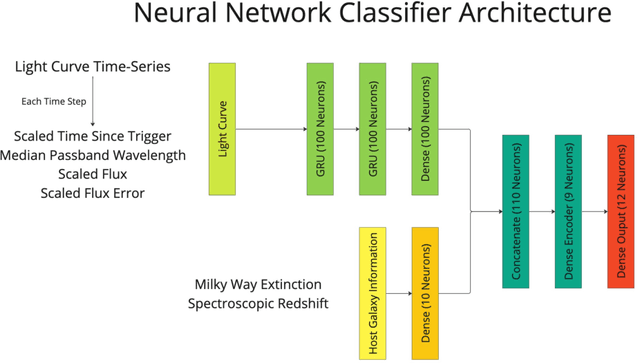

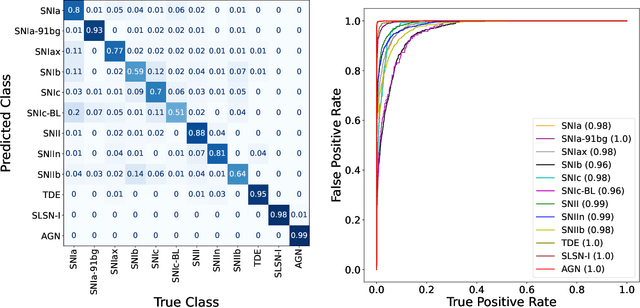

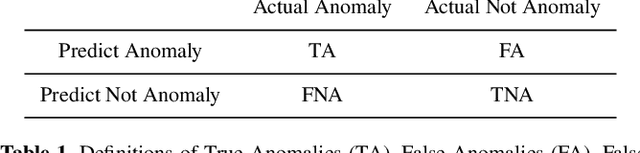

Abstract:Automating real-time anomaly detection is essential for identifying rare transients in the era of large-scale astronomical surveys. Modern survey telescopes are generating tens of thousands of alerts per night, and future telescopes, such as the Vera C. Rubin Observatory, are projected to increase this number dramatically. Currently, most anomaly detection algorithms for astronomical transients rely either on hand-crafted features extracted from light curves or on features generated through unsupervised representation learning, which are then coupled with standard machine learning anomaly detection algorithms. In this work, we introduce an alternative approach to detecting anomalies: using the penultimate layer of a neural network classifier as the latent space for anomaly detection. We then propose a novel method, named Multi-Class Isolation Forests (MCIF), which trains separate isolation forests for each class to derive an anomaly score for a light curve from the latent space representation given by the classifier. This approach significantly outperforms a standard isolation forest. We also use a simpler input method for real-time transient classifiers which circumvents the need for interpolation in light curves and helps the neural network model inter-passband relationships and handle irregular sampling. Our anomaly detection pipeline identifies rare classes including kilonovae, pair-instability supernovae, and intermediate luminosity transients shortly after trigger on simulated Zwicky Transient Facility light curves. Using a sample of our simulations that matched the population of anomalies expected in nature (54 anomalies and 12,040 common transients), our method was able to discover $41\pm3$ anomalies (~75% recall) after following up the top 2000 (~15%) ranked transients. Our novel method shows that classifiers can be effectively repurposed for real-time anomaly detection.
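The per-class idea in this abstract can be illustrated with a minimal, standard-library sketch. It is not the authors' implementation: the tiny isolation forest, the two-dimensional stand-in for the classifier's penultimate-layer features, the class names, and in particular the rule of taking the minimum score across classes are all assumptions made for illustration.

```python
import math
import random

EULER_GAMMA = 0.5772156649


def average_path_length(n):
    """Expected path length of an unsuccessful binary-search-tree lookup over n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2.0 * (math.log(n - 1) + EULER_GAMMA) - 2.0 * (n - 1) / n


def build_tree(points, depth, max_depth, rng):
    """Recursively split on a random feature at a random threshold."""
    if depth >= max_depth or len(points) <= 1:
        return ("leaf", len(points))
    dim = rng.randrange(len(points[0]))
    lo = min(p[dim] for p in points)
    hi = max(p[dim] for p in points)
    if lo == hi:
        return ("leaf", len(points))
    split = rng.uniform(lo, hi)
    left = [p for p in points if p[dim] < split]
    right = [p for p in points if p[dim] >= split]
    return ("node", dim, split,
            build_tree(left, depth + 1, max_depth, rng),
            build_tree(right, depth + 1, max_depth, rng))


def path_length(tree, x, depth=0):
    """Depth at which x lands in a leaf, plus a correction for unsplit points."""
    if tree[0] == "leaf":
        return depth + average_path_length(tree[1])
    _, dim, split, left, right = tree
    return path_length(left if x[dim] < split else right, x, depth + 1)


class SimpleIsolationForest:
    """Toy isolation forest; expects at least two training points."""

    def __init__(self, points, n_trees=100, sample_size=64, seed=0):
        rng = random.Random(seed)
        self.sample_size = min(sample_size, len(points))
        max_depth = math.ceil(math.log2(max(self.sample_size, 2)))
        self.trees = [
            build_tree(rng.sample(points, self.sample_size), 0, max_depth, rng)
            for _ in range(n_trees)
        ]

    def score(self, x):
        """Score in (0, 1); higher means easier to isolate, i.e. more anomalous."""
        mean_depth = sum(path_length(t, x) for t in self.trees) / len(self.trees)
        return 2.0 ** (-mean_depth / average_path_length(self.sample_size))


def fit_mcif(latents, labels, **kwargs):
    """Train one forest per class on that class's latent vectors."""
    by_class = {}
    for z, y in zip(latents, labels):
        by_class.setdefault(y, []).append(z)
    return {y: SimpleIsolationForest(pts, **kwargs) for y, pts in by_class.items()}


def mcif_score(forests, z):
    # ASSUMPTION: an object counts as anomalous only if it looks anomalous
    # to every class-specific forest, so the per-class scores are combined
    # with a minimum. The abstract does not state the combination rule.
    return min(forest.score(z) for forest in forests.values())


# Hypothetical 2-D "latent space" with two well-separated common classes.
rng = random.Random(42)
latents, labels = [], []
for label, (cx, cy) in {"SNIa": (0.0, 0.0), "SNII": (5.0, 0.0)}.items():
    for _ in range(200):
        latents.append((rng.gauss(cx, 0.3), rng.gauss(cy, 0.3)))
        labels.append(label)

forests = fit_mcif(latents, labels)
typical_score = mcif_score(forests, (0.0, 0.0))    # centre of a known class
outlier_score = mcif_score(forests, (10.0, 10.0))  # far from every class
```

In this toy setup a point far from every class cluster should receive a higher combined score than a point at the centre of a known class, which a single forest trained on all classes pooled together does not guarantee for points lying between clusters.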

Real-time Detection of Anomalies in Multivariate Time Series of Astronomical Data

Dec 15, 2021

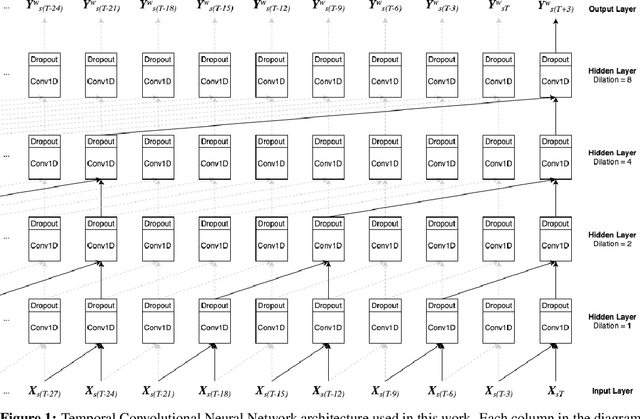

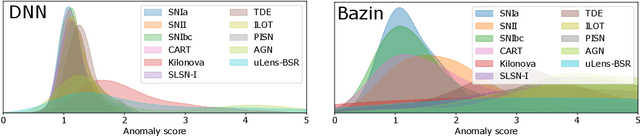

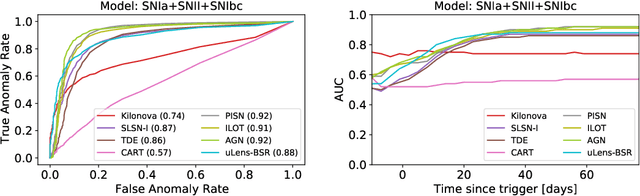

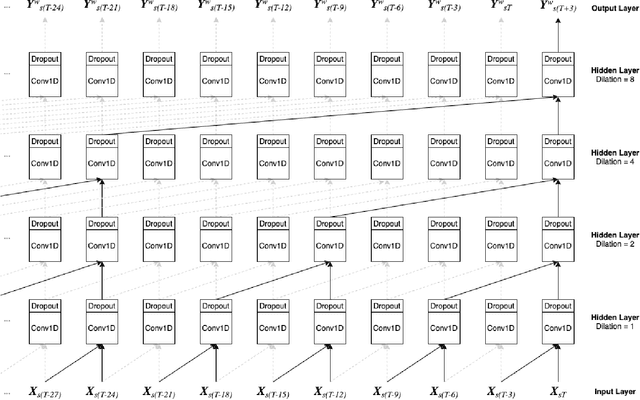

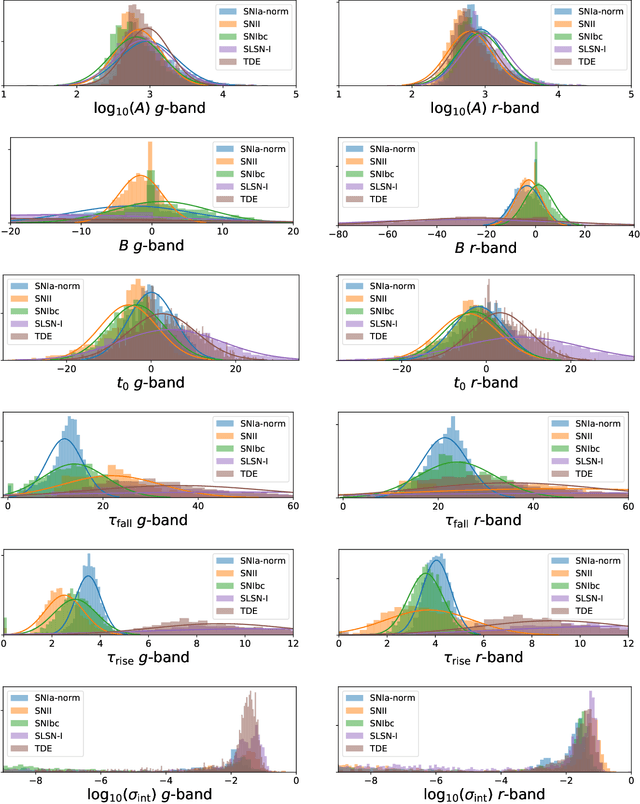

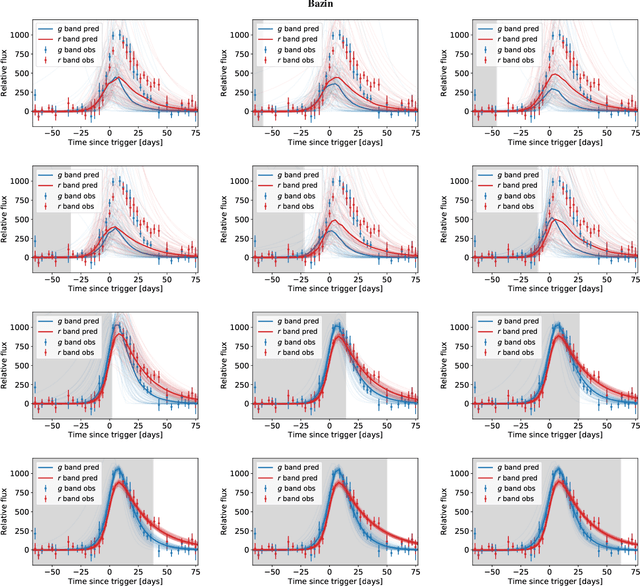

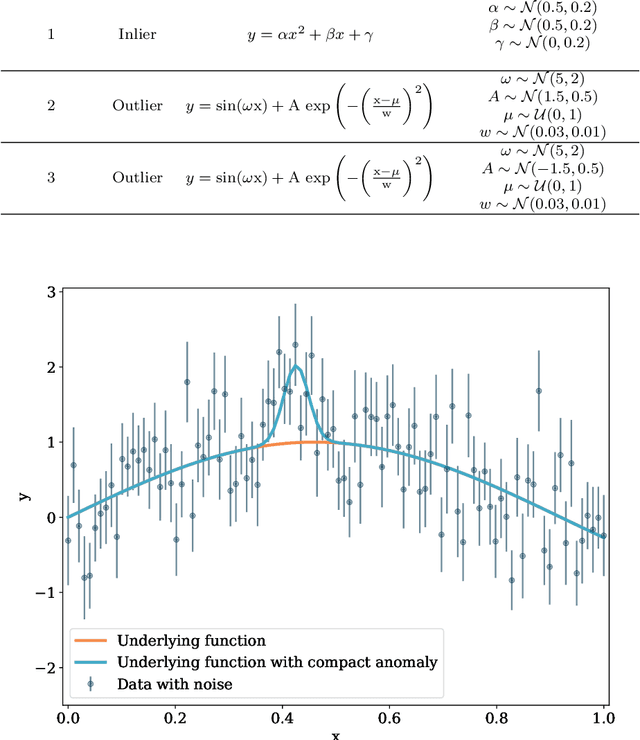

Abstract:Astronomical transients are stellar objects that become temporarily brighter on various timescales and have led to some of the most significant discoveries in cosmology and astronomy. Some of these transients are the explosive deaths of stars known as supernovae while others are rare, exotic, or entirely new kinds of exciting stellar explosions. New astronomical sky surveys are observing unprecedented numbers of multi-wavelength transients, making standard approaches of visually identifying new and interesting transients infeasible. To meet this demand, we present two novel methods that aim to quickly and automatically detect anomalous transient light curves in real-time. Both methods are based on the simple idea that if the light curves from a known population of transients can be accurately modelled, any deviations from model predictions are likely anomalies. The first approach is a probabilistic neural network built using Temporal Convolutional Networks (TCNs) and the second is an interpretable Bayesian parametric model of a transient. We show that the flexibility of neural networks, the attribute that makes them such a powerful tool for many regression tasks, is what makes them less suitable for anomaly detection when compared with our parametric model.

Real-time detection of anomalies in large-scale transient surveys

Oct 29, 2021

Abstract:New time-domain surveys, such as the Rubin Observatory Legacy Survey of Space and Time (LSST), will observe millions of transient alerts each night, making standard approaches of visually identifying new and interesting transients infeasible. We present two novel methods of automatically detecting anomalous transient light curves in real-time. Both methods are based on the simple idea that if the light curves from a known population of transients can be accurately modelled, any deviations from model predictions are likely anomalies. The first modelling approach is a probabilistic neural network built using Temporal Convolutional Networks (TCNs) and the second is an interpretable Bayesian parametric model of a transient. We demonstrate our methods' ability to provide anomaly scores as a function of time on light curves from the Zwicky Transient Facility. We show that the flexibility of neural networks, the attribute that makes them such a powerful tool for many regression tasks, is what makes them less suitable for anomaly detection when compared with our parametric model. The parametric model is able to identify anomalies with respect to common supernova classes with low false anomaly rates and high true anomaly rates, achieving Area Under the Receiver Operating Characteristic (ROC) Curve (AUC) scores above 0.8 for most rare classes such as kilonovae, tidal disruption events, intermediate luminosity transients, and pair-instability supernovae. Our ability to identify anomalies improves over the lifetime of the light curves. Our framework, used in conjunction with transient classifiers, will enable fast and prioritised follow-up of unusual transients from new large-scale surveys.
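The core idea, scoring a light curve by how far it deviates from a model's predictions as observations arrive, can be sketched as follows. This is only an illustration under stated assumptions: the running mean of squared, uncertainty-normalised residuals is a stand-in chosen here, not the scoring rule of either method in the abstract, and the flux values are invented.

```python
def anomaly_scores_over_time(observed, predicted, sigma):
    """Running mean of squared normalised residuals after each observation.

    ASSUMPTION: a chi-squared-like statistic is used as the anomaly score;
    `predicted` would come from whatever model of the known population is used.
    """
    scores, total = [], 0.0
    for n, (obs, pred, err) in enumerate(zip(observed, predicted, sigma), start=1):
        total += ((obs - pred) / err) ** 2
        scores.append(total / n)
    return scores


# Invented fluxes: the model predicts a simple rise and fall.
predicted = [1.0, 2.0, 3.0, 2.0, 1.0]
sigma = [0.1] * 5
normal = [1.1, 1.9, 3.1, 2.1, 0.9]     # scatters within about one sigma
anomalous = [1.1, 1.9, 4.5, 4.0, 3.5]  # departs from the model after peak

normal_scores = anomaly_scores_over_time(normal, predicted, sigma)
anomalous_scores = anomaly_scores_over_time(anomalous, predicted, sigma)
```

The score for the well-modelled light curve stays near one, while the score for the deviating light curve grows once the observations leave the predicted track, which mirrors the statement that anomalies become easier to identify as the light curve evolves.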

Practical Galaxy Morphology Tools from Deep Supervised Representation Learning

Oct 25, 2021

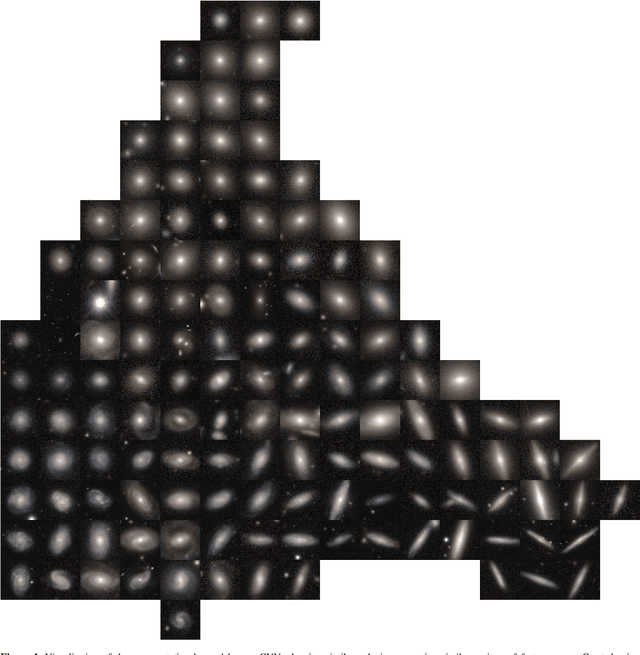

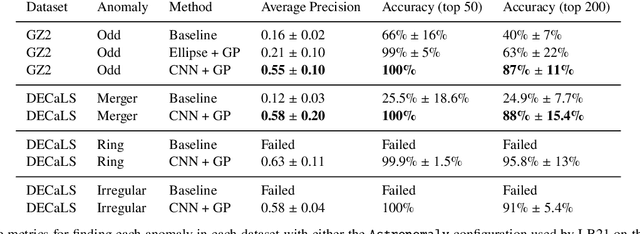

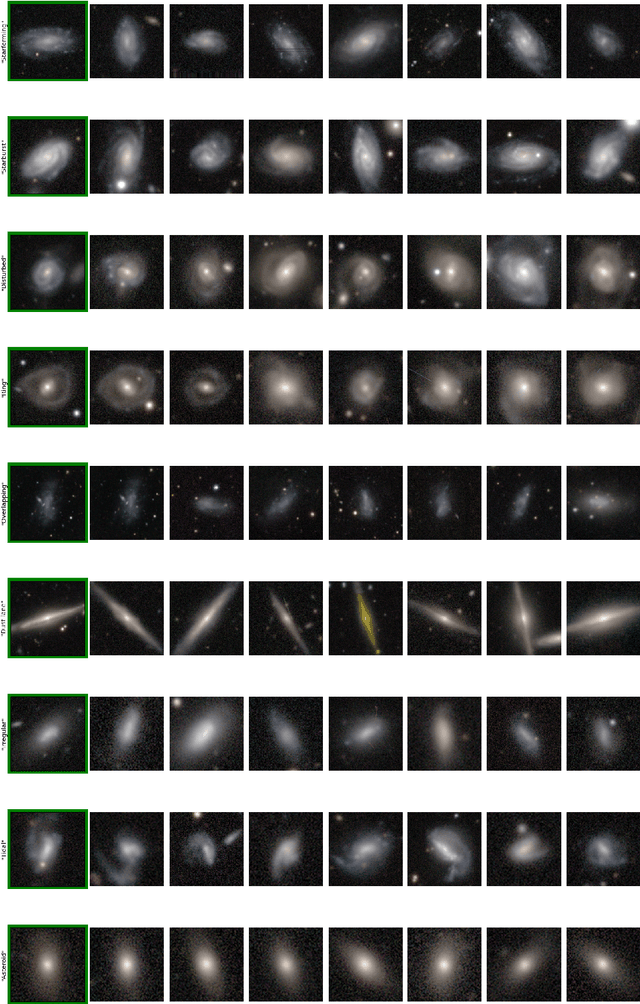

Abstract:Astronomers have typically set out to solve supervised machine learning problems by creating their own representations from scratch. We show that deep learning models trained to answer every Galaxy Zoo DECaLS question learn meaningful semantic representations of galaxies that are useful for new tasks on which the models were never trained. We exploit these representations to outperform existing approaches at several practical tasks crucial for investigating large galaxy samples. The first task is identifying galaxies of similar morphology to a query galaxy. Given a single galaxy assigned a free text tag by humans (e.g. '#diffuse'), we can find galaxies matching that tag for most tags. The second task is identifying the most interesting anomalies to a particular researcher. Our approach is 100% accurate at identifying the most interesting 100 anomalies (as judged by Galaxy Zoo 2 volunteers). The third task is adapting a model to solve a new task using only a small number of newly-labelled galaxies. Models fine-tuned from our representation are better able to identify ring galaxies than models fine-tuned from terrestrial images (ImageNet) or trained from scratch. We solve each task with very few new labels; either one (for the similarity search) or several hundred (for anomaly detection or fine-tuning). This challenges the longstanding view that deep supervised methods require new large labelled datasets for practical use in astronomy. To help the community benefit from our pretrained models, we release our fine-tuning code zoobot. Zoobot is accessible to researchers with no prior experience in deep learning.
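The similarity-search task can be pictured as a nearest-neighbour lookup in the learned representation space. The sketch below is a hedged illustration only: the three-dimensional vectors, the galaxy identifiers, and the choice of cosine similarity are assumptions, and it does not use the Zoobot API.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two representation vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_similar(query_id, representations, k=2):
    """Return the k galaxies whose representations are closest to the query's."""
    query = representations[query_id]
    ranked = sorted(
        (gid for gid in representations if gid != query_id),
        key=lambda gid: cosine_similarity(query, representations[gid]),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical representations; real ones would come from a pretrained model.
representations = {
    "galaxy_a": [0.9, 0.1, 0.0],
    "galaxy_b": [0.8, 0.2, 0.1],
    "galaxy_c": [0.0, 0.1, 0.9],
    "galaxy_d": [0.1, 0.9, 0.2],
}

neighbours = most_similar("galaxy_a", representations, k=2)
```

With a single tagged galaxy as the query, the top-ranked neighbours are the candidates to inspect for the same tag, which is why only one label is needed for this task.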

Bayesian Anomaly Detection and Classification

Feb 22, 2019

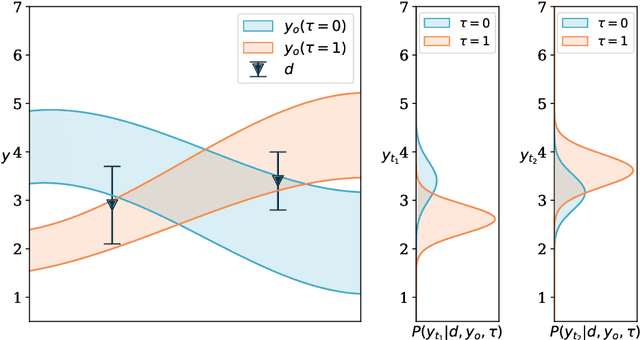

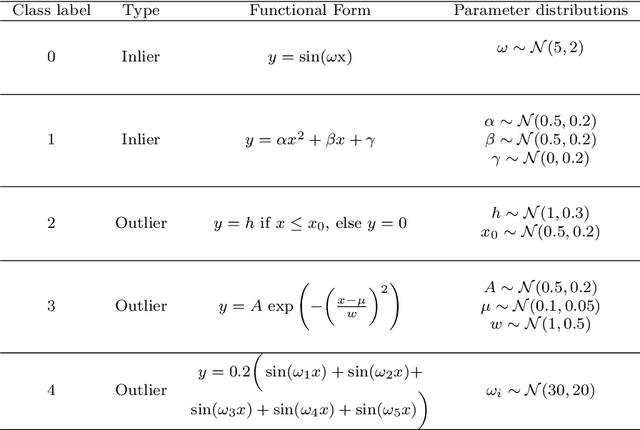

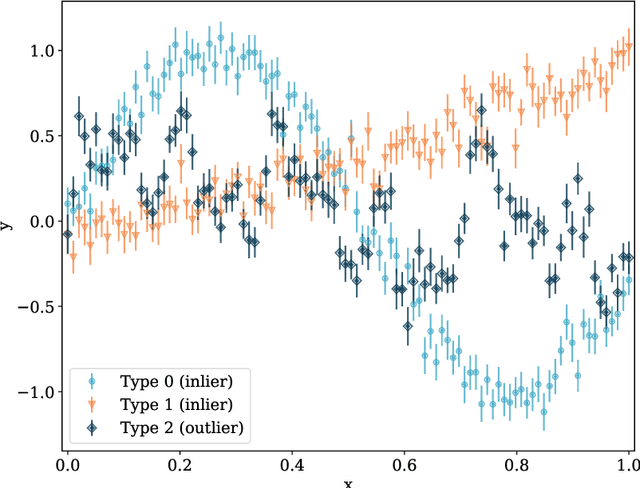

Abstract:Statistical uncertainties are rarely incorporated in machine learning algorithms, especially for anomaly detection. Here we present the Bayesian Anomaly Detection And Classification (BADAC) formalism, which provides a unified statistical approach to classification and anomaly detection within a hierarchical Bayesian framework. BADAC deals with uncertainties by marginalising over the unknown, true, value of the data. Using simulated data with Gaussian noise, BADAC is shown to be superior to standard algorithms in both classification and anomaly detection performance in the presence of uncertainties, though with significantly increased computational cost. Additionally, BADAC provides well-calibrated classification probabilities, valuable for use in scientific pipelines. We show that BADAC can work in online mode and is fairly robust to model errors, which can be diagnosed through model-selection methods. In addition it can perform unsupervised new class detection and can naturally be extended to search for anomalous subsets of data. BADAC is therefore ideal where computational cost is not a limiting factor and statistical rigour is important. We discuss approximations to speed up BADAC, such as the use of Gaussian processes, and finally introduce a new metric, the Rank-Weighted Score (RWS), that is particularly suited to evaluating the ability of algorithms to detect anomalies.
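To illustrate what marginalising over the unknown true value of the data means in the simplest case, the sketch below assumes a one-dimensional measurement, Gaussian noise, and a Gaussian distribution of true values for each class. Under those assumptions the integral over the true value is analytic and the two variances add. This is a toy instance of the idea, not the BADAC formalism itself; the class names and parameters are invented.

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of a normal distribution with the given mean and variance."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def class_posteriors(datum, noise_sigma, classes):
    """Posterior class probabilities for one noisy measurement.

    `classes` maps a name to (prior, mean, sigma) of the true value.
    ASSUMPTION: integrating N(datum | x, noise^2) * N(x | mean, sigma^2)
    over the true value x gives N(datum | mean, sigma^2 + noise^2).
    """
    weighted = {
        name: prior * gaussian_pdf(datum, mean, sigma ** 2 + noise_sigma ** 2)
        for name, (prior, mean, sigma) in classes.items()
    }
    total = sum(weighted.values())
    return {name: value / total for name, value in weighted.items()}


# Two hypothetical classes with equal priors.
classes = {"class_A": (0.5, 0.0, 1.0), "class_B": (0.5, 3.0, 1.0)}

precise = class_posteriors(1.0, noise_sigma=0.1, classes=classes)
noisy = class_posteriors(1.0, noise_sigma=5.0, classes=classes)
```

The same measured value yields a confident classification when the noise is small and a probability close to the prior when the noise is large, which is the behaviour that makes the resulting probabilities usable downstream.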

DeepSource: Point Source Detection using Deep Learning

Jul 07, 2018

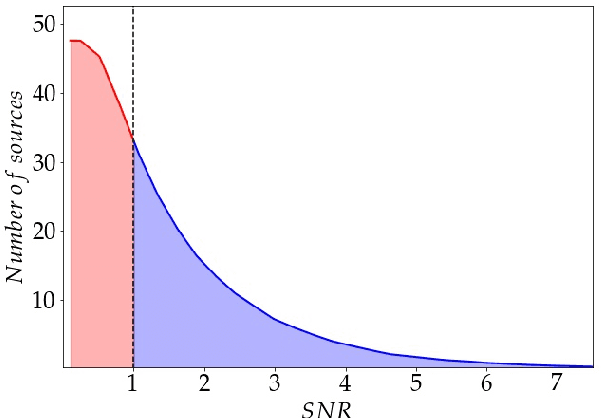

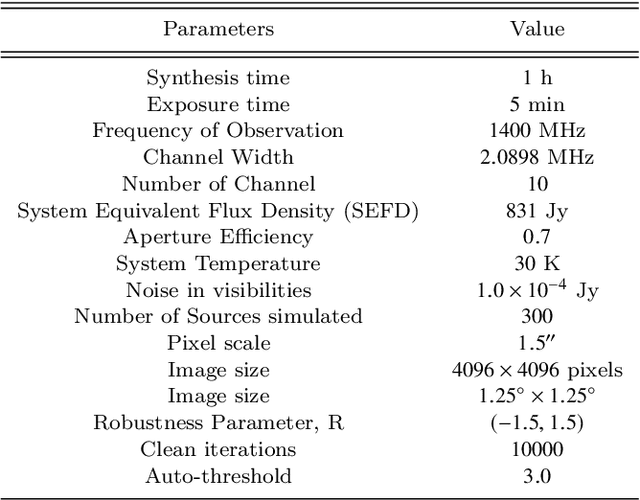

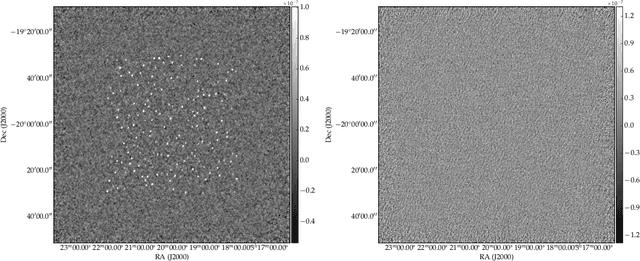

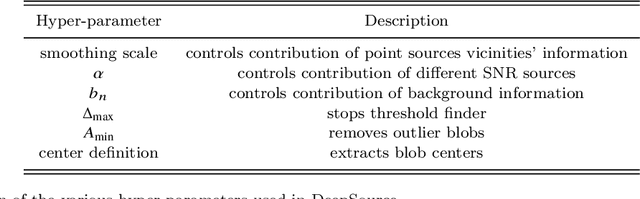

Abstract:Point source detection at low signal-to-noise is challenging for astronomical surveys, particularly in radio interferometry images where the noise is correlated. Machine learning is a promising solution, allowing the development of algorithms tailored to specific telescope arrays and science cases. We present DeepSource - a deep learning solution - that uses convolutional neural networks to achieve these goals. DeepSource enhances the Signal-to-Noise Ratio (SNR) of the original map and then uses dynamic blob detection to detect sources. Trained and tested on two sets of 500 simulated 1 deg x 1 deg MeerKAT images with a total of 300,000 sources, DeepSource is essentially perfect in both purity and completeness down to SNR = 4 and outperforms PyBDSF in all metrics. For uniformly-weighted images it achieves a Purity x Completeness (PC) score at SNR = 3 of 0.73, compared to 0.31 for the best PyBDSF model. For natural-weighting we find a smaller improvement of ~40% in the PC score at SNR = 3. If instead we ask where either of the purity or completeness first drop to 90%, we find that DeepSource reaches this value at SNR = 3.6 compared to the 4.3 of PyBDSF (natural-weighting). A key advantage of DeepSource is that it can learn to optimally trade off purity and completeness for any science case under consideration. Our results show that deep learning is a promising approach to point source detection in astronomical images.
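For readers unfamiliar with the Purity x Completeness (PC) score quoted above, the following sketch computes it from detection counts. It assumes the usual definitions (purity as the fraction of detections that are real sources, completeness as the fraction of real sources that are detected); the counts are invented and are not taken from the paper.

```python
def purity_completeness(true_positives, false_positives, false_negatives):
    """Return (purity, completeness, purity * completeness) from detection counts."""
    detected = true_positives + false_positives
    real = true_positives + false_negatives
    purity = true_positives / detected if detected else 0.0
    completeness = true_positives / real if real else 0.0
    return purity, completeness, purity * completeness


# Invented counts: 90 real sources found, 10 spurious detections, 30 sources missed.
purity, completeness, pc_score = purity_completeness(90, 10, 30)
```

Because the product penalises a detector that gains purity by discarding faint sources, or gains completeness by accepting noise peaks, it is a convenient single number for comparing source finders at a fixed signal-to-noise threshold.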
