Nell Byler

Mantis Shrimp: Exploring Photometric Band Utilization in Computer Vision Networks for Photometric Redshift Estimation

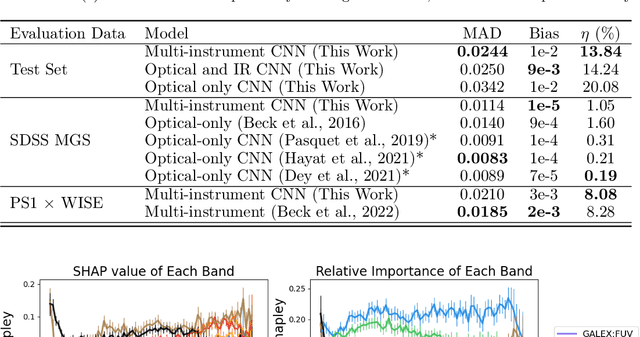

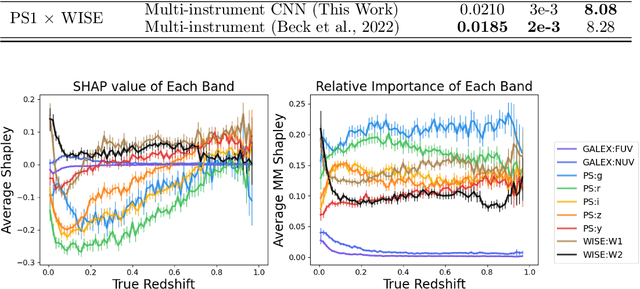

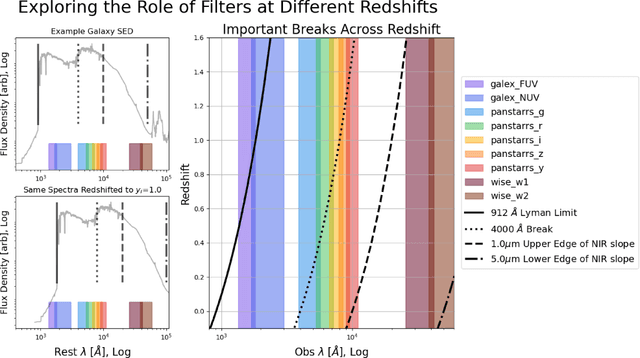

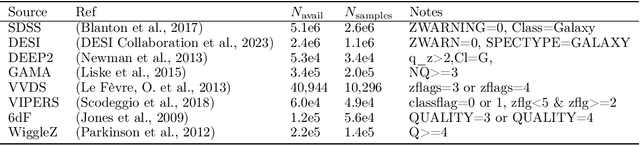

Jan 15, 2025

Abstract: We present Mantis Shrimp, a multi-survey deep learning model for photometric redshift estimation that fuses ultra-violet (GALEX), optical (PanSTARRS), and infrared (UnWISE) imagery. Machine learning is now an established approach to photometric redshift estimation and is generally acknowledged to outperform template-based methods in regions with a high density of spectroscopically identified galaxies. Multiple works have shown that image-based convolutional neural networks can outperform tabular color/magnitude models. Compared to tabular models, image models have additional design complexities: it is largely unknown how to fuse inputs from different instruments that have different resolutions or noise properties. The Mantis Shrimp model estimates the conditional density of redshift from cutout images. The density estimates are well calibrated, and the point estimates perform well within the distribution of available spectroscopically confirmed galaxies, with bias of 1e-2, scatter (NMAD) of 2.44e-2, and catastrophic outlier rate $\eta$ = 17.53$\%$. We find that early fusion approaches (e.g., resampling and stacking images from different instruments) match the performance of late fusion approaches (e.g., concatenating latent space representations), so the design choice is ultimately left to the user. Finally, we study how the models learn to use information across bands, finding evidence that our models successfully incorporate information from all surveys. The applicability of our model to the analysis of large populations of galaxies is limited by the speed of downloading cutouts from external servers; however, our model could be useful in smaller studies, such as generating priors over redshift for stellar population synthesis.
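
The abstract reports bias, NMAD scatter, and a catastrophic outlier rate without stating the formulas. The sketch below uses conventions that are common in the photometric redshift literature and may differ from the paper's exact definitions: residuals are normalized as (z_phot - z_spec) / (1 + z_spec), bias is taken as their mean (some works use the median), and NMAD is 1.4826 times the median absolute deviation. The function name `photoz_metrics` and the default `outlier_threshold` of 0.15 are assumptions for illustration, not values taken from the abstract.

```python
from statistics import mean, median


def photoz_metrics(z_phot, z_spec, outlier_threshold=0.15):
    """Return (bias, nmad, outlier_rate) for paired redshift estimates.

    Assumed conventions (not confirmed by the abstract):
      dz   = (z_phot - z_spec) / (1 + z_spec)
      bias = mean(dz)
      nmad = 1.4826 * median(|dz - median(dz)|)
      eta  = fraction of objects with |dz| > outlier_threshold
    """
    if len(z_phot) != len(z_spec) or len(z_phot) == 0:
        raise ValueError("z_phot and z_spec must be non-empty and equal in length")

    # Normalized residual for each galaxy.
    dz = [(zp - zs) / (1.0 + zs) for zp, zs in zip(z_phot, z_spec)]

    bias = mean(dz)
    center = median(dz)
    nmad = 1.4826 * median([abs(d - center) for d in dz])
    outlier_rate = sum(1 for d in dz if abs(d) > outlier_threshold) / len(dz)
    return bias, nmad, outlier_rate


if __name__ == "__main__":
    # Toy values chosen for illustration only; not data from the paper.
    print(photoz_metrics([0.0, 1.0, 2.0], [0.0, 1.0, 1.0]))
```

Because the outlier rate depends strongly on the chosen threshold, a reported value of $\eta$ can only be compared across papers that use the same cut.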

Preliminary Report on Mantis Shrimp: a Multi-Survey Computer Vision Photometric Redshift Model

Feb 05, 2024

Abstract: The availability of large, public, multi-modal astronomical datasets presents an opportunity to execute novel research that straddles the line between science of AI and science of astronomy. Photometric redshift estimation is a well-established subfield of astronomy. Prior works show that computer vision models typically outperform catalog-based models, but these models face additional complexities when incorporating images from more than one instrument or sensor. In this report, we detail our progress creating Mantis Shrimp, a multi-survey computer vision model for photometric redshift estimation that fuses ultra-violet (GALEX), optical (PanSTARRS), and infrared (UnWISE) imagery. We use deep learning interpretability diagnostics to measure how the model leverages information from the different inputs. We reason about the behavior of the CNNs from the interpretability metrics, specifically framing the result in terms of physically-grounded knowledge of galaxy properties.
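
To make the difficulty of combining images from more than one instrument concrete, here is a toy sketch of the two fusion strategies named in the 2025 abstract: early fusion (resample every image to a shared pixel grid and stack them as channels) and late fusion (encode each instrument separately and concatenate the latent vectors). Everything here is hypothetical and is not the authors' architecture: images are plain nested lists, resampling is nearest-neighbour, and `toy_encoder` is a stand-in for a per-instrument CNN branch.

```python
def resample_nearest(image, out_h, out_w):
    """Nearest-neighbour resample of a 2D list-of-lists to (out_h, out_w)."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def early_fusion(images, out_h, out_w):
    """Early fusion: put every image on one grid, then stack as channels."""
    return [resample_nearest(img, out_h, out_w) for img in images]


def toy_encoder(image):
    """Hypothetical stand-in for a CNN branch: returns [mean, max] of pixels."""
    pixels = [p for row in image for p in row]
    return [sum(pixels) / len(pixels), max(pixels)]


def late_fusion(images):
    """Late fusion: encode each image on its native grid, then concatenate."""
    latent = []
    for img in images:
        latent.extend(toy_encoder(img))
    return latent


if __name__ == "__main__":
    coarse = [[1.0]]                    # e.g. a low-resolution cutout
    fine = [[1.0, 2.0], [3.0, 4.0]]     # e.g. a higher-resolution cutout
    print(early_fusion([coarse, fine], 2, 2))
    print(late_fusion([coarse, fine]))
```

In the early-fusion path a single network sees one multi-channel image, so resolution differences are handled by resampling. In the late-fusion path each instrument keeps its native grid and the combination happens in latent space.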
