Albert No

Rethinking Benign Relearning: Syntax as the Hidden Driver of Unlearning Failures

Feb 03, 2026

Abstract: Machine unlearning aims to remove specific content from trained models while preserving overall performance. However, the phenomenon of benign relearning, in which forgotten information reemerges even from benign fine-tuning data, reveals that existing unlearning methods remain fundamentally fragile. A common explanation attributes this effect to topical relevance, but we find this account insufficient. Through systematic analysis, we demonstrate that syntactic similarity, rather than topicality, is the primary driver: across benchmarks, syntactically similar data consistently trigger recovery even without topical overlap, due to their alignment in representations and gradients with the forgotten content. Motivated by this insight, we introduce syntactic diversification, which paraphrases the original forget queries into heterogeneous structures prior to unlearning. This approach effectively suppresses benign relearning, accelerates forgetting, and substantially alleviates the trade-off between unlearning efficacy and model utility.

Understanding the Reversal Curse Mitigation in Masked Diffusion Models through Attention and Training Dynamics

Feb 02, 2026

Abstract: Autoregressive language models (ARMs) suffer from the reversal curse: after learning that "$A$ is $B$", they often fail on the reverse query "$B$ is $A$". Masked diffusion-based language models (MDMs) exhibit this failure in a much weaker form, but the underlying reason has remained unclear. A common explanation attributes this mitigation to the any-order training objective. However, observing "[MASK] is $B$" during training does not necessarily teach the model to handle the reverse prompt "$B$ is [MASK]". We show that the mitigation arises from architectural structure and its interaction with training. In a one-layer Transformer encoder, weight sharing couples the two directions by making forward and reverse attention scores positively correlated. In the same setting, we further show that the corresponding gradients are aligned, so minimizing the forward loss also reduces the reverse loss. Experiments on both controlled toy tasks and large-scale diffusion language models support these mechanisms, explaining why MDMs partially overcome a failure mode that persists in strong ARMs.

Preserve-Then-Quantize: Balancing Rank Budgets for Quantization Error Reconstruction in LLMs

Feb 02, 2026

Abstract: Quantization Error Reconstruction (QER) reduces accuracy loss in Post-Training Quantization (PTQ) by approximating weights as $\mathbf{W} \approx \mathbf{Q} + \mathbf{L}\mathbf{R}$, using a rank-$r$ correction to reconstruct quantization error. Prior methods devote the full rank budget to error reconstruction, which is suboptimal when $\mathbf{W}$ has intrinsic low-rank structure and quantization corrupts dominant directions. We propose Structured Residual Reconstruction (SRR), a rank-allocation framework that preserves the top-$k$ singular subspace of the activation-scaled weight before quantization, quantizes only the residual, and uses the remaining rank $r-k$ for error reconstruction. We derive a theory-guided criterion for selecting $k$ by balancing quantization-exposed energy and unrecoverable error under rank constraints. We further show that the resulting $\mathbf{Q} + \mathbf{L}\mathbf{R}$ parameterization naturally supports Quantized Parameter-Efficient Fine-Tuning (QPEFT), and stabilizes fine-tuning via gradient scaling along preserved directions. Experiments demonstrate consistent perplexity reductions across diverse models and quantization settings in PTQ, along with a 5.9 percentage-point average gain on GLUE under 2-bit QPEFT.
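
The rank split described in this abstract can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the quantizer `quantize_uniform` is a hypothetical per-tensor round-to-nearest stand-in, activation scaling is omitted (treated as identity), and `keep` (the abstract's $k$) is passed in by the caller instead of being chosen by the theory-guided criterion. The names `srr_sketch`, `rank`, and `keep` are assumptions made for illustration.

```python
import numpy as np

def quantize_uniform(w, bits=2):
    """Hypothetical stand-in quantizer: per-tensor uniform round-to-nearest."""
    levels = 2 ** bits - 1
    lo, hi = w.min(), w.max()
    scale = (hi - lo) / levels if hi > lo else 1.0
    return np.round((w - lo) / scale) * scale + lo

def truncated_svd(m, rank):
    """Return factors (left, right) of the best rank-`rank` approximation of m."""
    u, s, vt = np.linalg.svd(m, full_matrices=False)
    return u[:, :rank] * s[:rank], vt[:rank]

def srr_sketch(w, rank, keep, bits=2):
    """Split a rank budget: `keep` directions preserved, `rank - keep` for error."""
    assert 0 <= keep <= rank
    # 1) Preserve the top-`keep` singular subspace in full precision.
    l_keep, r_keep = truncated_svd(w, keep)
    residual = w - l_keep @ r_keep
    # 2) Quantize only the residual.
    q = quantize_uniform(residual, bits)
    # 3) Spend the remaining rank on the quantization error of the residual.
    l_err, r_err = truncated_svd(residual - q, rank - keep)
    # Stack both parts into a single rank-`rank` correction L @ R.
    return q, np.hstack([l_keep, l_err]), np.vstack([r_keep, r_err])

rng = np.random.default_rng(0)
w = rng.standard_normal((16, 12))
q, l, r = srr_sketch(w, rank=4, keep=2)
print(np.linalg.norm(w - (q + l @ r)))  # Frobenius reconstruction error
```

Setting `keep=0` reduces the sketch to plain error reconstruction, where the whole rank budget goes to the quantization error.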

dgMARK: Decoding-Guided Watermarking for Diffusion Language Models

Jan 30, 2026

Abstract: We propose dgMARK, a decoding-guided watermarking method for discrete diffusion language models (dLLMs). Unlike autoregressive models, dLLMs can generate tokens in arbitrary order. While an ideal conditional predictor would be invariant to this order, practical dLLMs exhibit strong sensitivity to the unmasking order, creating a new channel for watermarking. dgMARK steers the unmasking order toward positions whose high-reward candidate tokens satisfy a simple parity constraint induced by a binary hash, without explicitly reweighting the model's learned probabilities. The method is plug-and-play with common decoding strategies (e.g., confidence, entropy, and margin-based ordering) and can be strengthened with a one-step lookahead variant. Watermarks are detected via elevated parity-matching statistics, and a sliding-window detector ensures robustness under post-editing operations including insertion, deletion, substitution, and paraphrasing.
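
As a rough illustration of what detection via parity-matching statistics could look like, the sketch below counts how often a binary hash of adjacent tokens equals 1 and converts the count to a z-score under the null hypothesis that each bit is a fair coin. Everything specific here is an assumption: the abstract does not state the hash, its inputs, or the test statistic, so `parity_bit` (SHA-256 over a key and the token pair), `z_score`, and `max_window_z` are hypothetical names and choices, not dgMARK's actual detector.

```python
import hashlib
import math

def parity_bit(prev_token, token, key="demo-key"):
    """Hypothetical binary hash: lowest bit of SHA-256 over (key, prev, token)."""
    digest = hashlib.sha256(f"{key}:{prev_token}:{token}".encode()).digest()
    return digest[0] & 1

def parity_bits(tokens, key="demo-key"):
    """One parity bit per adjacent token pair."""
    return [parity_bit(tokens[i - 1], tokens[i], key) for i in range(1, len(tokens))]

def z_score(bits):
    """Standardized excess of 1-bits over the fair-coin expectation."""
    n = len(bits)
    if n == 0:
        return 0.0
    return (sum(bits) - 0.5 * n) / math.sqrt(0.25 * n)

def max_window_z(tokens, window, key="demo-key"):
    """Largest z-score over all contiguous windows of `window` bits."""
    bits = parity_bits(tokens, key)
    if len(bits) <= window:
        return z_score(bits)
    return max(z_score(bits[s:s + window]) for s in range(len(bits) - window + 1))

# Toy "watermarked" sequence: always pick a token whose parity bit is 1.
tokens = [0]
for _ in range(64):
    tokens.append(next(c for c in range(10000) if parity_bit(tokens[-1], c) == 1))
print(z_score(parity_bits(tokens)), max_window_z(tokens, 16))
```

The windowed variant is included because a localized statistic can still be elevated when edits disturb only part of the text; the window length is a free parameter in this sketch.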

DUSK: Do Not Unlearn Shared Knowledge

May 21, 2025

Abstract: Large language models (LLMs) are increasingly deployed in real-world applications, raising concerns about the unauthorized use of copyrighted or sensitive data. Machine unlearning aims to remove such 'forget' data while preserving utility and information from the 'retain' set. However, existing evaluations typically assume that forget and retain sets are fully disjoint, overlooking realistic scenarios where they share overlapping content. For instance, a news article may need to be unlearned, even though the same event, such as an earthquake in Japan, is also described factually on Wikipedia. Effective unlearning should remove the specific phrasing of the news article while preserving publicly supported facts. In this paper, we introduce DUSK, a benchmark designed to evaluate unlearning methods under realistic data overlap. DUSK constructs document sets that describe the same factual content in different styles, with some shared information appearing across all sets and other content remaining unique to each. When one set is designated for unlearning, an ideal method should remove its unique content while preserving shared facts. We define seven evaluation metrics to assess whether unlearning methods can achieve this selective removal. Our evaluation of nine recent unlearning methods reveals a key limitation: while most can remove surface-level text, they often fail to erase deeper, context-specific knowledge without damaging shared content. We release DUSK as a public benchmark to support the development of more precise and reliable unlearning techniques for real-world applications.

R-TOFU: Unlearning in Large Reasoning Models

May 21, 2025

Abstract: Large Reasoning Models (LRMs) embed private or copyrighted information not only in their final answers but also throughout multi-step chain-of-thought (CoT) traces, making reliable unlearning far more demanding than in standard LLMs. We introduce Reasoning-TOFU (R-TOFU), the first benchmark tailored to this setting. R-TOFU augments existing unlearning tasks with realistic CoT annotations and provides step-wise metrics that expose residual knowledge invisible to answer-level checks. Using R-TOFU, we carry out a comprehensive comparison of gradient-based and preference-optimization baselines and show that conventional answer-only objectives leave substantial forget traces in reasoning. We further propose Reasoned IDK, a preference-optimization variant that preserves coherent yet inconclusive reasoning, achieving a stronger balance between forgetting efficacy and model utility than earlier refusal styles. Finally, we identify a failure mode: decoding variants such as ZeroThink and LessThink can still reveal forgotten content despite seemingly successful unlearning, emphasizing the need to evaluate models under diverse decoding settings. Together, the benchmark, analysis, and new baseline establish a systematic foundation for studying and improving unlearning in LRMs while preserving their reasoning capabilities.

SEPS: A Separability Measure for Robust Unlearning in LLMs

May 20, 2025

Abstract: Machine unlearning aims to selectively remove targeted knowledge from Large Language Models (LLMs), ensuring they forget specified content while retaining essential information. Existing unlearning metrics assess whether a model correctly answers retain queries and rejects forget queries, but they fail to capture real-world scenarios where forget queries rarely appear in isolation. In fact, forget and retain queries often coexist within the same prompt, making mixed-query evaluation crucial. We introduce SEPS, an evaluation framework that explicitly measures a model's ability to both forget and retain information within a single prompt. Through extensive experiments across three benchmarks, we identify two key failure modes in existing unlearning methods: (1) untargeted unlearning indiscriminately erases both forget and retain content once a forget query appears, and (2) targeted unlearning overfits to single-query scenarios, leading to catastrophic failures when handling multiple queries. To address these issues, we propose Mixed Prompt (MP) unlearning, a strategy that integrates both forget and retain queries into a unified training objective. Our approach significantly improves unlearning effectiveness, demonstrating robustness even in complex settings with up to eight mixed forget and retain queries in a single prompt.

SAFEPATH: Preventing Harmful Reasoning in Chain-of-Thought via Early Alignment

May 20, 2025

Abstract: Large Reasoning Models (LRMs) have become powerful tools for complex problem solving, but their structured reasoning pathways can lead to unsafe outputs when exposed to harmful prompts. Existing safety alignment methods reduce harmful outputs but can degrade reasoning depth, leading to significant trade-offs in complex, multi-step tasks, and remain vulnerable to sophisticated jailbreak attacks. To address this, we introduce SAFEPATH, a lightweight alignment method that fine-tunes LRMs to emit a short, 8-token Safety Primer at the start of their reasoning, in response to harmful prompts, while leaving the rest of the reasoning process unsupervised. Empirical results across multiple benchmarks indicate that SAFEPATH effectively reduces harmful outputs while maintaining reasoning performance. Specifically, SAFEPATH reduces harmful responses by up to 90.0% and blocks 83.3% of jailbreak attempts in the DeepSeek-R1-Distill-Llama-8B model, while requiring 295.9x less compute than Direct Refusal and 314.1x less than SafeChain. We further introduce a zero-shot variant that requires no fine-tuning. In addition, we provide a comprehensive analysis of how existing methods in LLMs generalize, or fail, when applied to reasoning-centric models, revealing critical gaps and new directions for safer AI.

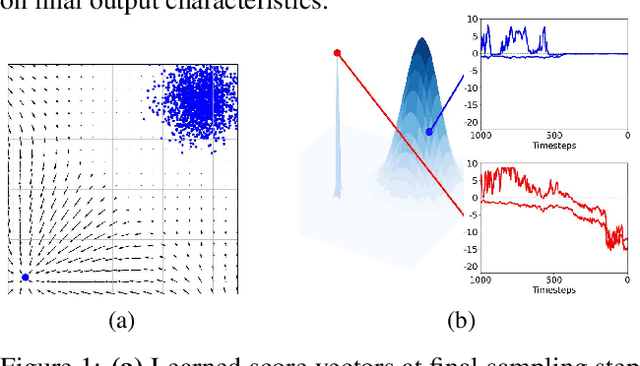

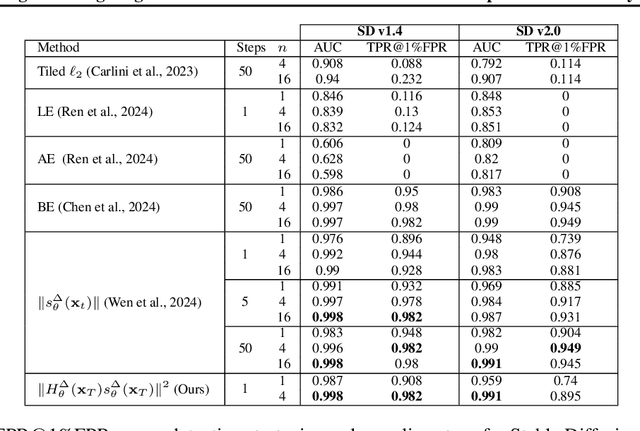

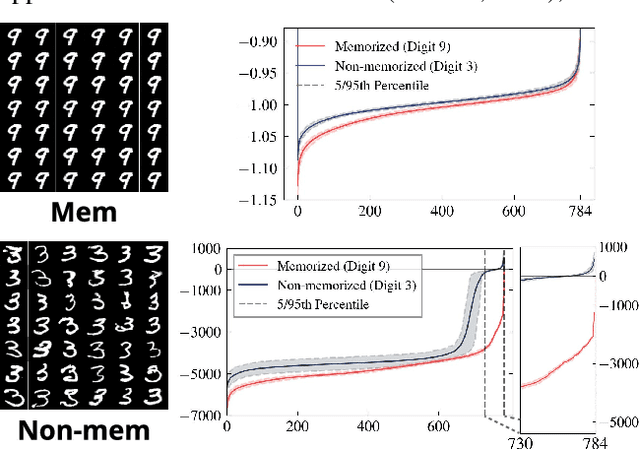

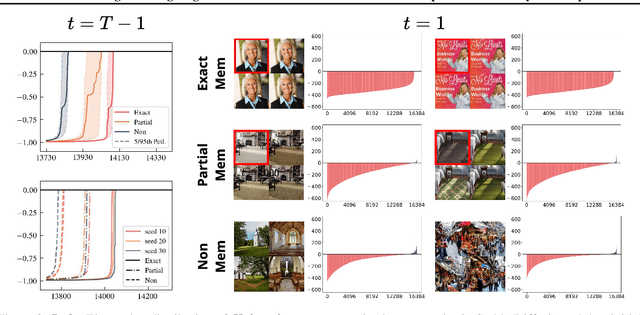

Understanding Memorization in Generative Models via Sharpness in Probability Landscapes

Dec 05, 2024

Abstract: In this paper, we introduce a geometric framework to analyze memorization in diffusion models using the eigenvalues of the Hessian of the log probability density. We propose that memorization arises from isolated points in the learned probability distribution, characterized by sharpness in the probability landscape, as indicated by large negative eigenvalues of the Hessian. Through experiments on various datasets, we demonstrate that these eigenvalues effectively detect and quantify memorization. Our approach provides a clear understanding of memorization in diffusion models and lays the groundwork for developing strategies to ensure secure and reliable generative models.
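
The link between sharpness and large negative Hessian eigenvalues can be seen in a toy case. The sketch below is only an illustration under a strong assumption: a Gaussian stands in for the learned distribution, whereas the paper works with diffusion models. For a Gaussian with covariance $\Sigma$, the Hessian of the log density is $-\Sigma^{-1}$, so a narrow peak (small variance) yields eigenvalues that are large and negative. The function names are hypothetical.

```python
import numpy as np

def gaussian_log_density(x, mean, cov):
    """Log density of a multivariate Gaussian at point x."""
    d = x - mean
    _, logdet = np.linalg.slogdet(cov)
    return -0.5 * (d @ np.linalg.solve(cov, d) + logdet + len(x) * np.log(2 * np.pi))

def numerical_hessian(f, x, eps=1e-3):
    """Central finite-difference Hessian of a scalar function f at x."""
    n = len(x)
    h = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            ei = np.zeros(n)
            ej = np.zeros(n)
            ei[i] = eps
            ej[j] = eps
            h[i, j] = (f(x + ei + ej) - f(x + ei - ej)
                       - f(x - ei + ej) + f(x - ei - ej)) / (4 * eps ** 2)
    return h

def hessian_eigenvalues(cov):
    """Eigenvalues of the log-density Hessian, evaluated at the mode."""
    mean = np.zeros(cov.shape[0])
    h = numerical_hessian(lambda x: gaussian_log_density(x, mean, cov), mean)
    return np.linalg.eigvalsh(0.5 * (h + h.T))  # symmetrize against round-off

print(hessian_eigenvalues(np.eye(2)))         # broad mode: eigenvalues near -1
print(hessian_eigenvalues(1e-4 * np.eye(2)))  # sharp mode: eigenvalues near -1e4
```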

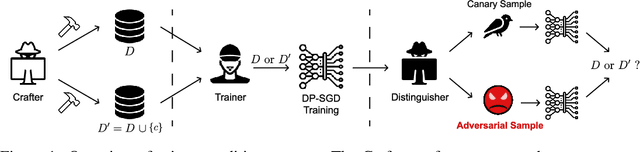

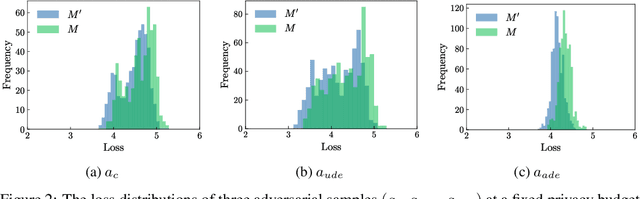

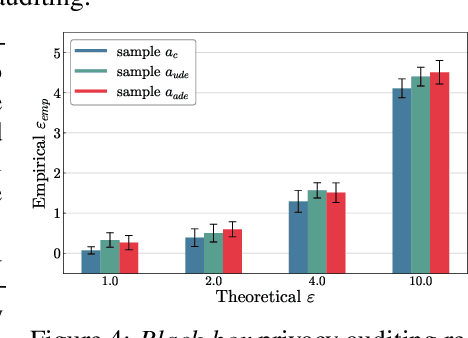

Adversarial Sample-Based Approach for Tighter Privacy Auditing in Final Model-Only Scenarios

Dec 02, 2024

Abstract: Auditing Differentially Private Stochastic Gradient Descent (DP-SGD) in the final model setting is challenging and often results in empirical lower bounds that are significantly looser than theoretical privacy guarantees. We introduce a novel auditing method that achieves tighter empirical lower bounds without additional assumptions by crafting worst-case adversarial samples through loss-based input-space auditing. Our approach surpasses traditional canary-based heuristics and is effective in both white-box and black-box scenarios. Specifically, with a theoretical privacy budget of $\varepsilon = 10.0$, our method achieves empirical lower bounds of $6.68$ in white-box settings and $4.51$ in black-box settings, compared to the baseline of $4.11$ for MNIST. Moreover, we demonstrate that significant privacy auditing results can be achieved using in-distribution (ID) samples as canaries, obtaining an empirical lower bound of $4.33$ where traditional methods produce near-zero leakage detection. Our work offers a practical framework for reliable and accurate privacy auditing in differentially private machine learning.
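
For readers unfamiliar with how an empirical lower bound on $\varepsilon$ is obtained, the sketch below applies the standard hypothesis-testing view of $(\varepsilon, \delta)$-differential privacy: any membership test against such a mechanism must satisfy $\mathrm{FPR} + e^{\varepsilon}\,\mathrm{FNR} \ge 1 - \delta$ (and the same with the two error rates swapped), which rearranges into a lower bound on $\varepsilon$. This is a simplified point estimate, assumed here for illustration; it omits the confidence intervals on the error rates that a rigorous audit needs, and it is not claimed to be the exact procedure used in the paper. The function name and example rates are hypothetical.

```python
import math

def _one_sided_bound(err_a, err_b, delta):
    """Lower bound on epsilon from err_a + exp(eps) * err_b >= 1 - delta."""
    numerator = 1.0 - delta - err_a
    if numerator <= 0.0:
        return 0.0          # the attack is too weak to certify any leakage
    if err_b == 0.0:
        return math.inf     # a perfect attack on this side is unbounded
    return max(0.0, math.log(numerator / err_b))

def empirical_epsilon_lower_bound(fpr, fnr, delta=1e-5):
    """Point-estimate lower bound on epsilon from attack error rates."""
    return max(_one_sided_bound(fpr, fnr, delta),
               _one_sided_bound(fnr, fpr, delta))

# Hypothetical attack error rates, chosen only to exercise the formula.
print(empirical_epsilon_lower_bound(fpr=0.01, fnr=0.01, delta=0.0))  # log(99)
print(empirical_epsilon_lower_bound(fpr=0.5, fnr=0.5, delta=0.0))    # 0.0
```

A stronger attack (lower error rates on the crafted samples) raises this bound, which is why the choice of canary or adversarial sample matters for how tight the audit is.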
