Ahmed Ibrahim

TempoNet: Learning Realistic Communication and Timing Patterns for Network Traffic Simulation

Jan 22, 2026

Abstract: Realistic network traffic simulation is critical for evaluating intrusion detection systems, stress-testing network protocols, and constructing high-fidelity environments for cybersecurity training. While attack traffic can often be layered into training environments using red-teaming or replay methods, generating authentic benign background traffic remains a core challenge, particularly in simulating the complex temporal and communication dynamics of real-world networks. This paper introduces TempoNet, a novel generative model that combines multi-task learning with multi-mark temporal point processes to jointly model inter-arrival times and all packet- and flow-header fields. TempoNet captures fine-grained timing patterns and higher-order correlations such as host-pair behavior and seasonal trends, addressing key limitations of GAN-, LLM-, and Bayesian-based methods that fail to reproduce structured temporal variation. TempoNet produces temporally consistent, high-fidelity traces, validated on real-world datasets. Furthermore, we show that intrusion detection models trained on TempoNet-generated background traffic perform comparably to those trained on real data, validating its utility for real-world security applications.

QSAR-Guided Generative Framework for the Discovery of Synthetically Viable Odorants

Dec 28, 2025

Abstract: The discovery of novel odorant molecules is key for the fragrance and flavor industries, yet efficiently navigating the vast chemical space to identify structures with desirable olfactory properties remains a significant challenge. Generative artificial intelligence offers a promising approach for de novo molecular design but typically requires large sets of molecules to learn from. To address this problem, we present a framework combining a variational autoencoder (VAE) with a quantitative structure-activity relationship (QSAR) model to generate novel odorants from limited training sets of odor molecules. The self-supervised learning capabilities of the VAE allow it to learn SMILES grammar from the ChEMBL database, while its training objective is augmented with a loss term derived from an external QSAR model to structure the latent representation according to odor probability. While the VAE demonstrated high internal consistency in learning the QSAR supervision signal, validation against an external, unseen ground truth dataset (Unique Good Scents) confirms the model generates syntactically valid structures (100% validity achieved via rejection sampling) and 94.8% unique structures. The latent space is effectively structured by odor likelihood, evidenced by a Fréchet ChemNet Distance (FCD) of ≈ 6.96 between generated molecules and known odorants, compared to ≈ 21.6 for the ChEMBL baseline. Structural analysis via Bemis-Murcko scaffolds reveals that 74.4% of candidates possess novel core frameworks distinct from the training data, indicating the model performs extensive chemical space exploration beyond simple derivatization of known odorants. Generated candidates display physicochemical properties ....
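
The validity and uniqueness figures quoted in this abstract rest on two simple ideas: rejection sampling (keep drawing until enough candidates pass a validity check) and a unique-over-total ratio. The following is a minimal sketch of both, not the authors' pipeline. The names `sample_valid`, `uniqueness`, and `balanced_parentheses` are hypothetical, and the parenthesis check is only a toy stand-in for a real SMILES parser.

```python
def balanced_parentheses(s):
    """Toy validity check (assumption): a stand-in for a real SMILES parser."""
    depth = 0
    for ch in s:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:
                return False
    return depth == 0


def sample_valid(generate, is_valid, n, max_tries=1000):
    """Rejection sampling: draw candidates and keep only the valid ones."""
    kept = []
    tries = 0
    while len(kept) < n and tries < max_tries:
        tries += 1
        candidate = generate()
        if is_valid(candidate):
            kept.append(candidate)
    return kept


def uniqueness(samples):
    """Fraction of distinct strings among the kept samples."""
    return len(set(samples)) / len(samples) if samples else 0.0


# A fixed pool replaces the generative model so the example is deterministic.
pool = iter(["CCO", "C(", "CCO", "c1ccccc1", "CC(=O)O", "((", "CCN"])
kept = sample_valid(lambda: next(pool), balanced_parentheses, n=4)
print(kept, uniqueness(kept))
```

Because invalid draws are discarded, every kept sample is valid by construction, which is why validity under rejection sampling is 100% while uniqueness can still fall below it.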

Comparison of Innovative Strategies for the Coverage Problem: Path Planning, Search Optimization, and Applications in Underwater Robotics

Jun 18, 2025

Abstract: In many applications, including underwater robotics, the coverage problem requires an autonomous vehicle to systematically explore a defined area while minimizing redundancy and avoiding obstacles. This paper investigates coverage path planning strategies to enhance the efficiency of underwater gliders, particularly in maximizing the probability of detecting a radioactive source while ensuring safe navigation. We evaluate three path-planning approaches: the Traveling Salesman Problem (TSP), Minimum Spanning Tree (MST), and Optimal Control Problem (OCP). Simulations were conducted in MATLAB, comparing processing time, uncovered areas, path length, and traversal time. Results indicate that OCP is preferable when traversal time is constrained, although it incurs significantly higher computational costs. Conversely, MST-based approaches provide faster but less optimal solutions. These findings offer insights into selecting appropriate algorithms based on mission priorities, balancing efficiency and computational feasibility.

A Unified Framework for Human AI Collaboration in Security Operations Centers with Trusted Autonomy

May 29, 2025

Abstract: This article presents a structured framework for Human-AI collaboration in Security Operations Centers (SOCs), integrating AI autonomy, trust calibration, and Human-in-the-Loop decision making. Existing frameworks in SOCs often focus narrowly on automation, lacking systematic structures to manage human oversight, trust calibration, and scalable autonomy with AI. Many assume static or binary autonomy settings, failing to account for the varied complexity, criticality, and risk across SOC tasks in Human-AI collaboration. To address these limitations, we propose a novel autonomy-tiered framework grounded in five levels of AI autonomy, from manual to fully autonomous, mapped to Human-in-the-Loop (HITL) roles and task-specific trust thresholds. This enables adaptive and explainable AI integration across core SOC functions, including monitoring, protection, threat detection, alert triage, and incident response. The proposed framework differentiates itself from previous research by creating formal connections between autonomy, trust, and HITL across various SOC levels, which allows for adaptive task distribution according to operational complexity and associated risks. The framework is exemplified through a simulated cyber range that features the cybersecurity AI-Avatar, a fine-tuned LLM-based SOC assistant. The AI-Avatar case study illustrates Human-AI collaboration for SOC tasks, reducing alert fatigue, enhancing response coordination, and strategically calibrating trust. This research systematically presents the theoretical and practical aspects, as well as the feasibility, of designing next-generation cognitive SOCs that leverage AI not to replace but to enhance human decision-making.

Backdoor Detection through Replicated Execution of Outsourced Training

Mar 31, 2025

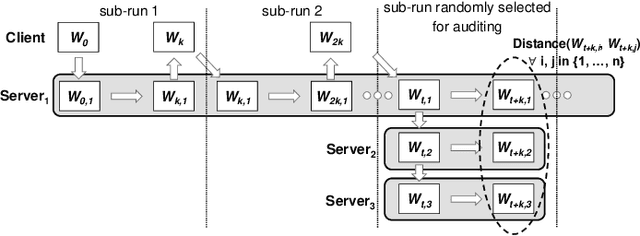

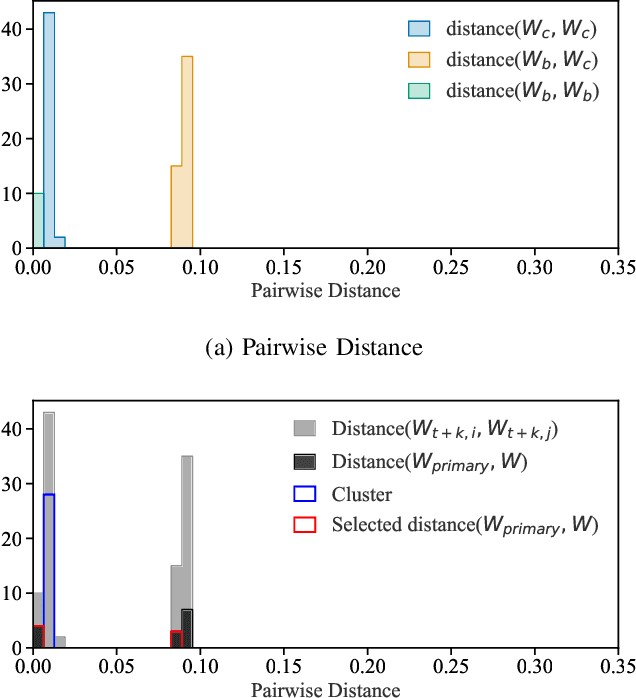

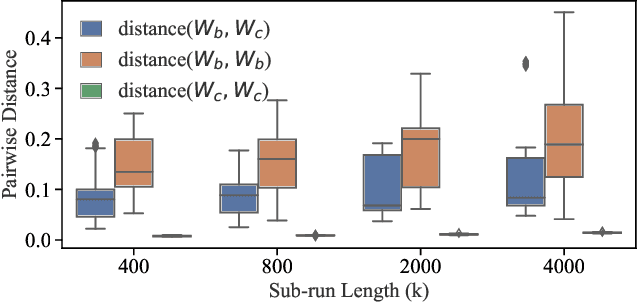

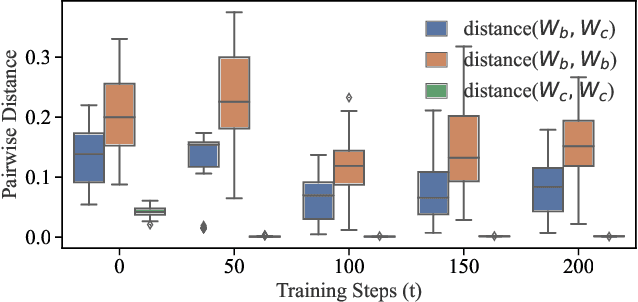

Abstract: It is common practice to outsource the training of machine learning models to cloud providers. Clients who do so gain from the cloud's economies of scale, but implicitly assume trust: the server should not deviate from the client's training procedure. A malicious server may, for instance, seek to insert backdoors in the model. Detecting a backdoored model without prior knowledge of both the backdoor attack and its accompanying trigger remains a challenging problem. In this paper, we show that a client with access to multiple cloud providers can replicate a subset of training steps across multiple servers to detect deviation from the training procedure in a similar manner to differential testing. Assuming some cloud-provided servers are benign, we identify malicious servers by the substantial difference between model updates required for backdooring and those resulting from clean training. Perhaps the strongest advantage of our approach is its suitability to clients that have limited-to-no local compute capability to perform training; we leverage the existence of multiple cloud providers to identify malicious updates without expensive human labeling or heavy computation. We demonstrate the capabilities of our approach on an outsourced supervised learning task where 50% of the cloud providers insert their own backdoor; our approach is able to correctly identify 99.6% of them. In essence, our approach is successful because it replaces the signature-based paradigm taken by existing approaches with an anomaly-based detection paradigm. Furthermore, our approach is robust to several attacks from adaptive adversaries utilizing knowledge of our detection scheme.
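
The replicated-execution idea can be illustrated with a small sketch: the same training step is sent to several servers, and a server is flagged when its returned update agrees with no other server. This is an illustration under stated assumptions, not the paper's detection rule. The function name `flag_deviating_servers`, the Euclidean distance, and the `tolerance` value are all hypothetical. The sketch also assumes that at least two benign servers replicate the step and that malicious servers, each inserting their own backdoor, do not return near-identical updates.

```python
import math


def flag_deviating_servers(updates, tolerance):
    """Flag servers whose update has no close peer among the other servers.

    updates: dict mapping server name -> list of floats, the model update
             returned for the same replicated training step.
    tolerance: hypothetical distance below which two updates are treated
               as the result of the same (clean) computation.
    """
    names = sorted(updates)
    flagged = []
    for name in names:
        has_close_peer = any(
            math.dist(updates[name], updates[other]) <= tolerance
            for other in names
            if other != name
        )
        if not has_close_peer:
            flagged.append(name)
    return flagged


# Toy example: two servers agree, two return unrelated updates.
updates = {
    "server_a": [0.10, -0.20, 0.05],
    "server_b": [0.11, -0.19, 0.05],
    "server_c": [0.90, 0.40, -0.70],
    "server_d": [-0.60, 0.80, 0.30],
}
print(flag_deviating_servers(updates, tolerance=0.05))
```

A pairwise-agreement check is used here instead of a median-based outlier test because the abstract's setting has half of the providers misbehaving, where a majority-style reference point would not be reliable.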

A Low-Complexity Plug-and-Play Deep Learning Model for Massive MIMO Precoding Across Sites

Feb 12, 2025

Abstract: Massive multiple-input multiple-output (mMIMO) technology has transformed wireless communication by enhancing spectral efficiency and network capacity. This paper proposes a novel deep learning-based mMIMO precoder to tackle the complexity challenges of existing approaches, such as weighted minimum mean square error (WMMSE), while leveraging meta-learning domain generalization and a teacher-student architecture to improve generalization across diverse communication environments. When deployed to a previously unseen site, the proposed model achieves excellent sum-rate performance while maintaining low computational complexity by avoiding matrix inversions and by using a simpler neural network structure. The model is trained and tested on a custom ray-tracing dataset composed of several base station locations. The experimental results indicate that our method effectively balances computational efficiency with high sum-rate performance while showcasing strong generalization performance in unseen environments. Furthermore, with fine-tuning, the proposed model outperforms WMMSE across all tested sites and SNR conditions while reducing complexity by at least 73×.

Brain Stroke Segmentation Using Deep Learning Models: A Comparative Study

Mar 25, 2024

Abstract: Stroke segmentation plays a crucial role in the diagnosis and treatment of stroke patients by providing spatial information about affected brain regions and the extent of damage. Segmenting stroke lesions accurately is a challenging task, given that conventional manual techniques are time-consuming and prone to errors. Recently, advanced deep models have been introduced for general medical image segmentation, demonstrating promising results that surpass many state-of-the-art networks when evaluated on specific datasets. With the advent of vision Transformers, several models have been introduced based on them, while others have aimed to design better modules based on traditional convolutional layers to extract long-range dependencies like Transformers. The question of whether such high-level designs are necessary for all segmentation cases to achieve the best results remains unanswered. In this study, we selected four types of recently proposed deep models and evaluated their performance for stroke segmentation: a pure Transformer-based architecture (DAE-Former), two advanced CNN-based models (LKA and DLKA) with attention mechanisms in their design, an advanced hybrid model that incorporates CNNs with Transformers (FCT), and the well-known self-adaptive nnUNet framework, which configures itself based on the given data. We examined their performance on two publicly available datasets and found that nnUNet achieved the best results with the simplest design of all. The limited robustness of Transformers to variability in the data is a potential reason for their weaker performance. Furthermore, nnUNet's success underscores the significant impact of preprocessing and postprocessing techniques in enhancing segmentation results, beyond a focus solely on architectural design.

Homogenous and Heterogenous Parallel Clustering: An Overview

Feb 14, 2022

Abstract: Recent advances in computer architecture and networking have opened the opportunity to parallelize clustering algorithms. This divide-and-conquer strategy often yields better results than centralized clustering, with much-improved time performance. This paper reviews key parallel clustering methods and provides insight into their strategies. The review brings together disparate attempts in parallel clustering to provide a comprehensive account of advances in this emerging field.

Pervasive Hand Gesture Recognition for Smartphones using Non-audible Sound and Deep Learning

Aug 04, 2021

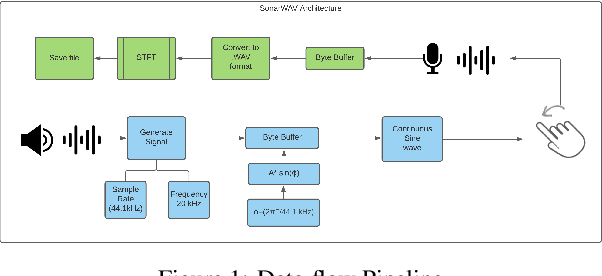

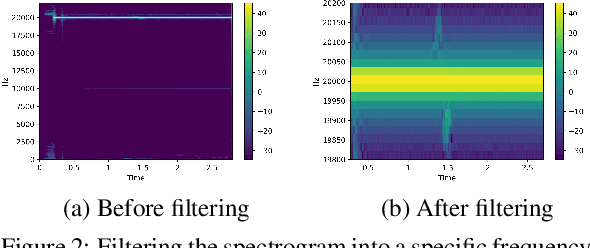

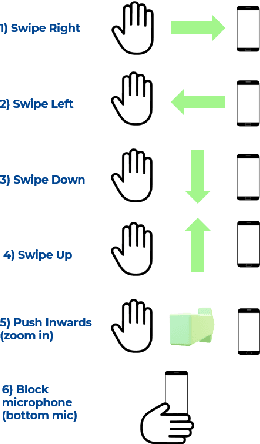

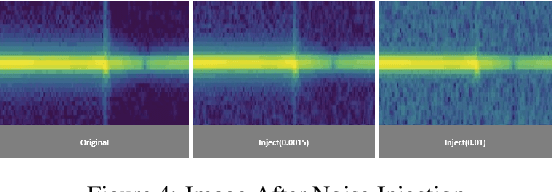

Abstract: With the rapid advancement of ubiquitous technologies, new pervasive methods have come into practice that provide innovative features and stimulate research on new human-computer interactions. This paper presents a hand gesture recognition method that utilizes the smartphone's built-in speakers and microphones. The proposed system emits an ultrasonic sonar-based signal (inaudible sound) from the smartphone's stereo speakers, which is then received by the smartphone's microphone and processed via a Convolutional Neural Network (CNN) for hand gesture recognition. Data augmentation techniques are proposed to improve the detection accuracy, and three dual-channel input fusion methods are compared. The first method merges the dual-channel audio as a single input spectrogram image. The second method adopts early fusion by concatenating the dual-channel spectrograms. The third method adopts late fusion by having two convolutional input branches, each processing one of the dual-channel spectrograms, with the outputs merged by the last layers. Our experimental results demonstrate a promising detection accuracy for the six gestures presented in our publicly available dataset, with an accuracy of 93.58% as a baseline.

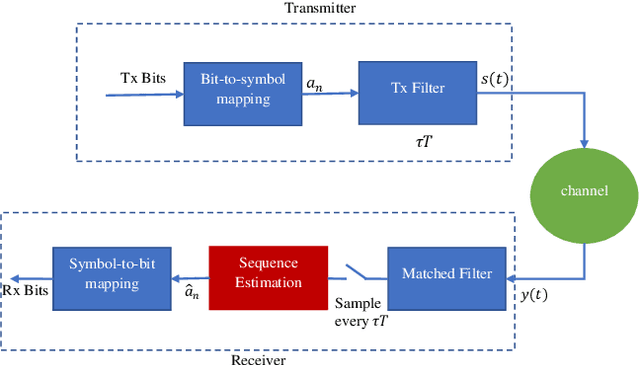

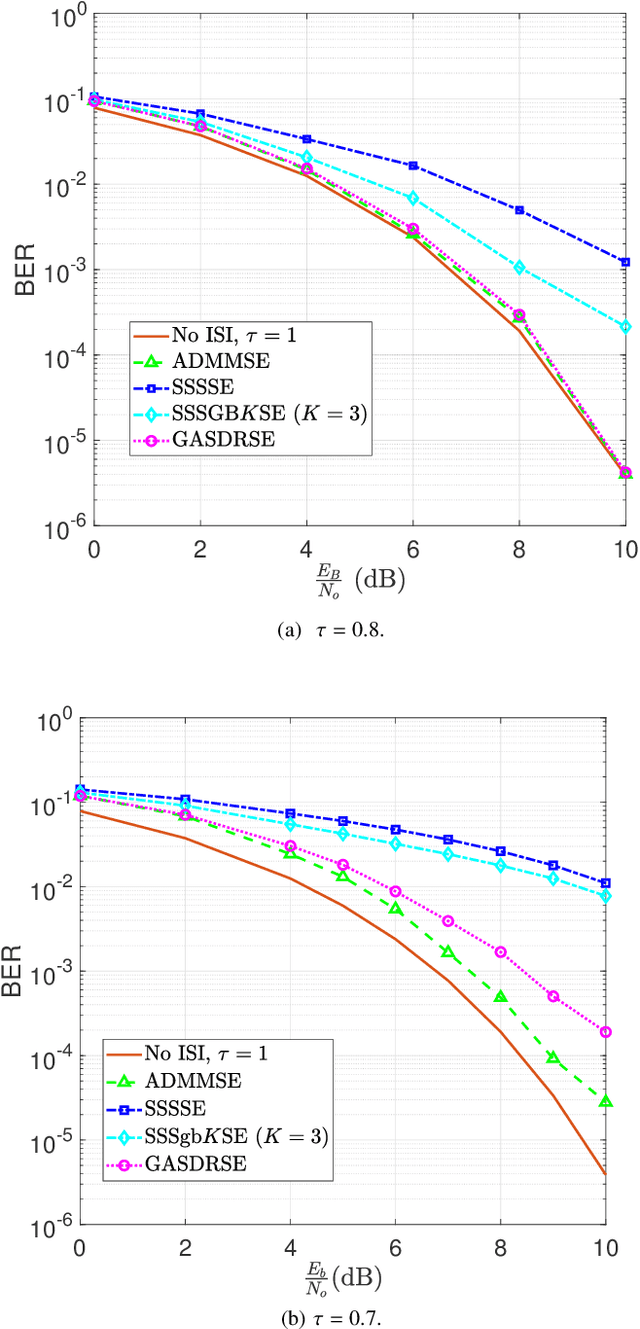

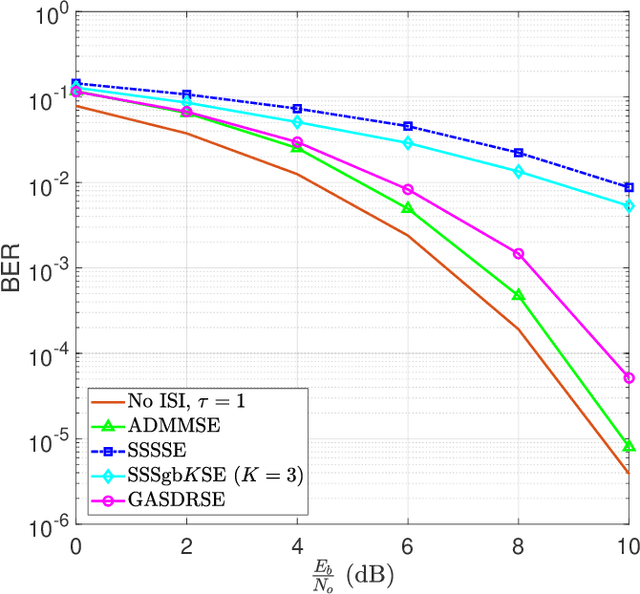

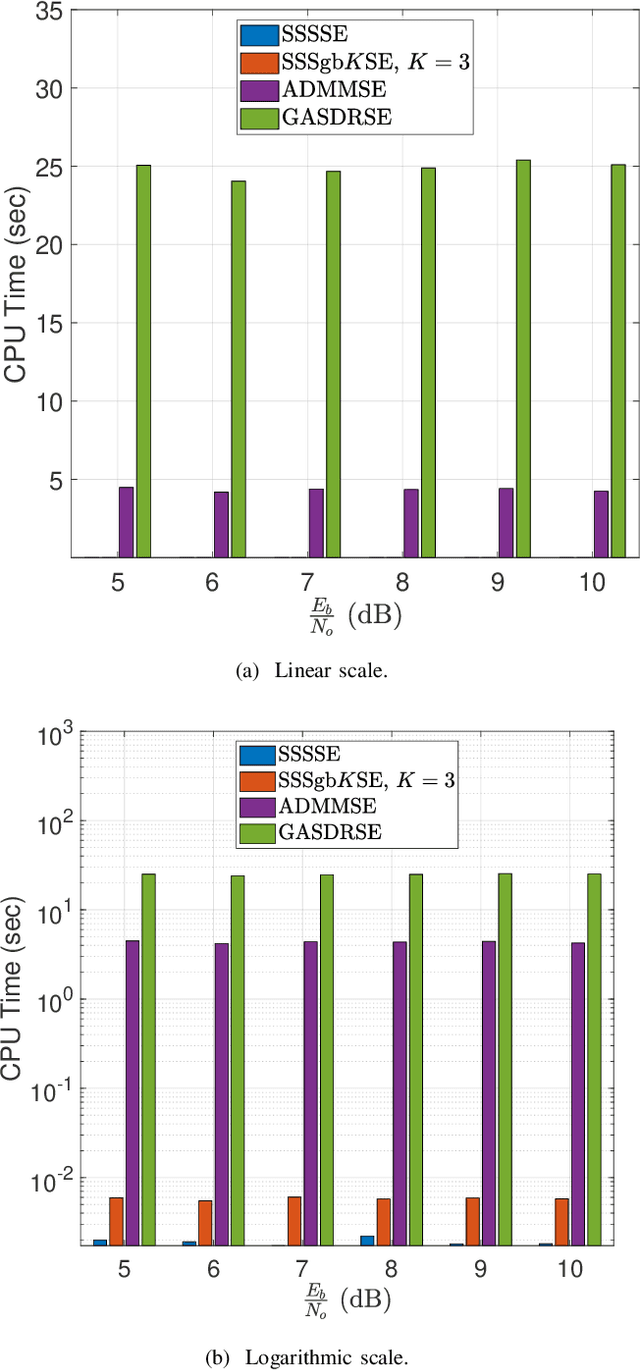

A Novel Low Complexity Faster-than-Nyquist Signaling Detector for Ultra High-Order QAM

Jul 02, 2021

Abstract: Faster-than-Nyquist (FTN) signaling is a promising non-orthogonal pulse modulation technique that can improve the spectral efficiency (SE) of next generation communication systems at the expense of higher detection complexity to remove the introduced inter-symbol interference (ISI). In this paper, we investigate the detection problem of ultra high-order quadrature-amplitude modulation (QAM) FTN signaling, where we exploit a mathematical programming technique based on the alternating directions multiplier method (ADMM). The proposed ADMM sequence estimation (ADMMSE) FTN signaling detector demonstrates an excellent trade-off between performance and computational effort, enabling, for the first time in the FTN signaling literature, successful detection and SE gains for QAM modulation orders as high as 64K (65,536). The complexity of the proposed ADMMSE detector is polynomial in the length of the transmit symbol sequence, and its sensitivity to the modulation order increases only logarithmically. Simulation results show that for 16-QAM, the proposed ADMMSE FTN signaling detector achieves SE gains comparable to those of the generalized approach semidefinite relaxation-based sequence estimation (GASDRSE) FTN signaling detector, but with a much lower computation time, as evaluated experimentally. Simulation results additionally show SE gains for modulation orders starting from 4-QAM, or quadrature phase shift keying (QPSK), up to and including 64K-QAM when compared to conventional Nyquist signaling. The very low computational effort required makes the proposed ADMMSE detector a practically promising FTN signaling detector for both low-order and ultra high-order QAM FTN signaling systems.
