Helge Janicke

A Unified Framework for Human AI Collaboration in Security Operations Centers with Trusted Autonomy

May 29, 2025

Abstract: This article presents a structured framework for Human-AI collaboration in Security Operations Centers (SOCs), integrating AI autonomy, trust calibration, and Human-in-the-loop decision making. Existing frameworks in SOCs often focus narrowly on automation, lacking systematic structures to manage human oversight, trust calibration, and scalable autonomy with AI. Many assume static or binary autonomy settings, failing to account for the varied complexity, criticality, and risk across SOC tasks that involve human-AI collaboration. To address these limitations, we propose a novel autonomy-tiered framework grounded in five levels of AI autonomy, from manual to fully autonomous, mapped to Human-in-the-Loop (HITL) roles and task-specific trust thresholds. This enables adaptive and explainable AI integration across core SOC functions, including monitoring, protection, threat detection, alert triage, and incident response. The proposed framework differentiates itself from previous research by creating formal connections between autonomy, trust, and HITL across various SOC levels, which allows for adaptive task distribution according to operational complexity and associated risks. The framework is exemplified through a simulated cyber range that features the cybersecurity AI-Avatar, a fine-tuned LLM-based SOC assistant. The AI-Avatar case study illustrates human-AI collaboration for SOC tasks, reducing alert fatigue, enhancing response coordination, and strategically calibrating trust. This research systematically presents the theoretical and practical aspects, and the feasibility, of designing next-generation cognitive SOCs that leverage AI not to replace but to enhance human decision-making.
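The mapping from autonomy levels to task-specific trust thresholds can be pictured with a minimal Python sketch. This is an illustration only, not the paper's implementation: the abstract names only the "manual" and "fully autonomous" endpoints, so the three intermediate level names, the `TASK_POLICY` table, its threshold values, and the fallback rule are all hypothetical assumptions.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    """Five autonomy levels; only the endpoints are named in the abstract."""
    MANUAL = 0
    ASSISTED = 1          # hypothetical name
    SEMI_AUTONOMOUS = 2   # hypothetical name
    SUPERVISED = 3        # hypothetical name
    FULLY_AUTONOMOUS = 4


# Hypothetical policy: task -> (highest level allowed, trust needed to reach it).
TASK_POLICY = {
    "alert_triage": (Autonomy.SUPERVISED, 0.70),
    "incident_response": (Autonomy.ASSISTED, 0.90),
}


def select_autonomy(task: str, trust: float) -> Autonomy:
    """Grant the task's ceiling only when trust meets its threshold.

    Below the threshold, fall back to a level where a human makes the
    decision (assumed here to be ASSISTED or lower).
    """
    ceiling, threshold = TASK_POLICY[task]
    if trust >= threshold:
        return ceiling
    return min(ceiling, Autonomy.ASSISTED)


if __name__ == "__main__":
    print(select_autonomy("alert_triage", 0.80).name)
    print(select_autonomy("alert_triage", 0.50).name)
```

Under these assumed values, a riskier task such as incident response keeps a human in the loop regardless of the trust score, while alert triage can be delegated further once trust is established.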

ExplainableDetector: Exploring Transformer-based Language Modeling Approach for SMS Spam Detection with Explainability Analysis

May 12, 2024

Abstract: SMS, or short messaging service, is a widely used and cost-effective communication medium that has sadly turned into a haven for unwanted messages, commonly known as SMS spam. With the rapid adoption of smartphones and Internet connectivity, SMS spam has emerged as a prevalent threat. Spammers have taken notice of the significance of SMS for mobile phone users. Consequently, with the emergence of new cybersecurity threats, the volume of SMS spam has grown significantly in recent years. The unstructured format of SMS data creates significant challenges for SMS spam detection, making it more difficult to successfully fight spam attacks in the cybersecurity domain. In this work, we employ optimized and fine-tuned transformer-based Large Language Models (LLMs) to solve the problem of spam message detection. We use a benchmark SMS spam dataset, apply several preprocessing techniques to obtain clean, noise-free data, and address the class imbalance problem using text augmentation. The overall experiment showed that RoBERTa, our optimized and fine-tuned BERT (Bidirectional Encoder Representations from Transformers) variant, achieved an accuracy of 99.84%. We also use Explainable Artificial Intelligence (XAI) techniques to calculate positive and negative coefficient scores, which help explain the fine-tuned model's behaviour in this text-based spam SMS detection task. In addition, traditional Machine Learning (ML) models were examined to compare their performance with the transformer-based models. This analysis describes how LLMs can be applied effectively to complex text-based spam data in the cybersecurity field.

Detecting Anomalies in Blockchain Transactions using Machine Learning Classifiers and Explainability Analysis

Jan 07, 2024

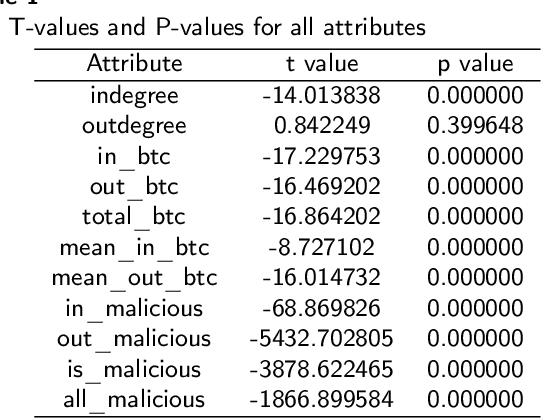

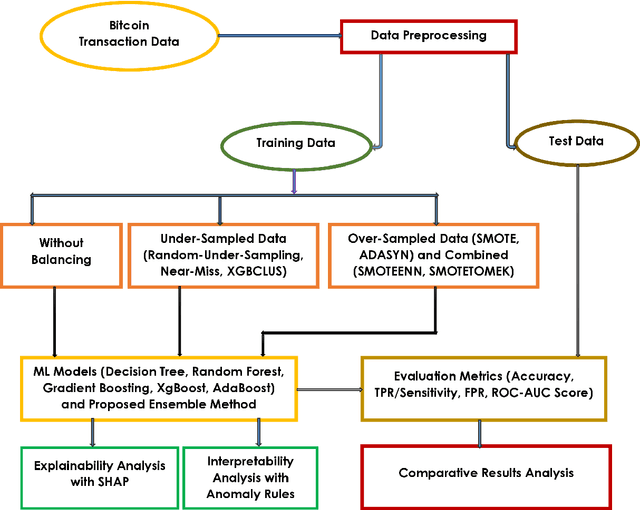

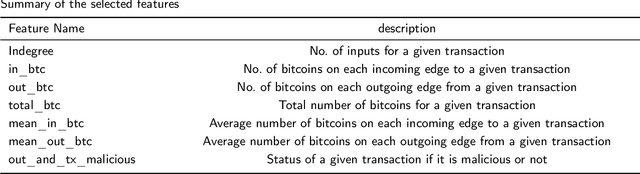

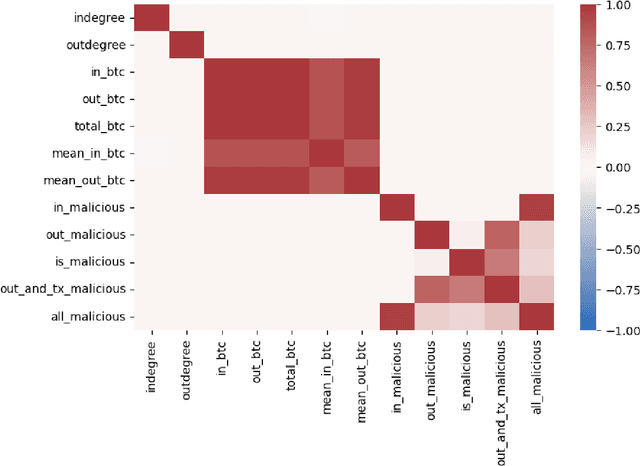

Abstract: As the use of Blockchain for digital payments continues to rise in popularity, it also becomes susceptible to various malicious attacks. Successfully detecting anomalies within Blockchain transactions is essential for bolstering trust in digital payments. However, the task of anomaly detection in Blockchain transaction data is challenging due to the infrequent occurrence of illicit transactions. Although several studies have been conducted in the field, a limitation persists: the lack of explanations for the model's predictions. This study seeks to overcome this limitation by integrating eXplainable Artificial Intelligence (XAI) techniques and anomaly rules into tree-based ensemble classifiers for detecting anomalous Bitcoin transactions. The Shapley Additive exPlanation (SHAP) method is employed to measure the contribution of each feature, and it is compatible with ensemble models. Moreover, we present rules for interpreting whether a Bitcoin transaction is anomalous or not. Additionally, we have introduced an under-sampling algorithm named XGBCLUS, designed to balance anomalous and non-anomalous transaction data. This algorithm is compared against other commonly used under-sampling and over-sampling techniques. Finally, the outcomes of various tree-based single classifiers are compared with those of stacking and voting ensemble classifiers. Our experimental results demonstrate that: (i) XGBCLUS enhances TPR and ROC-AUC scores compared to state-of-the-art under-sampling and over-sampling techniques, and (ii) our proposed ensemble classifiers outperform traditional single tree-based machine learning classifiers in terms of accuracy, TPR, and FPR scores.
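Because illicit transactions are rare, accuracy alone can look good while most anomalies are missed, which is why the abstract also reports TPR and FPR. The following Python sketch shows how these two rates are computed from binary labels. The helper name `tpr_fpr` and the toy labels are made up for illustration; they are not data or code from the paper.

```python
def tpr_fpr(y_true, y_pred):
    """Return (TPR, FPR) for binary labels where 1 marks an anomaly."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    # Guard against empty classes to avoid division by zero.
    tpr = tp / (tp + fn) if (tp + fn) else 0.0
    fpr = fp / (fp + tn) if (fp + tn) else 0.0
    return tpr, fpr


if __name__ == "__main__":
    # Toy data (illustrative): 2 anomalous and 8 normal transactions.
    y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
    y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 1, 0]
    tpr, fpr = tpr_fpr(y_true, y_pred)
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    print(f"accuracy={accuracy:.2f} TPR={tpr:.2f} FPR={fpr:.3f}")
```

In this toy case accuracy is 0.80 even though only half of the anomalies are caught (TPR 0.50), which shows why class balancing and TPR-oriented evaluation matter for imbalanced transaction data.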

Security-Enhancing Digital Twins: Characteristics, Indicators, and Future Perspectives

May 10, 2023

Abstract: The term "digital twin" (DT) has become a key theme of the cyber-physical systems (CPSs) area, while remaining vaguely defined as a virtual replica of an entity. This article identifies DT characteristics essential for enhancing CPS security and discusses indicators to evaluate them.

Security and Privacy for Artificial Intelligence: Opportunities and Challenges

Feb 09, 2021

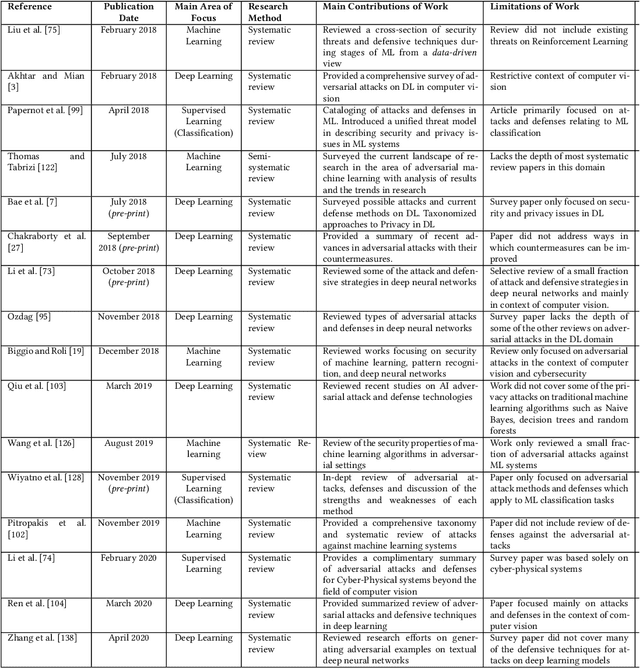

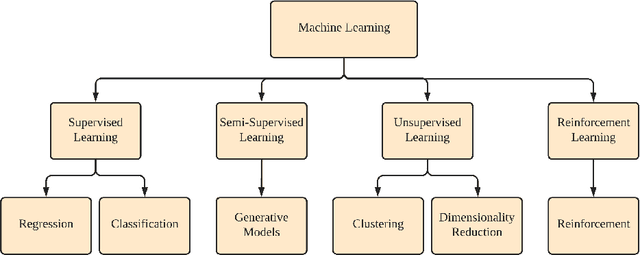

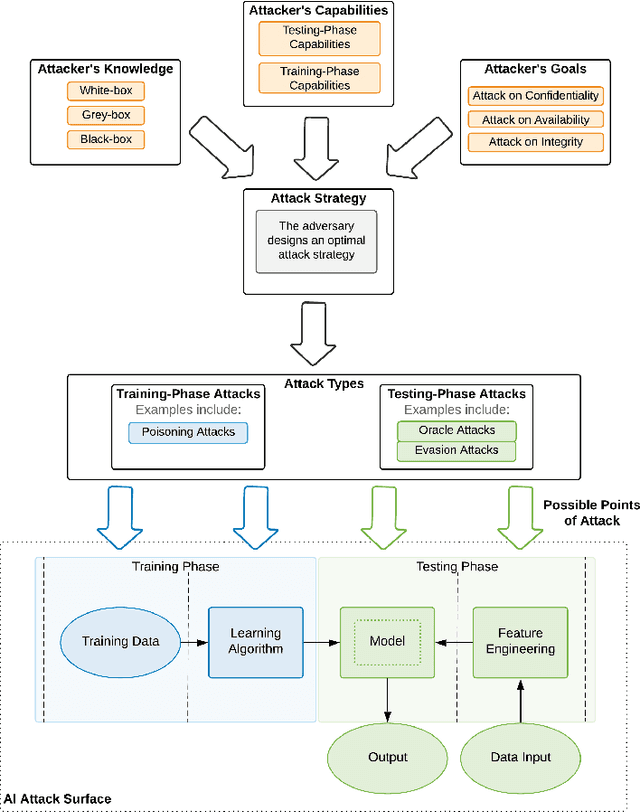

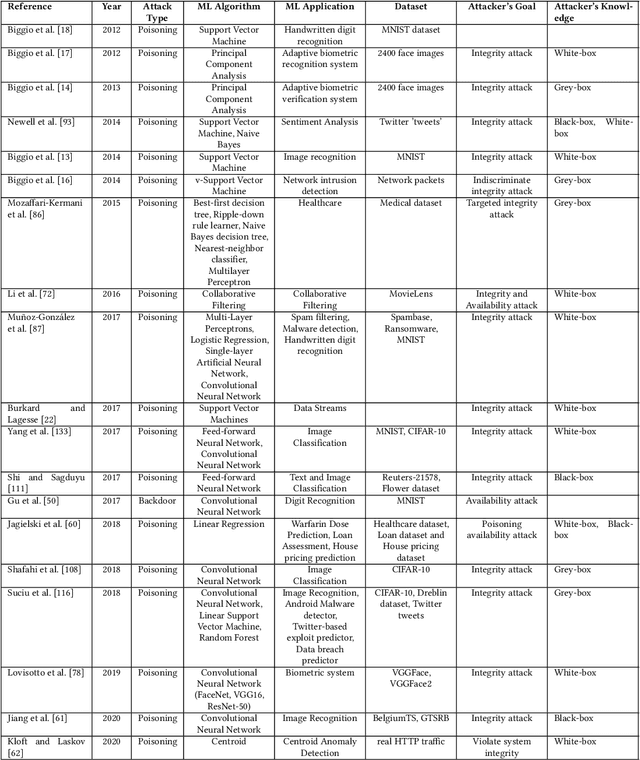

Abstract: The increased adoption of Artificial Intelligence (AI) presents an opportunity to solve many socio-economic and environmental challenges; however, this cannot happen without securing AI-enabled technologies. In recent years, most AI models have proven vulnerable to advanced and sophisticated hacking techniques. This challenge has motivated concerted research efforts into adversarial AI, with the aim of developing robust machine and deep learning models that are resilient to different types of adversarial scenarios. In this paper, we present a holistic cyber security review that demonstrates adversarial attacks against AI applications, including aspects such as adversarial knowledge and capabilities, as well as existing methods for generating adversarial examples and existing cyber defence models. We explain mathematical AI models, especially new variants of reinforcement and federated learning, to demonstrate how attack vectors would exploit vulnerabilities of AI models. We also propose a systematic framework for demonstrating attack techniques against AI applications and review several cyber defences that would protect AI applications against those attacks. We also highlight the importance of understanding adversarial goals and capabilities, especially in recent attacks against industry applications, in order to develop adaptive defences that secure AI applications. Finally, we describe the main challenges and future research directions in the domain of security and privacy of AI technologies.
