Jean-François Frigon

A Foundation Model for Massive MIMO Precoding with an Adaptive per-User Rate-Power Tradeoff

Jul 24, 2025

Abstract:Deep learning (DL) has emerged as a solution for precoding in massive multiple-input multiple-output (mMIMO) systems due to its capacity to learn the characteristics of the propagation environment. However, training such a model requires high-quality, local datasets at the deployment site, which are often difficult to collect. We propose a transformer-based foundation model for mMIMO precoding that seeks to minimize the energy consumption of the transmitter while dynamically adapting to per-user rate requirements. At equal energy consumption, zero-shot deployment of the proposed foundation model significantly outperforms zero forcing, and approaches weighted minimum mean squared error performance with 8x less complexity. To address model adaptation in data-scarce settings, we introduce a data augmentation method that finds training samples similar to the target distribution by computing the cosine similarity between the outputs of the pre-trained feature extractor. Our work enables the implementation of DL-based solutions in practice by addressing challenges of data availability and training complexity. Moreover, the ability to dynamically configure per-user rate requirements can be leveraged by higher level resource allocation and scheduling algorithms for greater control over energy efficiency, spectral efficiency and fairness.
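The similarity-based sample selection mentioned in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the scoring rule (each candidate is scored by its best cosine similarity to any target feature vector), and the toy feature vectors are all assumptions made for illustration. In practice the vectors would be the outputs of the pre-trained feature extractor.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention for this sketch: a zero vector matches nothing
    return dot / (norm_a * norm_b)


def select_similar_samples(target_features, pool_features, k):
    """Return indices of the k pool samples most similar to the target set.

    Hypothetical scoring rule: each pool sample is scored by its highest
    cosine similarity to any target feature vector.
    """
    scores = []
    for idx, feat in enumerate(pool_features):
        best = max(cosine_similarity(feat, t) for t in target_features)
        scores.append((best, idx))
    # Sort by descending score; ties are broken by the lower index.
    scores.sort(key=lambda s: (-s[0], s[1]))
    return [idx for _, idx in scores[:k]]


# Toy vectors standing in for feature-extractor outputs (assumed data).
target = [[1.0, 0.0], [0.9, 0.1]]
pool = [[0.0, 1.0], [2.0, 0.1], [-1.0, 0.0], [0.8, 0.2]]
selected = select_similar_samples(target, pool, k=2)
```

Because cosine similarity ignores vector magnitude, the pool sample `[2.0, 0.1]` ranks as close to the target even though its norm differs, which is the property that makes the metric convenient for comparing feature embeddings.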

Compression of Site-Specific Deep Neural Networks for Massive MIMO Precoding

Feb 12, 2025

Abstract:The deployment of deep learning (DL) models for precoding in massive multiple-input multiple-output (mMIMO) systems is often constrained by high computational demands and energy consumption. In this paper, we investigate the compute energy efficiency of mMIMO precoders using DL-based approaches, comparing them to conventional methods such as zero forcing and weighted minimum mean square error (WMMSE). Our energy consumption model accounts for both memory access and calculation energy within DL accelerators. We propose a framework that incorporates mixed-precision quantization-aware training and neural architecture search to reduce energy usage without compromising accuracy. Using a ray-tracing dataset covering various base station sites, we analyze how site-specific conditions affect the energy efficiency of compressed models. Our results show that deep neural network compression generates precoders with up to 35 times higher energy efficiency than WMMSE at equal performance, depending on the scenario and the desired rate. These results establish a foundation and a benchmark for the development of energy-efficient DL-based mMIMO precoders.

A Low-Complexity Plug-and-Play Deep Learning Model for Massive MIMO Precoding Across Sites

Feb 12, 2025

Abstract:Massive multiple-input multiple-output (mMIMO) technology has transformed wireless communication by enhancing spectral efficiency and network capacity. This paper proposes a novel deep learning-based mMIMO precoder to tackle the complexity challenges of existing approaches, such as weighted minimum mean square error (WMMSE), while leveraging meta-learning domain generalization and a teacher-student architecture to improve generalization across diverse communication environments. When deployed to a previously unseen site, the proposed model achieves excellent sum-rate performance while maintaining low computational complexity by avoiding matrix inversions and by using a simpler neural network structure. The model is trained and tested on a custom ray-tracing dataset composed of several base station locations. The experimental results indicate that our method effectively balances computational efficiency with high sum-rate performance while showcasing strong generalization performance in unseen environments. Furthermore, with fine-tuning, the proposed model outperforms WMMSE across all tested sites and SNR conditions while reducing complexity by at least 73x.

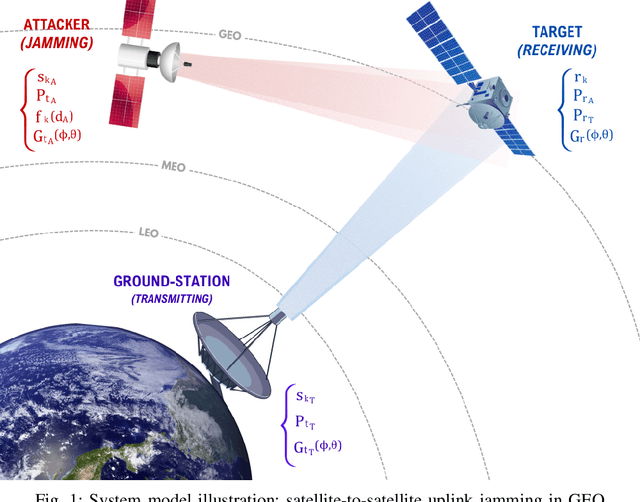

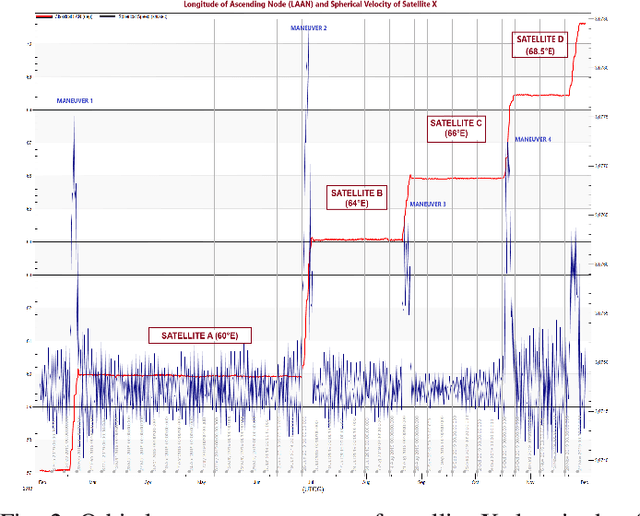

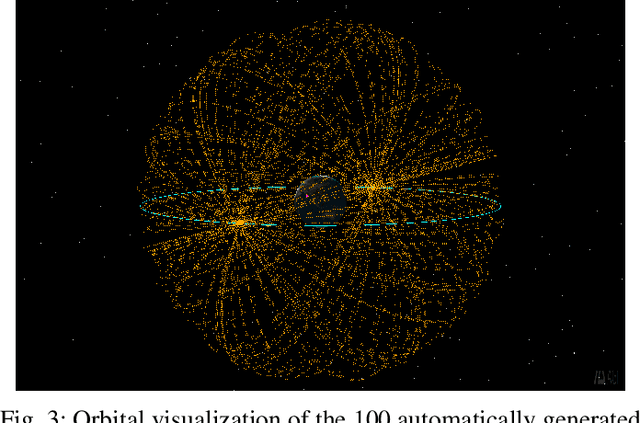

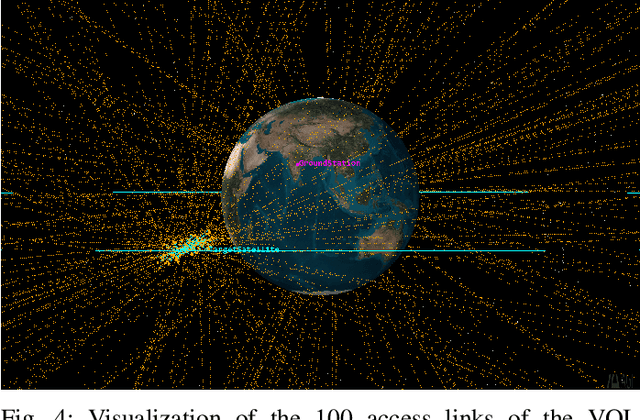

Adaptive Detection of on-Orbit Jamming for Securing GEO Satellite Links

Nov 25, 2024

Abstract:This paper introduces a scenario where a maneuverable satellite in geostationary orbit (GEO) conducts on-orbit attacks, targeting communication between a GEO satellite and a ground station, with the ability to switch between stationary and time-variant jamming modes. We propose a machine learning-based detection approach, employing the random forest algorithm with principal component analysis (PCA) to enhance detection accuracy in the stationary model. At the same time, an adaptive threshold-based technique is implemented for the time-variant model to detect dynamic jamming events effectively. Our methodology emphasizes the need to integrate physical constraints from orbital dynamics to improve model robustness and detection accuracy. Simulation results highlight the effectiveness of PCA in enhancing the performance of the stationary model, while the adaptive thresholding method achieves high accuracy in detecting jamming in the time-variant scenario. This approach provides a robust solution for mitigating the evolving threats to satellite communication in GEO environments.

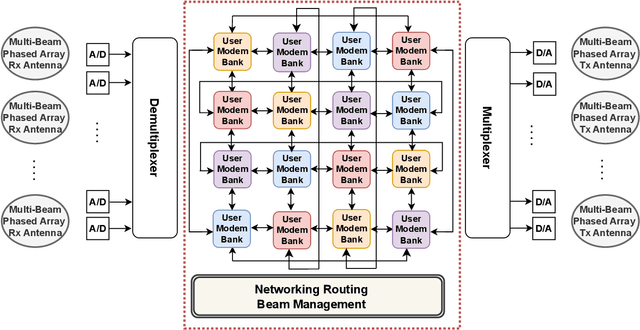

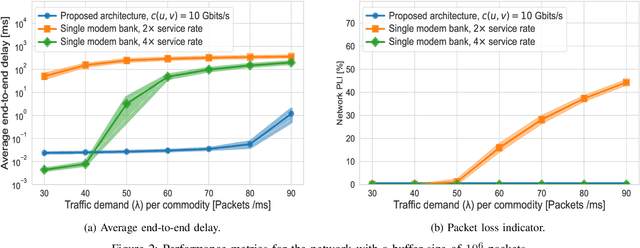

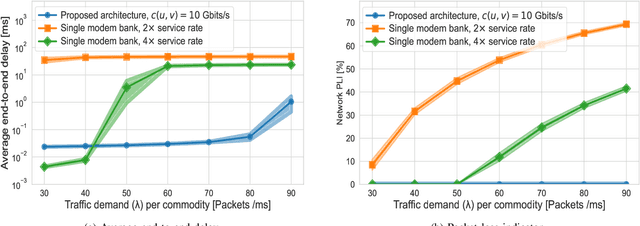

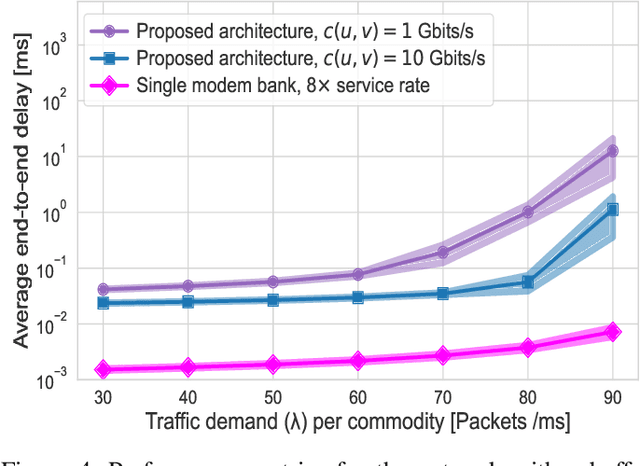

A Scalable Architecture for Future Regenerative Satellite Payloads

Jul 08, 2024

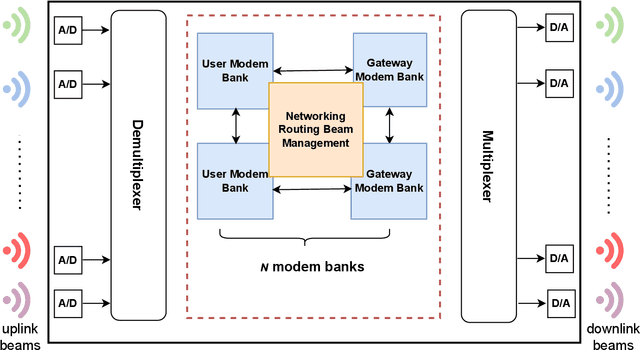

Abstract:This paper addresses the limitations of current satellite payload architectures, which are predominantly hardware-driven and lack the flexibility to adapt to increasing data demands and uneven traffic. To overcome these challenges, we present a novel architecture for future regenerative and programmable satellite payloads and utilize interconnected modem banks to promote higher scalability and flexibility. We formulate an optimization problem to efficiently manage traffic among these modem banks and balance the load. Additionally, we provide comparative numerical simulation results, considering end-to-end delay and packet loss analysis. The results illustrate that our proposed architecture maintains lower delays and packet loss even with higher traffic demands and smaller buffer sizes.

SAGE-HB: Swift Adaptation and Generalization in Massive MIMO Hybrid Beamforming

Jan 19, 2024

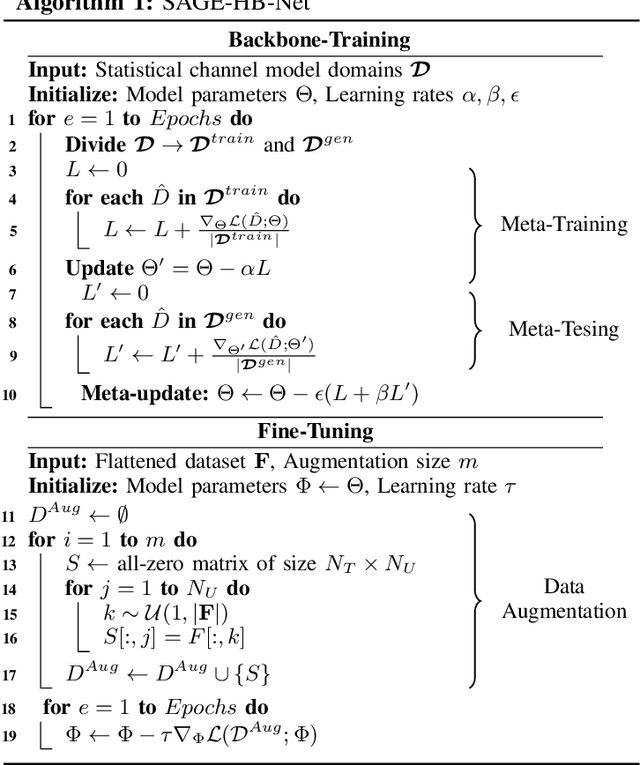

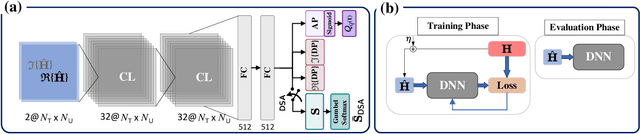

Abstract:Deep learning (DL)-based solutions have emerged as promising candidates for beamforming in massive Multiple-Input Multiple-Output (mMIMO) systems. Nevertheless, it remains challenging to seamlessly adapt these solutions to practical deployment scenarios, typically necessitating extensive data for fine-tuning while grappling with domain adaptation and generalization issues. In response, we propose a novel approach combining Meta-Learning Domain Generalization (MLDG) with novel data augmentation techniques during fine-tuning. This approach not only accelerates adaptation to new channel environments but also significantly reduces the data requirements for fine-tuning, thereby enhancing the practicality and efficiency of DL-based mMIMO systems. The proposed approach is validated by simulating the performance of a backbone model when deployed in a new channel environment, and with different antenna configurations, path loss, and base station height parameters. Our proposed approach demonstrates superior zero-shot performance compared to existing methods and also achieves near-optimal performance with significantly fewer fine-tuning data samples.

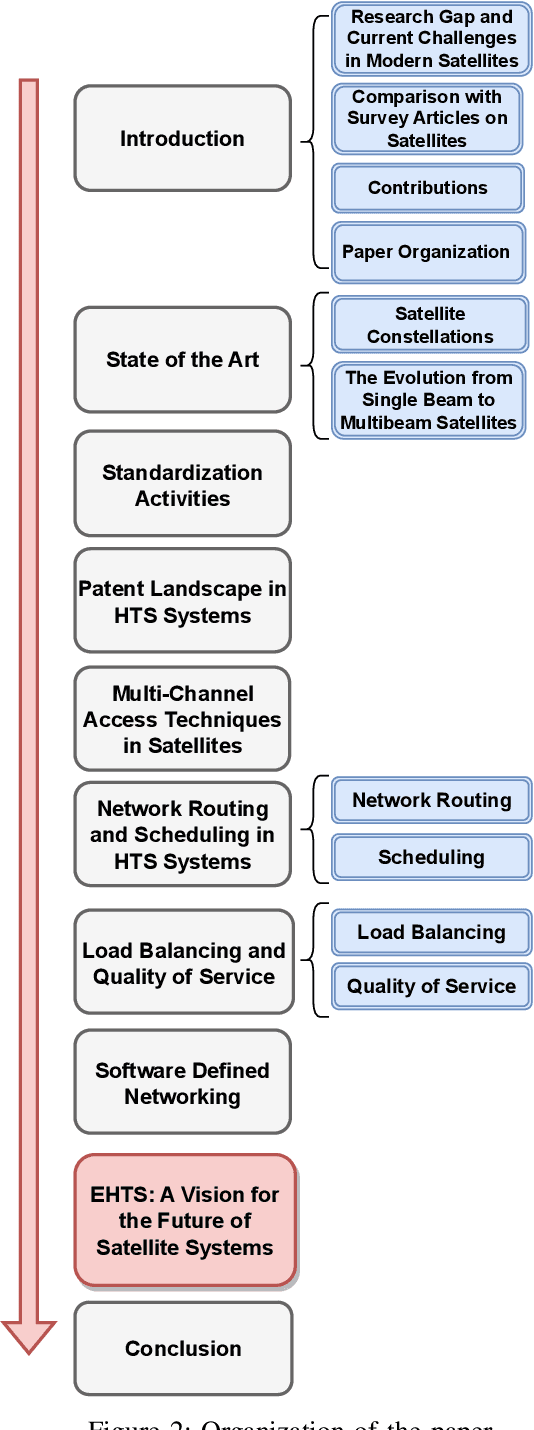

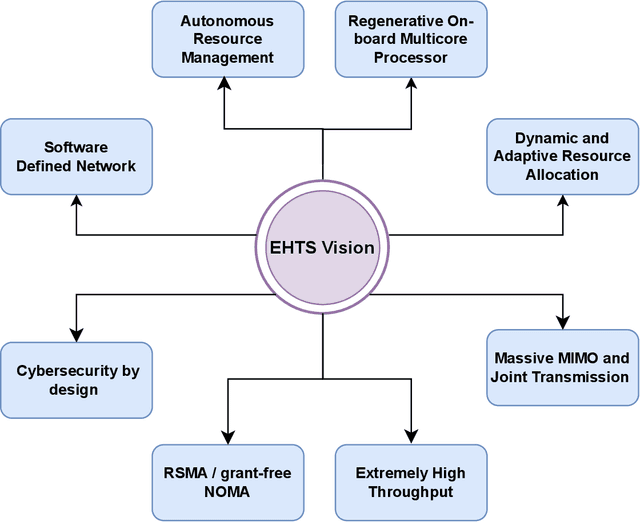

Evolution of High Throughput Satellite Systems: Vision, Requirements, and Key Technologies

Oct 06, 2023

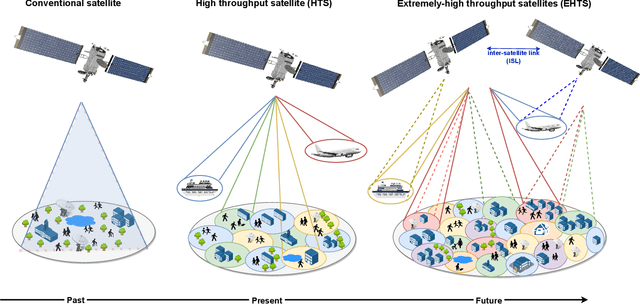

Abstract:High throughput satellites (HTS), with their digital payload technology, are expected to play a key role as enablers of the upcoming 6G networks. HTS are mainly designed to provide higher data rates and capacities. Fueled by technological advancements including beamforming, advanced modulation techniques, reconfigurable phased array technologies, and electronically steerable antennas, HTS have emerged as a fundamental component of future network generations. This paper offers a comprehensive state-of-the-art review of HTS systems, with a focus on standardization, patents, channel multiple access techniques, routing, load balancing, and the role of software-defined networking (SDN). In addition, we provide a vision for next-generation satellite systems, which we call extremely-HTS (EHTS), toward autonomous satellites, supported by the main requirements and key technologies expected for these systems. The EHTS system will be designed such that it maximizes spectrum reuse and data rates, and flexibly steers the capacity to satisfy user demand. We introduce a novel architecture for future regenerative payloads while summarizing the challenges imposed by this architecture.

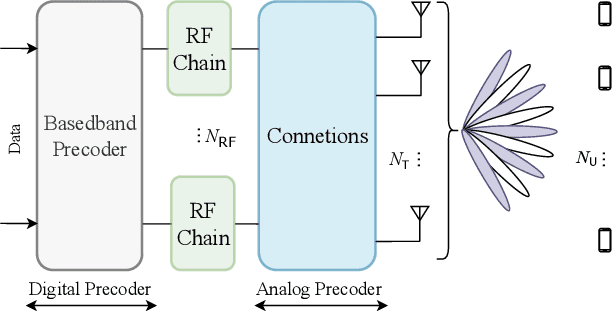

Learning Energy-Efficient Hardware Configurations for Massive MIMO Beamforming

Aug 11, 2023

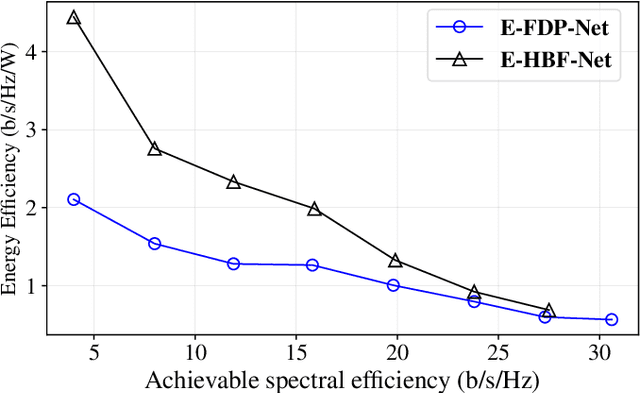

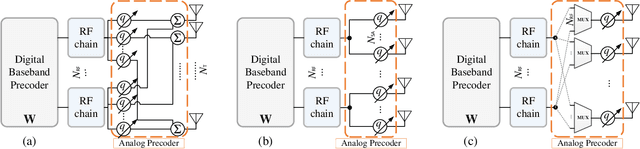

Abstract:Hybrid beamforming (HBF) and antenna selection are promising techniques for improving the energy efficiency (EE) of massive multiple-input multiple-output (mMIMO) systems. However, the transmitter architecture may contain several parameters that need to be optimized, such as the power allocated to the antennas and the connections between the antennas and the radio frequency chains. Therefore, finding the optimal transmitter architecture requires solving a non-convex mixed integer problem in a large search space. In this paper, we consider the problem of maximizing the EE of fully digital precoder (FDP) and hybrid beamforming (HBF) transmitters. First, we propose an energy model for different beamforming structures. Then, based on the proposed energy model, we develop an unsupervised deep learning method to maximize the EE by designing the transmitter configuration for FDP and HBF. The proposed deep neural networks can provide different trade-offs between spectral efficiency and energy consumption while adapting to different numbers of active users. Finally, to ensure that the proposed method can be implemented in practice, we investigate the ability of the model to be trained exclusively using imperfect channel state information (CSI), both for the input to the deep learning model and for the calculation of the loss function. Simulation results show that the proposed solutions can outperform conventional methods in terms of EE while being trained with imperfect CSI. Furthermore, we show that the proposed solutions are less complex and more robust to noise than conventional methods.

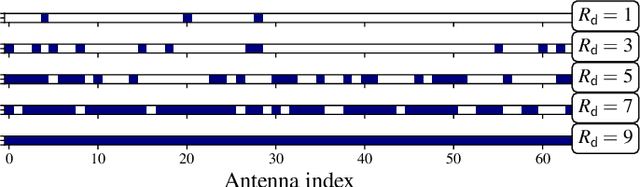

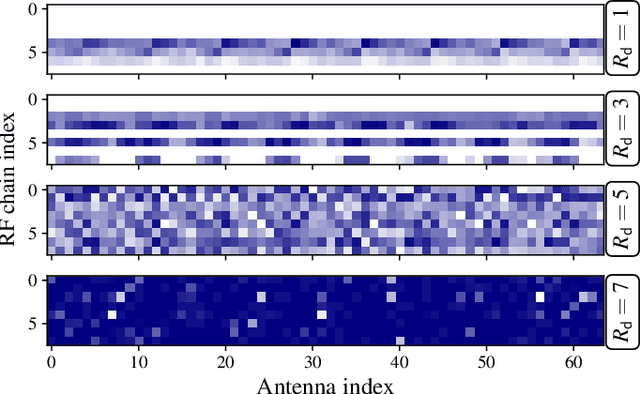

Flexible Unsupervised Learning for Massive MIMO Subarray Hybrid Beamforming

Aug 10, 2022

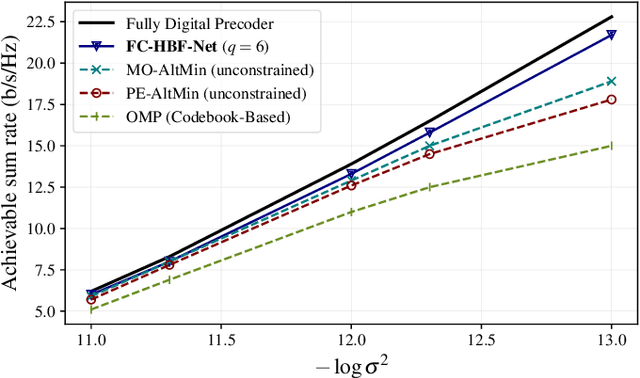

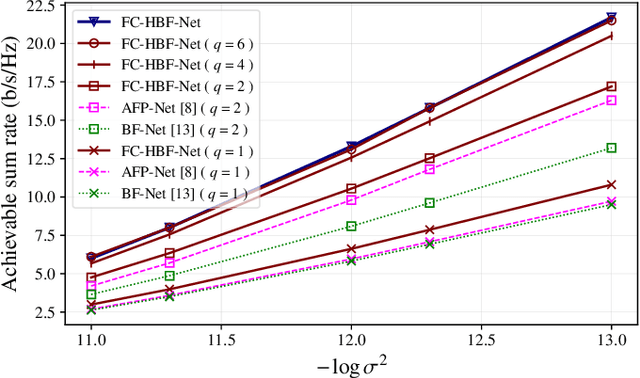

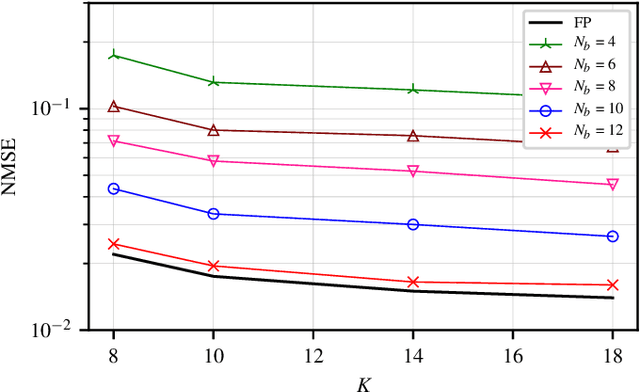

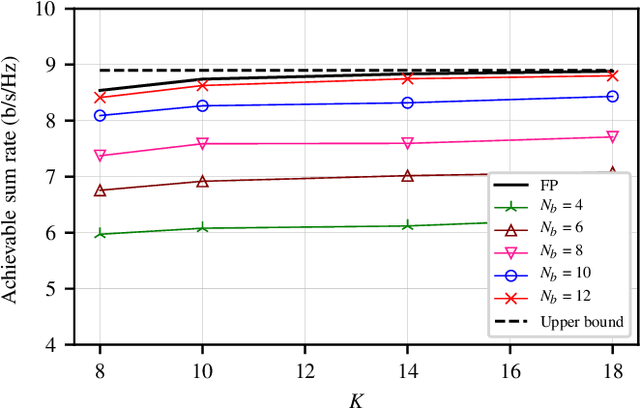

Abstract:Hybrid beamforming is a promising technology to improve the energy efficiency of massive MIMO systems. In particular, subarray hybrid beamforming can further decrease power consumption by reducing the number of phase-shifters. However, designing the hybrid beamforming vectors is a complex task due to the discrete nature of the subarray connections and the phase-shift amounts. Finding the optimal connections between RF chains and antennas requires solving a non-convex problem in a large search space. In addition, conventional solutions assume that perfect CSI is available, which is not the case in practical systems. Therefore, we propose a novel unsupervised learning approach to design the hybrid beamforming for any subarray structure while supporting quantized phase-shifters and noisy CSI. One major feature of the proposed architecture is that no beamforming codebook is required, and the neural network is trained to take into account the phase-shifter quantization. Simulation results show that the proposed deep learning solutions can achieve higher sum-rates than existing methods.
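To make the notion of quantized phase-shifters concrete, the sketch below snaps each analog beamforming weight to the nearest of 2^b uniformly spaced phases while keeping unit modulus. This is only an illustration of the hardware constraint, under the assumption of uniform b-bit quantization; it is not the paper's method, which trains the neural network to account for quantization. The function names are hypothetical.

```python
import cmath
import math


def quantize_phase(phase, bits):
    """Map a phase in radians to the nearest of 2**bits uniform levels in [0, 2*pi)."""
    levels = 2 ** bits
    step = 2 * math.pi / levels
    # Python's modulo keeps the index non-negative for negative phases.
    index = round(phase / step) % levels
    return index * step


def quantize_beamformer(weights, bits):
    """Replace each complex weight by a unit-modulus entry with quantized phase."""
    return [cmath.exp(1j * quantize_phase(cmath.phase(w), bits)) for w in weights]


# Assumed example: three analog weights quantized with 2-bit phase-shifters,
# so the allowed phases are 0, pi/2, pi, and 3*pi/2.
analog_weights = [cmath.exp(0.1j), cmath.exp(-1.5j), 0.5 * cmath.exp(3.0j)]
quantized = quantize_beamformer(analog_weights, bits=2)
```

With few bits the phase error can reach half a quantization step, which is one reason a design that is aware of the quantization can outperform one that quantizes an unconstrained solution after the fact.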

RSSI-Based Hybrid Beamforming Design with Deep Learning

Mar 12, 2020

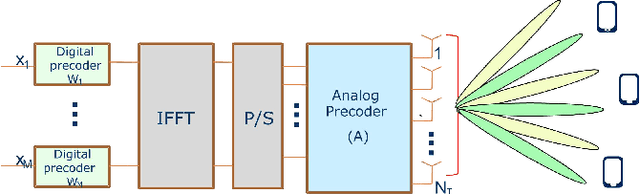

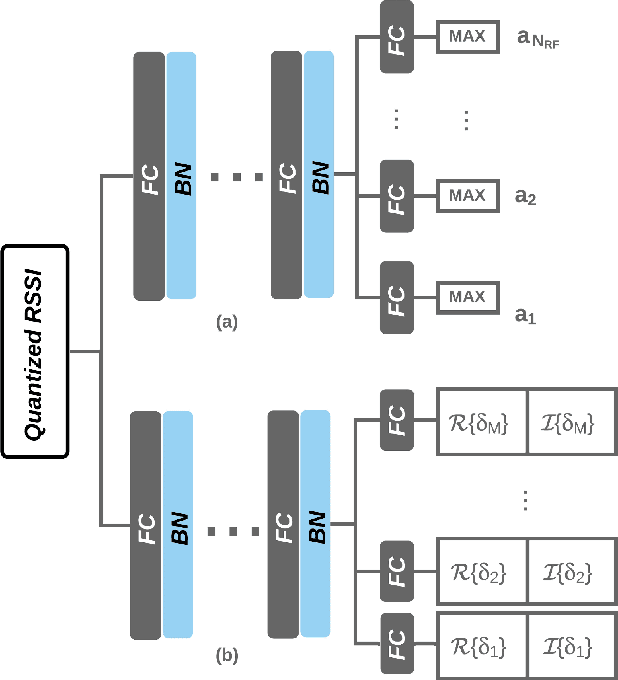

Abstract:Hybrid beamforming is a promising technology for 5G millimetre-wave communications. However, its implementation is challenging in practical multiple-input multiple-output (MIMO) systems because non-convex optimization problems have to be solved, introducing additional latency and energy consumption. In addition, the channel-state information (CSI) must be either estimated from pilot signals or fed back through dedicated channels, introducing a large signaling overhead. In this paper, a hybrid precoder is designed based only on received signal strength indicator (RSSI) feedback from each user. A deep learning method is proposed to perform the associated optimization with reasonable complexity. Results demonstrate that the obtained sum-rates are very close to those obtained with optimal but complex full-CSI solutions. Finally, the proposed solution greatly increases the spectral efficiency of the system compared to existing techniques, as minimal CSI feedback is required.
