Gunes Karabulut Kurt

Burst Aware Forecasting of User Traffic Demand in LEO Satellite Networks

Jan 20, 2026

Abstract:In Low Earth Orbit (LEO) satellite networks, Beam Hopping (BH) technology enables the efficient utilization of limited radio resources by adapting to varying user demands and link conditions. Effective BH planning requires prior knowledge of upcoming traffic at the time of scheduling, making forecasting an important sub-task. Forecasting becomes particularly critical under heavy load conditions where an unexpected demand burst combined with link degradation may cause buffer overflows and packet loss. To address this challenge, we propose a burst-aware forecasting solution. This challenge may arise in a wide range of wireless networks; therefore, the proposed solution is broadly applicable to settings characterized by bursty traffic patterns where accurate demand forecasting is essential. Our approach introduces three key enhancements to a transformer architecture: (i) a distance-from-the-last-burst embedding to capture burst proximity, (ii) two additional linear layers in the decoder to forecast both upcoming bursts and their relative impact, and (iii) the use of an asymmetric cost function during model training to better capture burst dynamics. Empirical evaluations in an Earth-fixed cell under a high-traffic demand scenario demonstrate that the proposed model reduces prediction error by up to 94% at a one-step horizon and maintains the ability to accurately capture bursts even near the end of longer prediction horizons, according to the Mean Square Error (MSE) metric.
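The abstract above does not specify the form of the asymmetric cost function in item (iii). As a rough sketch only, one common choice weights under-forecasts (actual demand above the forecast, as happens when a burst is missed) more heavily than over-forecasts. The function name `asymmetric_mse` and the parameter `under_weight` are illustrative assumptions, not the authors' definition.

```python
def asymmetric_mse(y_true, y_pred, under_weight=4.0):
    """Squared-error loss that penalizes under-forecasts more than over-forecasts.

    Hypothetical illustration: `under_weight` > 1 scales the squared error
    whenever the true demand exceeds the forecast.
    """
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for actual, forecast in zip(y_true, y_pred):
        error = actual - forecast
        # error > 0 means the forecast was too low (a missed burst).
        weight = under_weight if error > 0 else 1.0
        total += weight * error * error
    return total / len(y_true)
```

With the default weight, missing a burst by 2 units costs four times as much as overshooting by the same amount, which pushes a model trained on this loss toward forecasting bursts rather than smoothing them away.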

Modeling the Doppler Shift in Cislunar Environment with Gaussian Mixture Models

Sep 08, 2025

Abstract:This study investigates the characterization of the RF-based Doppler shift distribution of the Lunar South Pole (LSP) based inter-satellite link (ISL) at varying inclinations. The Doppler shift is determined and analyzed in parts per million (ppm), as this unit is independent of the carrier frequency. Because the duration of the relative velocity states is unknown, the Gaussian Mixture Model (GMM) is found to be the best-fitting distribution for the Doppler shift of ISLs at $1^\circ$ inclination intervals with respect to a predetermined satellite. Goodness-of-fit is investigated and quantified with the Kullback-Leibler (KL) divergence and weighted mean relative difference (WMRD) error metrics. Simulation results show that ISL Doppler shifts reach up to $\pm1.89$ ppm as the inclination of the other orbit deviates further from the reference orbit, inclining $80^\circ$. Regarding the error measurements of GMM fitting, the WMRD and KL divergence metrics for ISL take values up to 0.6575 and 2.2963, respectively.
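To illustrate why expressing the Doppler shift in ppm removes the dependence on carrier frequency, the sketch below uses the first-order (non-relativistic) relation, shift/carrier = radial velocity / c. The function names and the 2 GHz carrier in the usage note are illustrative assumptions and do not come from the paper.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # exact, by SI definition


def doppler_shift_ppm(radial_velocity_m_s):
    """First-order Doppler shift in parts per million.

    The result depends only on the relative radial velocity, not on the
    carrier frequency. The sign follows the sign of the input velocity.
    """
    return radial_velocity_m_s / SPEED_OF_LIGHT_M_S * 1e6


def ppm_to_hz(shift_ppm, carrier_hz):
    """Convert a ppm shift back to an absolute shift for a chosen carrier."""
    return shift_ppm * 1e-6 * carrier_hz
```

Under this approximation, the reported 1.89 ppm corresponds to a radial velocity of roughly 567 m/s, and `ppm_to_hz(1.89, 2.0e9)` gives about 3.78 kHz for a hypothetical 2 GHz carrier.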

Space Shift Keying-Enabled ISAC for Efficient Debris Detection and Communication in LEO Satellite Networks

Jul 17, 2025

Abstract:The proliferation of space debris in low Earth orbit (LEO) presents critical challenges for orbital safety, particularly for satellite constellations. Integrated sensing and communication (ISAC) systems provide a promising dual function solution by enabling both environmental sensing and data communication. This study explores the use of space shift keying (SSK) modulation within ISAC frameworks, evaluating its performance when combined with sinusoidal and chirp radar waveforms. SSK is particularly attractive due to its low hardware complexity and robust communication performance. Our results demonstrate that both waveforms achieve comparable bit error rate (BER) performance under SSK, validating its effectiveness for ISAC applications. However, waveform selection significantly affects sensing capability: while the sinusoidal waveform supports simpler implementation, its high ambiguity limits range detection. In contrast, the chirp waveform enables range estimation and provides a modest improvement in velocity detection accuracy. These findings highlight the strength of SSK as a modulation scheme for ISAC and emphasize the importance of selecting appropriate waveforms to optimize sensing accuracy without compromising communication performance. This insight supports the design of efficient and scalable ISAC systems for space applications, particularly in the context of orbital debris monitoring.

Green Satellite Networks Using Segment Routing and Software-Defined Networking

Apr 29, 2025

Abstract:This paper presents a comprehensive evaluation of network performance in software defined networking (SDN)-based low Earth orbit (LEO) satellite networks, focusing on the Telesat Lightspeed constellation. We propose a green traffic engineering (TE) approach leveraging segment routing IPv6 (SRv6) to enhance energy efficiency. Through simulations, we analyze the impact of SRv6, multi-protocol label switching (MPLS), IPv4, and IPv6 with open shortest path first (OSPF) on key network performance metrics, including peak and average CPU usage, memory consumption, packet delivery rate (PDR), and packet overhead under varying traffic loads. Results show that the proposed green TE approach using SRv6 achieves notable energy efficiency, maintaining lower CPU usage and high PDR compared to traditional protocols. While SRv6 and MPLS introduce slightly higher memory usage and overhead due to their advanced configurations, these trade-offs remain manageable. Our findings highlight SRv6 with green TE as a promising solution for optimizing energy efficiency in LEO satellite networks, contributing to the development of more sustainable and efficient satellite communications.

Multi-Orbiter Continuous Lunar Beaming

Apr 15, 2025

Abstract:In this work, free-space optics-based continuous wireless power transmission (WPT) between multiple low lunar orbit (LLO) satellites and a solar panel on a lunar rover located at the lunar south pole is investigated based on the time-varying harvested power and overall system efficiency metrics. The performances are compared between a solar panel with tracking ability and a fixed solar panel that induces \textit{the cosine effect} due to the time-dependent angle of incidence (AoI). In our work, the Systems Tool Kit (STK) high-precision orbit propagator, which calculates the ephemeris data precisely, is utilized. Interestingly, orbiter deployments in constellations change significantly during a Moon revolution; thus, short-duration simulations cannot be used straightforwardly. Many satellite configurations are assessed to find a Cislunar constellation that establishes a continuous line-of-sight (LoS) between the solar panel and at least a single LLO satellite. It is found that 40-satellite schemes enable the establishment of a continuous WPT system model. Besides, a satellite selection method (SSM) is introduced so that only the best LoS link among multiple simultaneous links from multiple satellites will be active for optimum efficiency. Our benchmark system of a 40-satellite quadruple orbit scheme is compared with 30-satellite and single-satellite schemes based on the average harvested powers and overall system efficiencies over 27.3 days, so that the trade-off options can be assessed across the multiple Cislunar models. The outcomes show that the average system efficiencies of single, 30-satellite, and 40-satellite schemes are 2.84%, 32.33%, and 33.29%, respectively, for the tracking panel and 0.97%, 18.33%, and 20.44%, respectively, for the fixed solar panel case.
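The cosine effect mentioned in the abstract above can be sketched as follows: for a fixed panel, the power intercepted from a beam scales with the cosine of the angle of incidence, while an ideally tracking panel keeps that angle at zero. This is a simplified geometric illustration, not the paper's full link model; the function name and parameters are assumptions.

```python
import math


def fixed_panel_power(normal_incidence_power_w, aoi_deg):
    """Power intercepted by a fixed panel at a given angle of incidence.

    `normal_incidence_power_w` is the power the panel would collect with the
    beam perpendicular to its surface (hypothetical input). Angles of 90
    degrees or more mean the beam does not reach the front face, so the
    result is clamped to zero.
    """
    cos_aoi = math.cos(math.radians(aoi_deg))
    return normal_incidence_power_w * max(0.0, cos_aoi)
```

For example, at a 60 degree angle of incidence a fixed panel collects about half of what a tracking panel would, which is consistent in direction with the lower efficiencies reported for the fixed panel case.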

Hybrid FSO and RF Lunar Wireless Power Transfer

Mar 26, 2025

Abstract:This study focuses on the feasibility analyses of the hybrid FSO and RF-based WPT system used in the realistic Cislunar environment, which is established by using STK HPOP software in which many external forces are incorporated. In our proposed multi-hop scheme, a solar-powered satellite (SPS) beams the laser power to the low lunar orbit (LLO) satellite in the first hop; the harvested power is then used as relay power for RF-based WPT to two critical lunar regions, the lunar south pole (LSP) ($0^\circ$E, $90^\circ$S) and Malapert Mountain ($0^\circ$E, $86^\circ$S), owing to the multi-point coverage feature of RF systems. The end-to-end system is analyzed for two cases: i) perfect alignment, and ii) misalignment fading due to the random mechanical vibrations in the optical inter-satellite link. It is found that the harvested power is maximized when the distance between the SPS and LLO satellite is minimized, and it is calculated as 331.94 kW; however, when the random misalignment fading is considered, the mean of the harvested power reduces to 309.49 kW for the same distance. In the next hop, the power harvested by the solar array on the LLO satellite is consumed entirely as the relay power. Identical parabolic antennas are considered in the RF-based WPT system between the LLO satellite and the LSP, which utilizes a full-tracking module, and between the LLO satellite and the Malapert Mountain region, which uses a half-tracking module that executes the tracking on the receiver dish only. In the perfectly aligned hybrid WPT system, maximum harvested powers of 19.80 W and 573.7 mW are obtained at the LSP and Malapert Mountain, respectively. On the other hand, when the misalignment fading in the end-to-end system is considered, the mean of the maximum harvested powers degrades to 18.41 W and 534.4 mW for the former and latter hybrid WPT links, respectively.

Kise-Manitow's Hand in Space: Securing Communication and Connections in Space

Feb 13, 2025

Abstract:The increasing complexity of space systems, coupled with their critical operational roles, demands a robust, scalable, and sustainable security framework. This paper presents a novel system-of-systems approach for the upcoming Lunar Gateway. We demonstrate the application of the secure-by-component approach to the two earliest deployed systems in the Gateway, emphasizing critical security controls both internally and for external communication and connections. Additionally, we present a phased approach for the integration of Canadarm3, addressing the unique security challenges that arise from both inter-system interactions and the arm's autonomous capabilities.

Adaptive Detection of on-Orbit Jamming for Securing GEO Satellite Links

Nov 25, 2024

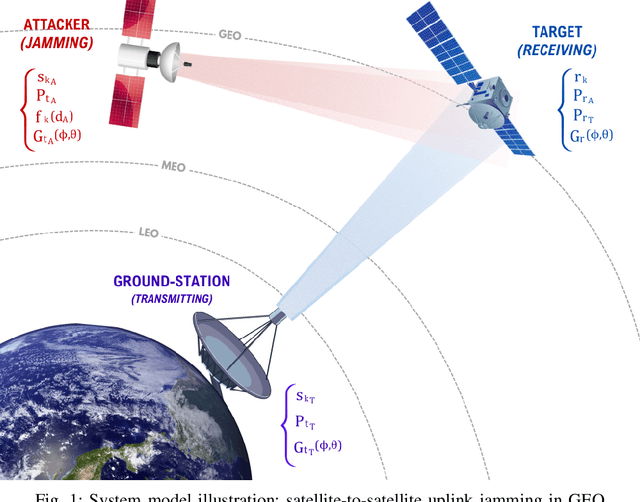

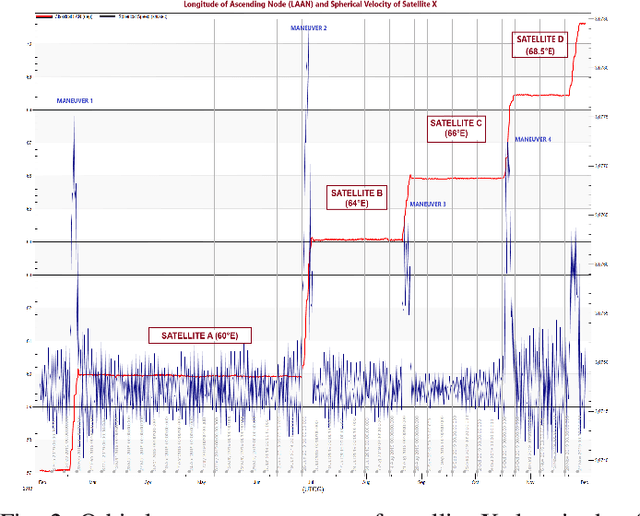

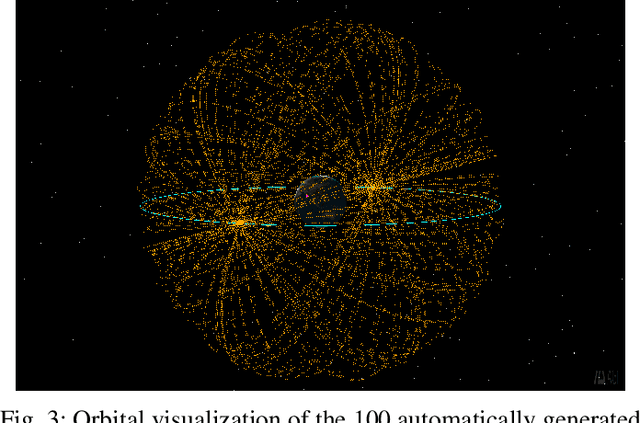

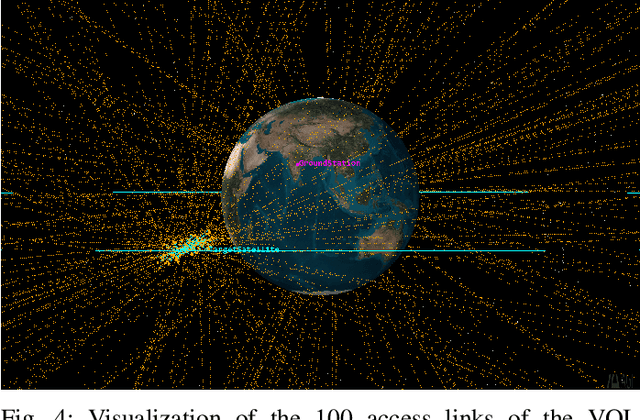

Abstract:This paper introduces a scenario where a maneuverable satellite in geostationary orbit (GEO) conducts on-orbit attacks, targeting communication between a GEO satellite and a ground station, with the ability to switch between stationary and time-variant jamming modes. We propose a machine learning-based detection approach, employing the random forest algorithm with principal component analysis (PCA) to enhance detection accuracy in the stationary model. At the same time, an adaptive threshold-based technique is implemented for the time-variant model to detect dynamic jamming events effectively. Our methodology emphasizes the need to use orbital dynamics, integrating physical constraints from satellite dynamics, to improve model robustness and detection accuracy. Simulation results highlight the effectiveness of PCA in enhancing the performance of the stationary model, while the adaptive thresholding method achieves high accuracy in detecting jamming in the time-variant scenario. This approach provides a robust solution for mitigating the evolving threats to satellite communication in GEO environments.
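The abstract above does not describe how its adaptive threshold is computed. As a generic illustration of the idea only, the sketch below flags a sample when it exceeds the mean of a trailing window by `k` standard deviations, so the threshold follows slow changes in the measured level. The function name, the window length, and `k` are all assumptions rather than the authors' method.

```python
from statistics import mean, pstdev


def adaptive_threshold_flags(samples, window=5, k=3.0):
    """Flag samples that exceed a threshold derived from recent history.

    Hypothetical sketch: the threshold for sample i is
    mean(previous `window` samples) + k * their population std deviation.
    Samples without a full window of history are never flagged.
    """
    flags = []
    for i, value in enumerate(samples):
        history = samples[max(0, i - window):i]
        if len(history) < window:
            flags.append(False)
            continue
        threshold = mean(history) + k * pstdev(history)
        flags.append(value > threshold)
    return flags
```

For instance, `adaptive_threshold_flags([1.0, 1.1, 0.9, 1.0, 1.0, 5.0, 1.0])` flags only the sixth sample. One limitation of this simple form is that a flagged sample still enters the history window and temporarily raises the threshold for the samples that follow.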

Securing Satellite Link Segment: A Secure-by-Component Design

Nov 19, 2024

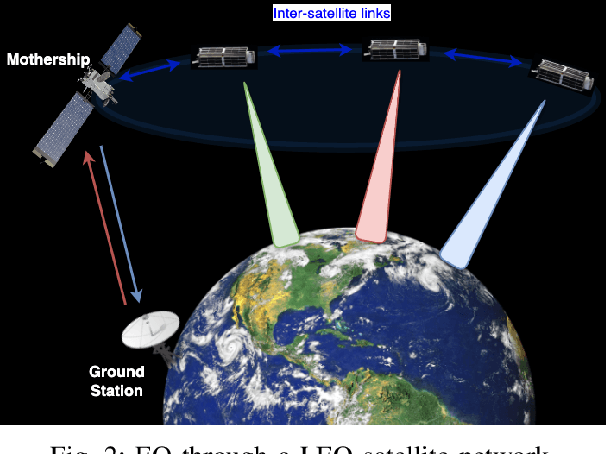

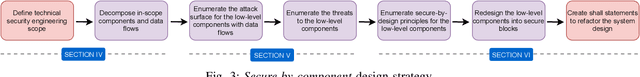

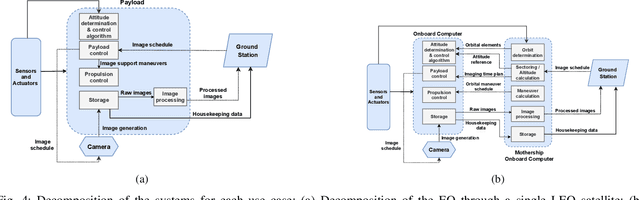

Abstract:The rapid evolution of communication technologies, compounded by recent geopolitical events such as the Viasat cyberattack in February 2022, has highlighted the urgent need for fast and reliable satellite missions for military and civil security operations. Consequently, this paper examines two Earth observation (EO) missions: one utilizing a single low Earth orbit (LEO) satellite and another through a network of LEO satellites, employing a secure-by-component design strategy. This approach begins by defining the scope of technical security engineering, decomposing the system into components and data flows, and enumerating attack surfaces. Then it proceeds by identifying threats to low-level components, applying secure-by-design principles, redesigning components into secure blocks in alignment with the Space Attack Research & Tactic Analysis (SPARTA) framework, and crafting shall statements to refactor the system design, with a particular focus on improving the security of the link segment.

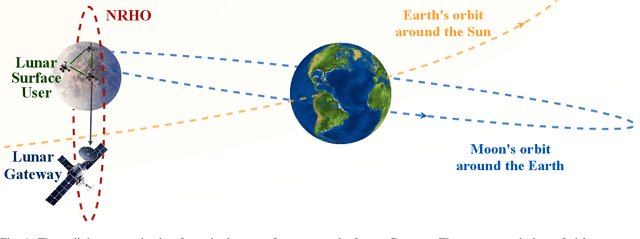

Cislunar Communication Performance and System Analysis with Uncharted Phenomena

Sep 14, 2024

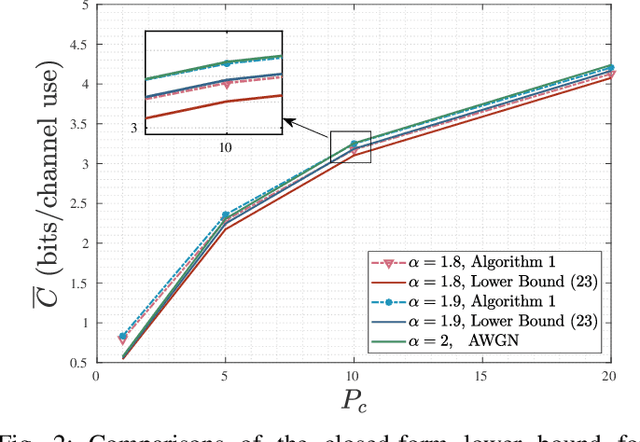

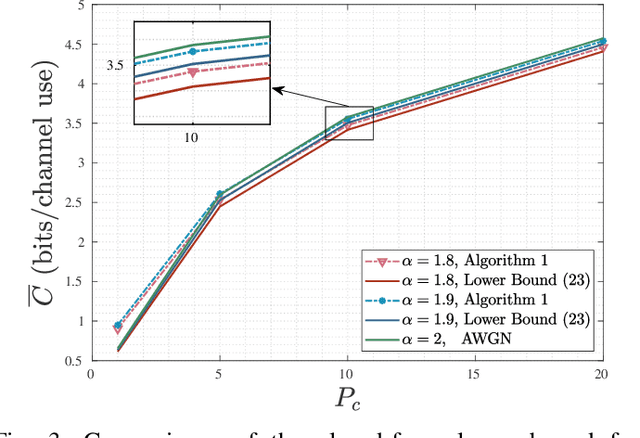

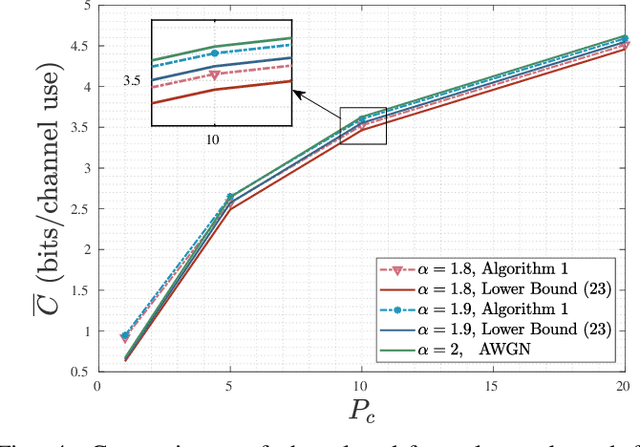

Abstract:The Moon and its surrounding cislunar space have numerous unknowns, uncertainties, or partially charted phenomena that need to be investigated to determine the extent to which they affect cislunar communication. These include temperature fluctuations, spacecraft distance and velocity dynamics, surface roughness, and the diversity of propagation mechanisms. To develop robust and dynamically operative Cislunar space networks (CSNs), we need to analyze the communication system by incorporating inclusive models that account for the wide range of possible propagation environments and noise characteristics. In this paper, we consider that the communication signal can be subjected not only to both Gaussian and non-Gaussian noise, but also to different fading conditions. First, we analyze the communication link by showing the relationship between the brightness temperatures of the Moon and the equivalent noise temperature at the receiver of the Lunar Gateway. We propose to analyze the ergodic capacity and the outage probability, as they are essential metrics for the development of reliable communication. In particular, we model the noise with the additive symmetric alpha-stable distribution, which allows a generic analysis for Gaussian and non-Gaussian signal characteristics. Then, we present the closed-form bounds for the ergodic capacity and the outage probability. Finally, the results show the theoretically and operationally achievable performance bounds for cislunar communication. To give insight into further designs, we also provide our results with comprehensive system settings that include mission objectives as well as orbital and system dynamics.
