Olfa Ben Yahia

Adaptive Detection of on-Orbit Jamming for Securing GEO Satellite Links

Nov 25, 2024

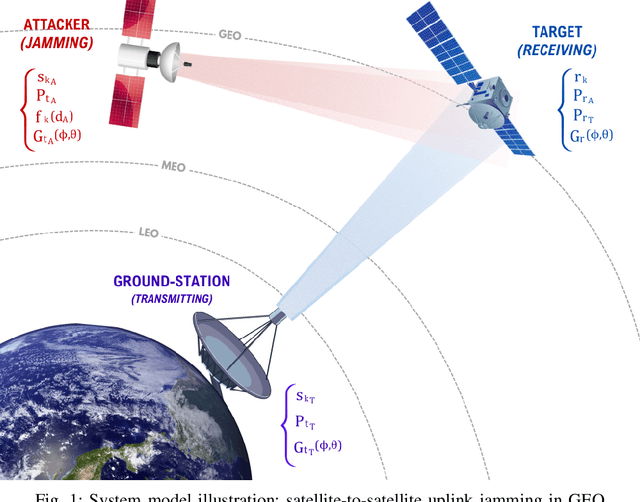

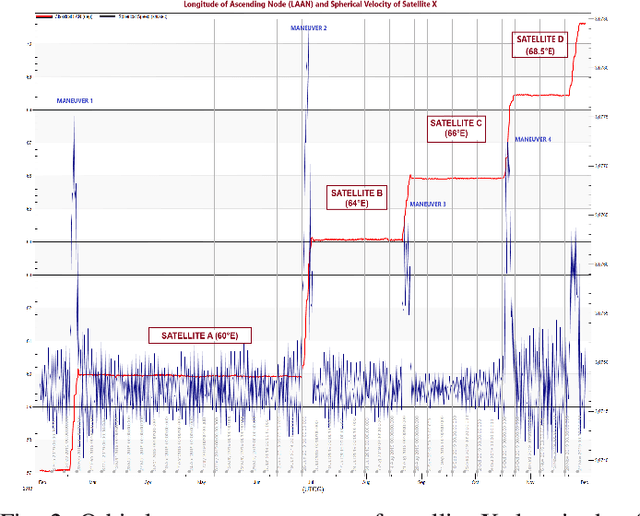

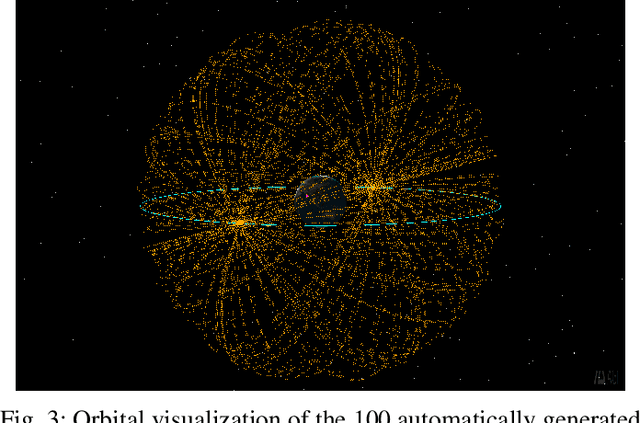

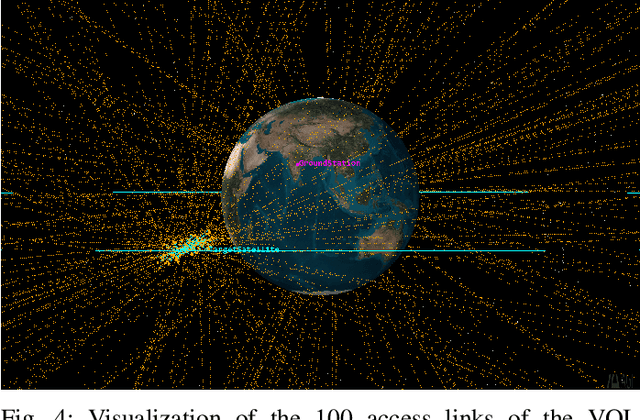

Abstract: This paper introduces a scenario in which a maneuverable satellite in geostationary orbit (GEO) conducts on-orbit attacks targeting the communication link between a GEO satellite and a ground station, with the ability to switch between stationary and time-variant jamming modes. We propose a machine learning-based detection approach that employs the random forest algorithm with principal component analysis (PCA) to enhance detection accuracy in the stationary model. For the time-variant model, an adaptive threshold-based technique is implemented to detect dynamic jamming events effectively. Our methodology emphasizes the use of orbital dynamics to integrate physical constraints from satellite motion, improving model robustness and detection accuracy. Simulation results highlight the effectiveness of PCA in enhancing the performance of the stationary model, while the adaptive thresholding method achieves high accuracy in detecting jamming in the time-variant scenario. This approach provides a robust solution for mitigating evolving threats to satellite communication in GEO environments.
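The adaptive thresholding idea for the time-variant case can be sketched as follows. This is a minimal illustration, not the paper's detector: it assumes the monitored metric is received interference-plus-noise power in dBm, that the first `window` samples are jamming-free, and that a sample is flagged when it exceeds the mean plus `k` standard deviations of recent clean samples. The function name, `window`, and `k` are hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


def adaptive_threshold_detector(power_dbm, window=20, k=3.0):
    """Flag samples that rise above a sliding, data-driven threshold.

    Hypothetical sketch: the threshold is mean + k * std of the last
    `window` samples judged jamming-free. The first `window` samples are
    assumed clean and only fill the baseline.
    """
    baseline = deque(maxlen=window)
    flags = []
    for p in power_dbm:
        if len(baseline) < window:
            baseline.append(p)  # warm-up period, assumed jamming-free
            flags.append(False)
            continue
        threshold = mean(baseline) + k * pstdev(baseline)
        jammed = p > threshold
        flags.append(jammed)
        if not jammed:
            baseline.append(p)  # only clean samples update the baseline
    return flags


# Hypothetical trace: interference-plus-noise power (dBm) with a 10 dB burst.
trace = [-100 + 0.5 * (-1) ** i for i in range(100)]
trace = [p + 10 if 60 <= i < 80 else p for i, p in enumerate(trace)]
flags = adaptive_threshold_detector(trace)
print([i for i, f in enumerate(flags) if f])  # indices of flagged samples
```

Updating the baseline only with samples classified as clean keeps a sustained jamming burst from being absorbed into the threshold while still letting the baseline follow slow legitimate drift. The trade-off is that a jammer that ramps up slowly enough to stay under the threshold at every step would also be absorbed.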

Securing Satellite Link Segment: A Secure-by-Component Design

Nov 19, 2024

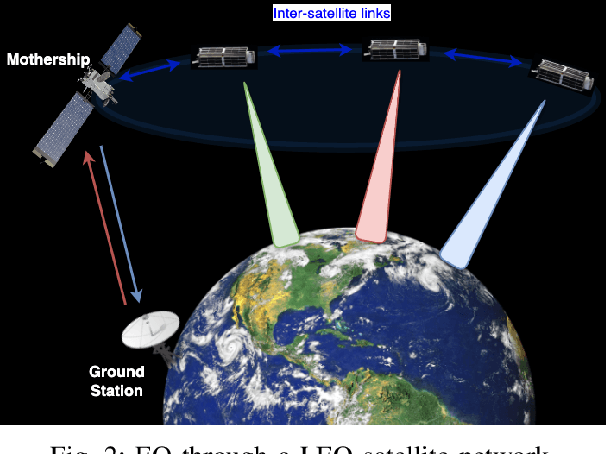

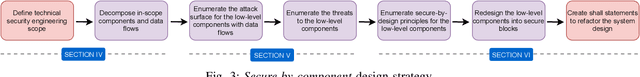

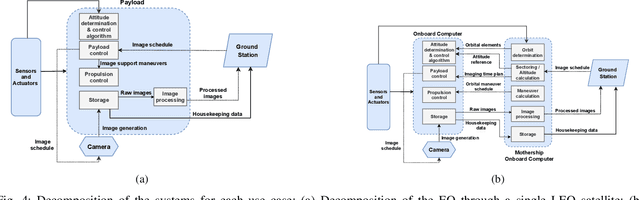

Abstract: The rapid evolution of communication technologies, compounded by recent geopolitical events such as the Viasat cyberattack in February 2022, has highlighted the urgent need for fast and reliable satellite missions for military and civil security operations. Accordingly, this paper examines two Earth observation (EO) missions, one using a single low Earth orbit (LEO) satellite and another using a network of LEO satellites, through a secure-by-component design strategy. This approach begins by defining the scope of technical security engineering, decomposing the system into components and data flows, and enumerating attack surfaces. It then identifies threats to low-level components, applies secure-by-design principles, redesigns components into secure blocks aligned with the Space Attack Research & Tactic Analysis (SPARTA) framework, and crafts shall statements to refactor the system design, with a particular focus on improving the security of the link segment.
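The first steps of the secure-by-component strategy, decomposing the system into components and data flows and then enumerating attack surfaces, can be sketched as a small data model. Here a flow that crosses a trust-zone boundary is treated as a candidate attack surface; the zones, component names, and the crossing rule itself are hypothetical simplifications, not the paper's procedure or SPARTA's taxonomy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    name: str
    zone: str  # trust zone, e.g. "ground" or "space" (hypothetical labels)


@dataclass(frozen=True)
class DataFlow:
    src: Component
    dst: Component
    label: str


def attack_surfaces(flows):
    """Return the data flows that cross a trust-zone boundary.

    Each boundary crossing is treated as a candidate attack surface to
    examine for threats in the next design step.
    """
    return [f for f in flows if f.src.zone != f.dst.zone]


# Hypothetical single-satellite EO mission decomposition.
mcc = Component("mission control centre", "ground")
gs = Component("ground station", "ground")
obc = Component("on-board computer", "space")
camera = Component("imaging payload", "space")

flows = [
    DataFlow(mcc, gs, "telecommand over ground network"),
    DataFlow(gs, obc, "TC uplink"),
    DataFlow(obc, gs, "TM downlink"),
    DataFlow(camera, obc, "raw image data"),
    DataFlow(obc, gs, "payload data downlink"),
]

for f in attack_surfaces(flows):
    print(f"{f.src.name} -> {f.dst.name}: {f.label}")
```

With only two zones, every surface found is a link-segment flow, which matches the paper's focus; a finer zone model (for example, giving the ground network its own zone) would expose additional surfaces such as the mission-control-to-ground-station path.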

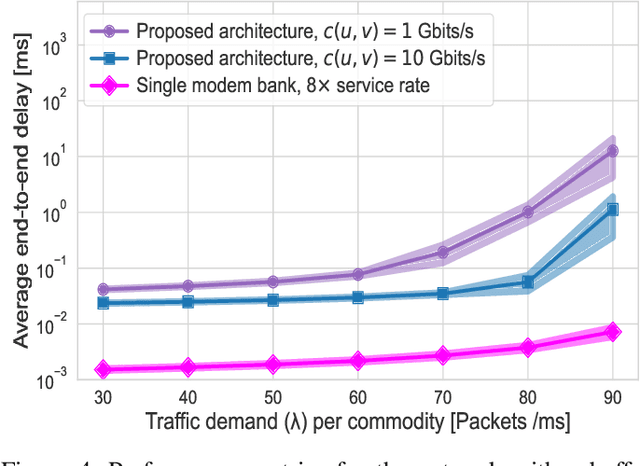

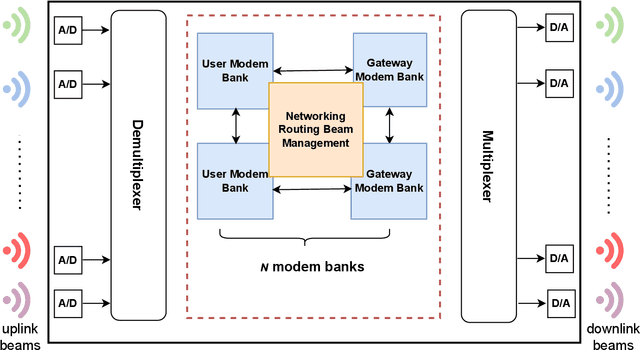

Online Convex Optimization for On-Board Routing in High-Throughput Satellites

Sep 02, 2024

Abstract: The rise in low Earth orbit (LEO) satellite Internet services has led to increasing demand, often exceeding available data rates and compromising the quality of service. While deploying more satellites offers a short-term fix, designing higher-performance satellites with enhanced transmission capabilities provides a more sustainable solution. Achieving the necessary high capacity requires interconnecting multiple modem banks within a satellite payload. However, there is a notable gap in research on internal packet routing within extremely high-throughput satellites. To address this, we propose a real-time optimal flow allocation and priority queue scheduling method using online convex optimization-based model predictive control. We model the problem as a multi-commodity flow instance and employ an online interior-point method to solve the routing and scheduling optimization iteratively. This approach minimizes packet loss and supports real-time rerouting with low computational overhead. Our method is tested in simulation on a next-generation extremely high-throughput satellite model, demonstrating its effectiveness compared to a reference batch optimization and to traditional methods.
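To make the multi-commodity flow formulation concrete, the sketch below only checks whether a candidate allocation satisfies the two constraint families of such an instance: shared link capacity and per-commodity flow conservation. It does not implement the online interior-point MPC solver described in the abstract; the modem-bank graph, the function name, and all numbers are hypothetical.

```python
from collections import defaultdict


def is_feasible_mcf(capacity, commodities, flow, tol=1e-9):
    """Check a candidate multi-commodity flow.

    capacity:    {(u, v): link capacity}
    commodities: {k: (source, sink, demand)}
    flow:        {k: {(u, v): flow of commodity k on link (u, v)}}
    """
    # Joint capacity: all commodities share each directed link.
    load = defaultdict(float)
    for edges in flow.values():
        for link, f in edges.items():
            if link not in capacity or f < -tol:
                return False
            load[link] += f
    if any(load[link] > capacity[link] + tol for link in load):
        return False
    # Conservation: net outflow is +demand at the source, -demand at the
    # sink, and zero at every intermediate modem bank.
    for k, (src, dst, demand) in commodities.items():
        net = defaultdict(float)
        for (u, v), f in flow.get(k, {}).items():
            net[u] += f
            net[v] -= f
        for node in set(net) | {src, dst}:
            expected = demand if node == src else -demand if node == dst else 0.0
            if abs(net[node] - expected) > tol:
                return False
    return True


# Hypothetical payload with three modem banks A, B, C.
cap = {("A", "B"): 10.0, ("B", "C"): 10.0, ("A", "C"): 5.0}
comm = {1: ("A", "C", 12.0), 2: ("B", "C", 3.0)}
flow = {1: {("A", "C"): 5.0, ("A", "B"): 7.0, ("B", "C"): 7.0},
        2: {("B", "C"): 3.0}}
print(is_feasible_mcf(cap, comm, flow))
```

In an optimization-based router, these same constraints define the feasible set, and the solver picks the point in it that minimizes a loss-related objective.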

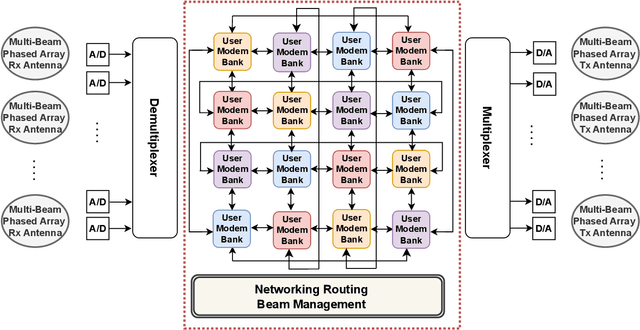

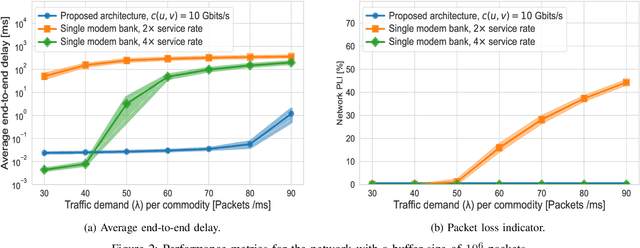

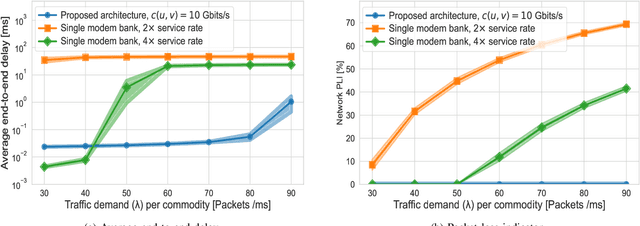

A Scalable Architecture for Future Regenerative Satellite Payloads

Jul 08, 2024

Abstract: This paper addresses the limitations of current satellite payload architectures, which are predominantly hardware-driven and lack the flexibility to adapt to increasing data demands and uneven traffic. To overcome these challenges, we present a novel architecture for future regenerative and programmable satellite payloads that uses interconnected modem banks to promote higher scalability and flexibility. We formulate an optimization problem to efficiently manage traffic among these modem banks and balance the load. Additionally, we provide comparative numerical simulation results, analyzing end-to-end delay and packet loss. The results show that our proposed architecture maintains lower delay and packet loss even under higher traffic demands and with smaller buffer sizes.
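The paper balances traffic across modem banks with an optimization problem. As a much simpler stand-in, the sketch below uses the greedy longest-processing-time (LPT) heuristic: sort demands in decreasing order and assign each one to the currently least-loaded bank. LPT is swapped in here for illustration only and is not the paper's formulation; the beam names and rates are hypothetical.

```python
import heapq


def balance_load(demands, n_banks):
    """Greedy LPT: assign each demand (largest first) to the least-loaded bank.

    demands: {flow_id: rate}; returns ({flow_id: bank}, per-bank load list).
    """
    heap = [(0.0, bank) for bank in range(n_banks)]  # (current load, bank)
    heapq.heapify(heap)
    assignment = {}
    for flow_id, rate in sorted(demands.items(), key=lambda kv: -kv[1]):
        load, bank = heapq.heappop(heap)  # least-loaded bank so far
        assignment[flow_id] = bank
        heapq.heappush(heap, (load + rate, bank))
    loads = [0.0] * n_banks
    for flow_id, bank in assignment.items():
        loads[bank] += demands[flow_id]
    return assignment, loads


# Hypothetical beam demands in Gbit/s, shared by two modem banks.
demands = {"beam1": 4.0, "beam2": 3.0, "beam3": 3.0, "beam4": 2.0}
assignment, loads = balance_load(demands, n_banks=2)
print(assignment, loads)
```

LPT's worst-case maximum load is within a factor of 4/3 of the optimum, which makes it a reasonable baseline, but it ignores inter-bank link capacities and buffer limits that an optimization model can capture.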

Quality of Service-Constrained Online Routing in High Throughput Satellites

Oct 11, 2023

Abstract: High Throughput Satellites (HTSs) outpace traditional satellites due to their multi-beam transmission. The rise of low Earth orbit mega constellations pushes HTS data rate demands to terabits per second at acceptable latency. This surge in data rate requires multiple modems, as it often exceeds the capabilities of a single device. Consequently, satellites employ several processors, forming a complex packet-switched network. This can lead to internal congestion and make it difficult to adhere to strict quality of service (QoS) constraints. While significant research exists on constellation-level routing, a literature gap remains on internal routing within a single HTS. The intricacy of this internal network architecture makes achieving high data rates a significant challenge. This paper introduces an online optimal flow allocation and scheduling method for HTSs. The problem is treated as a multi-commodity flow instance with data streams of different priorities. A full-time-horizon model is first proposed as a benchmark. We apply a model predictive control (MPC) approach to enable adaptive routing based on current information and the forecast within the prediction time horizon, while allowing the actual flows to deviate from that forecast. Importantly, MPC is inherently suited to handle uncertainty in incoming flows. Our approach minimizes packet loss by optimally and adaptively managing the priority queue schedulers and flow exchanges between satellite processing modules. Central to our method is a routing model focusing on optimal priority scheduling to enhance data rates and maintain QoS. The model's stages are critically evaluated, and results are compared to traditional methods via numerical simulations. In these simulations, our method performs nearly on par with the hindsight optimum, showcasing its efficiency and adaptability in addressing satellite communication challenges.
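The abstract compares the MPC approach with traditional methods. One common baseline of that kind is a strict-priority scheduler with finite per-class buffers; the slotted sketch below (hypothetical names, integer packet counts, a single output link) shows how such a scheduler pushes losses onto lower-priority traffic. It is not the paper's MPC method, nor necessarily the exact baseline it uses.

```python
def strict_priority_sim(arrivals, capacity, buffer_size):
    """Slotted simulation of a strict-priority scheduler with finite buffers.

    arrivals:    arrivals[t][c] = packets of class c arriving in slot t
                 (class 0 has the highest priority)
    capacity:    packets the output link can serve per slot
    buffer_size: per-class queue limit; arrivals that do not fit are dropped
    Returns (delivered per class, dropped per class).
    """
    n_classes = len(arrivals[0])
    queue = [0] * n_classes
    delivered = [0] * n_classes
    dropped = [0] * n_classes
    for slot in arrivals:
        # Enqueue arrivals, tail-dropping whatever exceeds the buffer.
        for c, a in enumerate(slot):
            accepted = min(a, buffer_size - queue[c])
            queue[c] += accepted
            dropped[c] += a - accepted
        # Serve classes in priority order until the slot capacity is used up.
        budget = capacity
        for c in range(n_classes):
            served = min(queue[c], budget)
            queue[c] -= served
            delivered[c] += served
            budget -= served
    return delivered, dropped


# Hypothetical load: two classes offering 7 packets/slot to a 5-packet link.
delivered, dropped = strict_priority_sim([[3, 4]] * 3, capacity=5, buffer_size=4)
print(delivered, dropped)
```

Such a scheduler reacts only to the current queue state; a predictive controller can instead use the traffic forecast to reroute flows toward other processing modules before the low-priority buffer overflows.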

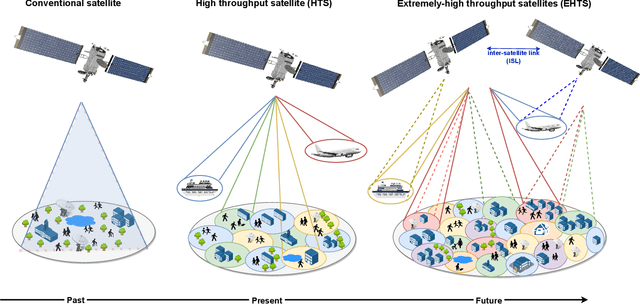

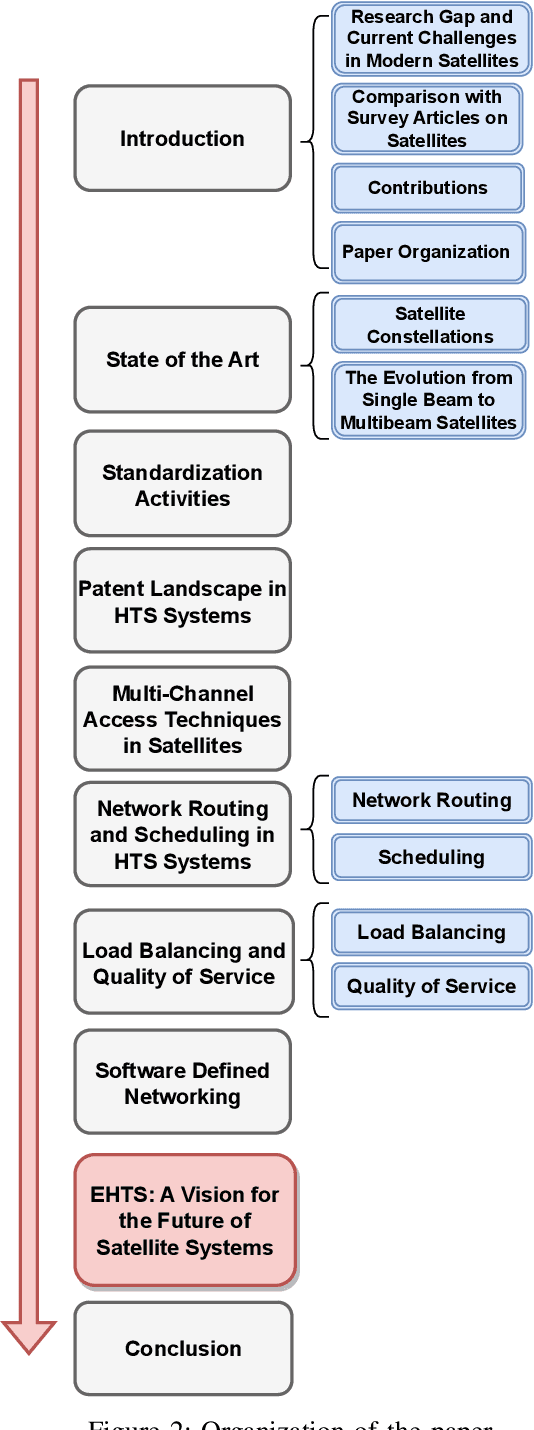

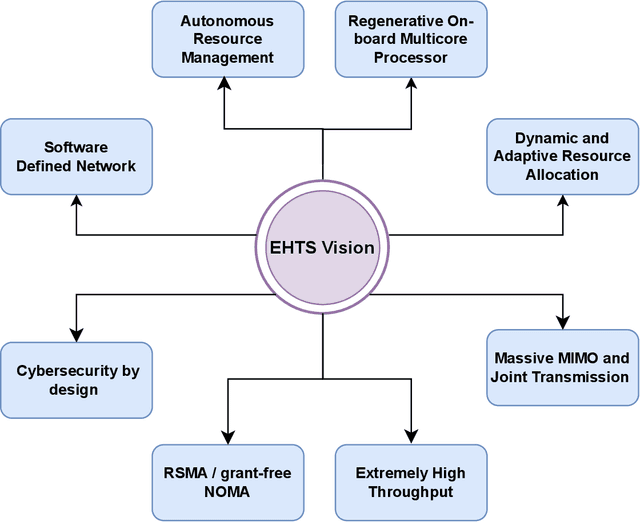

Evolution of High Throughput Satellite Systems: Vision, Requirements, and Key Technologies

Oct 06, 2023

Abstract: High throughput satellites (HTS), with their digital payload technology, are expected to play a key role as enablers of upcoming 6G networks. HTS are mainly designed to provide higher data rates and capacities. Fueled by technological advancements including beamforming, advanced modulation techniques, reconfigurable phased array technologies, and electronically steerable antennas, HTS have emerged as a fundamental component of future network generations. This paper offers a comprehensive state-of-the-art review of HTS systems, with a focus on standardization, patents, channel multiple access techniques, routing, load balancing, and the role of software-defined networking (SDN). In addition, we provide a vision for next-generation satellite systems, which we call extremely-HTS (EHTS), toward autonomous satellites, supported by the main requirements and key technologies expected for these systems. The EHTS system will be designed to maximize spectrum reuse and data rates and to flexibly steer capacity to satisfy user demand. We introduce a novel architecture for future regenerative payloads and summarize the challenges it imposes.

Multi-State Inter-Satellite Channel Models

May 12, 2023

Abstract: Advances in satellite networks (SatNets) offer vast opportunities for large-scale connectivity and flexibility. The intersection of inter-satellite communication (ISC) and the development of rate-hungry enhanced mobile broadband (eMBB) services has given rise to potential ultra-dense deployments such as mega-constellations. Integrating inter-satellite links (ISL) in high spectrum bands requires a well-defined channel model to anticipate possible challenges and provide solutions. In this research, we address the key concerns in defining SatNet channels and establish a comprehensive node-to-node ISL channel model based on a Markov state model. The model takes into account satellite mobility, celestial body radiation, and cumulative thermal noise. Using this model, we analyze the bit error rate, outage, and achievable capacity of various ISC cases and propose a state-based power allocation method to improve their performance.
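A Markov state channel model gives each link state an occupancy probability and a per-state channel quality. The sketch below, with hypothetical two-state parameters, computes the stationary state distribution by power iteration and then allocates power across states with classic water-filling under an average power constraint, assuming the transmitter knows the current state. Water-filling is a standard technique used here only to illustrate state-based power allocation and is not claimed to be the paper's method; `gains` stands for an assumed normalized SNR per unit transmit power in each state.

```python
import math


def stationary_distribution(P, iters=1000):
    """Stationary distribution of a Markov chain by power iteration (pi = pi P)."""
    n = len(P)
    pi = [1.0 / n] * n
    for _ in range(iters):
        pi = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
    return pi


def waterfill(pi, gains, p_avg, iters=100):
    """Water-filling across states: P_s = max(0, mu - 1/g_s), with the water
    level mu found by bisection so that sum_s pi_s * P_s = p_avg."""
    lo, hi = 0.0, p_avg + max(1.0 / g for g in gains)
    for _ in range(iters):
        mu = 0.5 * (lo + hi)
        used = sum(p * max(0.0, mu - 1.0 / g) for p, g in zip(pi, gains))
        if used > p_avg:
            hi = mu
        else:
            lo = mu
    return [max(0.0, lo - 1.0 / g) for g in gains]


def avg_capacity(pi, gains, powers):
    """Average capacity in bit/s/Hz: sum_s pi_s * log2(1 + P_s * g_s)."""
    return sum(p * math.log2(1.0 + pw * g) for p, g, pw in zip(pi, gains, powers))


# Hypothetical two-state ISL: state 0 "good", state 1 "degraded" (e.g. by
# celestial-body noise), with assumed transition probabilities and gains.
P = [[0.9, 0.1], [0.3, 0.7]]
gains = [10.0, 1.0]
pi = stationary_distribution(P)
powers = waterfill(pi, gains, p_avg=1.0)
print(pi, powers)
print(avg_capacity(pi, gains, powers), avg_capacity(pi, gains, [1.0, 1.0]))
```

Water-filling spends more power in the better state, which raises the average capacity relative to constant power under the same average power budget.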

Optical Satellite Eavesdropping

Dec 09, 2021

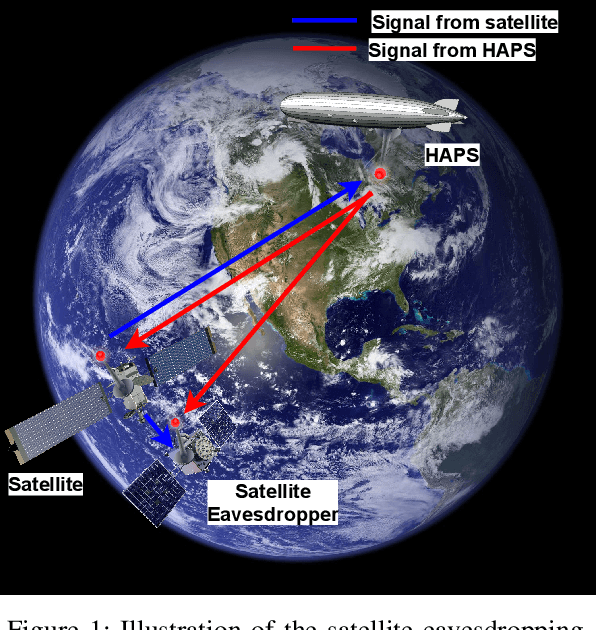

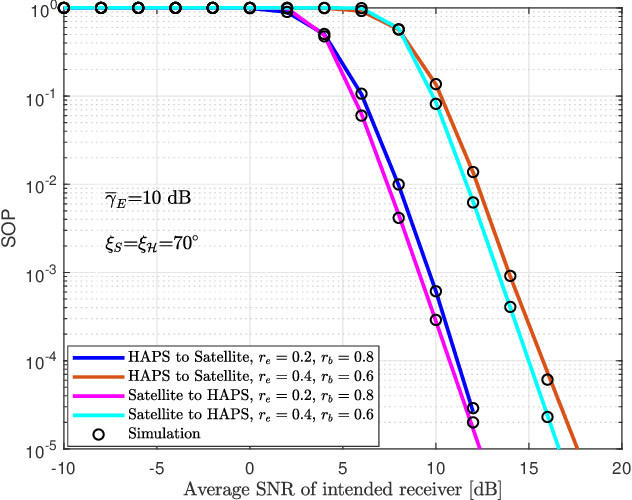

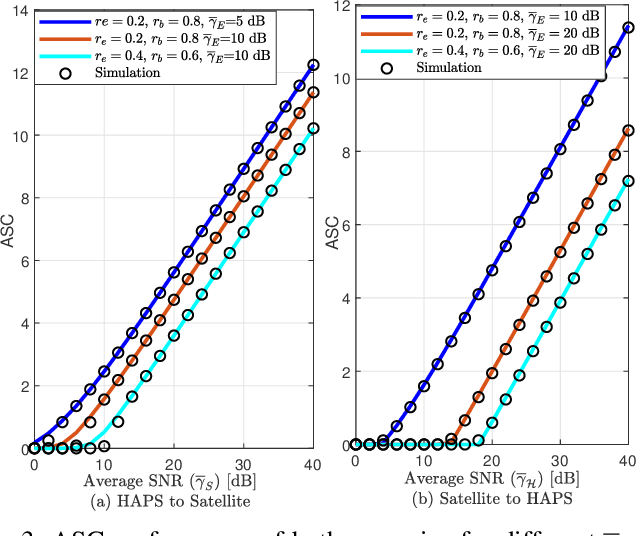

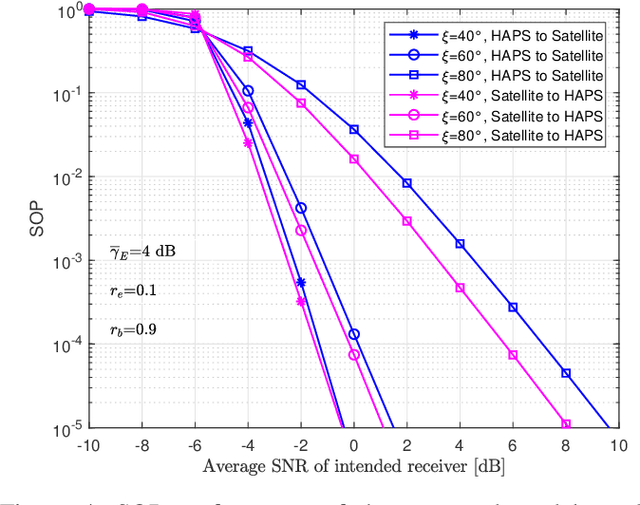

Abstract: In recent years, satellite communication (SatCom) systems have been widely used for navigation, broadcasting applications, disaster recovery, weather sensing, and even spying on the Earth. As the number of satellites grows rapidly and wireless technology advances radically, eavesdropping on SatCom will become possible in next-generation networks. In this context, we introduce a satellite eavesdropping approach in which an eavesdropping satellite can intercept optical communications between a low Earth orbit (LEO) satellite and a high altitude platform station (HAPS). Specifically, we propose two practical eavesdropping scenarios, for satellite-to-HAPS (downlink) and HAPS-to-satellite (uplink) optical communications, in which the eavesdropper satellite can eavesdrop on either the transmitted signal or the received signal. To quantify the secrecy performance of these scenarios, average secrecy capacity (ASC) and secrecy outage probability (SOP) expressions are derived and validated with Monte Carlo (MC) simulations. We observe that turbulence-induced fading significantly impacts the secrecy performance of free-space optical (FSO) communication.
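ASC and SOP can be estimated by Monte Carlo simulation, the same kind of check used to validate the derived expressions. The sketch assumes Gamma-Gamma turbulence on both the legitimate and eavesdropper links, independent fading, instantaneous SNR equal to the average SNR times the normalized irradiance, and secrecy capacity C_s = max(0, log2(1 + γ_B) - log2(1 + γ_E)). The turbulence parameters, SNR values, and function names are hypothetical, and pointing errors are ignored.

```python
import math
import random


def gamma_gamma(alpha, beta, rng):
    """Unit-mean Gamma-Gamma irradiance: product of two unit-mean gamma variates."""
    return rng.gammavariate(alpha, 1.0 / alpha) * rng.gammavariate(beta, 1.0 / beta)


def secrecy_mc(snr_b, snr_e, rate_s, alpha=4.0, beta=2.0, n=50_000, seed=1):
    """Monte Carlo estimates of (ASC in bit/s/Hz, SOP) for linear average SNRs.

    snr_b:  average SNR at the legitimate receiver
    snr_e:  average SNR at the eavesdropper satellite
    rate_s: target secrecy rate; an outage occurs when C_s < rate_s
    """
    rng = random.Random(seed)
    total_cs = 0.0
    outages = 0
    for _ in range(n):
        gb = snr_b * gamma_gamma(alpha, beta, rng)  # legitimate link
        ge = snr_e * gamma_gamma(alpha, beta, rng)  # eavesdropper link
        cs = max(0.0, math.log2(1.0 + gb) - math.log2(1.0 + ge))
        total_cs += cs
        outages += cs < rate_s
    return total_cs / n, outages / n


asc, sop = secrecy_mc(snr_b=100.0, snr_e=10.0, rate_s=1.0)
print(f"ASC ~ {asc:.3f} bit/s/Hz, SOP ~ {sop:.3f}")
```

Lowering `alpha` and `beta` models stronger turbulence; sweeping them is a quick way to see how turbulence-induced fading shifts both metrics.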

On the Performance of HAPS-assisted Hybrid RF-FSO Multicast Communication Systems

Sep 21, 2021

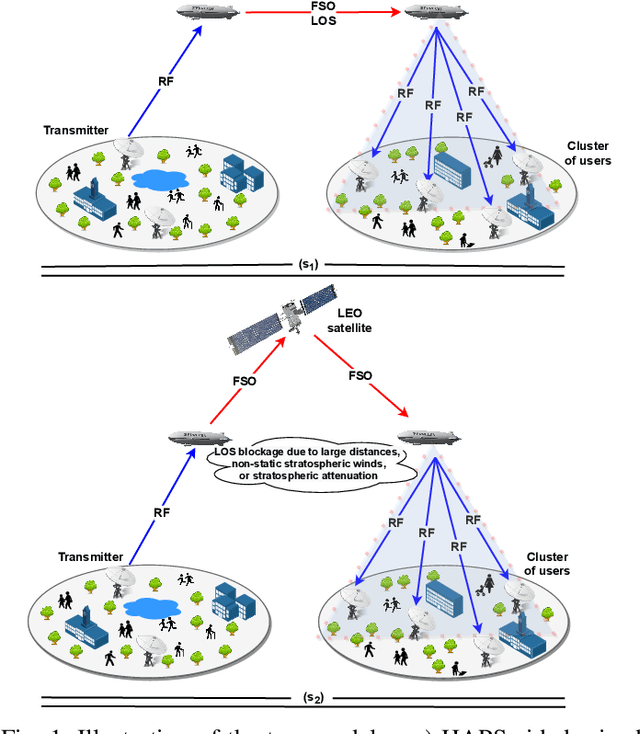

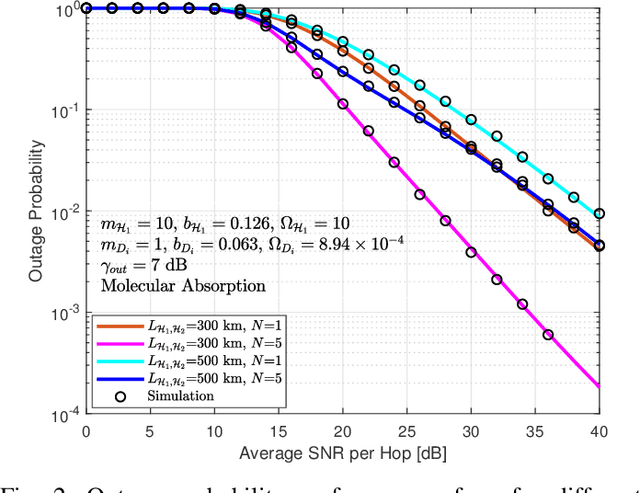

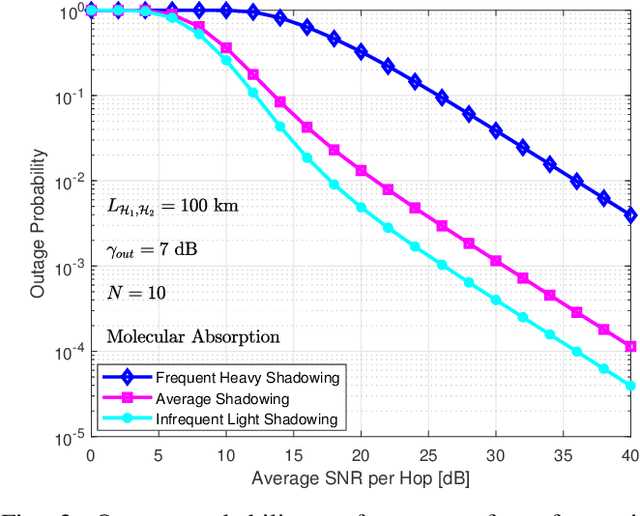

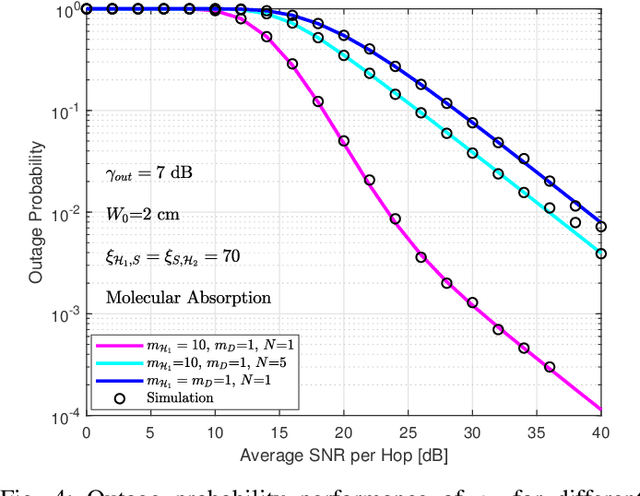

Abstract: Multicast routing is considered a promising approach for real-time applications to address massive data traffic demands. In this work, we study the outage probability of a multicast downlink communication network for non-terrestrial communication systems. More precisely, we propose two practical use cases. In the first, we propose a high altitude platform station (HAPS)-aided mixed radio frequency (RF)/free-space optical (FSO)/RF communication scheme in which a terrestrial ground station communicates with a cluster of nodes through two stratospheric HAPS systems. In the second, we assume that line-of-sight (LOS) connectivity between the two HAPS systems is unavailable due to high attenuation caused by large propagation distances. We therefore propose a low Earth orbit (LEO) satellite-aided mixed RF/FSO/FSO/RF communication scheme. For the proposed scenarios, outage probability expressions are derived and validated with Monte Carlo (MC) simulations under different conditions.
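For decode-and-forward relaying over independent links, outage probabilities compose multiplicatively: a destination is served only if every hop on its path succeeds. The sketch below applies this to the two use cases, treating a multicast outage as the event that at least one cluster node is not served (an assumption; per-node outage is another common definition). RF hops are modeled as Rayleigh-faded, and the FSO hop outage is a fixed hypothetical number; none of the values come from the paper.

```python
import math


def rayleigh_outage(snr_avg, snr_th):
    """Outage of a Rayleigh-faded RF hop: P(SNR < th) = 1 - exp(-th / avg)."""
    return 1.0 - math.exp(-snr_th / snr_avg)


def multicast_outage(shared_hops, last_hops):
    """Outage of a decode-and-forward multicast tree with independent links.

    shared_hops: outage probabilities of hops every packet traverses
    last_hops:   outage probabilities of the final hops to each cluster node
    The cluster is served only if every shared hop and every last hop succeeds.
    """
    success = 1.0
    for p in list(shared_hops) + list(last_hops):
        success *= 1.0 - p
    return 1.0 - success


# Hypothetical numbers (linear SNRs, not taken from the paper).
th = 1.0
rf_up = rayleigh_outage(100.0, th)          # ground station -> HAPS 1 (RF)
fso = 1e-3                                  # assumed outage of one FSO hop
rf_down = [rayleigh_outage(50.0, th)] * 3   # HAPS 2 -> 3 cluster nodes (RF)

haps_case = multicast_outage([rf_up, fso], rf_down)      # RF/FSO/RF
leo_case = multicast_outage([rf_up, fso, fso], rf_down)  # RF/FSO/FSO/RF via LEO
print(f"RF/FSO/RF: {haps_case:.4e}, RF/FSO/FSO/RF: {leo_case:.4e}")
```

With identical per-hop numbers the extra FSO hop can only increase outage, so in practice the LEO relay pays off when it replaces an inter-HAPS link whose own outage would be much worse.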

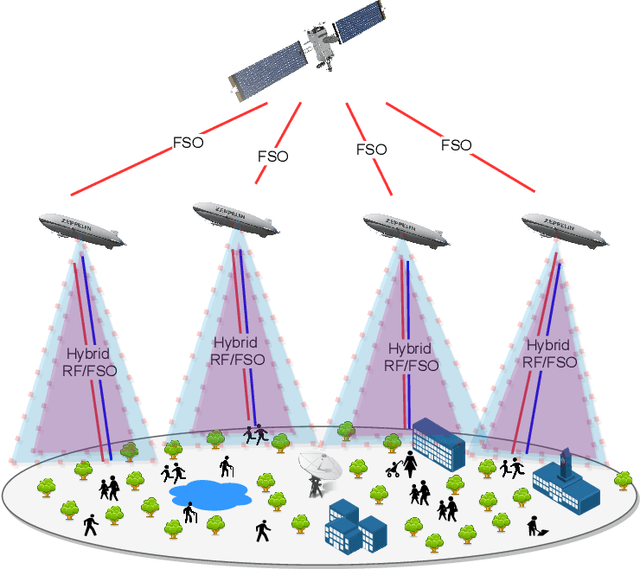

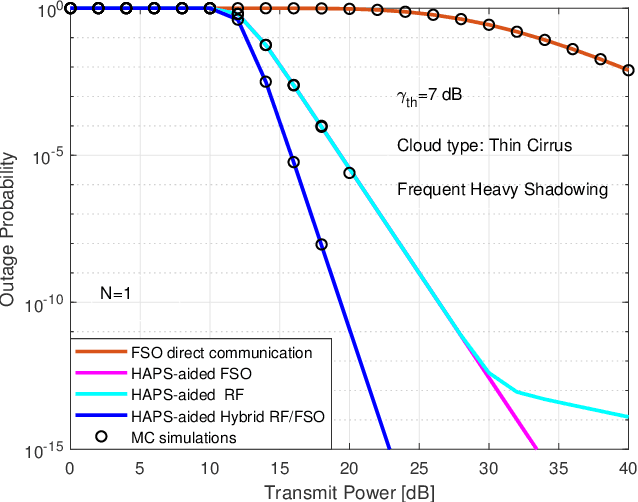

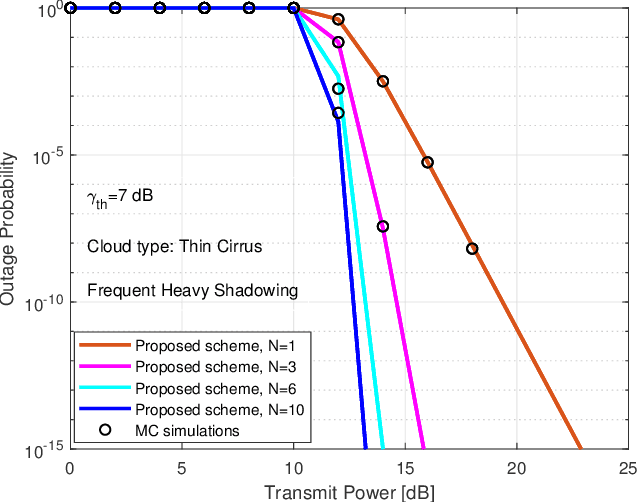

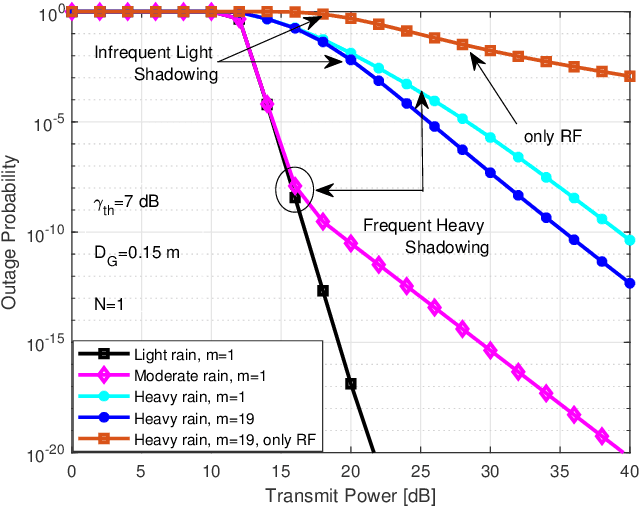

HAPS Selection for Hybrid RF/FSO Satellite Networks

Jul 27, 2021

Abstract: Non-terrestrial networks have been attracting much interest from industry and academia. Satellites and high altitude platform station (HAPS) systems are expected to be key enablers of next-generation wireless networks. In this paper, we introduce a novel downlink satellite communication (SatCom) model in which free-space optical (FSO) communication is used between a satellite and a HAPS node, and a hybrid FSO/radio-frequency (RF) transmission model is used between the HAPS node and the ground station (GS). In the first phase of transmission, the satellite selects the HAPS node that provides the highest signal-to-noise ratio (SNR). In the second phase, the selected HAPS decodes and forwards the signal to the GS. To evaluate the performance of the proposed system, outage probability expressions are derived for exponentiated Weibull (EW) and shadowed-Rician fading models while considering atmospheric turbulence, stratospheric attenuation, and attenuation due to scattering, path loss, and pointing errors. Furthermore, the impact of aperture averaging, temperature, and wind speed is investigated. Finally, we provide some important guidelines that can be helpful for the design of practical HAPS-aided SatCom systems.
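The two-phase scheme can be illustrated with a few selection rules. The sketch assumes SNRs in dB, picks the HAPS with the highest satellite-to-HAPS SNR, uses hard switching for the hybrid FSO/RF hop (FSO while its SNR clears a threshold, RF otherwise), and represents the decode-and-forward end-to-end link by its weaker hop, since a DF link is in outage whenever either hop is. The switching rule, function names, and all numbers are hypothetical, not taken from the paper.

```python
def select_haps(snrs_db):
    """Phase 1: index of the HAPS node with the highest satellite-to-HAPS SNR."""
    return max(range(len(snrs_db)), key=lambda i: snrs_db[i])


def hybrid_link(fso_snr_db, rf_snr_db, fso_threshold_db):
    """Phase 2 hop (assumed hard switching): use FSO while its SNR clears the
    threshold, otherwise fall back to the RF link."""
    if fso_snr_db >= fso_threshold_db:
        return ("FSO", fso_snr_db)
    return ("RF", rf_snr_db)


def end_to_end_snr(sat_haps_db, haps_gs_db):
    """Decode-and-forward: the weaker hop limits the end-to-end link."""
    return min(sat_haps_db, haps_gs_db)


# Hypothetical snapshot: three candidate HAPS nodes, cloudy FSO to the GS.
sat_to_haps = [12.0, 18.5, 15.2]
best = select_haps(sat_to_haps)
mode, second_hop = hybrid_link(fso_snr_db=5.0, rf_snr_db=14.0, fso_threshold_db=10.0)
e2e = end_to_end_snr(sat_to_haps[best], second_hop)
print(best, mode, e2e)
# Outage occurs when e2e falls below the required SNR threshold.
```

Because the end-to-end quality is capped by the weaker hop, selecting the best satellite-to-HAPS link helps only as long as the HAPS-to-GS hop, with its FSO/RF fallback, is not the bottleneck.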
