Zoubeir Mlika

Learning Energy-Efficient Hardware Configurations for Massive MIMO Beamforming

Aug 11, 2023

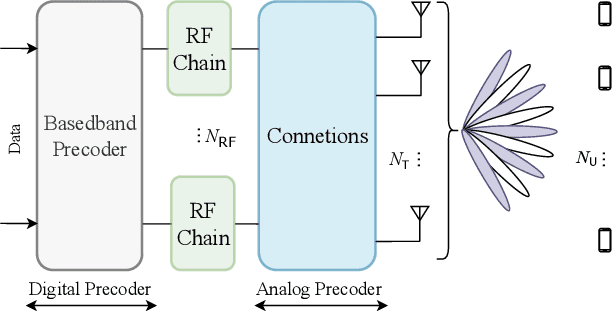

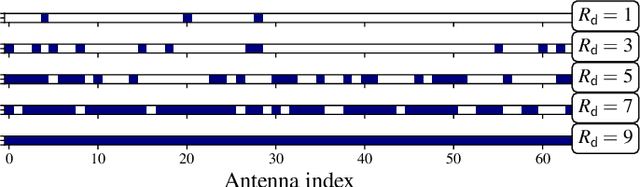

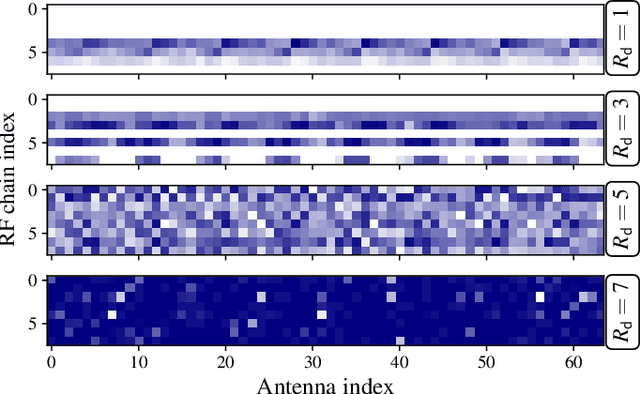

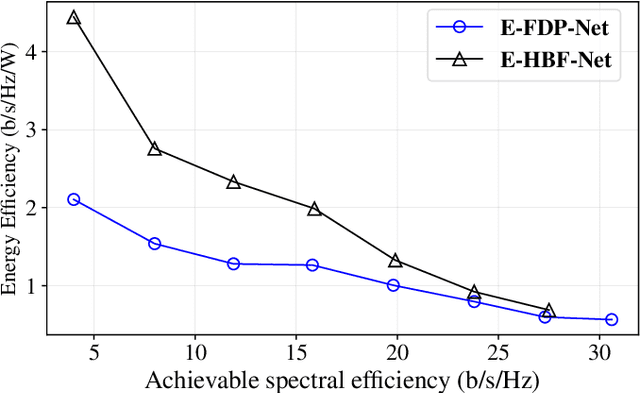

Abstract: Hybrid beamforming (HBF) and antenna selection are promising techniques for improving the energy efficiency (EE) of massive multiple-input multiple-output (mMIMO) systems. However, the transmitter architecture may contain several parameters that need to be optimized, such as the power allocated to the antennas and the connections between the antennas and the radio frequency chains. Therefore, finding the optimal transmitter architecture requires solving a non-convex mixed-integer problem in a large search space. In this paper, we consider the problem of maximizing the EE of fully digital precoder (FDP) and HBF transmitters. First, we propose an energy model for different beamforming structures. Then, based on the proposed energy model, we develop an unsupervised deep learning method to maximize the EE by designing the transmitter configuration for FDP and HBF. The proposed deep neural networks can provide different trade-offs between spectral efficiency and energy consumption while adapting to different numbers of active users. Finally, to ensure that the proposed method can be implemented in practice, we investigate the ability of the model to be trained exclusively using imperfect channel state information (CSI), both for the input to the deep learning model and for the calculation of the loss function. Simulation results show that the proposed solutions can outperform conventional methods in terms of EE while being trained with imperfect CSI. Furthermore, we show that the proposed solutions are less complex and more robust to noise than conventional methods.
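For readers unfamiliar with the metric, EE is commonly defined as the ratio of achievable sum rate to total consumed power. The expression below is a generic textbook form, not the energy model proposed in the paper; the symbols $K$ (number of active users), $R_k$ (rate of user $k$), $\mathrm{SINR}_k$, and $P_{\mathrm{tot}}$ (total transmitter power, including hardware consumption) are assumptions introduced here for illustration.

```latex
\mathrm{EE} = \frac{\sum_{k=1}^{K} R_k}{P_{\mathrm{tot}}},
\qquad
R_k = \log_2\!\left(1 + \mathrm{SINR}_k\right)
```

Under this form, switching off antennas or radio frequency chains lowers $P_{\mathrm{tot}}$ but may also lower the rates $R_k$, which is the trade-off between spectral efficiency and energy consumption that the abstract refers to.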

Competitive Algorithms and Reinforcement Learning for NOMA in IoT Networks

Jan 28, 2022

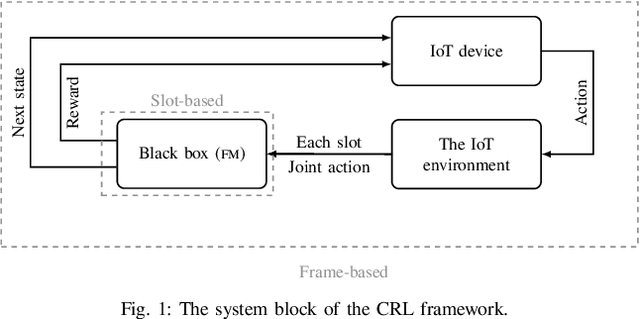

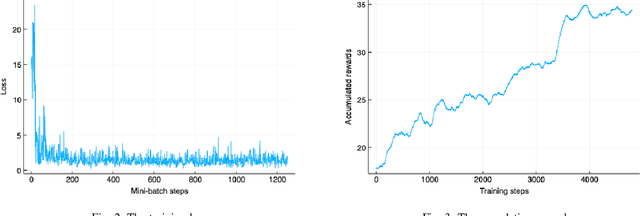

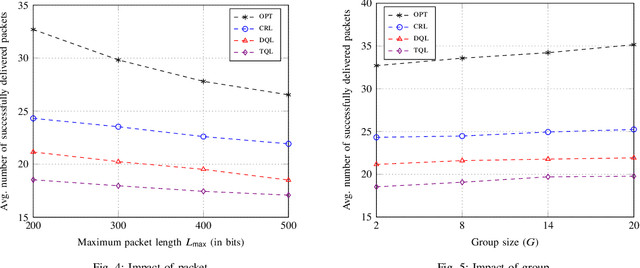

Abstract: This paper studies the problem of massive Internet of things (IoT) access in beyond fifth generation (B5G) networks using the non-orthogonal multiple access (NOMA) technique. The problem involves grouping massive numbers of IoT devices and allocating power so as to respect the low-latency requirements as well as the limited operating energy of the IoT devices. The considered objective function, maximizing the number of successfully received IoT packets, is different from the classical sum-rate-related objective functions. The problem is first divided into multiple NOMA grouping subproblems. Then, using competitive analysis, an efficient online competitive algorithm (CA) is proposed to solve each subproblem. Next, to solve the power allocation problem, we propose a new reinforcement learning (RL) framework in which an RL agent learns to use the CA as a black box and combines the obtained solutions to each subproblem to determine the power allocation for each NOMA group. Our simulation results reveal that the proposed RL framework outperforms deep-Q-learning methods and is close to optimal.

* arXiv admin note: text overlap with arXiv:2002.07957

Network Slicing with MEC and Deep Reinforcement Learning for the Internet of Vehicles

Jan 27, 2022

Abstract: The interconnection of vehicles in the future fifth generation (5G) wireless ecosystem forms the so-called Internet of vehicles (IoV). IoV offers new kinds of applications requiring delay-sensitive, compute-intensive and bandwidth-hungry services. Mobile edge computing (MEC) and network slicing (NS) are two of the key enabling technologies in 5G networks that can be used to optimize the allocation of the network resources and guarantee the diverse requirements of IoV applications. As traditional model-based optimization techniques generally end up with NP-hard, strongly non-convex and non-linear mathematical programming formulations, in this paper we introduce a model-free approach based on deep reinforcement learning (DRL) to solve the resource allocation problem in a MEC-enabled IoV network based on network slicing. Furthermore, the solution uses non-orthogonal multiple access (NOMA) to enable better exploitation of the scarce channel resources. The considered problem jointly addresses the channel and power allocation, the slice selection and the vehicle selection (vehicle grouping). We model the problem as a single-agent Markov decision process. Then, we solve it with DRL, using the well-known deep Q-learning (DQL) algorithm. We show that our approach is robust and effective under different network conditions compared to benchmark solutions.
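The abstract does not describe the state, action, or reward design, so the following is only a minimal sketch of the tabular Q-learning update that DQL approximates with a neural network. The function names `q_update` and `epsilon_greedy`, the dictionary-based Q-table, and the default values of `alpha` and `gamma` are assumptions made for illustration, not the paper's implementation.

```python
import random


def q_update(q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.9):
    """Apply one Q-learning update and return the new Q(state, action).

    q is a dict mapping (state, action) pairs to values; missing entries
    are treated as 0.0. alpha is the learning rate, gamma the discount.
    """
    # Value of the best action available in the next state.
    best_next = max(q.get((next_state, a), 0.0) for a in actions)
    old = q.get((state, action), 0.0)
    # Move the old estimate toward the bootstrapped target.
    q[(state, action)] = old + alpha * (reward + gamma * best_next - old)
    return q[(state, action)]


def epsilon_greedy(q, state, actions, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the greedy one."""
    if rng.random() < epsilon:
        return rng.choice(actions)
    return max(actions, key=lambda a: q.get((state, a), 0.0))
```

In a deep variant, the dictionary lookup would be replaced by a network that maps a state to one value per action, and the same target `reward + gamma * best_next` would serve as the regression label.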

Data-Aware Device Scheduling for Federated Edge Learning

Feb 18, 2021

Abstract: Federated Edge Learning (FEEL) involves the collaborative training of machine learning models among edge devices, with the orchestration of a server in a wireless edge network. Due to frequent model updates, FEEL needs to be adapted to the limited communication bandwidth, the scarce energy of edge devices, and the statistical heterogeneity of edge devices' data distributions. Therefore, careful scheduling of a subset of devices for training and uploading models is necessary. In contrast to previous work in FEEL, where the data aspects are under-explored, we place data properties at the heart of the proposed scheduling algorithm. To this end, we propose a new scheduling scheme for non-independent and identically distributed (non-IID) and unbalanced datasets in FEEL. As the data is the key component of the learning, we propose a new set of considerations for data characteristics in wireless scheduling algorithms in FEEL. In fact, the data collected by the devices depends on the local environment and usage pattern. Thus, the datasets vary in size and distribution among the devices. In the proposed algorithm, we consider both data and resource perspectives. In addition to minimizing the completion time of FEEL as well as the transmission energy of the participating devices, the algorithm prioritizes devices with rich and diverse datasets. We first define a general framework for data-aware scheduling and the main axes and requirements for diversity evaluation. Then, we discuss diversity aspects and some exploitable techniques and metrics. Next, we formulate the problem and present our FEEL scheduling algorithm. Evaluations in different scenarios show that our proposed FEEL scheduling algorithm can help achieve high accuracy in few rounds at a reduced cost.
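To make the idea of prioritizing devices with rich and diverse datasets concrete, here is a hypothetical sketch that ranks devices by a score combining label entropy (one possible diversity metric), dataset size, and an energy cost. The scoring formula, the `cost_weight` parameter, and the device dictionary fields (`name`, `labels`, `energy`) are assumptions for illustration only; the abstract does not specify the metrics or the algorithm used in the paper.

```python
import math
from collections import Counter


def label_entropy(labels):
    """Shannon entropy (in bits) of the label distribution of a dataset."""
    counts = Counter(labels)
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def schedule(devices, k, cost_weight=1.0):
    """Return the names of the k devices with the highest score.

    Each device is a dict with keys "name", "labels" (list of class labels)
    and "energy" (estimated transmission energy, arbitrary units).
    """
    def score(device):
        diversity = label_entropy(device["labels"])
        richness = math.log1p(len(device["labels"]))  # dampen raw size
        return diversity * richness - cost_weight * device["energy"]

    ranked = sorted(devices, key=score, reverse=True)
    return [d["name"] for d in ranked[:k]]
```

For example, with three devices of equal energy cost holding labels `[0, 0, 0, 0]`, `[0, 1, 2, 3]` and `[0, 1]`, calling `schedule(devices, 2)` selects the second and third, since the first has zero label entropy.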
