Aaron M. Dollar

ARC-Calib: Autonomous Markerless Camera-to-Robot Calibration via Exploratory Robot Motions

Mar 18, 2025

Abstract:Camera-to-robot (also known as eye-to-hand) calibration is a critical component of vision-based robot manipulation. Traditional marker-based methods often require human intervention for system setup. Furthermore, existing autonomous markerless calibration methods typically rely on pre-trained robot tracking models that impede their application on edge devices and require fine-tuning for novel robot embodiments. To address these limitations, this paper proposes a model-based markerless camera-to-robot calibration framework, ARC-Calib, that is fully autonomous and generalizable across diverse robots and scenarios without requiring extensive data collection or learning. First, exploratory robot motions are introduced to generate easily trackable trajectory-based visual patterns in the camera's image frames. Then, a geometric optimization framework is proposed to exploit the coplanarity and collinearity constraints from the observed motions to iteratively refine the estimated calibration result. Our approach eliminates the need for extra effort in either environmental marker setup or data collection and model training, rendering it highly adaptable across a wide range of real-world autonomous systems. Extensive experiments are conducted in both simulation and the real world to validate its robustness and generalizability.
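
The abstract above mentions coplanarity and collinearity constraints without giving their form. As a rough illustration only, the sketch below computes two generic geometric residuals that such constraints could be built on: the distance of a point from a line, and the distance of a point from a plane. The function names and the choice of 3D points as tuples are assumptions made for this example; this is not the ARC-Calib optimization itself.

```python
import math

# Small 3D vector helpers (hypothetical names, plain tuples for clarity).
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def norm(a):
    return math.sqrt(dot(a, a))

def collinearity_residual(p, a, b):
    """Distance of point p from the line through a and b (0 if collinear)."""
    direction = sub(b, a)
    return norm(cross(sub(p, a), direction)) / norm(direction)

def coplanarity_residual(p, a, b, c):
    """Distance of point p from the plane through a, b, c (0 if coplanar)."""
    normal = cross(sub(b, a), sub(c, a))
    return abs(dot(sub(p, a), normal)) / norm(normal)

if __name__ == "__main__":
    print(collinearity_residual((1, 1, 0), (0, 0, 0), (2, 0, 0)))
    print(coplanarity_residual((0.3, 0.4, 2.0), (0, 0, 0), (1, 0, 0), (0, 1, 0)))
```

In an iterative refinement, residuals of this kind would typically be summed over many observed points and minimized with respect to the calibration parameters; how the paper actually does this is not stated in the abstract.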

Improving the accuracy of automated labeling of specimen images datasets via a confidence-based process

Nov 15, 2024

Abstract:The digitization of natural history collections over the past three decades has unlocked a treasure trove of specimen imagery and metadata. There is great interest in making this data more useful by further labeling it with additional trait data, and modern deep learning machine learning techniques utilizing convolutional neural nets (CNNs) and similar networks show particular promise to reduce the amount of required manual labeling by human experts, making the process much faster and less expensive. However, in most cases, the accuracy of these approaches is too low for reliable utilization of the automatic labeling, typically in the range of 80-85% accuracy. In this paper, we present and validate an approach that can greatly improve this accuracy, essentially by examining the confidence that the network has in the generated label as well as utilizing a user-defined threshold to reject labels that fall below a chosen level. We demonstrate that a naive model that produced 86% initial accuracy can achieve improved performance - over 95% accuracy (rejecting about 40% of the labels) or over 99% accuracy (rejecting about 65%) by selecting higher confidence thresholds. This gives flexibility to adapt existing models to the statistical requirements of various types of research and has the potential to move these automatic labeling approaches from being unusably inaccurate to being an invaluable new tool. After validating the approach in a number of ways, we annotate the reproductive state of a large dataset of over 600,000 herbarium specimens. The analysis of the results points at under-investigated correlations as well as general alignment with known trends. By sharing this new dataset alongside this work, we want to allow ecologists to gather insights for their own research questions, at their chosen point of accuracy/coverage trade-off.
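
The accuracy/coverage trade-off described above can be sketched in a few lines. The example below is a minimal illustration, assuming each prediction is available as a `(predicted_label, confidence, true_label)` tuple; the function name, the tuple layout, and the toy data are hypothetical and are not taken from the paper.

```python
from typing import List, Optional, Tuple

# (predicted_label, confidence, true_label); layout is an assumption.
Prediction = Tuple[str, float, str]

def accuracy_and_rejection(
    predictions: List[Prediction], threshold: float
) -> Tuple[Optional[float], float]:
    """Keep labels with confidence >= threshold; reject the rest.

    Returns (accuracy on kept labels, fraction of labels rejected).
    Accuracy is None when every label is rejected.
    """
    if not predictions:
        raise ValueError("predictions must not be empty")
    kept = [p for p in predictions if p[1] >= threshold]
    rejected_fraction = 1.0 - len(kept) / len(predictions)
    if not kept:
        return None, rejected_fraction
    correct = sum(1 for label, _, truth in kept if label == truth)
    return correct / len(kept), rejected_fraction

if __name__ == "__main__":
    # Toy data for illustration only.
    toy = [
        ("flowering", 0.90, "flowering"),
        ("fruiting", 0.60, "flowering"),
        ("flowering", 0.80, "flowering"),
        ("fruiting", 0.55, "fruiting"),
    ]
    for t in (0.0, 0.7, 0.95):
        print(t, accuracy_and_rejection(toy, t))
```

Sweeping the threshold over a validation set in this way gives one accuracy/rejection pair per threshold, from which a user can pick the operating point that suits their analysis.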

Interactive Robot-Environment Self-Calibration via Compliant Exploratory Actions

Mar 19, 2024

Abstract:Calibrating robots into their workspaces is crucial for manipulation tasks. Existing calibration techniques often rely on sensors external to the robot (cameras, laser scanners, etc.) or specialized tools. This reliance complicates the calibration process and increases the costs and time requirements. Furthermore, the associated setup and measurement procedures require significant human intervention, which makes them more challenging to operate. Using the built-in force-torque sensors, which are nowadays a default component in collaborative robots, this work proposes a self-calibration framework where robot-environmental spatial relations are automatically estimated through compliant exploratory actions by the robot itself. The self-calibration approach converges, verifies its own accuracy, and terminates upon completion, autonomously purely through interactive exploration of the environment's geometries. Extensive experiments validate the effectiveness of our self-calibration approach in accurately establishing the robot-environment spatial relationships without the need for additional sensing equipment or any human intervention.

RB5 Low-Cost Explorer: Implementing Autonomous Long-Term Exploration on Low-Cost Robotic Hardware

Feb 14, 2024

Abstract:This systems paper presents the implementation and design of RB5, a wheeled robot for autonomous long-term exploration with fewer and cheaper sensors. Requiring just an RGB-D camera and low-power computing hardware, the system consists of an experimental platform with rocker-bogie suspension. It operates in unknown and GPS-denied environments and on indoor and outdoor terrains. The exploration consists of a methodology that extends frontier- and sampling-based exploration with a path-following vector field and a state-of-the-art SLAM algorithm. The methodology allows the robot to explore its surroundings at lower update frequencies, enabling the use of lower-performing and lower-cost hardware while still retaining good autonomous performance. The approach further consists of a methodology to interact with a remotely located human operator based on an inexpensive long-range and low-power communication technology from the internet-of-things domain (i.e., LoRa) and a customized communication protocol. The results and the feasibility analysis show the possible applications and limitations of the approach.
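
For readers unfamiliar with the frontier-based exploration that the abstract above builds on, the following sketch shows plain frontier detection on a small occupancy grid: a frontier cell is a known-free cell with at least one unknown 4-neighbour. The cell encoding (`0` free, `1` occupied, `-1` unknown) and the function name are assumptions for this example, and the sketch does not include the sampling, vector-field, or SLAM components of RB5.

```python
from typing import List, Tuple

FREE, OCCUPIED, UNKNOWN = 0, 1, -1  # assumed cell encoding

def find_frontiers(grid: List[List[int]]) -> List[Tuple[int, int]]:
    """Return (row, col) of free cells that touch an unknown cell."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            # Check the four axis-aligned neighbours inside the grid.
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break
    return frontiers

if __name__ == "__main__":
    grid = [
        [0, 0, -1],
        [0, 1, -1],
        [0, 0, 0],
    ]
    print(find_frontiers(grid))
```

An exploration loop would typically pick one of the returned cells as the next goal, move there, update the map, and repeat until no frontiers remain.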

Energy-Aware Ergodic Search: Continuous Exploration for Multi-Agent Systems with Battery Constraints

Oct 14, 2023

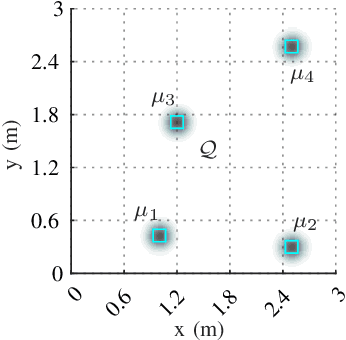

Abstract:Autonomous exploration without interruption is important in scenarios such as search and rescue and precision agriculture, where consistent presence is needed to detect events over large areas. Ergodic search already derives continuous coverage trajectories in these scenarios so that a robot spends more time in areas with high information density. However, existing literature on ergodic search does not consider the robot's energy constraints, limiting how long a robot can explore. In fact, if the robots are battery-powered, it is physically not possible to continuously explore on a single battery charge. Our paper tackles this challenge by integrating ergodic search methods with energy-aware coverage. We trade off battery usage and coverage quality, maintaining uninterrupted exploration of a given space by at least one agent. Our approach derives an abstract battery model for future state-of-charge estimation and extends canonical ergodic search to ergodic search under battery constraints. Empirical data from simulations and real-world experiments demonstrate the effectiveness of our energy-aware ergodic search, which ensures continuous and uninterrupted exploration and guarantees spatial coverage.

Non-Parametric Self-Identification and Model Predictive Control of Dexterous In-Hand Manipulation

Jul 14, 2023

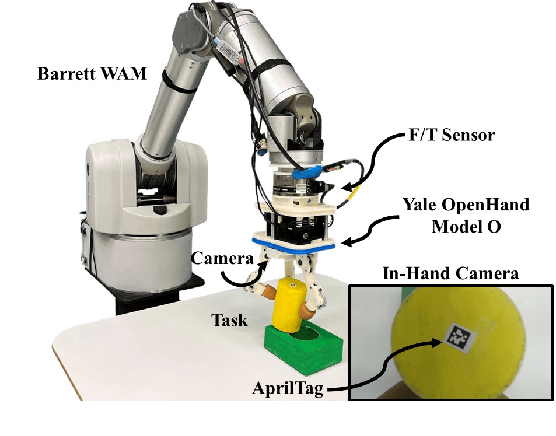

Abstract:Building hand-object models for dexterous in-hand manipulation remains a crucial and open problem. Major challenges include the difficulty of obtaining the geometric and dynamical models of the hand, object, and time-varying contacts, as well as the inevitable physical and perception uncertainties. Instead of building accurate models to map between the actuation inputs and the object motions, this work proposes to enable the hand-object systems to continuously approximate their local models via a self-identification process where an underlying manipulation model is estimated through a small number of exploratory actions and non-parametric learning. With a very small number of data points, as opposed to most data-driven methods, our system self-identifies the underlying manipulation models online through exploratory actions and non-parametric learning. By integrating the self-identified hand-object model into a model predictive control framework, the proposed system closes the control loop to provide high accuracy in-hand manipulation. Furthermore, the proposed self-identification is able to adaptively trigger online updates through additional exploratory actions, as soon as the self-identified local models render large discrepancies against the observed manipulation outcomes. We implemented the proposed approach on a sensorless underactuated Yale Model O hand with a single external camera to observe the object's motion. With extensive experiments, we show that the proposed self-identification approach can enable accurate and robust dexterous manipulation without requiring an accurate system model nor a large amount of data for offline training.

Towards Generalized Robot Assembly through Compliance-Enabled Contact Formations

Mar 09, 2023

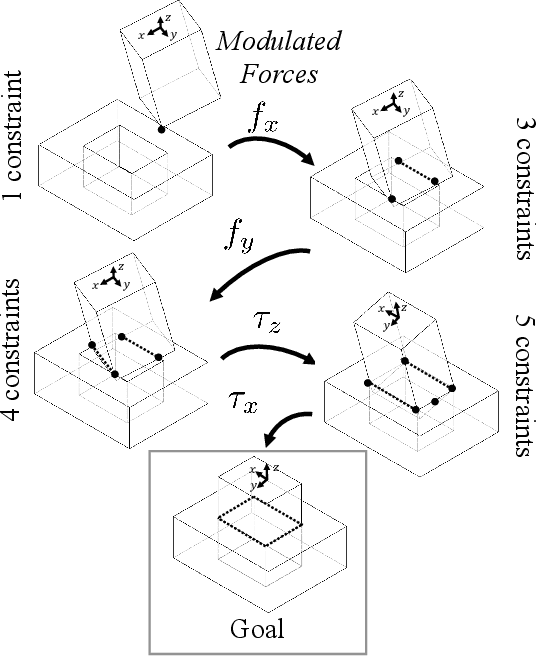

Abstract:Contact can be conceptualized as a set of constraints imposed on two bodies that are interacting with one another in some way. The nature of a contact, whether a point, line, or surface, dictates how these bodies are able to move with respect to one another given a force, and a set of contacts can provide either partial or full constraint on a body's motion. Decades of work have explored how to explicitly estimate the location of a contact and its dynamics, e.g., frictional properties, but investigated methods have been computationally expensive and there often exists significant uncertainty in the final calculation. This has affected further advancements in contact-rich tasks that are seemingly simple to humans, such as generalized peg-in-hole insertions. In this work, instead of explicitly estimating the individual contact dynamics between an object and its hole, we approach this problem by investigating compliance-enabled contact formations. More formally, contact formations are defined according to the constraints imposed on an object's available degrees-of-freedom. Rather than estimating individual contact positions, we abstract out this calculation to an implicit representation, allowing the robot to either acquire, maintain, or release constraints on the object during the insertion process, by monitoring forces enacted on the end effector through time. Using a compliant robot, our method is desirable in that we are able to complete industry-relevant insertion tasks of tolerances <0.25mm without prior knowledge of the exact hole location or its orientation. We showcase our method on more generalized insertion tasks, such as commercially available non-cylindrical objects and open world plug tasks.

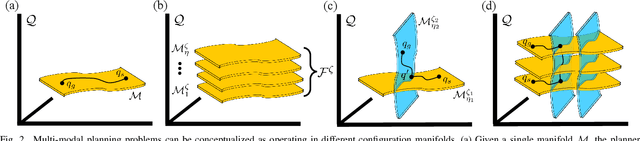

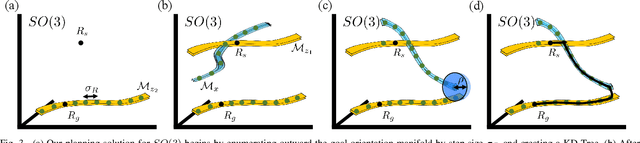

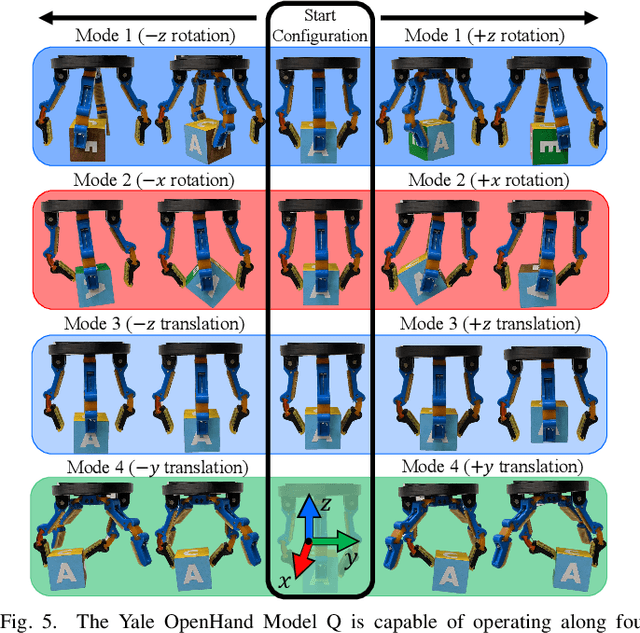

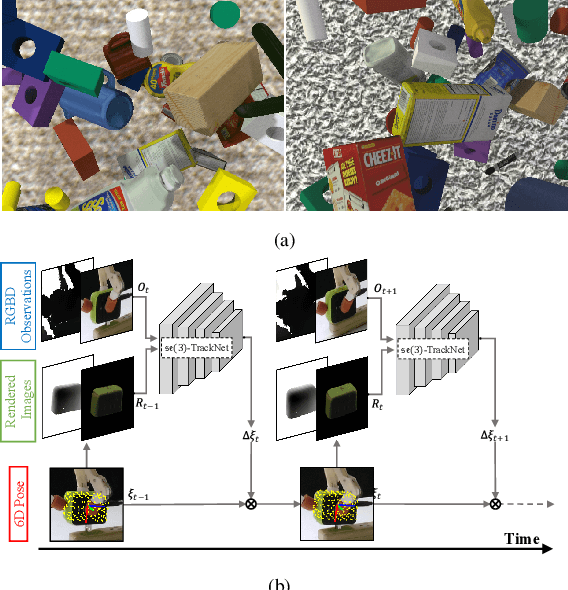

Complex In-Hand Manipulation via Compliance-Enabled Finger Gaiting and Multi-Modal Planning

Jan 20, 2022

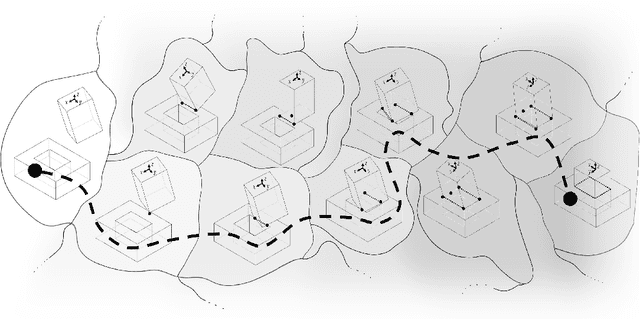

Abstract:Constraining contacts to remain fixed on an object during manipulation limits the potential workspace size, as motion is subject to the hand's kinematic topology. Finger gaiting is one way to alleviate such restraints. It allows contacts to be freely broken and remade so as to operate on different manipulation manifolds. This capability, however, has traditionally been difficult or impossible to practically realize. A finger gaiting system must simultaneously plan for and control forces on the object while maintaining stability during contact switching. This work alleviates the traditional requirement by taking advantage of system compliance, allowing the hand to more easily switch contacts while maintaining a stable grasp. Our method achieves complete SO(3) finger gaiting control of grasped objects against gravity by developing a manipulation planner that operates via orthogonal safe modes of a compliant, underactuated hand absent of tactile sensors or joint encoders. During manipulation, a low-latency 6D pose object tracker provides feedback via vision, allowing the planner to update its plan online so as to adaptively recover from trajectory deviations. The efficacy of this method is showcased by manipulating both convex and non-convex objects on a real robot. Its robustness is evaluated via perturbation rejection and long trajectory goals. To the best of the authors' knowledge, this is the first work that has autonomously achieved full SO(3) control of objects within-hand via finger gaiting and without a support surface, elucidating a valuable step towards realizing true robot in-hand manipulation capabilities.

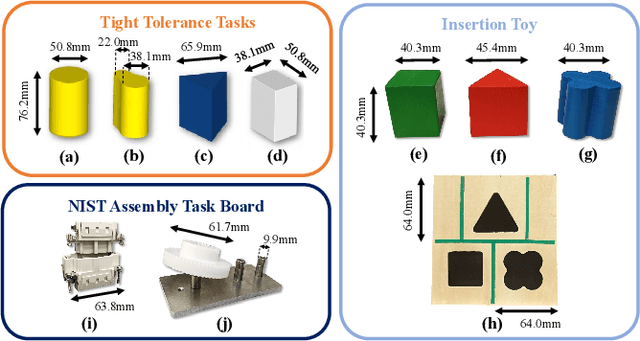

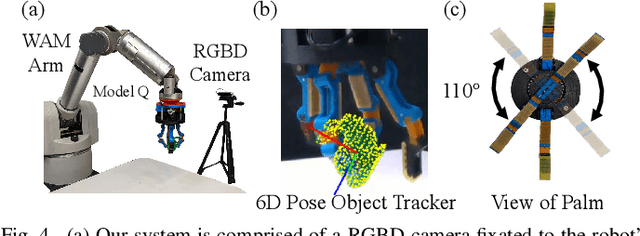

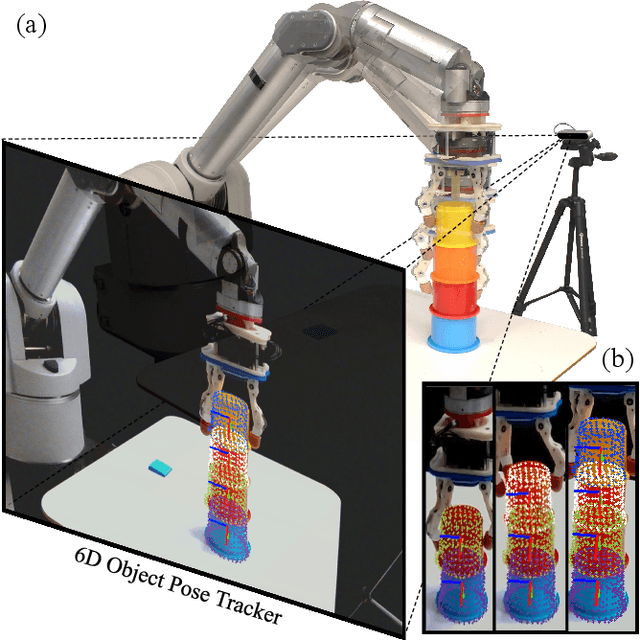

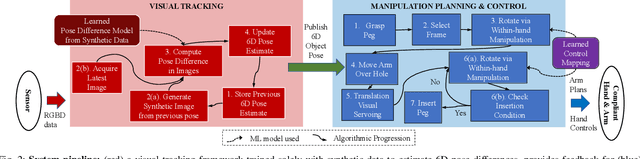

Vision-driven Compliant Manipulation for Reliable, High-Precision Assembly Tasks

Jun 26, 2021

Abstract:Highly constrained manipulation tasks continue to be challenging for autonomous robots as they require high levels of precision, typically less than 1mm, which is often incompatible with what can be achieved by traditional perception systems. This paper demonstrates that the combination of state-of-the-art object tracking with passively adaptive mechanical hardware can be leveraged to complete precision manipulation tasks with tight, industrially-relevant tolerances (0.25mm). The proposed control method closes the loop through vision by tracking the relative 6D pose of objects in the relevant workspace. It adjusts the control reference of both the compliant manipulator and the hand to complete object insertion tasks via within-hand manipulation. Contrary to previous efforts for insertion, our method does not require expensive force sensors, precision manipulators, or time-consuming, online learning, which is data hungry. Instead, this effort leverages mechanical compliance and utilizes an object agnostic manipulation model of the hand learned offline, off-the-shelf motion planning, and an RGBD-based object tracker trained solely with synthetic data. These features allow the proposed system to easily generalize and transfer to new tasks and environments. This paper describes in detail the system components and showcases its efficacy with extensive experiments involving tight tolerance peg-in-hole insertion tasks of various geometries as well as open-world constrained placement tasks.

Model Predictive Actor-Critic: Accelerating Robot Skill Acquisition with Deep Reinforcement Learning

Mar 25, 2021

Abstract:Substantial advancements to model-based reinforcement learning algorithms have been impeded by the model-bias induced by the collected data, which generally hurts performance. Meanwhile, their inherent sample efficiency warrants utility for most robot applications, limiting potential damage to the robot and its environment during training. Inspired by information theoretic model predictive control and advances in deep reinforcement learning, we introduce Model Predictive Actor-Critic (MoPAC), a hybrid model-based/model-free method that combines model predictive rollouts with policy optimization as to mitigate model bias. MoPAC leverages optimal trajectories to guide policy learning, but explores via its model-free method, allowing the algorithm to learn more expressive dynamics models. This combination guarantees optimal skill learning up to an approximation error and reduces necessary physical interaction with the environment, making it suitable for real-robot training. We provide extensive results showcasing how our proposed method generally outperforms current state-of-the-art and conclude by evaluating MoPAC for learning on a physical robotic hand performing valve rotation and finger gaiting--a task that requires grasping, manipulation, and then regrasping of an object.
